
If you are interested in examples of the FaceRecognitionDotNet face recognition engine, this article should help. It covers FaceRecognitionDotNet in detail. It also includes a face recognition engine for .NET (C#), opening the camera on Android for face recognition with the ArcSoft engine, and example source code for com.google.android.gms.location.ActivityRecognitionClient and com.google.android.gms.location.ActivityRecognitionResult.

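Contents:

- Examples of the FaceRecognitionDotNet face recognition engine
- A face recognition engine for .NET (C#)
- Opening the camera on Android for face recognition with the ArcSoft engine
- Example source code for com.google.android.gms.location.ActivityRecognitionClient
- Example source code for com.google.android.gms.location.ActivityRecognitionResult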

Examples of the FaceRecognitionDotNet face recognition engine

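In my previous post I mentioned FaceRecognitionDotNet, a port of face_recognition, which is a Python project. The response was mixed.

The good news is that many people took notice and downloaded it: the project went from a dozen or so stars to nearly two hundred. The bad news is that many people could not work out how to use it, and joined my group to ask how, or where the missing DLLs were.

The author has already explained all of this clearly in the project description. The missing DLLs can be downloaded from NuGet, and the missing model files can be downloaded from the original project it was ported from. The questions vary widely in quality. Please at least try to understand the underlying problem first, and read the introduction and documentation carefully; they are not long. Some of you are a little too impatient.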

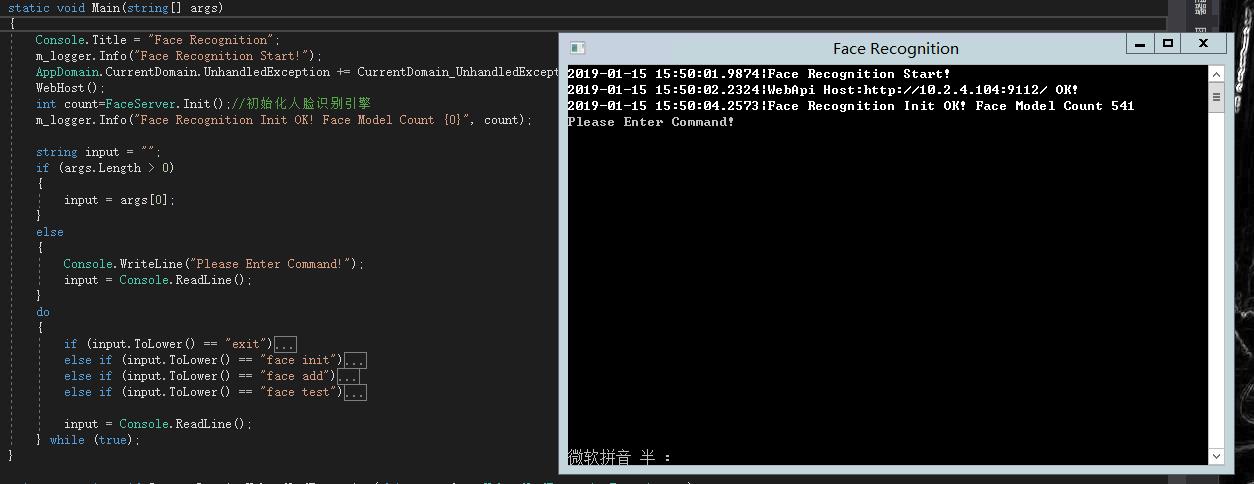

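I went through the whole project, focusing mainly on the face recognition part. The original code did not handle Chinese well, so I made some changes and added a few functions; the overall structure is shown in the figure. I turned it into an OWIN self-hosted program that exposes the face recognition interface to external callers as a Web API. It also provides a command line for some operations: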

1. `exit`: as the name suggests, exits the program.

2. `face init`: initializes the model data.

3. `face add`: adds new model data.

4. `face test`: runs a test.

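NLog is used for logging; everything else is a project dependency. I expect many people are eager to get the source code, but rushing is not a good attitude. Take the time to read other people's projects carefully, or you will not get far.

My respect goes to the developer who did the port: that is the real backbone here. If you use this source code, please contribute code or suggestions to that developer so that a project this good can keep going.

Source code: the package is large, so I uploaded it to Baidu Cloud with everything in one place. It was created with VS2017 Community, so beginners who cannot set up a project need not ask.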

Link: https://pan.baidu.com/s/1mI5vLNOgE6amEcYiiGOzpg

Extraction code: 54th

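Finally, the results. There are quite a few pitfalls, such as memory growth, which requires releasing some resources manually; I added simple handling for this in the wrapper functions.

Overall performance is good. Processing 2,000 photos took 700 seconds (about 0.35 s per photo), tested on a virtual machine that I also used for development. The results are on par with a colleague's calls to the Baidu AI API (free tier), which makes the engine very useful for projects that must work offline.

If you can do some porting, it can also run on Linux. The author has tested it on both Mac and Linux and also supports .NET Core. I truly admire the author.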

A face recognition engine for .NET (C#)

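Recently I looked on GitHub for a face recognition engine to run locally instead of relying on public APIs (such as Tencent AI or Baidu AI), because some scenarios have no network access. My first search (keyword: face recognition) turned up the best-known project, face_recognition, with nearly 20,000 stars. It is a top-tier project, but it is written in Python and calls the Dlib library. I thought about downloading it to try, but my Python is mediocre and my wrapping skills are poor.

After more searching, and skipping over Emgu CV, I found a C# wrapper of face_recognition: FaceRecognitionDotNet. I was delighted, tried it, and it works well; it is the work of a true master of wrappers, and I recommend it. It uses the same principles as face_recognition, implements the same functions, and also calls Dlib, with excellent results. The author's skill is plain to see (to my surprise, the author appears to be Japanese), and it is something for all of us to aspire to.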

Figure 1: face_recognition

Figure 2: FaceRecognition.NET
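FaceRecognitionDotNet works the same way as face_recognition. Each face is turned into a 128-dimensional encoding, and face_recognition's `compare_faces` treats two faces as a match when the Euclidean distance between their encodings is at or below a tolerance (0.6 by default). The Java sketch below illustrates only that comparison step on plain `double[]` arrays.

`FaceMatch`, `faceDistance`, and `compareFaces` are hypothetical names, not the FaceRecognitionDotNet API. The toy 2-element vectors stand in for real 128-element encodings.

```java
import java.util.ArrayList;
import java.util.List;

public class FaceMatch {
    // Default tolerance used by face_recognition's compare_faces.
    static final double DEFAULT_TOLERANCE = 0.6;

    // Euclidean distance between two face encodings of equal length.
    static double faceDistance(double[] a, double[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("encodings must have the same length");
        }
        double sum = 0;
        for (int i = 0; i < a.length; i++) {
            double d = a[i] - b[i];
            sum += d * d;
        }
        return Math.sqrt(sum);
    }

    // For each known encoding: true if it lies within the tolerance of the candidate.
    static List<Boolean> compareFaces(List<double[]> known, double[] candidate, double tolerance) {
        List<Boolean> result = new ArrayList<>();
        for (double[] k : known) {
            result.add(faceDistance(k, candidate) <= tolerance);
        }
        return result;
    }

    public static void main(String[] args) {
        List<double[]> known = List.of(new double[] {0.0, 0.0}, new double[] {3.0, 4.0});
        double[] candidate = {0.3, 0.4};
        // Distances are 0.5 and 4.5, so only the first known face matches.
        System.out.println(compareFaces(known, candidate, DEFAULT_TOLERANCE)); // [true, false]
    }
}
```

A lower tolerance makes matching stricter (fewer false matches, more misses); the default of 0.6 is only a starting point to tune on your own data.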

I hope this helps with your projects. Happy New Year, and stay healthy!

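One more note: to use this engine you need to download the library from NuGet: https://www.nuget.org/packages/FaceRecognitionDotNet. If you do not want to download it, you can compile DlibDotNet, the wrapper around the C++ library, yourself.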

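Also, paths containing Chinese characters cause encoding errors, so the source code needs a small change. I described where to change it and submitted it to the author here: https://github.com/takuya-takeuchi/FaceRecognitionDotNet/issues/21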

A chance meeting deserves an extraordinary ending. QQ discussion group: 110826636

Original article: https://www.cnblogs.com/RainbowInTheSky/p/10247921.html

.NET community news and in-depth articles: see the WeChat public account's article index at http://www.csharpkit.com

This article was shared from the WeChat public account dotNET跨平台 (opendotnet).


Opening the camera on Android for face recognition with the ArcSoft face recognition engine

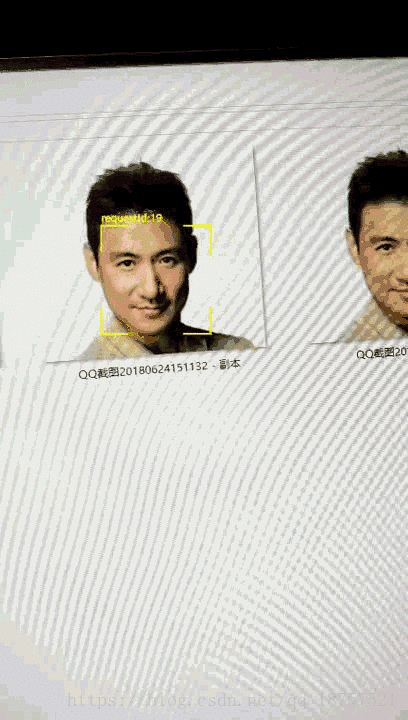

Here is a screenshot of the result. The image quality is poor, but it is good enough to see.

Features:

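Face recognition uses ArcSoft's FreeSDK, which provides face tracking, face detection, face recognition, and age and gender detection. This demo uses only FT and FR (face tracking and face recognition). Opening the camera, face tracking, and recognition are encapsulated in FaceCameraHelper.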

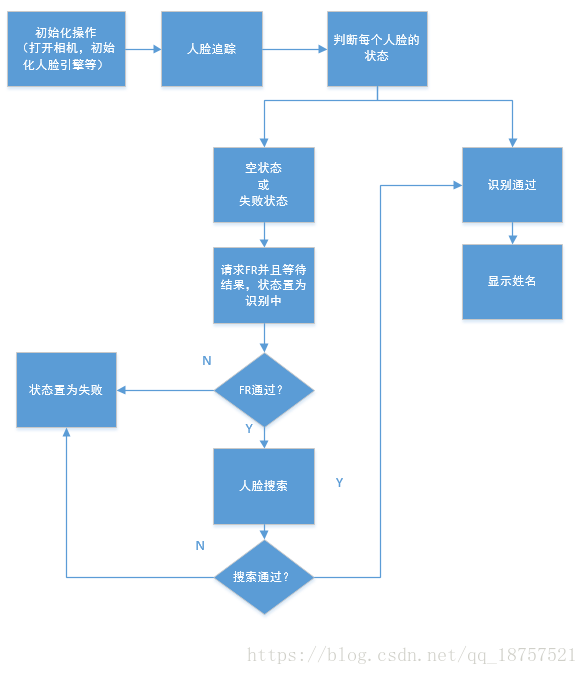

Implementation logic:

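The camera is opened, and face tracking runs on the data from the preview callback. Each detected face is assigned a trackID. Face boxes whose position changes little between consecutive frames are treated as the same face; see the code for the exact logic.

For face search, each trackID is also assigned a status (recognizing, recognition failed, or recognition passed) and a name. If recognition passes, the name is shown on the face box; otherwise only the trackID is shown. This demo has no server and only simulates recognition. The process is illustrated in the accompanying flowchart.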
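The per-face bookkeeping described above can be sketched in plain Java: each trackID gets a status and a name, and the name is shown only after recognition passes.

This is an illustration under assumptions. `TrackStateDemo`, `RecognizeStatus`, and the method names are hypothetical and not taken from FaceTrackDemo. Recognition results are passed in directly, much as the demo only simulates the server.

```java
import java.util.HashMap;
import java.util.Map;

public class TrackStateDemo {
    // The three states described in the text.
    enum RecognizeStatus { RECOGNIZING, FAILED, SUCCEEDED }

    private final Map<Integer, RecognizeStatus> statusMap = new HashMap<>();
    private final Map<Integer, String> nameMap = new HashMap<>();

    // A newly seen trackID starts in the RECOGNIZING state.
    void onNewTrack(int trackId) {
        statusMap.putIfAbsent(trackId, RecognizeStatus.RECOGNIZING);
    }

    // Record the outcome of a (simulated) recognition request; null means no match.
    void onRecognized(int trackId, String nameOrNull) {
        if (nameOrNull != null) {
            statusMap.put(trackId, RecognizeStatus.SUCCEEDED);
            nameMap.put(trackId, nameOrNull);
        } else {
            statusMap.put(trackId, RecognizeStatus.FAILED);
        }
    }

    // Label drawn on the face box: the name if recognized, otherwise the trackID.
    String labelFor(int trackId) {
        if (statusMap.get(trackId) == RecognizeStatus.SUCCEEDED) {
            return nameMap.get(trackId);
        }
        return String.valueOf(trackId);
    }

    public static void main(String[] args) {
        TrackStateDemo demo = new TrackStateDemo();
        demo.onNewTrack(1);
        demo.onNewTrack(2);
        demo.onRecognized(1, "Alice");
        demo.onRecognized(2, null);
        System.out.println(demo.labelFor(1) + " " + demo.labelFor(2)); // Alice 2
    }
}
```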

Interface provided by FaceCameraHelper:

```java
public interface FaceTrackListener {
    /**
     * Passes back the camera preview data and the face box positions.
     *
     * @param nv21        camera preview data
     * @param ftFaceList  list of faces to process
     * @param trackIdList list of face tracking IDs
     */
    void onPreviewData(byte[] nv21, List<AFT_FSDKFace> ftFaceList, List<Integer> trackIdList);

    /**
     * Called when an exception occurs.
     *
     * @param e exception information
     */
    void onFail(Exception e);

    /**
     * Called when the camera has been opened.
     *
     * @param camera camera instance
     */
    void onCameraOpened(Camera camera);

    /**
     * Lets you remove some faces as needed, e.g. keep only a given region or only the largest face.
     *
     * @param ftFaceList  face list
     * @param trackIdList list of face tracking IDs
     */
    void adjustFaceRectList(List<AFT_FSDKFace> ftFaceList, List<Integer> trackIdList);

    /**
     * Callback after a face feature request completes.
     *
     * @param frFace    face feature data, or null on failure
     * @param requestId request code
     */
    void onFaceFeatureInfoGet(@Nullable AFR_FSDKFace frFace, Integer requestId);
}
```

Drawing the FT face boxes and passing the data back:

```java
@Override
public void onPreviewFrame(byte[] nv21, Camera camera) {
    if (faceTrackListener != null) {
        ftFaceList.clear();
        // Run face tracking (FT) on the NV21 preview frame.
        int ftCode = ftEngine.AFT_FSDK_FaceFeatureDetect(nv21, previewSize.width, previewSize.height, AFT_FSDKEngine.CP_PAF_NV21, ftFaceList).getCode();
        if (ftCode != 0) {
            faceTrackListener.onFail(new Exception("ft failed,code is " + ftCode));
        }
        // Assign trackIDs, then let the listener remove faces it does not want.
        refreshTrackId(ftFaceList);
        faceTrackListener.adjustFaceRectList(ftFaceList, currentTrackIdList);
        if (surfaceViewRect != null) {
            Canvas canvas = surfaceViewRect.getHolder().lockCanvas();
            if (canvas == null) {
                faceTrackListener.onFail(new Exception("can not get canvas of surfaceViewRect"));
                return;
            }
            // Clear the previous frame's boxes, then draw the current ones.
            canvas.drawColor(0, PorterDuff.Mode.CLEAR);
            if (ftFaceList.size() > 0) {
                for (int i = 0; i < ftFaceList.size(); i++) {
                    Rect adjustedRect = TrackUtil.adjustRect(new Rect(ftFaceList.get(i).getRect()), previewSize.width, previewSize.height, surfaceWidth, surfaceHeight, cameraOrientation, mCameraId);
                    TrackUtil.drawFaceRect(canvas, adjustedRect, faceRectColor, faceRectThickness, currentTrackIdList.get(i), nameMap.get(currentTrackIdList.get(i)));
                }
            }
            surfaceViewRect.getHolder().unlockCanvasAndPost(canvas);
        }
        faceTrackListener.onPreviewData(nv21, ftFaceList, currentTrackIdList);
    }
}
```

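On most devices the camera preview image has an orientation of 0 degrees in landscape. Other orientations increase in 90-degree steps counterclockwise, so the face boxes must be transformed the same way when drawn.

When CameraID is 0 (the rear camera), the preview shows the original image. When CameraID is 1 (the front camera), the preview is mirrored. The adaptation code: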

```java
/**
 * @param rect          FT face box
 * @param previewWidth  camera preview width
 * @param previewHeight camera preview height
 * @param canvasWidth   canvas width
 * @param canvasHeight  canvas height
 * @param cameraOri     camera preview orientation
 * @param mCameraId     camera ID
 * @return the face box mapped to canvas coordinates, or null if rect is null
 */
static Rect adjustRect(Rect rect, int previewWidth, int previewHeight, int canvasWidth, int canvasHeight, int cameraOri, int mCameraId) {
    if (rect == null) {
        return null;
    }
    // For a portrait canvas, swap the preview width and height.
    if (canvasWidth < canvasHeight) {
        int t = previewHeight;
        previewHeight = previewWidth;
        previewWidth = t;
    }
    float horizontalRatio;
    float verticalRatio;
    if (cameraOri == 0 || cameraOri == 180) {
        horizontalRatio = (float) canvasWidth / (float) previewWidth;
        verticalRatio = (float) canvasHeight / (float) previewHeight;
    } else {
        horizontalRatio = (float) canvasHeight / (float) previewHeight;
        verticalRatio = (float) canvasWidth / (float) previewWidth;
    }
    // Scale the box from preview coordinates to canvas coordinates.
    rect.left *= horizontalRatio;
    rect.right *= horizontalRatio;
    rect.top *= verticalRatio;
    rect.bottom *= verticalRatio;
    Rect newRect = new Rect();
    switch (cameraOri) {
        case 0:
            // The front camera preview is mirrored horizontally.
            if (mCameraId == Camera.CameraInfo.CAMERA_FACING_FRONT) {
                newRect.left = canvasWidth - rect.right;
                newRect.right = canvasWidth - rect.left;
            } else {
                newRect.left = rect.left;
                newRect.right = rect.right;
            }
            newRect.top = rect.top;
            newRect.bottom = rect.bottom;
            break;
        case 90:
            newRect.right = canvasWidth - rect.top;
            newRect.left = canvasWidth - rect.bottom;
            if (mCameraId == Camera.CameraInfo.CAMERA_FACING_FRONT) {
                newRect.top = canvasHeight - rect.right;
                newRect.bottom = canvasHeight - rect.left;
            } else {
                newRect.top = rect.left;
                newRect.bottom = rect.right;
            }
            break;
        case 180:
            newRect.top = canvasHeight - rect.bottom;
            newRect.bottom = canvasHeight - rect.top;
            if (mCameraId == Camera.CameraInfo.CAMERA_FACING_FRONT) {
                newRect.left = rect.left;
                newRect.right = rect.right;
            } else {
                newRect.left = canvasWidth - rect.right;
                newRect.right = canvasWidth - rect.left;
            }
            break;
        case 270:
            newRect.left = rect.top;
            newRect.right = rect.bottom;
            if (mCameraId == Camera.CameraInfo.CAMERA_FACING_FRONT) {
                newRect.top = rect.left;
                newRect.bottom = rect.right;
            } else {
                newRect.top = canvasHeight - rect.right;
                newRect.bottom = canvasHeight - rect.left;
            }
            break;
        default:
            break;
    }
    return newRect;
}
```
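As a concrete check of the scaling and mirroring in the `cameraOri == 0` branch, the following pure-Java sketch (no Android `Rect`) applies the same arithmetic to a horizontal span. `MirrorDemo` and `adjustHorizontal` are hypothetical names. The 640-pixel preview and 1280-pixel canvas are example values.

```java
public class MirrorDemo {
    // Scale a horizontal span [left, right] from preview width to canvas width,
    // then mirror it for the front camera, as in the cameraOri == 0 case.
    static int[] adjustHorizontal(int left, int right, int previewWidth, int canvasWidth, boolean frontCamera) {
        float ratio = (float) canvasWidth / previewWidth;
        int scaledLeft = (int) (left * ratio);   // truncates like rect.left *= ratio
        int scaledRight = (int) (right * ratio);
        if (frontCamera) {
            // Mirroring swaps the edges: the new left comes from the old right.
            return new int[] {canvasWidth - scaledRight, canvasWidth - scaledLeft};
        }
        return new int[] {scaledLeft, scaledRight};
    }

    public static void main(String[] args) {
        // A 640-px-wide preview drawn on a 1280-px-wide canvas (ratio 2).
        int[] back = adjustHorizontal(100, 200, 640, 1280, false);
        int[] front = adjustHorizontal(100, 200, 640, 1280, true);
        System.out.println(back[0] + "," + back[1]);   // 200,400
        System.out.println(front[0] + "," + front[1]); // 880,1080
    }
}
```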

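Because the FR engine does not support calls from multiple threads, it can only run serially. For higher throughput, you can create several FREngine instances and distribute tasks among them.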
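One way to use several FR engine instances is a small object pool. Each worker borrows an engine exclusively, uses it, and returns it, so no instance is ever called from two threads at once.

The Java sketch below uses a hypothetical `FakeEngine` in place of the real FR engine class; `EnginePool` and `run` are also illustrative names. The pooling with `ArrayBlockingQueue.take()`/`put()` is the point, not the engine API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class EnginePool {
    // Hypothetical stand-in for a non-thread-safe engine instance.
    static class FakeEngine {
        final int id;
        FakeEngine(int id) { this.id = id; }
        String extract(String input) { return "engine" + id + ":" + input; }
    }

    private final BlockingQueue<FakeEngine> idle;

    EnginePool(int size) {
        idle = new ArrayBlockingQueue<>(size);
        for (int i = 0; i < size; i++) {
            idle.add(new FakeEngine(i));
        }
    }

    // Borrow an engine exclusively, use it, and always return it to the pool.
    String run(String input) throws InterruptedException {
        FakeEngine engine = idle.take(); // blocks until an engine is free
        try {
            return engine.extract(input);
        } finally {
            idle.put(engine);
        }
    }

    public static void main(String[] args) throws Exception {
        EnginePool pool = new EnginePool(2);
        ExecutorService executor = Executors.newFixedThreadPool(2);
        List<Future<String>> results = new ArrayList<>();
        for (int i = 0; i < 4; i++) {
            final String frame = "frame" + i;
            results.add(executor.submit(() -> pool.run(frame)));
        }
        for (Future<String> f : results) {
            System.out.println(f.get()); // which engine handled which frame may vary
        }
        executor.shutdown();
    }
}
```

Matching the thread count to the pool size keeps threads from waiting on `take()`. Each engine instance costs memory, so the pool size is a trade-off.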

FR task queue:

```java
private LinkedBlockingQueue<FaceRecognizeRunnable> faceRecognizeRunnables = new LinkedBlockingQueue<FaceRecognizeRunnable>(MAX_FRTHREAD_COUNT);
```

FR task:

```java
public class FaceRecognizeRunnable implements Runnable {
    private Rect faceRect;
    private int width;
    private int height;
    private int format;
    private int ori;
    private Integer requestId;
    private byte[] nv21Data;

    public FaceRecognizeRunnable(byte[] nv21Data, Rect faceRect, int width, int height, int format, int ori, Integer requestId) {
        if (nv21Data == null) {
            return;
        }
        // Keep a private copy of the frame data for the background task.
        this.nv21Data = new byte[nv21Data.length];
        System.arraycopy(nv21Data, 0, this.nv21Data, 0, nv21Data.length);
        this.faceRect = new Rect(faceRect);
        this.width = width;
        this.height = height;
        this.format = format;
        this.ori = ori;
        this.requestId = requestId;
    }

    @Override
    public void run() {
        if (faceTrackListener != null && nv21Data != null) {
            if (frEngine != null) {
                // Extract face features (FR) for this face box.
                AFR_FSDKFace frFace = new AFR_FSDKFace();
                int frCode = frEngine.AFR_FSDK_ExtractFRFeature(nv21Data, width, height, format, faceRect, ori, frFace).getCode();
                if (frCode == 0) {
                    faceTrackListener.onFaceFeatureInfoGet(frFace, requestId);
                } else {
                    faceTrackListener.onFaceFeatureInfoGet(null, requestId);
                    faceTrackListener.onFail(new Exception("fr failed errorCode is " + frCode));
                }
                nv21Data = null;
            } else {
                faceTrackListener.onFaceFeatureInfoGet(null, requestId);
                faceTrackListener.onFail(new Exception("fr failed ,frEngine is null"));
            }
            // Hand the next queued task to the executor; poll() returns null if the queue is empty.
            FaceRecognizeRunnable next = faceRecognizeRunnables.poll();
            if (next != null) {
                executor.execute(next);
            }
        }
    }
}
```
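The demo appears to chain tasks by hand: each `FaceRecognizeRunnable` polls the next one from a bounded queue when it finishes. A possible alternative, not the demo's approach, is to let the JDK handle this. A single-thread `ThreadPoolExecutor` backed by a bounded `LinkedBlockingQueue` and `DiscardPolicy` runs tasks one at a time and silently drops new ones while the backlog is full.

In this sketch `SerialFrQueue`, `newSerialExecutor`, and `runDemo` are hypothetical names. The tasks are placeholders for feature extraction.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

public class SerialFrQueue {
    // One worker thread (serial, like a single FR engine) with a bounded backlog.
    // Tasks submitted while the backlog is full are silently discarded.
    static ThreadPoolExecutor newSerialExecutor(int maxPending) {
        return new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.MILLISECONDS,
                new LinkedBlockingQueue<>(maxPending),
                new ThreadPoolExecutor.DiscardPolicy());
    }

    // Returns how many tasks actually ran.
    static int runDemo() throws InterruptedException {
        ThreadPoolExecutor executor = newSerialExecutor(2);
        CountDownLatch release = new CountDownLatch(1);
        AtomicInteger done = new AtomicInteger();
        // The first task occupies the only worker until released.
        executor.execute(() -> {
            try {
                release.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            done.incrementAndGet();
        });
        // Two of these fit in the backlog; the other three are discarded.
        for (int i = 0; i < 5; i++) {
            executor.execute(done::incrementAndGet);
        }
        release.countDown();
        executor.shutdown();
        executor.awaitTermination(5, TimeUnit.SECONDS);
        return done.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("completed tasks: " + runDemo()); // completed tasks: 3
    }
}
```

With a backlog of 2 and the worker held busy, the running task plus the 2 queued ones complete and the other 3 are dropped. For a live camera feed, dropping stale frames like this is usually preferable to letting work pile up.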

Deciding whether faces in consecutive frames are the same face (refreshing trackIDs):

```java
/**
 * Refreshes the trackIDs.
 *
 * @param ftFaceList incoming face list
 */
public void refreshTrackId(List<AFT_FSDKFace> ftFaceList) {
    currentTrackIdList.clear();
    // Pre-fill every entry with -1
    for (int i = 0; i < ftFaceList.size(); i++) {
        currentTrackIdList.add(-1);
    }
    // No faces in the previous frame but faces now: assign new trackIDs
    if (formerTrackIdList.size() == 0) {
        for (int i = 0; i < ftFaceList.size(); i++) {
            currentTrackIdList.set(i, ++currentTrackId);
        }
    } else {
        // Faces in both frames: for each current face box
        for (int i = 0; i < ftFaceList.size(); i++) {
            // scan the previous frame's face boxes
            for (int j = 0; j < formerFaceRectList.size(); j++) {
                // if it is the same face
                if (TrackUtil.isSameFace(SIMILARITY_RECT, formerFaceRectList.get(j), ftFaceList.get(i).getRect())) {
                    // reuse its ID
                    currentTrackIdList.set(i, formerTrackIdList.get(j));
                    break;
                }
            }
        }
    }
    // Faces not present in the previous frame get new IDs
    for (int i = 0; i < currentTrackIdList.size(); i++) {
        if (currentTrackIdList.get(i) == -1) {
            currentTrackIdList.set(i, ++currentTrackId);
        }
    }
    // Remember this frame's boxes and IDs for the next frame
    formerTrackIdList.clear();
    formerFaceRectList.clear();
    for (int i = 0; i < ftFaceList.size(); i++) {
        formerFaceRectList.add(new Rect(ftFaceList.get(i).getRect()));
        formerTrackIdList.add(currentTrackIdList.get(i));
    }
}
```
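`TrackUtil.isSameFace` is not shown in the article. A common way to decide whether two boxes from consecutive frames belong to the same face is to compare their overlap against a threshold. The sketch below uses intersection-over-union (IoU).

This is an assumption about what `SIMILARITY_RECT` and `isSameFace` might do, not the demo's actual implementation. `SameFaceCheck` and `Box` are hypothetical stand-ins for `TrackUtil` and `android.graphics.Rect`.

```java
public class SameFaceCheck {
    // Minimal axis-aligned box, standing in for android.graphics.Rect.
    static final class Box {
        final int left, top, right, bottom;

        Box(int left, int top, int right, int bottom) {
            this.left = left;
            this.top = top;
            this.right = right;
            this.bottom = bottom;
        }

        long area() {
            return (long) Math.max(0, right - left) * Math.max(0, bottom - top);
        }
    }

    // Intersection-over-union of two boxes, in [0, 1].
    static double iou(Box a, Box b) {
        int w = Math.min(a.right, b.right) - Math.max(a.left, b.left);
        int h = Math.min(a.bottom, b.bottom) - Math.max(a.top, b.top);
        if (w <= 0 || h <= 0) {
            return 0.0; // no overlap
        }
        long inter = (long) w * h;
        long union = a.area() + b.area() - inter;
        return union == 0 ? 0.0 : (double) inter / union;
    }

    // Treat two boxes from consecutive frames as the same face if they overlap enough.
    static boolean isSameFace(double threshold, Box former, Box current) {
        return iou(former, current) > threshold;
    }

    public static void main(String[] args) {
        Box prev = new Box(100, 100, 200, 200);
        Box moved = new Box(110, 105, 210, 205); // small shift between frames
        Box other = new Box(400, 100, 500, 200); // a different face
        System.out.println(isSameFace(0.5, prev, moved)); // true
        System.out.println(isSameFace(0.5, prev, other)); // false
    }
}
```

A higher threshold splits a fast-moving face into new trackIDs more often. A lower one risks merging two nearby faces.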

Project URL: https://github.com/wangshengyang1996/FaceTrackDemo

If anything here is wrong, please point it out.

Example source code for com.google.android.gms.location.ActivityRecognitionClient

```java
@Override
public void onCreate() {
    PreferenceManager.getDefaultSharedPreferences(this).registerOnSharedPreferenceChangeListener(this);
    mActivityRecognitionClient =
            new ActivityRecognitionClient(this, this, this);
    /*
     * Create the PendingIntent that Location Services uses
     * to send activity recognition updates back to this app.
     */
    Intent intent = new Intent(
            this, ActivityRecognitionIntentService.class);
    /*
     * Return a PendingIntent that starts the IntentService.
     */
    mActivityRecognitionPendingIntent =
            PendingIntent.getService(this, 0 /* requestCode */, intent, PendingIntent.FLAG_UPDATE_CURRENT);
}
```

```java
@SuppressWarnings("unchecked")
@Override
public void onCreate(Bundle savedInstanceState) {
    super.onCreate(savedInstanceState);
    setContentView(R.layout.main_activity);
    mContext = this;
    // Get the UI widgets.
    mRequestActivityUpdatesButton = (Button) findViewById(R.id.request_activity_updates_button);
    mRemoveActivityUpdatesButton = (Button) findViewById(R.id.remove_activity_updates_button);
    ListView detectedActivitiesListView = (ListView) findViewById(
            R.id.detected_activities_listview);
    // Enable either the Request Updates button or the Remove Updates button depending on
    // whether activity updates have been requested.
    setButtonsEnabledState();
    ArrayList<DetectedActivity> detectedActivities = Utils.detectedActivitiesFromJson(
            PreferenceManager.getDefaultSharedPreferences(this).getString(
                    Constants.KEY_DETECTED_ACTIVITIES, ""));
    // Bind the adapter to the ListView responsible for display data for detected activities.
    mAdapter = new DetectedActivitiesAdapter(this, detectedActivities);
    detectedActivitiesListView.setAdapter(mAdapter);
    mActivityRecognitionClient = new ActivityRecognitionClient(this);
}
```

```java
@Override
protected void onCreate(Bundle savedInstanceState) {
    super.onCreate(savedInstanceState);
    setContentView(R.layout.activity_main);
    btn = (Button) findViewById(R.id.button);
    btn.setOnClickListener(new View.OnClickListener() {
        @Override
        public void onClick(View v) {
            // Toggle activity updates on or off.
            if (!gettingupdates) {
                startActivityUpdates();
            } else {
                stopActivityUpdates();
            }
        }
    });
    mContext = this;
    logger = (TextView) findViewById(R.id.logger);
    // Check to be sure that TTS exists and is okay to use
    Intent checkIntent = new Intent();
    checkIntent.setAction(TextToSpeech.Engine.ACTION_CHECK_TTS_DATA);
    // The result will come back in onActivityResult with our REQ_TTS_STATUS_CHECK number
    startActivityForResult(checkIntent, REQ_TTS_STATUS_CHECK);
    // Set up the activity recognition client.
    mActivityRecognitionClient = new ActivityRecognitionClient(this);
}
```

```java
@Override
protected void onCreate(Bundle savedInstanceState) {
    super.onCreate(savedInstanceState);
    setContentView(R.layout.activity_main);
    Toolbar toolbar = (Toolbar) findViewById(R.id.toolbar);
    setSupportActionBar(toolbar);
    // setup fragments
    listfrag = new myListFragment();
    mapfrag = new myMapFragment();
    FragmentManager fragmentManager = getSupportFragmentManager();
    viewPager = (ViewPager) findViewById(R.id.pager);
    myFragmentPagerAdapter adapter = new myFragmentPagerAdapter(fragmentManager);
    viewPager.setAdapter(adapter);
    // viewPager.setCurrentItem(1);
    // new Tablayout from the support design library
    TabLayout mTabLayout = (TabLayout) findViewById(R.id.tablayout1);
    mTabLayout.setupWithViewPager(viewPager);
    // setup the client activity piece.
    mActivityRecognitionClient = new ActivityRecognitionClient(this);
    // setup the location pieces.
    mFusedLocationClient = LocationServices.getFusedLocationProviderClient(this);
    mSettingsClient = LocationServices.getSettingsClient(this);
    createLocationRequest();
    createLocationCallback();
    buildLocationSettingsRequest();
    initialsetup(); // get last location and call it current spot.
}
```

```java
public void startActivityUpdates(GoogleApiClient googleapiclient) {
    this.googleapiclient = googleapiclient;
    Intent intent = new Intent(context, DetectedActivitiesIntentService.class);
    pendingIntent = PendingIntent.getService(context, 0 /* requestCode */, intent, PendingIntent.FLAG_UPDATE_CURRENT);
    ActivityRecognitionClient activityRecognitionClient = ActivityRecognition.getClient(context);
    // Request updates roughly every 3000 ms.
    activityRecognitionClient.requestActivityUpdates(3000, pendingIntent);
    LocalBroadcastManager.getInstance(context).registerReceiver(broadcastReceiver, new IntentFilter(Constants.ACTIVITY_BROADCAST_ACTION));
}
```

```java
public ActivityRecognitionManager(Context context) {
    this.context = context;
    connecting = false;
    // Legacy client: this class serves as both the connection callback and the failure listener.
    recognitionClient = new ActivityRecognitionClient(context, this, this);
    Intent intent = new Intent(context, ActivityRecognitionIntentService.class);
    recognitionIntent = PendingIntent.getService(context, 0 /* requestCode */, intent, PendingIntent.FLAG_UPDATE_CURRENT);
}
```


```java
public ActivitySensor(AbstractMode parentMode, int delay, int confidenceCutoff) {
    super(parentMode);
    Log.i("AS", "activity sensor constructor");
    mContext = parentMode.getContext();
    mInProgress = false;
    this.delay = delay;
    this.confidenceCutoff = confidenceCutoff;
    if (servicesConnected()) {
        /*
         * Instantiate a new activity recognition client. Since the
         * parent Activity implements the connection listener and
         * connection failure listener, the constructor uses "this"
         * to specify the values of those parameters.
         */
        mActivityRecognitionClient = new ActivityRecognitionClient(mContext, this, this);
        // Register the receiver of the intents sent from the IntentService.
        LocalBroadcastManager.getInstance(mContext).registerReceiver(mMessageReceiver, new IntentFilter("ACTIVITY_RECOGNITION_DATA"));
        startUpdates();
        /*
         * Create the PendingIntent that Location Services uses
         * to send activity recognition updates back to this app.
         */
        Intent intent = new Intent(mContext, ActivitySensorIntentService.class);
        /*
         * Return a PendingIntent that starts the IntentService.
         */
        mActivityRecognitionPendingIntent = PendingIntent.getService(mContext, 0 /* requestCode */, intent, PendingIntent.FLAG_UPDATE_CURRENT);
    }
}
```

```java
@Override
public void onInit() {
    checkNewState(Input.State.INITED);
    int resultCode = GooglePlayServicesUtil.isGooglePlayServicesAvailable(getContext());
    if (ConnectionResult.SUCCESS == resultCode) {
        _isLibraryAvailable = true;
        _activityRecognitionClient = new ActivityRecognitionClient(getContext(), this, this);
        _intervall = getContext().getSharedPreferences(MoSTApplication.PREF_INPUT, Context.MODE_PRIVATE).getLong(PREF_KEY_GOOGLE_ACTIVITY_RECOGNITION_PERIOD, DEFAULT_GOOGLE_ACTIVITY_RECOGNITION_PERIOD);
        _intent = new Intent();
        _intent.setAction(GOOGLE_ACTIVITY_RECOGNITION_ACTION);
        _filter = new IntentFilter();
        _filter.addAction(GOOGLE_ACTIVITY_RECOGNITION_ACTION);
        _receiver = new GoogleActivityRecognitionBroadcastReceiver();
        _pendingIntent = PendingIntent.getBroadcast(getContext(), 0 /* requestCode */, _intent, PendingIntent.FLAG_UPDATE_CURRENT);
    } else {
        Log.e(TAG, "Google Play Service Library not available.");
    }
    super.onInit();
}
```


```java
/**
 * Call this to start a scan - don't forget to stop the scan once it's done.
 * Note the scan will not start immediately, because it needs to establish a connection with Google's servers - you'll be notified of this at onConnected
 */
public void startActivityRecognitionScan() {
    mActivityRecognitionClient = new ActivityRecognitionClient(mContext, this, this);
    mActivityRecognitionClient.connect();
    Log.i(TAG, "startActivityRecognitionScan");
}
```

```java
/**
 * Constructor
 *
 * @param context
 *            the application context.
 */
public ActivityRequester(Context context) {
    /* The application context. */
    this.context = context;
    /*
     * Create a new activity client, using this class to handle the
     * callbacks.
     */
    activityClient = new ActivityRecognitionClient(this.context, this, this);
    activityClient.connect();
    locbroadcastManager = LocalBroadcastManager.getInstance(context);
}
```

```java
@Override
public void onCreate() {
    super.onCreate();
    executorService = Executors.newSingleThreadExecutor();
    context = this;
    myTracksProviderUtils = MyTracksProviderUtils.Factory.get(this);
    handler = new Handler();
    myTracksLocationManager = new MyTracksLocationManager(this, handler.getLooper(), true);
    activityRecognitionPendingIntent = PendingIntent.getService(context, 0 /* requestCode */,
            new Intent(context, ActivityRecognitionIntentService.class), PendingIntent.FLAG_UPDATE_CURRENT);
    activityRecognitionClient = new ActivityRecognitionClient(
            context, activityRecognitionCallbacks, activityRecognitionFailedListener);
    activityRecognitionClient.connect();
    voiceExecutor = new PeriodicTaskExecutor(this, new AnnouncementPeriodicTaskFactory());
    splitExecutor = new PeriodicTaskExecutor(this, new SplitPeriodicTaskFactory());
    sharedPreferences = getSharedPreferences(Constants.SETTINGS_NAME, Context.MODE_PRIVATE);
    sharedPreferences.registerOnSharedPreferenceChangeListener(sharedPreferenceChangeListener);
    // onSharedPreferenceChanged might not set recordingTrackId.
    recordingTrackId = PreferencesUtils.RECORDING_TRACK_ID_DEFAULT;
    // Require voiceExecutor and splitExecutor to be created.
    sharedPreferenceChangeListener.onSharedPreferenceChanged(sharedPreferences, null);
    handler.post(registerLocationRunnable);
    /*
     * Try to restart the previous recording track in case the service has been
     * restarted by the system, which can sometimes happen.
     */
    Track track = myTracksProviderUtils.getTrack(recordingTrackId);
    if (track != null) {
        restartTrack(track);
    } else {
        if (isRecording()) {
            Log.w(TAG, "track is null, but recordingTrackId not -1L. " + recordingTrackId);
            updateRecordingState(PreferencesUtils.RECORDING_TRACK_ID_DEFAULT, true);
        }
        showNotification(false);
    }
}
```

```java
public void stopActivityUpdates() {
    ActivityRecognitionClient activityRecognitionClient = ActivityRecognition.getClient(context);
    activityRecognitionClient.removeActivityUpdates(pendingIntent);
    this.googleapiclient.disconnect();
    LocalBroadcastManager.getInstance(context).unregisterReceiver(broadcastReceiver);
}
```

```java
@Override
protected void onCreate(Bundle savedInstanceState) {
    super.onCreate(savedInstanceState);
    setContentView(R.layout.activity_main);
    // Activity Recog
    mActivityRecognitionClient = new ActivityRecognitionClient(
            getApplicationContext(), (com.google.android.gms.common.GooglePlayServicesClient.ConnectionCallbacks) this, this);
    Intent intent = new Intent(getApplicationContext(), ActivityRecognitionIntentService.class);
    mActivityRecognitionClient.connect();
    mActivityRecognitionPendingIntent = PendingIntent.getService(
            getApplicationContext(), 0 /* requestCode */, intent, PendingIntent.FLAG_UPDATE_CURRENT);
    mDrawerLayout = (DrawerLayout) findViewById(R.id.drawer_layout);
    mDrawerList = (ListView) findViewById(R.id.left_drawer);
    mDrawerList.setAdapter(new ArrayAdapter<String>(this, R.layout.drawer_list_item, drawerNames));
    mDrawerList.setOnItemClickListener(new DrawerItemClickListener());
    mDrawerToggle = new ActionBarDrawerToggle(this, /* host Activity */
            mDrawerLayout, /* DrawerLayout object */
            R.drawable.ic_drawer, /* nav drawer icon to replace 'Up' caret */
            R.string.drawer_open, /* "open drawer" description */
            R.string.drawer_close /* "close drawer" description */
    ) {
        /** Called when a drawer has settled in a completely closed state. */
        public void onDrawerClosed(View view) {
            super.onDrawerClosed(view);
        }

        /** Called when a drawer has settled in a completely open state. */
        public void onDrawerOpened(View drawerView) {
            super.onDrawerOpened(drawerView);
        }
    };
    // Set the drawer toggle as the DrawerListener
    mDrawerLayout.setDrawerListener(mDrawerToggle);
    getSupportActionBar().setDisplayHomeAsUpEnabled(true);
    getSupportActionBar().setHomeButtonEnabled(true);
    if (savedInstanceState == null) {
        selectItem(0);
    }
}
```

```java
/**
 * Call this to start a scan - don't forget to stop the scan once it's done.
 * Note the scan will not start immediately, because it needs to establish a connection with Google's servers - you'll be notified of this at onConnected
 */
public void startActivityRecognitionScan() {
    mActivityRecognitionClient = new ActivityRecognitionClient(context, this, this);
    mActivityRecognitionClient.connect();
    Log.d(TAG, "startActivityRecognitionScan");
}
```

```java
@Override
public void run() {
    Debug.log("Thread init");
    databaseHelper = Database.Helper.getInstance(context);
    alarm = (AlarmManager) context.getSystemService(ALARM_SERVICE);
    {
        Intent i = new Intent(context.getApplicationContext(), BackgroundService.class);
        i.putExtra(EXTRA_ALARM_CALLBACK, 1);
        alarmCallback = PendingIntent.getService(BackgroundService.this, 0 /* requestCode */, i, 0);
    }
    Looper.prepare();
    handler = new Handler();
    Debug.log("Registering for updates");
    prefs = PreferenceManager.getDefaultSharedPreferences(context);
    long id = prefs.getLong(SettingsFragment.PREF_CURRENT_PROFILE, 0);
    if (id > 0) currentProfile = Database.Profile.getById(databaseHelper, id, null);
    if (currentProfile == null) currentProfile = Database.Profile.getOffProfile(databaseHelper);
    metric = !prefs.getString(SettingsFragment.PREF_UNITS, SettingsFragment.PREF_UNITS_DEFAULT).equals(SettingsFragment.VALUE_UNITS_IMPERIAL);
    prefs.registerOnSharedPreferenceChangeListener(preferencesUpdated);
    LocalBroadcastManager.getInstance(context).registerReceiver(databaseUpdated, new IntentFilter(Database.Helper.NOTIFY_BROADCAST));
    Debug.log("Registering for power levels");
    context.registerReceiver(batteryReceiver, new IntentFilter(Intent.ACTION_BATTERY_CHANGED));
    Debug.log("Connecting ActivityRecognitionClient");
    activityIntent = PendingIntent.getService(context, 1, new Intent(context, BackgroundService.class), 0);
    activityClient = new ActivityRecognitionClient(context, activityConnectionCallbacks, activityConnectionFailed);
    activityClient.connect();
    Debug.log("Connecting LocationClient");
    locationClient = new LocationClient(context, locationConnectionCallbacks, locationConnectionFailed);
    locationClient.connect();
    Debug.log("Entering loop");
    handler.post(new Runnable() {
        @Override
        public void run() {
            updateListeners(FLAG_SETUP);
        }
    });
    // Blocks until the looper quits; cleanup follows.
    Looper.loop();
    Debug.log("Exiting loop");
    context.unregisterReceiver(batteryReceiver);
    LocalBroadcastManager.getInstance(context).unregisterReceiver(databaseUpdated);
    prefs.unregisterOnSharedPreferenceChangeListener(preferencesUpdated);
    if (activityConnected) {
        activityClient.removeActivityUpdates(activityIntent);
        activityClient.disconnect();
    }
    if (locationConnected) {
        locationClient.removeLocationUpdates(locationListener);
        locationClient.disconnect();
    }
}
```

Example source code for com.google.android.gms.location.ActivityRecognitionResult

```java
@Override
public void onReceive(Context context, Intent intent) {
    // Ignore intents that carry no activity recognition result.
    if (!ActivityRecognitionResult.hasResult(intent)) {
        return;
    }
    ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);
    ArrayList<DetectedActivity> detectedActivities = new ArrayList<>(result.getProbableActivities());
    // Find the activity with the highest percentage
    lastActivity = getProbableActivity(detectedActivities);
    Log.d(TAG, "MOST LIKELY ACTIVITY: " + getActivityString(lastActivity.getType()) + " " + lastActivity.getConfidence());
    if (lastActivity.getType() == DetectedActivity.STILL) {
        if (config.isDebugging()) {
            Toast.makeText(context, "Detected STILL Activity", Toast.LENGTH_SHORT).show();
        }
        // stopTracking();
        // we will delay stop tracking after position is found
    } else {
        if (config.isDebugging()) {
            Toast.makeText(context, "Detected ACTIVE Activity", Toast.LENGTH_SHORT).show();
        }
        startTracking();
    }
    // else do nothing
}
```
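`getProbableActivity(...)` is not shown above. Below is a minimal sketch of what such a helper might do: pick the entry with the highest confidence. `ProbableActivity` and `Detected` are hypothetical stand-ins for the project's helper and for `DetectedActivity`.

Note that `ActivityRecognitionResult` already provides `getMostProbableActivity()`, which another example in this article uses.

```java
import java.util.List;
import java.util.Optional;

public class ProbableActivity {
    // Plain stand-in for DetectedActivity: an activity type and a 0-100 confidence.
    static final class Detected {
        final String type;
        final int confidence;

        Detected(String type, int confidence) {
            this.type = type;
            this.confidence = confidence;
        }
    }

    // Returns the activity with the highest confidence, or empty if the list is empty.
    static Optional<Detected> mostProbable(List<Detected> activities) {
        Detected best = null;
        for (Detected d : activities) {
            if (best == null || d.confidence > best.confidence) {
                best = d;
            }
        }
        return Optional.ofNullable(best);
    }

    public static void main(String[] args) {
        List<Detected> list = List.of(
                new Detected("STILL", 20),
                new Detected("WALKING", 70),
                new Detected("IN_VEHICLE", 10));
        mostProbable(list).ifPresent(d -> System.out.println(d.type + " " + d.confidence)); // WALKING 70
    }
}
```

Returning an `Optional` makes the empty-list case explicit; the original code would need its own guard before calling `getType()`.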

```java
/**
 * Handles incoming intents.
 * @param intent The Intent is provided (inside a PendingIntent) when requestActivityUpdates()
 * is called.
 */
@SuppressWarnings("unchecked")
@Override
protected void onHandleIntent(Intent intent) {
    ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);
    // Get the list of the probable activities associated with the current state of the
    // device. Each activity is associated with a confidence level, which is an int between
    // 0 and 100.
    ArrayList<DetectedActivity> detectedActivities = new ArrayList<>(result.getProbableActivities());
    PreferenceManager.getDefaultSharedPreferences(this)
            .edit()
            .putString(Constants.KEY_DETECTED_ACTIVITIES, Utils.detectedActivitiesToJson(detectedActivities))
            .apply();
    // Log each activity.
    Log.i(TAG, "activities detected");
    for (DetectedActivity da : detectedActivities) {
        Log.i(TAG, Utils.getActivityString(
                getApplicationContext(), da.getType()) + " " + da.getConfidence() + "%"
        );
    }
}
```

```java
@Override
protected void onNewIntent(Intent intent) {
    Log.v(TAG, "onNewIntent");
    ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);
    // get most probable activity
    DetectedActivity probably = result.getMostProbableActivity();
    if (probably.getConfidence() >= 50) { // the docs say over 50% is likely; under that it is not certain at all.
        speech(getActivityString(probably.getType()));
    }
    logger.append("Most Probable: " + getActivityString(probably.getType()) + " " + probably.getConfidence() + "%\n");
    // or we could go through the list, which is sorted from most likely to least likely.
    List<DetectedActivity> fulllist = result.getProbableActivities();
    for (DetectedActivity da : fulllist) {
        if (da.getConfidence() >= 50) {
            logger.append("-->" + getActivityString(da.getType()) + " " + da.getConfidence() + "%\n");
            speech(getActivityString(da.getType()));
        }
    }
}
```

```java
@Override
public void onReceive(Context context, Intent intent) {
    // Ignore intents that carry no activity recognition result.
    if (!ActivityRecognitionResult.hasResult(intent)) {
        return;
    }
    ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);
    ArrayList<DetectedActivity> detectedActivities = new ArrayList<>(result.getProbableActivities());
    // Find the activity with the highest percentage
    lastActivity = Constants.getProbableActivity(detectedActivities);
    Log.w(TAG, "MOST LIKELY ACTIVITY: " + Constants.getActivityString(lastActivity.getType()) + " " + lastActivity.getConfidence());
    Intent mIntent = new Intent(Constants.CALLBACK_ACTIVITY_UPDATE);
    mIntent.putExtra(Constants.ACTIVITY_EXTRA, detectedActivities);
    getApplicationContext().sendBroadcast(mIntent);
    Log.w(TAG, "Activity is recording: " + isRecording);
    if (lastActivity.getType() == DetectedActivity.STILL && isRecording) {
        showDebugToast(context, "Detected Activity was STILL, Stop recording");
        stopRecording();
    } else if (lastActivity.getType() != DetectedActivity.STILL && !isRecording) {
        showDebugToast(context, "Detected Activity was ACTIVE, Start Recording");
        startRecording();
    }
    // else do nothing
}
```

```java
/**
 * Handles incoming intents.
 *
 * @param intent The Intent is provided (inside a PendingIntent) when requestActivityUpdates()
 *               is called.
 */
@Override
protected void onHandleIntent(Intent intent) {
    ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);
    Intent localIntent = new Intent(Constants.BROADCAST_ACTION);
    // Get the list of the probable activities associated with the current state of the
    // device. Each activity is associated with a confidence level, which is an int between
    // 0 and 100.
    ArrayList<DetectedActivity> detectedActivities = new ArrayList<>(result.getProbableActivities());
    // Log each activity.
    Log.i(TAG, "activities detected");
    for (DetectedActivity da : detectedActivities) {
        Log.i(TAG, Constants.getActivityString(
                getApplicationContext(), da.getType()) + " " + da.getConfidence() + "%"
        );
    }
    // Broadcast the list of detected activities.
    localIntent.putExtra(Constants.ACTIVITY_EXTRA, detectedActivities);
    LocalBroadcastManager.getInstance(this).sendBroadcast(localIntent);
}
```

/**

* Handles incoming intents.

*

* @param intent The Intent is provided (inside a PendingIntent) when requestActivityUpdates()

* is called.

*/

@Override

protected void onHandleIntent(Intent intent) {

ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);

Intent localIntent = new Intent(Constants.ACTIVITY_broADCAST_ACTION);

if (result == null) {

return;

}

ArrayList<DetectedActivity> res = new ArrayList<>();

for (DetectedActivity d : result.getProbableActivities()) {

if (d.getConfidence() > Constants.MIN_ACTIVITY_CONFIDENCE) {

res.add(d);

}

}

// broadcast the list of detected activities.

localIntent.putExtra(Constants.ACTIVITY_EXTRA,res);

LocalBroadcastManager.getInstance(this).sendBroadcast(localIntent);

}
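This handler forwards only activities whose confidence exceeds `Constants.MIN_ACTIVITY_CONFIDENCE`. The same filter can be written in plain Java. The sketch assumes a hypothetical `Detected` stand-in for `DetectedActivity` that carries just the type and the 0 to 100 confidence, and passes the threshold in because its real value is not shown:

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical, Android-free model of the confidence filter above. */
final class ConfidenceFilter {
    /** Minimal stand-in for DetectedActivity. */
    static final class Detected {
        final int type;
        final int confidence; // 0..100
        Detected(int type, int confidence) {
            this.type = type;
            this.confidence = confidence;
        }
    }

    /** Keeps activities strictly above minConfidence, preserving their order. */
    static List<Detected> filter(List<Detected> probable, int minConfidence) {
        List<Detected> kept = new ArrayList<>();
        for (Detected d : probable) {
            if (d.confidence > minConfidence) {
                kept.add(d);
            }
        }
        return kept;
    }
}
```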

@Override

public void onReceive(Context context,Toast.LENGTH_SHORT).show();

}

startTracking();

}

//else do nothing

}

@Subscribe

public void messageAvailable(AndroidEventMessage m) {

if(m.getId().equals(Configuration.NO_CONNECTION)){

// stop the geo and iBeacon services

Log.i("pandora","stopping services because NO CONNECTION");

stopServices(contexto);

}else if(m.getId().equals(Configuration.CONNECTION)){

// reactivate the geo and iBeacon services

Log.i("pandora","restarting services because CONNECTION");

startServices(contexto,this.iBeacon,true);

}else if(m.getId().equals(Configuration.NEW_ACTIVITY) && GeoService.receivedFix>2){

ActivityRecognitionResult result = (ActivityRecognitionResult) m.getContent();

String activity = parseActivity(result.getMostProbableActivity());

Log.i("pandora","new activity: "+activity);

if(result.getMostProbableActivity().getType()==DetectedActivity.STILL && result.getMostProbableActivity().getConfidence()>80){

Log.i("pandora","stopping services because STILL");

stopServices(contexto);

}else {

Log.i("pandora","restarting services because NOT STILL");

startServices(contexto,true);

}

}

}

/**

* Handles incoming intents.

* @param intent The Intent is provided (inside a PendingIntent) when requestActivityUpdates()

* is called.

*/

@SuppressWarnings("unchecked")

@Override

protected void onHandleIntent(Intent intent) {

ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);

// Get the list of the probable activities associated with the current state of the

// device. Each activity is associated with a confidence level,which is an int between

// 0 and 100.

ArrayList<DetectedActivity> detectedActivities = (ArrayList) result.getProbableActivities();

PreferenceManager.getDefaultSharedPreferences(this)

.edit()

.putString(Constants.KEY_DETECTED_ACTIVITIES,Utils.detectedActivitiesToJson(detectedActivities))

.apply();

// Log each activity.

for (DetectedActivity da : detectedActivities) {

Log.i(TAG,Utils.getActivityString(

getApplicationContext(),da.getType()) + " " + da.getConfidence() + "%"

);

}

}

@Override

protected void onHandleIntent(Intent intent) {

if (ActivityRecognitionResult.hasResult(intent)) {

ActivityRecognitionResult result =

ActivityRecognitionResult.extractResult(intent);

DetectedActivity mostProbableActivity = result.getMostProbableActivity();

int confidence = mostProbableActivity.getConfidence();

int activityType = mostProbableActivity.getType();

long waitTime = UserSettings.getNotDrivingTime(this);

boolean diffTimeOK = (new Date().getTime() - waitTime) >= Utils.minutesToMillis(10);

if (confidence >= Utils.DETECTION_THRESHOLD && activityType == DetectedActivity.IN_VEHICLE && diffTimeOK) {

if (!CarMode.running) {

if (UserSettings.getGPS(this) && ContextCompat.checkSelfPermission(this,Manifest.permission.ACCESS_FINE_LOCATION) == PackageManager.PERMISSION_GRANTED)

startService(new Intent(this,SpeedService.class));

else

startService(new Intent(this,CarMode.class));

}

} else if (confidence >= Utils.DETECTION_THRESHOLD) {

stopService(new Intent(this,CarMode.class));

UserSettings.setGPSDrive(this,false);

}

}

}
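The car-mode branch above combines three checks: a confidence threshold, the `IN_VEHICLE` type, and a 10-minute wait since the last "not driving" mark. The sketch below isolates that decision in plain Java. `CarModeDecision` is hypothetical. The threshold is passed in because `Utils.DETECTION_THRESHOLD` is not shown. The `CarMode.running` and GPS-permission checks are left out. One quirk is preserved: a confident `IN_VEHICLE` result that arrives before the wait has elapsed falls into the `else if` branch and stops car mode.

```java
import java.util.concurrent.TimeUnit;

/** Hypothetical, Android-free model of the car-mode branch above. */
final class CarModeDecision {
    /** Same value as DetectedActivity.IN_VEHICLE. */
    static final int IN_VEHICLE = 0;
    /** The snippet waits 10 minutes after the last "not driving" mark. */
    static final long WAIT_MILLIS = TimeUnit.MINUTES.toMillis(10);

    enum Action { START_CAR_MODE, STOP_CAR_MODE, NONE }

    static Action decide(int type, int confidence, int threshold,
                         long nowMillis, long notDrivingSinceMillis) {
        boolean waitedLongEnough = nowMillis - notDrivingSinceMillis >= WAIT_MILLIS;
        if (confidence >= threshold && type == IN_VEHICLE && waitedLongEnough) {
            return Action.START_CAR_MODE;
        } else if (confidence >= threshold) {
            // Any other confident result (including IN_VEHICLE before the
            // wait has elapsed) stops car mode, like the original else-if.
            return Action.STOP_CAR_MODE;
        }
        return Action.NONE; // low confidence: leave the current state alone
    }
}
```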

@Override

public void onReceive(Context context,Intent intent) {

if (ActivityRecognitionResult.hasResult(intent)) {

// Get the update

ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);

// Get the most probable activity

DetectedActivity mostProbableActivity = result.getMostProbableActivity();

int confidence = mostProbableActivity.getConfidence();

int activityType = mostProbableActivity.getType();

String activityName = getNameFromType(activityType);

DataBundle b = _bundlePool.borrowBundle();

b.putInt(KEY_CONFIDENCE,confidence);

b.putString(KEY_RECOGNIZED_ACTIVITY,activityName);

b.putLong(Input.KEY_TIMESTAMP,System.currentTimeMillis());

b.putInt(Input.KEY_TYPE,getType().toInt());

post(b);

}

}

@Override

protected void onHandleIntent(Intent intent) {

if (mPreferenceUtils.isActivityUpdatesStarted()) {

if (ActivityRecognitionResult.hasResult(intent)) {

mPreferenceUtils.setLastActivityTime(System.currentTimeMillis());

ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);

boolean moving = isMoving(result);

mPreferenceUtils.setMoving(moving);

if (moving) {

if (!mPreferenceUtils.isLocationUpdatesStarted()) {

mLocationUpdatesController.startLocationUpdates();

}

} else {

if (mPreferenceUtils.isLocationUpdatesStarted()) {

mLocationUpdatesController.stopLocationUpdates();

}

}

}

}

}

/**

* Detects whether the device is in a moving vehicle.

*

* @param result the activity recognition result

* @return <code>true</code> if the device is in a vehicle, or was detected in one within the tolerance window.

*/

private boolean isMoving(ActivityRecognitionResult result) {

long currentTime = System.currentTimeMillis();

DetectedActivity mostProbableActivity = result.getMostProbableActivity();

int confidence = mostProbableActivity.getConfidence();

int type = mostProbableActivity.getType();

if ((type == ON_FOOT || type == RUNNING || type == WALKING) && confidence > 75) {

return false;

}

if (type == IN_VEHICLE && confidence > 75) {

mPreferenceUtils.setDetectionTimeMillis(currentTime);

return true;

}

if (mPreferenceUtils.wasMoving()) {

long tolerance = mPreferenceUtils.getActivityRecognitionToleranceMillis();

long lastDetectionTime = mPreferenceUtils.getDetectionTimeMillis();

long elapsedtime = currentTime - lastDetectionTime;

return elapsedtime <= tolerance;

}

return false;

}
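`isMoving()` adds hysteresis. A confident on-foot result ends the "moving" state immediately. A confident `IN_VEHICLE` result refreshes a timestamp. Any ambiguous update keeps the previous "moving" state only while it is within the tolerance window. The sketch below reproduces that logic with the `SharedPreferences` reads replaced by fields of a hypothetical `MovementDetector` class. It assumes `setMoving()` and `wasMoving()` read and write the same preference. The 75% threshold comes from the snippet, and the constants mirror `DetectedActivity`.

```java
/**
 * Hypothetical, Android-free model of isMoving(): the preference values
 * (last detection time, previous result, tolerance) become fields.
 */
final class MovementDetector {
    // Same values as the DetectedActivity constants used in the snippet.
    static final int IN_VEHICLE = 0, ON_FOOT = 2, WALKING = 7, RUNNING = 8;

    private final long toleranceMillis;
    private long lastVehicleMillis;   // plays the role of getDetectionTimeMillis()
    private boolean wasMoving;        // plays the role of wasMoving()

    MovementDetector(long toleranceMillis) {
        this.toleranceMillis = toleranceMillis;
    }

    /** Classifies one update and remembers the result, like setMoving(). */
    boolean update(int type, int confidence, long nowMillis) {
        boolean moving = classify(type, confidence, nowMillis);
        wasMoving = moving;
        return moving;
    }

    private boolean classify(int type, int confidence, long nowMillis) {
        boolean onFoot = type == ON_FOOT || type == WALKING || type == RUNNING;
        if (onFoot && confidence > 75) {
            return false;                  // confidently on foot: not driving
        }
        if (type == IN_VEHICLE && confidence > 75) {
            lastVehicleMillis = nowMillis; // refresh the vehicle timestamp
            return true;
        }
        // Ambiguous update: stay "moving" only within the tolerance window.
        return wasMoving && nowMillis - lastVehicleMillis <= toleranceMillis;
    }
}
```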

@Override

protected void onHandleIntent(Intent intent) {

// If the intent contains an update

if (ActivityRecognitionResult.hasResult(intent)) {

// Get the update

ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);

Intent i = new Intent(BROADCAST_UPDATE);

i.putExtra(RECOGNITION_RESULT,result);

LocalBroadcastManager manager = LocalBroadcastManager.getInstance(this);

manager.sendBroadcast(i);

}

}

private DetectedActivity getActivity(ActivityRecognitionResult result) {

// Get the most probable activity from the list of activities in the result

DetectedActivity mostProbableActivity = result.getMostProbableActivity();

// If the activity is ON_FOOT,choose between WALKING or RUNNING

if (mostProbableActivity.getType() == DetectedActivity.ON_FOOT) {

// Iterate through all possible activities. The activities are sorted by most probable activity first.

for (DetectedActivity activity : result.getProbableActivities()) {

if (activity.getType() == DetectedActivity.WALKING || activity.getType() == DetectedActivity.RUNNING) {

return activity;

}

}

// It is ON_FOOT,but not sure if it is WALKING or RUNNING

Log.i(TAG,"Activity ON_FOOT,but not sure if it is WALKING or RUNNING.");

return mostProbableActivity;

}

else

{

return mostProbableActivity;

}

}
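`getActivity()` refines a generic `ON_FOOT` result into `WALKING` or `RUNNING` when either one appears in the probable list. The sketch below is a plain-Java version with a hypothetical `Detected` stand-in. Like the snippet's comment, it assumes the list is ordered most probable first.

```java
import java.util.List;

/** Hypothetical, Android-free model of getActivity() above. */
final class FootRefiner {
    // Same values as DetectedActivity.ON_FOOT, WALKING and RUNNING.
    static final int ON_FOOT = 2, WALKING = 7, RUNNING = 8;

    /** Minimal stand-in for DetectedActivity. */
    static final class Detected {
        final int type;
        final int confidence;
        Detected(int type, int confidence) {
            this.type = type;
            this.confidence = confidence;
        }
    }

    /** Replaces a generic ON_FOOT result with WALKING or RUNNING when one is listed. */
    static Detected refine(Detected mostProbable, List<Detected> probable) {
        if (mostProbable.type != ON_FOOT) {
            return mostProbable;
        }
        // Assumes most-probable-first ordering, as the snippet's comment states.
        for (Detected d : probable) {
            if (d.type == WALKING || d.type == RUNNING) {
                return d;
            }
        }
        return mostProbable; // ON_FOOT, but the sub-type is unknown
    }
}
```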

/**

* Called when a new activity detection update is available.

*/

@Override

protected void onHandleIntent(Intent intent) {

//...

// If the intent contains an update

if (ActivityRecognitionResult.hasResult(intent)) {

// Get the update

ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);

DetectedActivity mostProbableActivity = result.getMostProbableActivity();

// Get the confidence % (probability)

int confidence = mostProbableActivity.getConfidence();

// Get the type

int activityType = mostProbableActivity.getType();

Intent sendToLoggerIntent = new Intent("com.pinsonault.androidsensorlogger.ACTIVITY_RECOGNITION_DATA");

sendToLoggerIntent.putExtra("Activity",activityType);

sendToLoggerIntent.putExtra("Confidence",confidence);

sendBroadcast(sendToLoggerIntent);

}

}

@Override

public void onHandleIntent(Intent intent) {

if (DEBUG) Log.d(TAG,"onHandleIntent: intent="+intent);

if (!ActivityRecognitionResult.hasResult(intent)) {

return;

}

ActivityRecognitionResult arr = ActivityRecognitionResult.extractResult(intent);

DetectedActivity mpa = arr.getMostProbableActivity();

Intent i = new Intent(Constants.ACTION_RECOGNITION);

i.putExtra(Constants.EXTRA_TYPE,mpa.getType());

i.putExtra(Constants.EXTRA_CONFIDENCE,mpa.getConfidence());

LocalBroadcastManager.getInstance(this).sendBroadcast(i);

}

@Override

protected void onHandleIntent(Intent intent) {

if (!LocationActivity.ACTION_ACTIVITY_RECOGNITION.equals(intent.getAction())) { // compare strings with equals(), not !=

return;

}

if (ActivityRecognitionResult.hasResult(intent)) {

ActivityRecognitionResult result = ActivityRecognitionResult

.extractResult(intent);

DetectedActivity detectedActivity = result

.getMostProbableActivity();

int activityType = detectedActivity.getType();

Log.v(LocationActivity.TAG,"activity_type == " + activityType);

// Put the activity_type as an intent extra and send a broadcast.

Intent send_intent = new Intent(

LocationActivity.ACTION_ACTIVITY_RECOGNITION);

send_intent.putExtra("activity_type",activityType);

sendBroadcast(send_intent);

}

}

@Override

protected void onGoogleApiClientReady(GoogleApiClient apiClient,Observer<? super ActivityRecognitionResult> observer) {

receiver = new ActivityUpdatesBroadcastReceiver(observer);

context.registerReceiver(receiver,new IntentFilter(ACTION_ACTIVITY_DETECTED));

PendingIntent receiverIntent = getReceiverPendingIntent();

ActivityRecognition.ActivityRecognitionApi.requestActivityUpdates(apiClient,detectionIntervalMilliseconds,receiverIntent);

}

@Override

public void onReceive(Context context,Intent intent) {

if (ActivityRecognitionResult.hasResult(intent)) {

ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);

observer.onNext(result);

}

}

@Override

protected void onHandleIntent(final Intent intent) {

if (DEBUG) {

MyLog.i(CLS_NAME,"onHandleIntent");

}

if (SPH.getMotionEnabled(getApplicationContext())) {

if (intent != null) {

if (DEBUG) {

examineIntent(intent);

}

if (ActivityRecognitionResult.hasResult(intent)) {

final Motion motion = extractMotion(intent);

if (motion != null) {

MotionHelper.setMotion(getApplicationContext(),motion);

} else {

if (DEBUG) {

MyLog.i(CLS_NAME,"onHandleIntent: motion null: ignoring");

}

}

} else {

if (DEBUG) {

MyLog.i(CLS_NAME,"onHandleIntent: no ActivityRecognition results");

}

}

} else {

if (DEBUG) {

MyLog.w(CLS_NAME,"onHandleIntent: intent: null");

}

}

} else {

if (DEBUG) {

MyLog.i(CLS_NAME,"onHandleIntent: user has switched off. Don't store.");

}

}

}

private void handleResult(@NonNull DetectedActivityResult detectedActivityResult){

ActivityRecognitionResult ar = detectedActivityResult.getActivityRecognitionResult();

RecognizedActivityResult result = new RecognizedActivityResult();

List<DetectedActivity> acts = ar.getProbableActivities();

result.activities = new RecognizedActivity[acts.size()];

for(int i = 0; i < acts.size(); ++i){

DetectedActivity act = acts.get(i);

result.activities[i] = new RecognizedActivity(act.getType(),act.getConfidence());

}

resultCallback.onResult(result);

}

public static SubjectFactory<ActivityRecognitionResultSubject,ActivityRecognitionResult> type() {

return new SubjectFactory<ActivityRecognitionResultSubject,ActivityRecognitionResult>() {

@Override

public ActivityRecognitionResultSubject getSubject(FailureStrategy fs,ActivityRecognitionResult that) {

return new ActivityRecognitionResultSubject(fs,that);

}

};

}

/**

* Handles new motion activity data

*

* @param userActivityResult the latest activity recognition result

*/

public void handleData(ActivityRecognitionResult userActivityResult) {

probableActivities = userActivityResult.getProbableActivities();

mostProbableActivity = userActivityResult.getMostProbableActivity();

dumpdata();

}

@Override

public void onReceive(Context context,Intent intent) {

// Modify the status of mRealTimePositionVelocityCalculator only if the status is set to auto

// (indicated by mAutoSwitchGroundTruthMode).

if (mAutoSwitchGroundTruthMode) {

ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);

setGroundTruthModeOnResult(result);

}

}

/**

* Sets up the ground truth mode of {@link RealTimePositionVelocityCalculator} given a result

* from an Activity Recognition update. For activities other than {@link DetectedActivity#STILL}

* and {@link DetectedActivity#TILTING}, we conservatively assume the user is moving and use the

* last WLS position solution as ground truth for corrected residual computation.

*/

private void setGroundTruthModeOnResult(ActivityRecognitionResult result){

if (result != null){

int detectedActivityType = result.getMostProbableActivity().getType();

if (detectedActivityType == DetectedActivity.STILL

|| detectedActivityType == DetectedActivity.TILTING){

mRealTimePositionVelocityCalculator.setResidualPlotMode(

RealTimePositionVelocityCalculator.RESIDUAL_MODE_STILL,null);

} else {

mRealTimePositionVelocityCalculator.setResidualPlotMode(

RealTimePositionVelocityCalculator.RESIDUAL_MODE_MOVING,null);

}

}

}

/**

* Called when a new activity detection update is available.

*/

@Override

protected void onHandleIntent(final Intent intent) {

if (ActivityRecognitionResult.hasResult(intent)) {

new Handler(getMainLooper()).post(new Runnable() {

@Override

public void run() {

activityRecognizerListener.onActivityRecognized(

ActivityType.values()[ActivityRecognitionResult.extractResult(intent).getMostProbableActivity().getType()]

);

}

});

}

}
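The handler above converts the type with `ActivityType.values()[type]`, which treats the `DetectedActivity` constant as an enum ordinal. The project's `ActivityType` enum is not shown, so whether that works depends on how it is declared. The documented constants have a gap: nothing is defined as 6 between `TILTING` (5) and `WALKING` (7). For an enum with one entry per constant, an ordinal lookup therefore goes wrong past the gap. `values()[7]` yields `RUNNING`, and `values()[8]` throws `ArrayIndexOutOfBoundsException`. The sketch below looks values up by code instead, using a hypothetical `ActivityKind` enum:

```java
/**
 * Hypothetical enum keyed by the documented DetectedActivity constant values.
 * Note the gap: there is no constant 6 between TILTING (5) and WALKING (7).
 */
enum ActivityKind {
    IN_VEHICLE(0), ON_BICYCLE(1), ON_FOOT(2), STILL(3),
    UNKNOWN(4), TILTING(5), WALKING(7), RUNNING(8);

    final int code;

    ActivityKind(int code) {
        this.code = code;
    }

    /** Looks the value up by code instead of by ordinal; unmapped codes become UNKNOWN. */
    static ActivityKind fromCode(int code) {
        for (ActivityKind kind : values()) {
            if (kind.code == code) {
                return kind;
            }
        }
        return UNKNOWN;
    }
}
```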

@Override

protected void onHandleIntent(Intent intent) {

ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);

ArrayList<DetectedActivity> detectedActivities = (ArrayList) result.getProbableActivities();

Log.d(TAG,"Detected activities:");

for (DetectedActivity da: detectedActivities) {

Log.d(TAG,getActivityString(da.getType()) + " (" + da.getConfidence() + "%)");

}

Intent localIntent = new Intent(BROADCAST_ACTION);

localIntent.putExtra(ACTIVITY_EXTRA,detectedActivities);

LocalBroadcastManager.getInstance(this).sendBroadcast(localIntent);

}

@Override

protected void onHandleIntent(Intent intent) {

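if (!ActivityRecognitionResult.hasResult(intent)) {

return; // extractResult() returns null when the intent carries no result

}

ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);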

if (LocationTrackingService.isRunning()) {

LocationTrackingService.get().locationManager().onActivitiesDetected(result);

}

}

public void onActivitiesDetected(ActivityRecognitionResult recognitionResult) {

// If you tilt the phone, a TILTING update comes through; ignore it

if (onlyTilting(recognitionResult.getProbableActivities())) {

return;

}

if (_detectedActivitiesCache.size() == 5) {

_detectedActivitiesCache.remove(0);

}

_detectedActivitiesCache.add(recognitionResult);

}
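`onActivitiesDetected()` keeps at most five recent results by removing index 0 of a list before appending. The sketch below implements the same bounded window with `ArrayDeque`, which makes the oldest-first eviction explicit. `RecentResults` is hypothetical. `onlyTilting()` is not shown in the source, so the version here assumes it means "every probable activity is `TILTING`".

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/** Hypothetical fixed-size window like _detectedActivitiesCache (capacity 5 there). */
final class RecentResults<T> {
    /** Same value as DetectedActivity.TILTING. */
    static final int TILTING = 5;

    private final int capacity;
    private final Deque<T> window = new ArrayDeque<>();

    RecentResults(int capacity) {
        this.capacity = capacity;
    }

    /** Adds a result, evicting the oldest one once the window is full. */
    void add(T result) {
        if (window.size() == capacity) {
            window.removeFirst();
        }
        window.addLast(result);
    }

    /** Oldest-to-newest copy of the current window. */
    List<T> snapshot() {
        return new ArrayList<>(window);
    }

    /** Assumed meaning of onlyTilting(): every probable activity is TILTING. */
    static boolean onlyTilting(List<Integer> probableTypes) {
        if (probableTypes.isEmpty()) {
            return false;
        }
        for (int type : probableTypes) {
            if (type != TILTING) {
                return false;
            }
        }
        return true;
    }
}
```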

/**

* Handles incoming intents.

*

* @param intent The Intent is provided (inside a PendingIntent) when requestActivityUpdates()

* is called.

*/

@Override

protected void onHandleIntent(Intent intent) {

int currentActivity = DetectedActivity.UNKNOWN;

//get the message handler

Messenger messenger = null;

Bundle extras = intent.getExtras();

if (extras != null) {

messenger = (Messenger) extras.get("MESSENGER");

}

//get the activity info

ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);

//get most probable activity

DetectedActivity probably = result.getMostProbableActivity();

if (probably.getConfidence() >= 50) { //the docs say over 50% is likely; under 50% it is not certain at all.

currentActivity = probably.getType();

}

Log.v(TAG,"about to send message");

if (messenger != null) {

Message msg = Message.obtain();

msg.arg1 = currentActivity;

Log.v(TAG,"Sent message");

try {

messenger.send(msg);

} catch (android.os.RemoteException e1) {

Log.w(getClass().getName(),"Exception sending message",e1);

}

}

}

@Override

protected void onHandleIntent(Intent intent) {

// Get the ActivityRecognitionResult

ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);

// Get the list of the probable activities

ArrayList<DetectedActivity> detectedActivities = (ArrayList) result.getProbableActivities();

String type = "";

float confidence = 0;

// Select the type with the highest confidence

for (DetectedActivity da : detectedActivities) {

if (da.getConfidence() > confidence) {

confidence = da.getConfidence();

type = Constants.getActivityString(

getApplicationContext(),da.getType());

}

}

// Show the current highest-confidence activity in the notification

NotificationCompat.Builder mBuilder =

new NotificationCompat.Builder(this)

.setSmallIcon(R.mipmap.ic_launcher)

.setContentTitle("Current activity: " + type)

.setOngoing(true)

.setContentText("Confidence: " + String.valueOf(confidence) + "%");

int mNotificationId = Constants.NOTIFICATION_ID;

NotificationManager mNotifyMgr =

(NotificationManager) getSystemService(NOTIFICATION_SERVICE);

mNotifyMgr.notify(mNotificationId,mBuilder.build());

}

@Override

protected void onHandleIntent(Intent intent) {

if (ActivityRecognitionResult.hasResult(intent)) {

ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);

if (DSContext.CONTEXT != null && DSContext.CONTEXT.activityNode != null) {

String name = getNameFromType(result.getMostProbableActivity().getType());

DSContext.CONTEXT.activityNode.setValue(new Value(name));

}

}

}

/** Called when a new activity detection update is available.

*

*/

@Override

protected void onHandleIntent(Intent intent){

if(ActivityRecognitionResult.hasResult(intent)){

ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);

// notify

AndroidEventBus.getInstance().getBus().post(new AndroidEventMessage(Configuration.NEW_ACTIVITY,result));

}

}

/**

* Called when a new activity detection update is available.

*/

@Override

protected void onHandleIntent(Intent intent) {

Log.i("AS Service","onHandleIntent: Got here!");

if (ActivityRecognitionResult.hasResult(intent)) {

// Get the update

ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);

DetectedActivity mostProbableActivity = result.getMostProbableActivity();

// Get the confidence % (probability)

int confidence = mostProbableActivity.getConfidence();

// Get the type

int activityType = mostProbableActivity.getType();

// process

Intent broadcastIntent = new Intent(ACTIVITY_RECOGNITION_DATA);

broadcastIntent.putExtra(ACTIVITY,activityType);

broadcastIntent.putExtra(CONFIDENCE,confidence);

LocalbroadcastManager.getInstance(this).sendbroadcast(broadcastIntent);

Log.i("AS Service","Sent a local broadcast with the activity data.");

}

Log.i("AS","onHandleIntent called");

}

@Override

public void onHandleIntent(Intent intent) {

if (ActivityRecognitionResult.hasResult(intent)) {

ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);

List<DetectedActivity> activities = result.getProbableActivities();

DetectedActivity bestActivity = null;

int bestConfidence = 0;

for (DetectedActivity activity : activities) {

Log.d(TAG,"Activity " + activity.getType() + " " + activity.getConfidence());

if (ACTIVITY_MASK.contains(activity.getType())) {

if (activity.getConfidence() > bestConfidence) {

bestActivity = activity;

bestConfidence = activity.getConfidence();

}

}

}

DetectedActivity currentActivity = bestActivity;

long currentTime = System.currentTimeMillis();

if (currentActivity == null) {

Log.w(TAG,"No activity matches!");

return;

}

if (mGameHandler != null && mGameHandler.getLooper().getThread().isAlive()) {

mGameHandler.sendMessage(

mGameHandler.obtainMessage(MESSAGE_ACTIVITY,new ActivityLog(currentActivity,currentTime))

);

}

}

}
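The handler above keeps the highest-confidence activity whose type is in `ACTIVITY_MASK`, and bails out when none matches. The sketch below shows that selection in plain Java, with a hypothetical `Detected` stand-in and the mask passed in as a `Set<Integer>`. As in the original, the running best starts at 0 and uses a strict `>` comparison, so a masked activity reported at 0% confidence is never chosen.

```java
import java.util.List;
import java.util.Set;

/** Hypothetical, Android-free model of the ACTIVITY_MASK selection above. */
final class MaskedBest {
    /** Minimal stand-in for DetectedActivity. */
    static final class Detected {
        final int type;
        final int confidence;
        Detected(int type, int confidence) {
            this.type = type;
            this.confidence = confidence;
        }
    }

    /**
     * Returns the highest-confidence activity whose type is in the mask,
     * or null when none qualifies (the snippet then logs and returns).
     */
    static Detected best(List<Detected> probable, Set<Integer> mask) {
        Detected best = null;
        int bestConfidence = 0;
        for (Detected d : probable) {
            if (mask.contains(d.type) && d.confidence > bestConfidence) {
                best = d;
                bestConfidence = d.confidence;
            }
        }
        return best;
    }
}
```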

@Override

public void onReceive(Context context,Intent intent) {

if (ActivityRecognitionResult.hasResult(intent)) {

// Get the update

ActivityRecognitionResult result = ActivityRecognitionResult.extractResult(intent);

// Get the most probable activity

DetectedActivity mostProbableActivity = result.getMostProbableActivity();

int confidence = mostProbableActivity.getConfidence();

int activityType = mostProbableActivity.getType();

String activityName = getNameFromType(activityType);

if (DEBUG)

Log.i(TAG,String.format("Recognized %s with confidence %d",activityName,confidence));

DataBundle b = _bundlePool.borrowBundle();

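b.putInt(KEY_CONFIDENCE,confidence);

b.putString(KEY_RECOGNIZED_ACTIVITY,activityName);

b.putLong(Input.KEY_TIMESTAMP,System.currentTimeMillis());

b.putInt(Input.KEY_TYPE,getType().toInt());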

post(b);

if (_activityRecognitionClient != null && _activityRecognitionClient.isConnected()) {

_activityRecognitionClient.removeActivityUpdates(_pendingIntent);

_activityRecognitionClient.disconnect();

_activityRecognitionClient = null;

}

}

}

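That concludes today's look at examples of the FaceRecognitionDotNet face recognition engine and what face recognition is based on; we hope you found it useful. For more on sharing a .NET face recognition engine (C#), opening the Android camera for face recognition with the ArcSoft face recognition engine, and example source code for com.google.android.gms.location.ActivityRecognitionClient and com.google.android.gms.location.ActivityRecognitionResult, you can search this site.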
