This article covers Elasticsearch terms that contain spaces and answers common questions about how Elasticsearch handles whitespace. It also collects related material: deploying elasticsearch and elasticsearch-head with Docker (including the elasticsearch-head cross-origin problem and the IK analyzer), a Docker deployment walkthrough for ElasticSearch and ElasticSearch-Head, and terms aggregations on nested fields in Elastic Search.

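Contents:

- Elasticsearch terms with spaces (elasticsearch whitespace)

- Deploying elasticsearch + elasticsearch-head with Docker, the elasticsearch-head cross-origin problem, and the IK analyzer

- Deploying ElasticSearch and ElasticSearch-Head with Docker

- Elastic search terms with spaces

- Terms aggregation on nested fields in Elastic Search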

Elasticsearch terms with spaces (elasticsearch whitespace)

I am having trouble implementing autocomplete with Elasticsearch. Here is my setup.

Create the analyzer for autocomplete:

    curl -XPUT http://localhost:9200/autocomplete/ -d '{
      "index": {
        "analysis": {
          "analyzer": {
            "placeNameIndexAnalyzer": {
              "type": "custom",
              "tokenizer": "keyword",
              "filter": ["trim", "lowercase", "asciifolding", "left_ngram"]
            }
          },
          "filter": {
            "left_ngram": {
              "type": "edgeNGram",
              "side": "front",
              "min_gram": 3,
              "max_gram": 12
            }
          }
        }
      }
    }'

Then create a type in the autocomplete index that uses the analyzer on the "alias" property:

    curl -XPUT http://localhost:9200/autocomplete/geo/_mapping/ -d '{
      "geo": {
        "properties": {
          "application_id": { "type": "string" },
          "alias": { "type": "string", "analyzer": "placeNameIndexAnalyzer" },
          "name": { "type": "string" },
          "object_type": { "type": "string" }
        }
      }
    }'

Afterwards, add a document:

    curl -XPOST http://localhost:9200/autocomplete/geo -d '{
      "application_id": "982",
      "name": "Buenos Aires",
      "alias": ["bue", "buenos aires", "bsas", "bs as", "baires"],
      "object_type": "cities"
    }'

When I run the following:

    curl -XGET 'localhost:9200/autocomplete/geo/_search?q=alias:bs%20as'

the result is:

    {
      "took": 2,
      "timed_out": false,
      "_shards": { "total": 5, "successful": 5, "failed": 0 },
      "hits": { "total": 0, "max_score": null, "hits": [] }
    }

and

    curl -XGET 'localhost:9200/autocomplete/geo/_search?q=alias:bs as'
    curl: (52) Empty reply from server

But I should be getting my document back, because its "alias" field contains "bs as".

I tried the _analyze API and got what I think is the correct answer, with the expected tokens:

    curl -XGET 'localhost:9200/autocomplete/_analyze?analyzer=placeNameIndexAnalyzer' -d 'bs as'

The result:

    {
      "tokens": [
        { "token": "bs ", "start_offset": 0, "end_offset": 5, "type": "word", "position": 1 },
        { "token": "bs a", "start_offset": 0, "end_offset": 5, "type": "word", "position": 1 },
        { "token": "bs as", "start_offset": 0, "end_offset": 5, "type": "word", "position": 1 }
      ]
    }

Any hints?

EDIT: when I run analyze with the actual type in the path, I get this:

    curl -XGET 'localhost:9200/autocomplete/geo/_analyze?analyzer=placeNameIndexAnalyzer' -d 'bs as'

The result:

    { "_index": "autocomplete", "_type": "geo", "_id": "_analyze", "exists": false }

Answer 1

The query_string query that is used for the q parameter first parses the query string by splitting it on spaces. You need to replace it with something that preserves the spaces; the match query is a good choice here. I would also use a different analyzer for searching, because there is no need to apply the ngram filter on the search side:

    curl -XPUT http://localhost:9200/autocomplete/ -d '{
      "index": {
        "analysis": {
          "analyzer": {
            "placeNameIndexAnalyzer": {
              "type": "custom",
              "tokenizer": "keyword",
              "filter": ["trim", "lowercase", "asciifolding", "left_ngram"]
            },
            "placeNameSearchAnalyzer": {
              "type": "custom",
              "tokenizer": "keyword",
              "filter": ["trim", "lowercase", "asciifolding"]
            }
          },
          "filter": {
            "left_ngram": {
              "type": "edgeNGram",
              "side": "front",
              "min_gram": 3,
              "max_gram": 12
            }
          }
        }
      }
    }'

    curl -XPUT http://localhost:9200/autocomplete/geo/_mapping/ -d '{
      "geo": {
        "properties": {
          "application_id": { "type": "string" },
          "alias": {
            "type": "string",
            "index_analyzer": "placeNameIndexAnalyzer",
            "search_analyzer": "placeNameSearchAnalyzer"
          },
          "name": { "type": "string" },
          "object_type": { "type": "string" }
        }
      }
    }'

    curl -XPOST "http://localhost:9200/autocomplete/geo?refresh=true" -d '{
      "application_id": "982",
      "name": "Buenos Aires",
      "alias": ["bue", "buenos aires", "bsas", "bs as", "baires"],
      "object_type": "cities"
    }'

    curl -XGET 'localhost:9200/autocomplete/geo/_search' -d '{
      "query": {
        "match": { "alias": "bs as" }
      }
    }'
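To see why the `q=` search finds nothing while the `match` query does, the two analyzers can be modelled in a few lines of Python. This is a rough sketch rather than Elasticsearch code: the function names are made up for illustration, `asciifolding` is only approximated with Unicode decomposition, and the whitespace split is a simplified stand-in for the query_string parsing described in the answer.

```python
import unicodedata


def fold(text):
    """Approximate trim + lowercase + asciifolding (an assumption, not Lucene's tables)."""
    text = text.strip().lower()
    decomposed = unicodedata.normalize("NFKD", text)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def edge_ngrams(token, min_gram=3, max_gram=12):
    """Front edge n-grams; a token shorter than min_gram yields nothing."""
    longest = min(len(token), max_gram)
    return [token[:n] for n in range(min_gram, longest + 1)]


def index_analyze(text):
    """Model of placeNameIndexAnalyzer: keyword tokenizer, then the filter chain."""
    return edge_ngrams(fold(text))


def search_analyze(text):
    """Model of placeNameSearchAnalyzer: the same chain without the n-gram filter."""
    return [fold(text)]


aliases = ["bue", "buenos aires", "bsas", "bs as", "baires"]
indexed = {tok for alias in aliases for tok in index_analyze(alias)}

# query_string style: split on whitespace first, then analyze each piece
# with the index analyzer (the original mapping had no search analyzer).
split_terms = [tok for part in "bs as".split() for tok in index_analyze(part)]

# match style: the whole input goes through the search analyzer as one token.
match_terms = search_analyze("bs as")

print(index_analyze("bs as"))
print(split_terms)
print([term for term in match_terms if term in indexed])
```

Under these assumptions the split pieces `bs` and `as` are shorter than `min_gram`, so they produce no terms at all, while the unsplit `bs as` is exactly one of the indexed edge n-grams.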

docker 部署 elasticsearch + elasticsearch-head + elasticsearch-head 跨域问题 + IK 分词器

0. docker pull 拉取 elasticsearch + elasticsearch-head 镜像

1. 启动 elasticsearch Docker 镜像

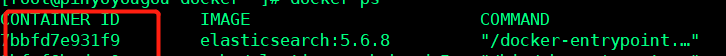

docker run -di --name tensquare_elasticsearch -p 9200:9200 -p 9300:9300 elasticsearch![]()

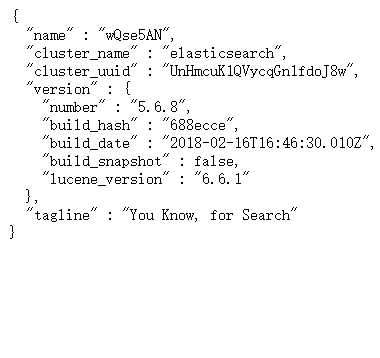

对应 IP:9200 ---- 反馈下边 json 数据,表示启动成功

2. 启动 elasticsearch-head 镜像

docker run -d -p 9100:9100 elasticsearch-head![]()

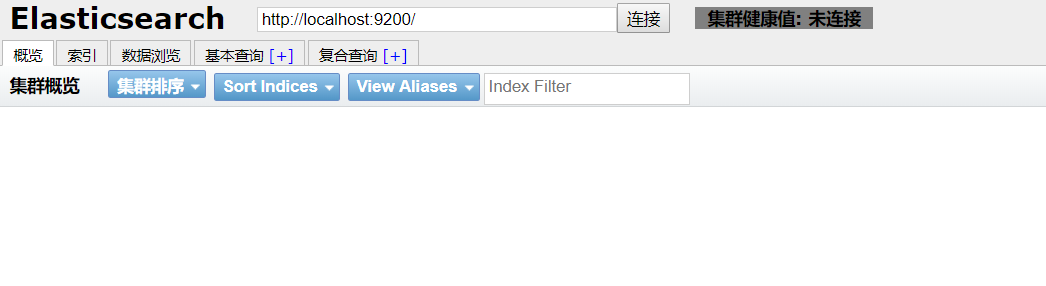

对应 IP:9100 ---- 得到下边页面,即启动成功

3. Fix the cross-origin problem

If the elasticsearch-head page shows a grey "not connected" status, the browser is blocking the cross-origin request.

3.1 Run docker ps to get the CONTAINER ID of elasticsearch

3.2 Enter the container:

    docker exec -it <elasticsearch CONTAINER ID> /bin/bash

3.3 cd ./config

3.4 Append the CORS settings to elasticsearch.yml:

    echo "
    http.cors.enabled: true
    http.cors.allow-origin: '*'" >> elasticsearch.yml

4. 重启 elasticsearch

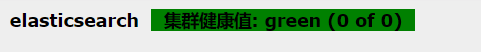

docker restart elasticsearch的CONTAINER ID重新进入 IP:9100 进入 elasticsearch-head, 出现绿色标注,配置成功 !
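Appending with `echo ... >> elasticsearch.yml` adds the two settings again every time the step is repeated. The Python sketch below shows one possible idempotent variant, for illustration only: `with_cors` is a hypothetical helper, and it works on the file content as a string so it can be tried without a running container.

```python
CORS_SETTINGS = {
    "http.cors.enabled": "true",
    "http.cors.allow-origin": "'*'",
}


def with_cors(yml_text):
    """Return yml_text with the CORS settings appended only if they are missing."""
    present = {
        line.split(":", 1)[0].strip()
        for line in yml_text.splitlines()
        if ":" in line and not line.lstrip().startswith("#")
    }
    missing = [f"{key}: {value}" for key, value in CORS_SETTINGS.items()
               if key not in present]
    if not missing:
        return yml_text
    # Make sure the appended settings start on a new line.
    separator = "" if yml_text.endswith("\n") or not yml_text else "\n"
    return yml_text + separator + "\n".join(missing) + "\n"


original = "network.host: 0.0.0.0\n"
updated = with_cors(original)
print(updated)
```

Calling `with_cors(updated)` a second time returns the text unchanged, so the step can be repeated safely.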

5. Install the IK analyzer

From the directory that contains the ik folder, copy that folder into /usr/share/elasticsearch/plugins inside the container. Note: the IK analyzer version must match the elasticsearch version.

    docker cp ik <elasticsearch CONTAINER ID>:/usr/share/elasticsearch/plugins

Restart elasticsearch:

    docker restart <elasticsearch CONTAINER ID>

Before adding the IK analyzer: http://IP:9200/_analyze?analyzer=chinese&pretty=true&text=我爱中国

After adding the IK analyzer: http://IP:9200/_analyze?analyzer=ik_smart&pretty=true&text=我爱中国
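The two test URLs contain Chinese text, which has to be percent-encoded when the request is sent from a script rather than typed into a browser. A minimal sketch using only the Python standard library; `analyze_url` is a hypothetical helper, and `IP` is the same placeholder the article uses:

```python
from urllib.parse import urlencode


def analyze_url(host, analyzer, text):
    """Build the _analyze URL shown above, percent-encoding the text as UTF-8."""
    query = urlencode({"analyzer": analyzer, "pretty": "true", "text": text})
    return f"http://{host}:9200/_analyze?{query}"


print(analyze_url("IP", "chinese", "我爱中国"))
print(analyze_url("IP", "ik_smart", "我爱中国"))
```

This reproduces the query-parameter form used in the article; whether a given Elasticsearch version still accepts that form is worth checking against the documentation for that version.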

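Deploying ElasticSearch and ElasticSearch-Head with Docker

This post explains how to deploy ElasticSearch 6.8.4 with Docker, from pulling the image to running ElasticSearch, and how to install ElasticSearch-Head, a small tool for managing ElasticSearch. The search on this blog's home page is implemented with ElasticSearch. ElasticSearch moves so fast that SpringData-ElasticSearch cannot keep up with it: I first downloaded an 8.x version, which made SpringData-ElasticSearch throw errors, so I settled on 6.8.4 and am recording the steps here.

1. Deploying ElasticSearch 6.8.4 with Docker

1.1 Pull the image

    docker pull docker.elastic.co/elasticsearch/elasticsearch:6.8.4

1.2 Run the container

ElasticSearch listens on port 9200 by default. Mapping port 9200 on the host to port 9200 in the container makes the ElasticSearch service inside the container reachable. The container is named es.

    docker run -d --name es -p 9200:9200 -p 9300:9300 -e "discovery.type=single-node" -e ES_JAVA_OPTS="-Xms256m -Xmx256m" docker.elastic.co/elasticsearch/elasticsearch:6.8.4

Notes:

-e discovery.type=single-node : start as a single node

-e ES_JAVA_OPTS="-Xms256m -Xmx256m" : sets the initial (-Xms) and maximum (-Xmx) JVM heap size for ES. This really should be set, otherwise the container can run out of memory, as it did on my own underpowered server.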
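The heap flags are easy to mistype, so it can help to assemble the command programmatically. The sketch below is illustrative only: `es_run_command` is a hypothetical helper that rebuilds the `docker run` line from step 1.2 with the initial (`-Xms`) and maximum (`-Xmx`) heap set to the same value.

```python
import shlex


def es_run_command(heap="256m", name="es", version="6.8.4"):
    """Return the docker run argv for a single-node Elasticsearch container."""
    image = f"docker.elastic.co/elasticsearch/elasticsearch:{version}"
    return [
        "docker", "run", "-d", "--name", name,
        "-p", "9200:9200", "-p", "9300:9300",
        "-e", "discovery.type=single-node",
        # -Xms is the initial heap size, -Xmx the maximum; neither flag takes "=".
        "-e", f"ES_JAVA_OPTS=-Xms{heap} -Xmx{heap}",
        image,
    ]


# shlex.join quotes the argument that contains a space.
print(shlex.join(es_run_command()))
```

Passing the list to `subprocess.run` instead of printing it would avoid shell quoting altogether.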

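1.3 Insufficient memory

On CentOS, after pulling elasticsearch and editing the configuration, I ran the docker command and found that the container did not start. Running it again without -d printed the following error:

    Java HotSpot(TM) 64-Bit Server VM warning: INFO: os::commit_memory(0x0000000085330000, 2060255232, 0) failed; error='Cannot allocate memory' (errno=12)

After some searching, the cause turned out to be that elasticsearch 6.0 was configured with a 2g JVM heap by default, and the machine did not have enough memory to allocate it.

The fix is to change the JVM heap allocation.

Run:

    find /var/lib/docker/overlay/ -name jvm.options

to locate the jvm.options file. Open it with vi and change -Xms2g -Xmx2g to -Xms512m -Xmx512m.

Save and exit. Run the command that creates and starts elasticsearch again, and it starts successfully.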

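2. Deploying ElasticSearch-Head with Docker

2.1 Pull the image

    docker pull mobz/elasticsearch-head:5

2.2 Create the container

    docker create --name elasticsearch-head -p 9100:9100 mobz/elasticsearch-head:5

2.3 Start the container

    docker start elasticsearch-head

2.4 Open a browser at http://IP:9100

It cannot connect because of a cross-origin problem: the front end and back end are served separately, so ES has to be configured to allow the requests.

2.5 Enter the es container started earlier (container name = es)

    docker exec -it es /bin/bash

2.6 Edit elasticsearch.yml

    vi config/elasticsearch.yml

Add:

    http.cors.enabled: true
    http.cors.allow-origin: "*"

This is the same kind of YAML file that Spring Boot uses; the two lines add cross-origin (CORS) support.

2.7 Exit the container and restart it

    exit
    docker restart es

2.8 Visit http://localhost:9100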

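Summary:

This post briefly covered how to install ElasticSearch with Docker and the pitfalls along the way, including insufficient memory and a version that is too new, as well as installing ElasticSearch-Head and configuring cross-origin access. The next post will cover installing a Chinese analyzer for ElasticSearch.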

Terms aggregation on nested fields in Elastic Search

I have the following mapping for a field in Elasticsearch (defined in YML):

    my_analyzer:
        type: custom
        tokenizer: keyword
        filter: lowercase

    products_filter:
        type: "nested"
        properties:
            filter_name: {"type" : "string", analyzer: "my_analyzer"}
            filter_value: {"type" : "string" , analyzer: "my_analyzer"}

Each document has many filters, which look like this:

    "products_filter": [
      {"filter_name": "Rahmengröße", "filter_value": "33,5 cm"},
      {"filter_name": "color", "filter_value": "gelb"},
      {"filter_name": "Rahmengröße", "filter_value": "39,5 cm"},
      {"filter_name": "Rahmengröße", "filter_value": "45,5 cm"}
    ]

I am trying to get a list of the unique filter names, together with a list of the unique filter values for each filter.

That is, I would like to get a structure like this:

    Rahmengröße:
        39,5 cm
        45,5 cm
        33,5 cm
    color:
        gelb

To get it, I tried several variations of aggregations, for example:

    {
      "aggs": {
        "bla": {
          "terms": { "field": "products_filter.filter_name" },
          "aggs": {
            "bla2": {
              "terms": { "field": "products_filter.filter_value" }
            }
          }
        }
      }
    }

This request is wrong.

It returns a list of the unique filter names, but each of them contains a list of all filter_values:

    "bla": {
      "doc_count_error_upper_bound": 0,
      "sum_other_doc_count": 103,
      "buckets": [
        {
          "key": "color",
          "doc_count": 9,
          "bla2": {
            "doc_count_error_upper_bound": 4,
            "sum_other_doc_count": 366,
            "buckets": [
              {"key": "100", "doc_count": 5},
              {"key": "cm", "doc_count": 5},
              {"key": "unisex", "doc_count": 5},
              {"key": "11", "doc_count": 4},
              {"key": "160", "doc_count": 4},
              {"key": "22", "doc_count": 4},
              {"key": "a", "doc_count": 4},
              {"key": "alu", "doc_count": 4},
              {"key": "aluminium", "doc_count": 4},
              {"key": "aus", "doc_count": 4}
            ]
          }
        },

I also tried the reverse nested aggregation, but it did not help.

So I think there is a logical error in my attempt?

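Answer 1

As I said, your problem is that your text is analyzed, and Elasticsearch always aggregates at the token level. To solve this, the field values must be indexed as single tokens. There are two options:

- do not analyze them

- index them with the keyword analyzer plus lowercase (for case-insensitive aggs)

So this would be the setup: a custom keyword analyzer with a lowercase filter and accent removal (ö => o and ß => ss), plus additional sub-fields on your fields (raw and keyword) so that they can be used for aggregations: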

    PUT /test
    {
      "settings": {
        "analysis": {
          "analyzer": {
            "my_analyzer_keyword": {
              "type": "custom",
              "tokenizer": "keyword",
              "filter": ["asciifolding", "lowercase"]
            }
          }
        }
      },
      "mappings": {
        "data": {
          "properties": {
            "products_filter": {
              "type": "nested",
              "properties": {
                "filter_name": {
                  "type": "string",
                  "analyzer": "standard",
                  "fields": {
                    "raw": { "type": "string", "index": "not_analyzed" },
                    "keyword": { "type": "string", "analyzer": "my_analyzer_keyword" }
                  }
                },
                "filter_value": {
                  "type": "string",
                  "analyzer": "standard",
                  "fields": {
                    "raw": { "type": "string", "index": "not_analyzed" },
                    "keyword": { "type": "string", "analyzer": "my_analyzer_keyword" }
                  }
                }
              }
            }
          }
        }
      }
    }

The test document you gave us:

    PUT /test/data/1
    {
      "products_filter": [
        { "filter_name": "Rahmengröße", "filter_value": "33,5 cm" },
        { "filter_name": "color", "filter_value": "gelb" },
        { "filter_name": "Rahmengröße", "filter_value": "39,5 cm" },
        { "filter_name": "Rahmengröße", "filter_value": "45,5 cm" }
      ]
    }

This is the query that aggregates on the raw fields:

    GET /test/_search
    {
      "size": 0,
      "aggs": {
        "Nesting": {
          "nested": { "path": "products_filter" },
          "aggs": {
            "raw_names": {
              "terms": { "field": "products_filter.filter_name.raw", "size": 0 },
              "aggs": {
                "raw_values": {
                  "terms": { "field": "products_filter.filter_value.raw", "size": 0 }
                }
              }
            }
          }
        }
      }
    }

It does return the expected result (buckets with the filter names, and sub-buckets with their values):

    {
      "took": 1,
      "timed_out": false,
      "_shards": { "total": 5, "successful": 5, "failed": 0 },
      "hits": { "total": 1, "max_score": 0, "hits": [] },
      "aggregations": {
        "Nesting": {
          "doc_count": 4,
          "raw_names": {
            "doc_count_error_upper_bound": 0,
            "sum_other_doc_count": 0,
            "buckets": [
              {
                "key": "Rahmengröße",
                "doc_count": 3,
                "raw_values": {
                  "doc_count_error_upper_bound": 0,
                  "sum_other_doc_count": 0,
                  "buckets": [
                    { "key": "33,5 cm", "doc_count": 1 },
                    { "key": "39,5 cm", "doc_count": 1 },
                    { "key": "45,5 cm", "doc_count": 1 }
                  ]
                }
              },
              {
                "key": "color",
                "doc_count": 1,
                "raw_values": {
                  "doc_count_error_upper_bound": 0,
                  "sum_other_doc_count": 0,
                  "buckets": [
                    { "key": "gelb", "doc_count": 1 }
                  ]
                }
              }
            ]
          }
        }
      }
    }

Alternatively, you can use the sub-field with the keyword analyzer (and some normalization) to get a more generic, case-insensitive result:

    GET /test/_search
    {
      "size": 0,
      "aggs": {
        "Nesting": {
          "nested": { "path": "products_filter" },
          "aggs": {
            "keyword_names": {
              "terms": { "field": "products_filter.filter_name.keyword", "size": 0 },
              "aggs": {
                "keyword_values": {
                  "terms": { "field": "products_filter.filter_value.keyword", "size": 0 }
                }
              }
            }
          }
        }
      }
    }

The result is:

    {
      "took": 1,
      "timed_out": false,
      "_shards": { "total": 5, "successful": 5, "failed": 0 },
      "hits": { "total": 1, "max_score": 0, "hits": [] },
      "aggregations": {
        "Nesting": {
          "doc_count": 4,
          "keyword_names": {
            "doc_count_error_upper_bound": 0,
            "sum_other_doc_count": 0,
            "buckets": [
              {
                "key": "rahmengrosse",
                "doc_count": 3,
                "keyword_values": {
                  "doc_count_error_upper_bound": 0,
                  "sum_other_doc_count": 0,
                  "buckets": [
                    { "key": "33,5 cm", "doc_count": 1 },
                    { "key": "39,5 cm", "doc_count": 1 },
                    { "key": "45,5 cm", "doc_count": 1 }
                  ]
                }
              },
              {
                "key": "color",
                "doc_count": 1,
                "keyword_values": {
                  "doc_count_error_upper_bound": 0,
                  "sum_other_doc_count": 0,
                  "buckets": [
                    { "key": "gelb", "doc_count": 1 }
                  ]
                }
              }
            ]
          }
        }
      }
    }
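The grouping that the nested aggregation performs, and the effect of the normalization, can be modelled outside Elasticsearch. The following Python sketch is only an illustration under stated assumptions: `nested_terms` is a hypothetical helper that mimics a `nested` aggregation with a `terms` sub-aggregation, and `normalize` approximates the `asciifolding` and `lowercase` filters with `casefold` and Unicode decomposition rather than Lucene's real folding tables.

```python
import unicodedata
from collections import Counter, defaultdict

products_filter = [
    {"filter_name": "Rahmengröße", "filter_value": "33,5 cm"},
    {"filter_name": "color", "filter_value": "gelb"},
    {"filter_name": "Rahmengröße", "filter_value": "39,5 cm"},
    {"filter_name": "Rahmengröße", "filter_value": "45,5 cm"},
]


def normalize(value):
    """Rough stand-in for asciifolding + lowercase (ö -> o, ß -> ss)."""
    decomposed = unicodedata.normalize("NFKD", value.casefold())
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def nested_terms(nested_docs, key=lambda value: value):
    """Count whole values per name, one nested object at a time."""
    buckets = defaultdict(Counter)
    for obj in nested_docs:
        buckets[key(obj["filter_name"])][key(obj["filter_value"])] += 1
    return {name: dict(values) for name, values in buckets.items()}


raw = nested_terms(products_filter)                     # like the .raw sub-fields
keyword = nested_terms(products_filter, key=normalize)  # like the .keyword sub-fields
print(raw)
print(keyword)
```

Because each nested object is handled on its own and each value is kept as a single key, a name is only ever paired with the values that were stored alongside it, which is the structure the question asked for.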
