This article covers hive_exception_01 in detail and discusses the (unresolved) error FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTas.... It also collects notes on Encountered IOException running create table job: : org.apache.hadoop.hive.conf.HiveConf; ERROR hive.HiveConfig: Could not load org.apache.hadoop.hive.conf.HiveConf. Make sure HIVE_CONF_D...; Error, return code 1 from org.apache.hadoop.hive.ql.exec.spark.SparkTask; and Error:Execution failed for task ':app:dexDebug'. Hopefully it is useful to you.

Contents:

- hive_exception_01 (unresolved) FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTas...

- Encountered IOException running create table job: : org.apache.hadoop.hive.conf.HiveConf

- ERROR hive.HiveConfig: Could not load org.apache.hadoop.hive.conf.HiveConf. Make sure HIVE_CONF_D...

- Error, return code 1 from org.apache.hadoop.hive.ql.exec.spark.SparkTask

- Error:Execution failed for task ':app:dexDebug'. >

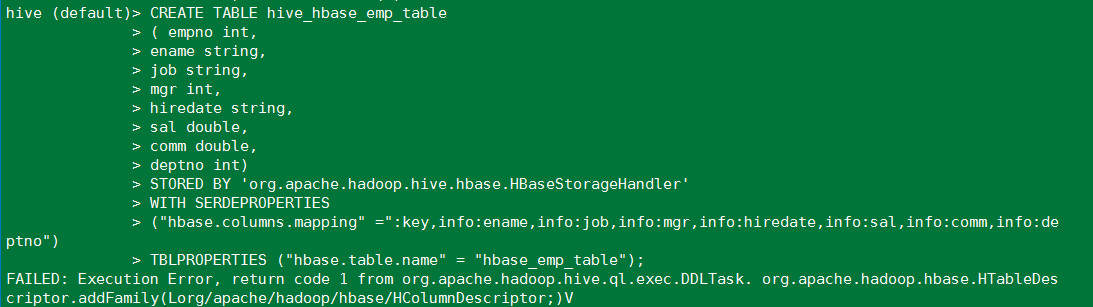

hive_exception_01 (unresolved) FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTas...

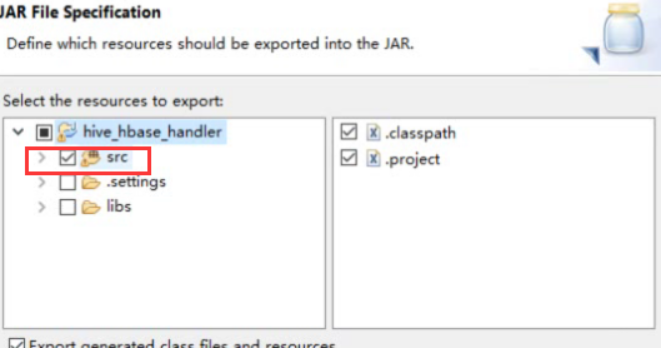

1. If the following error appears, the source code has to be recompiled

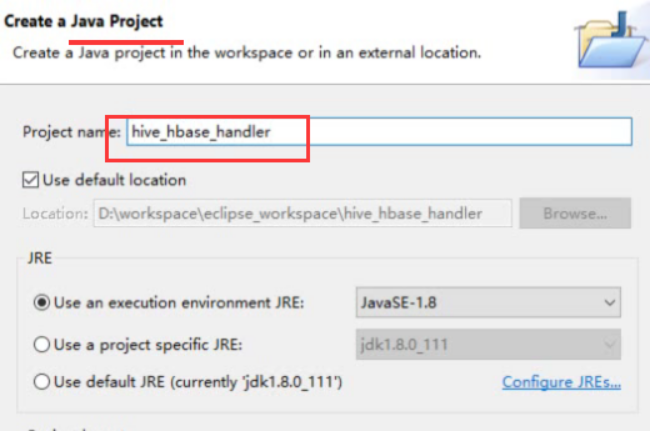

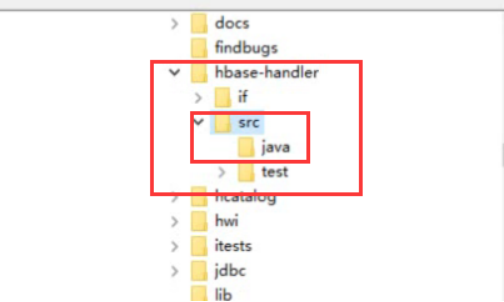

The hive-hbase-handler source needs to be recompiled.

The steps are as follows:

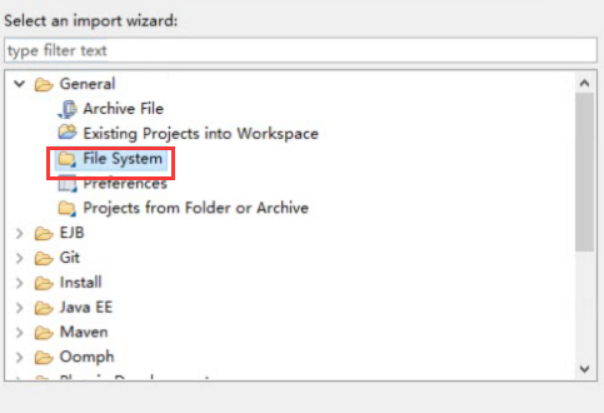

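Prepare the jars:

Import all the jars under HBase's lib directory and all the jars under Hive's lib directory into the same project.

Remember to remove anything that is not a jar, such as pom files, because those tend to cause errors.

Once the project no longer reports errors, export it as a jar.

Then delete hive-hbase-handler-1.2.2.jar from Hive's lib directory.

Put the jar you compiled yourself in its place, and you are done.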
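The jar-handling steps above can be sketched as two small shell helpers. This is only a sketch under stated assumptions, not the original author's procedure: the function names, the `handler-backup` directory, and every path are hypothetical, the compile step itself is not covered, and the old jar is moved to a backup directory instead of being deleted outright so the change can be undone.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; adjust all paths to your own installation.

# Copy only *.jar files from each source lib directory into one folder,
# which leaves non-jar files such as pom files behind.
collect_jars() {
  local dest="$1"; shift
  mkdir -p "$dest"
  local dir
  for dir in "$@"; do
    find "$dir" -maxdepth 1 -type f -name '*.jar' -exec cp {} "$dest"/ \;
  done
}

# Move the stock hive-hbase-handler jar(s) out of Hive's lib directory,
# then copy the rebuilt jar in.
replace_handler_jar() {
  local hive_lib="$1" new_jar="$2"
  local backup_dir="${hive_lib}/../handler-backup"
  mkdir -p "$backup_dir"
  local old
  for old in "$hive_lib"/hive-hbase-handler-*.jar; do
    if [ -e "$old" ]; then
      mv "$old" "$backup_dir"/
    fi
  done
  cp "$new_jar" "$hive_lib"/
}

# Example calls (paths are assumptions):
#   collect_jars ./build-libs "$HBASE_HOME/lib" "$HIVE_HOME/lib"
#   replace_handler_jar "$HIVE_HOME/lib" ./my-hive-hbase-handler.jar
```

Restart the Hive CLI or HiveServer2 afterwards so the new jar is picked up.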

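2. Once an HBase table and a Hive table are linked, how do you delete them cleanly?

One mistake: I deleted the HBase table first and then found that the Hive managed table could no longer be queried; trying to drop that table then raised an error.

Workaround: exit the shell and start it again.

If the Hive table is an external table, it can simply be dropped.

Conclusion: when deleting tables like these, drop the Hive table first, then the HBase table.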

3. Version numbers

describe 'table_name'

alter 'table_name', {NAME => 'column_family', VERSIONS => 3}

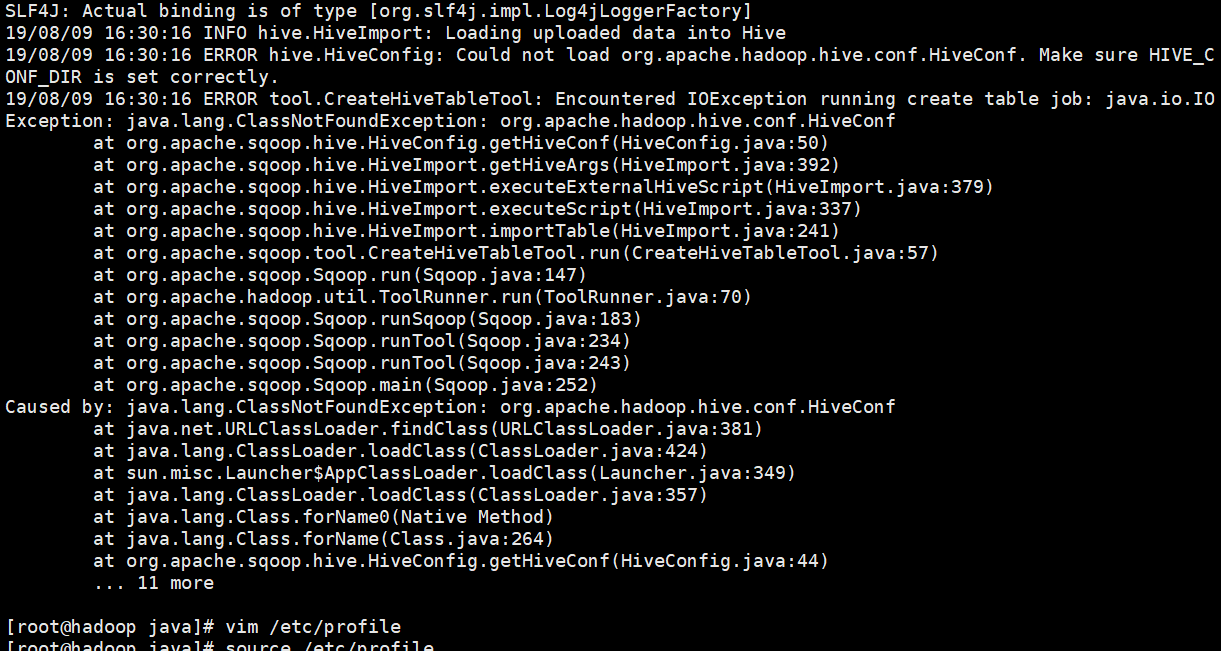

Encountered IOException running create table job: : org.apache.hadoop.hive.conf.HiveConf

19/08/09 16:30:16 ERROR hive.HiveConfig: Could not load org.apache.hadoop.hive.conf.HiveConf. Make sure HIVE_CONF_DIR is set correctly.

19/08/09 16:30:16 ERROR tool.CreateHiveTableTool: Encountered IOException running create table job: java.io.IOException: java.lang.ClassNotFoundException: org.apache.hadoop.hive.conf.HiveConf

at org.apache.sqoop.hive.HiveConfig.getHiveConf(HiveConfig.java:50)

at org.apache.sqoop.hive.HiveImport.getHiveArgs(HiveImport.java:392)

at org.apache.sqoop.hive.HiveImport.executeExternalHiveScript(HiveImport.java:379)

at org.apache.sqoop.hive.HiveImport.executeScript(HiveImport.java:337)

at org.apache.sqoop.hive.HiveImport.importTable(HiveImport.java:241)

at org.apache.sqoop.tool.CreateHiveTableTool.run(CreateHiveTableTool.java:57)

at org.apache.sqoop.Sqoop.run(Sqoop.java:147)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)

at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.hive.conf.HiveConf

This happens when using sqoop to create a Hive table with the same structure as a MySQL table:

sqoop create-hive-table --connect jdbc:mysql://10.136.198.112:3306/questions --username root --password root --table user --hive-table hhive

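The command fails with the error shown above.

Fix: add an environment variable.

Append export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$HIVE_HOME/lib/* to the end of /etc/profile

and reload it with source /etc/profile

Running the sqoop command again now succeeds, but no table is created in Hive.

Fix: copy the hive-site.xml configuration file from hive/conf into sqoop/conf and run the command again. This time it succeeds

and the table has been created in Hive.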
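The two fixes above can be sketched as shell helpers. This is a minimal sketch with hypothetical function names; it assumes the standard conf directories under the Hive and Sqoop install directories, and it takes the profile file as a parameter so it can be tried on a scratch file before touching /etc/profile (which normally needs root).

```shell
#!/usr/bin/env bash
# Hypothetical helpers; function names and arguments are illustrative only.

# Append the HADOOP_CLASSPATH export to a profile file, but only once,
# so repeated runs do not duplicate the entry.
add_hive_to_hadoop_classpath() {
  local profile="$1"
  # Single quotes keep the variables unexpanded in the profile file.
  local line='export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$HIVE_HOME/lib/*'
  grep -qxF "$line" "$profile" 2>/dev/null || printf '%s\n' "$line" >> "$profile"
}

# Copy hive-site.xml into Sqoop's conf directory so Sqoop uses the same
# metastore settings as Hive.
copy_hive_site() {
  local hive_home="$1" sqoop_home="$2"
  cp "$hive_home/conf/hive-site.xml" "$sqoop_home/conf/"
}

# Example calls (assumes HIVE_HOME and SQOOP_HOME are set):
#   add_hive_to_hadoop_classpath /etc/profile && source /etc/profile
#   copy_hive_site "$HIVE_HOME" "$SQOOP_HOME"
```

Without hive-site.xml on its side, Sqoop presumably falls back to default metastore settings, which would explain a job that reports success while the table never shows up in the real Hive metastore.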

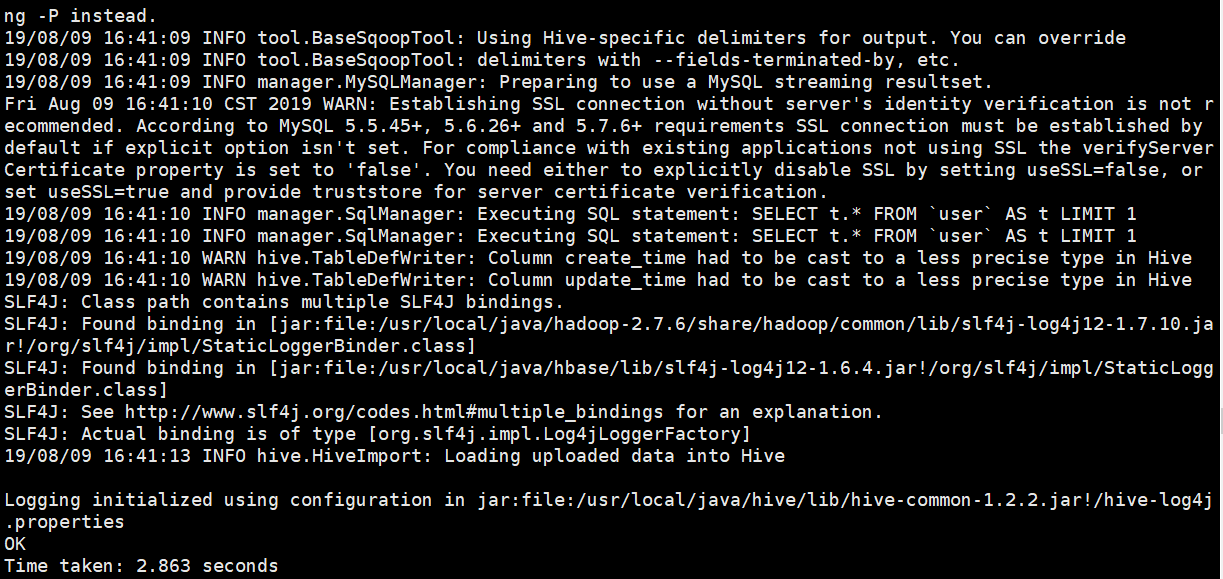

ERROR hive.HiveConfig: Could not load org.apache.hadoop.hive.conf.HiveConf. Make sure HIVE_CONF_D...

When using Sqoop to import data from a MySQL table into Hive, the error above appears.

Fix:

Append export HADOOP_CLASSPATH=$HADOOP_CLASSPATH:$HIVE_HOME/lib/* to the end of /etc/profile, then reload the configuration with source /etc/profile

Error, return code 1 from org.apache.hadoop.hive.ql.exec.spark.SparkTask

.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$2.run(ApplicationMaster.scala:542)

17/01/20 09:39:23 ERROR client.RemoteDriver: Shutting down remote driver due to error: java.lang.InterruptedException

java.lang.InterruptedException

at java.lang.Object.wait(Native Method)

at org.apache.spark.scheduler.TaskSchedulerImpl.waitBackendReady(TaskSchedulerImpl.scala:623)

at org.apache.spark.scheduler.TaskSchedulerImpl.postStartHook(TaskSchedulerImpl.scala:170)

at org.apache.spark.scheduler.cluster.YarnClusterScheduler.postStartHook(YarnClusterScheduler.scala:33)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:595)

at org.apache.spark.api.java.JavaSparkContext.<init>(JavaSparkContext.scala:59)

at org.apache.hive.spark.client.RemoteDriver.<init>(RemoteDriver.java:169)

at org.apache.hive.spark.client.RemoteDriver.main(RemoteDriver.java:556)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.spark.deploy.yarn.ApplicationMaster$$anon$2.run(ApplicationMaster.scala:542)

17/01/20 09:39:23 INFO yarn.ApplicationMaster: Unregistering ApplicationMaster with FAILED (diag message: Uncaught exception: org.apache.hadoop.yarn.exceptions.InvalidResourceRequestException: Invalid resource request, requested virtual cores < 0, or requested virtual cores > max configured, requestedVirtualCores=4, maxVirtualCores=2

at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.validateResourceRequest(SchedulerUtils.java:258)

at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.normalizeAndValidateRequest(SchedulerUtils.java:226)

at org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerUtils.normalizeAndvalidateRequest(SchedulerUtils.java:233)

at org.apache.hadoop.yarn.server.resourcemanager.RMServerUtils.normalizeAndValidateRequests(RMServerUtils.java:97)

at org.apache.hadoop.yarn.server.resourcemanager.ApplicationMasterService.allocate(ApplicationMasterService.java:504)

at org.apache.hadoop.yarn.api.impl.pb.service.ApplicationMasterProtocolPBServiceImpl.allocate(ApplicationMasterProtocolPBServiceImpl.java:60)

at org.apache.hadoop.yarn.proto.ApplicationMasterProtocol$ApplicationMasterProtocolService$2.callBlockingMethod(ApplicationMasterProtocol.java:99)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2086)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2082)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2080)

)

17/01/20 09:39:23 INFO impl.AMRMClientImpl: Waiting for application to be successfully unregistered.

17/01/20 09:39:24 INFO yarn.ApplicationMaster: Deleting staging directory .sparkStaging/application_1484288256809_0021

17/01/20 09:39:24 INFO storage.DiskBlockManager: Shutdown hook called

17/01/20 09:39:24 INFO util.ShutdownHookManager: Shutdown hook called

17/01/20 09:39:24 INFO util.ShutdownHookManager: Deleting directory /yarn/nm/usercache/anonymous/appcache/application_1484288256809_0021/spark-3f3ac5b0-5a46-48d7-929b-81b7820c9e81/userFiles-af94b1af-604f-4423-b1e4-0384e372c1f8

17/01/20 09:39:24 INFO util.ShutdownHookManager: Deleting directory /yarn/nm/usercache/anonymous/appcache/application_1484288256809_0021/spark-3f3ac5b0-5a46-48d7-929b-81b7820c9e81
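The original post gives no fix for this error, but the diagnostic message in the log is specific: a container request asked for 4 virtual cores while the YARN scheduler allows at most 2. As a small sketch (the function name and the log file name are hypothetical), the two numbers can be pulled out of a saved log like this:

```shell
#!/usr/bin/env bash
# Print "<requested> <max>" for every InvalidResourceRequestException
# vcores message found on stdin. Hypothetical helper name.
vcores_from_log() {
  sed -n 's/.*requestedVirtualCores=\([0-9][0-9]*\), maxVirtualCores=\([0-9][0-9]*\).*/\1 \2/p'
}

# Example call (yarn-app.log is an assumed file name):
#   vcores_from_log < yarn-app.log
```

When the requested value exceeds the maximum, the usual options are to lower the per-executor core request (for Hive on Spark, the `spark.executor.cores` setting) or to raise `yarn.scheduler.maximum-allocation-vcores` on the cluster. Which of the two applies here is an assumption, since the post does not say how the problem was resolved.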

Error:Execution failed for task ':app:dexDebug'. >

Error:Execution failed for task ':app:dexDebug'. > com.android.ide.common.process.ProcessException: org.gradle.process.internal.ExecException: Process 'command 'C:\Program Files\Java\jdk1.7.0_79\bin\java.exe'' finished with non-zero exit value 2

This build error usually means that there is an extra (duplicated) jar or that a jar is missing. Read the Messages panel carefully to find out which.
