最近很多小伙伴都在问influxdb-1.7.8和centos7)部署这两个问题,那么本篇文章就来给大家详细解答一下,同时本文还将给你拓展2.InfluxDB-InfluxQL基础语法教程--目录、4

最近很多小伙伴都在问influxdb-1.7.8和centos 7) 部署这两个问题,那么本篇文章就来给大家详细解答一下,同时本文还将给你拓展2.InfluxDB-InfluxQL基础语法教程--目录、4.InfluxDB-InfluxQL基础语法教程--基本select语句、5.InfluxDB-InfluxQL基础语法教程--WHERE子句、6.InfluxDB-InfluxQL基础语法教程--GROUP BY子句等相关知识,下面开始了哦!

本文目录一览:- influxdb-1.7.8(centos 7) 部署(influxdb安装部署linux)

- 2.InfluxDB-InfluxQL基础语法教程--目录

- 4.InfluxDB-InfluxQL基础语法教程--基本select语句

- 5.InfluxDB-InfluxQL基础语法教程--WHERE子句

- 6.InfluxDB-InfluxQL基础语法教程--GROUP BY子句

influxdb-1.7.8(centos 7) 部署(influxdb安装部署linux)

1、官方下载:

https://dl.influxdata.com/influxdb/releases/influxdb-1.7.8.x86_64.rpm

2、安装软件

sudo yum localinstall influxdb-1.7.8.x86_64.rpm

3、环境准备

mkdir -p /data/influxdb/data

chmod -R 777 /data

4、包文件路径

/usr/bin下

- influxd influxdb服务器

- influx influxdb命令行客户端

- influx_inspect 查看工具

- influx_stress 压力测试工具

- influx_tsm 数据库转换工具(将数据库从b1或bz1格式转换为tsm1格式)

/var/lib/influxdb/下

- data 存放最终存储的数据,文件以.tsm结尾

- meta 存放数据库元数据

- wal 存放预写日志文件

/var/log/influxdb下

- influxd.log 日志文件

/etc/influxdb下

- influxdb.conf 配置文件

/var/run/influxdb/

- influxd.pid PID文件

5、备份配置文件

mv /etc/influxdb/influxdb.conf /etc/influxdb/influxdb.conf.default

6、更新配置文件

vim /etc/influxdb/influxdb.conf

### Welcome to the InfluxDB configuration file.

# The values in this file override the default values used by the system if

# a config option is not specified. The commented out lines are the configuration

# field and the default value used. Uncommenting a line and changing the value

# will change the value used at runtime when the process is restarted.

# Once every 24 hours InfluxDB will report usage data to usage.influxdata.com

# The data includes a random ID, os, arch, version, the number of series and other

# usage data. No data from user databases is ever transmitted.

# Change this option to true to disable reporting.

# reporting-disabled = false

# Bind address to use for the RPC service for backup and restore.

# bind-address = "127.0.0.1:8088"

###

### [meta]

###

### Controls the parameters for the Raft consensus group that stores metadata

### about the InfluxDB cluster.

###

[meta]

# Where the metadata/raft database is stored

dir = "/data/influxdb/data/meta"

# Automatically create a default retention policy when creating a database.

retention-autocreate = true

# If log messages are printed for the meta service

# logging-enabled = true

###

### [data]

###

### Controls where the actual shard data for InfluxDB lives and how it is

### flushed from the WAL. "dir" may need to be changed to a suitable place

### for your system, but the WAL settings are an advanced configuration. The

### defaults should work for most systems.

###

[data]

# The directory where the TSM storage engine stores TSM files.

dir = "/data/influxdb/data/data"

# The directory where the TSM storage engine stores WAL files.

wal-dir = "/data/influxdb/data/wal"

# The amount of time that a write will wait before fsyncing. A duration

# greater than 0 can be used to batch up multiple fsync calls. This is useful for slower

# disks or when WAL write contention is seen. A value of 0s fsyncs every write to the WAL.

# Values in the range of 0-100ms are recommended for non-SSD disks.

wal-fsync-delay = "0s"

# The type of shard index to use for new shards. The default is an in-memory index that is

# recreated at startup. A value of "tsi1" will use a disk based index that supports higher

# cardinality datasets.

# index-version = "inmem"

index-version = "tsi1"

# Trace logging provides more verbose output around the tsm engine. Turning

# this on can provide more useful output for debugging tsm engine issues.

# trace-logging-enabled = false

# Whether queries should be logged before execution. Very useful for troubleshooting, but will

# log any sensitive data contained within a query.

# query-log-enabled = true

# Validates incoming writes to ensure keys only have valid unicode characters.

# This setting will incur a small overhead because every key must be checked.

# validate-keys = false

# Settings for the TSM engine

# CacheMaxMemorySize is the maximum size a shard''s cache can

# reach before it starts rejecting writes.

# Valid size suffixes are k, m, or g (case insensitive, 1024 = 1k).

# Values without a size suffix are in bytes.

# cache-max-memory-size = "1g"

# CacheSnapshotMemorySize is the size at which the engine will

# snapshot the cache and write it to a TSM file, freeing up memory

# Valid size suffixes are k, m, or g (case insensitive, 1024 = 1k).

# Values without a size suffix are in bytes.

# cache-snapshot-memory-size = "25m"

# CacheSnapshotWriteColdDuration is the length of time at

# which the engine will snapshot the cache and write it to

# a new TSM file if the shard hasn''t received writes or deletes

# cache-snapshot-write-cold-duration = "10m"

# CompactFullWriteColdDuration is the duration at which the engine

# will compact all TSM files in a shard if it hasn''t received a

# write or delete

# compact-full-write-cold-duration = "4h"

# The maximum number of concurrent full and level compactions that can run at one time. A

# value of 0 results in 50% of runtime.GOMAXPROCS(0) used at runtime. Any number greater

# than 0 limits compactions to that value. This setting does not apply

# to cache snapshotting.

# max-concurrent-compactions = 0

# CompactThroughput is the rate limit in bytes per second that we

# will allow TSM compactions to write to disk. Note that short bursts are allowed

# to happen at a possibly larger value, set by CompactThroughputBurst

# compact-throughput = "48m"

# CompactThroughputBurst is the rate limit in bytes per second that we

# will allow TSM compactions to write to disk.

# compact-throughput-burst = "48m"

# If true, then the mmap advise value MADV_WILLNEED will be provided to the kernel with respect to

# TSM files. This setting has been found to be problematic on some kernels, and defaults to off.

# It might help users who have slow disks in some cases.

# tsm-use-madv-willneed = false

# Settings for the inmem index

# The maximum series allowed per database before writes are dropped. This limit can prevent

# high cardinality issues at the database level. This limit can be disabled by setting it to

# 0.

# max-series-per-database = 1000000

# The maximum number of tag values per tag that are allowed before writes are dropped. This limit

# can prevent high cardinality tag values from being written to a measurement. This limit can be

# disabled by setting it to 0.

# max-values-per-tag = 100000

# Settings for the tsi1 index

# The threshold, in bytes, when an index write-ahead log file will compact

# into an index file. Lower sizes will cause log files to be compacted more

# quickly and result in lower heap usage at the expense of write throughput.

# Higher sizes will be compacted less frequently, store more series in-memory,

# and provide higher write throughput.

# Valid size suffixes are k, m, or g (case insensitive, 1024 = 1k).

# Values without a size suffix are in bytes.

max-index-log-file-size = "1m"

# The size of the internal cache used in the TSI index to store previously

# calculated series results. Cached results will be returned quickly from the cache rather

# than needing to be recalculated when a subsequent query with a matching tag key/value

# predicate is executed. Setting this value to 0 will disable the cache, which may

# lead to query performance issues.

# This value should only be increased if it is known that the set of regularly used

# tag key/value predicates across all measurements for a database is larger than 100. An

# increase in cache size may lead to an increase in heap usage.

series-id-set-cache-size = 100

###

### [coordinator]

###

### Controls the clustering service configuration.

###

[coordinator]

# The default time a write request will wait until a "timeout" error is returned to the caller.

# write-timeout = "10s"

# The maximum number of concurrent queries allowed to be executing at one time. If a query is

# executed and exceeds this limit, an error is returned to the caller. This limit can be disabled

# by setting it to 0.

# max-concurrent-queries = 0

# The maximum time a query will is allowed to execute before being killed by the system. This limit

# can help prevent run away queries. Setting the value to 0 disables the limit.

query-timeout = "60s"

# The time threshold when a query will be logged as a slow query. This limit can be set to help

# discover slow or resource intensive queries. Setting the value to 0 disables the slow query logging.

log-queries-after = "10s"

# The maximum number of points a SELECT can process. A value of 0 will make

# the maximum point count unlimited. This will only be checked every second so queries will not

# be aborted immediately when hitting the limit.

# max-select-point = 0

# The maximum number of series a SELECT can run. A value of 0 will make the maximum series

# count unlimited.

# max-select-series = 0

# The maxium number of group by time bucket a SELECT can create. A value of zero will max the maximum

# number of buckets unlimited.

# max-select-buckets = 0

###

### [retention]

###

### Controls the enforcement of retention policies for evicting old data.

###

[retention]

# Determines whether retention policy enforcement enabled.

# enabled = true

# The interval of time when retention policy enforcement checks run.

# check-interval = "30m"

###

### [shard-precreation]

###

### Controls the precreation of shards, so they are available before data arrives.

### Only shards that, after creation, will have both a start- and end-time in the

### future, will ever be created. Shards are never precreated that would be wholly

### or partially in the past.

[shard-precreation]

# Determines whether shard pre-creation service is enabled.

# enabled = true

# The interval of time when the check to pre-create new shards runs.

# check-interval = "10m"

# The default period ahead of the endtime of a shard group that its successor

# group is created.

# advance-period = "30m"

###

### Controls the system self-monitoring, statistics and diagnostics.

###

### The internal database for monitoring data is created automatically if

### if it does not already exist. The target retention within this database

### is called ''monitor'' and is also created with a retention period of 7 days

### and a replication factor of 1, if it does not exist. In all cases the

### this retention policy is configured as the default for the database.

[monitor]

# Whether to record statistics internally.

# store-enabled = true

# The destination database for recorded statistics

# store-database = "_internal"

# The interval at which to record statistics

# store-interval = "10s"

###

### [http]

###

### Controls how the HTTP endpoints are configured. These are the primary

### mechanism for getting data into and out of InfluxDB.

###

[http]

# Determines whether HTTP endpoint is enabled.

# enabled = true

# Determines whether the Flux query endpoint is enabled.

# flux-enabled = false

# Determines whether the Flux query logging is enabled.

# flux-log-enabled = false

# The bind address used by the HTTP service.

# bind-address = ":8086"

# Determines whether user authentication is enabled over HTTP/HTTPS.

# auth-enabled = false

# The default realm sent back when issuing a basic auth challenge.

# realm = "InfluxDB"

# Determines whether HTTP request logging is enabled.

# log-enabled = true

# Determines whether the HTTP write request logs should be suppressed when the log is enabled.

# suppress-write-log = false

# When HTTP request logging is enabled, this option specifies the path where

# log entries should be written. If unspecified, the default is to write to stderr, which

# intermingles HTTP logs with internal InfluxDB logging.

#

# If influxd is unable to access the specified path, it will log an error and fall back to writing

# the request log to stderr.

# access-log-path = ""

# Filters which requests should be logged. Each filter is of the pattern NNN, NNX, or NXX where N is

# a number and X is a wildcard for any number. To filter all 5xx responses, use the string 5xx.

# If multiple filters are used, then only one has to match. The default is to have no filters which

# will cause every request to be printed.

# access-log-status-filters = []

# Determines whether detailed write logging is enabled.

# write-tracing = false

# Determines whether the pprof endpoint is enabled. This endpoint is used for

# troubleshooting and monitoring.

# pprof-enabled = true

# Enables a pprof endpoint that binds to localhost:6060 immediately on startup.

# This is only needed to debug startup issues.

# debug-pprof-enabled = false

# Determines whether HTTPS is enabled.

# https-enabled = false

# The SSL certificate to use when HTTPS is enabled.

# https-certificate = "/etc/ssl/influxdb.pem"

# Use a separate private key location.

# https-private-key = ""

# The JWT auth shared secret to validate requests using JSON web tokens.

# shared-secret = ""

# The default chunk size for result sets that should be chunked.

# max-row-limit = 0

# The maximum number of HTTP connections that may be open at once. New connections that

# would exceed this limit are dropped. Setting this value to 0 disables the limit.

# max-connection-limit = 0

# Enable http service over unix domain socket

# unix-socket-enabled = false

# The path of the unix domain socket.

# bind-socket = "/var/run/influxdb.sock"

# The maximum size of a client request body, in bytes. Setting this value to 0 disables the limit.

# max-body-size = 25000000

# The maximum number of writes processed concurrently.

# Setting this to 0 disables the limit.

# max-concurrent-write-limit = 0

# The maximum number of writes queued for processing.

# Setting this to 0 disables the limit.

# max-enqueued-write-limit = 0

# The maximum duration for a write to wait in the queue to be processed.

# Setting this to 0 or setting max-concurrent-write-limit to 0 disables the limit.

# enqueued-write-timeout = 0

###

### [logging]

###

### Controls how the logger emits logs to the output.

###

[logging]

# Determines which log encoder to use for logs. Available options

# are auto, logfmt, and json. auto will use a more a more user-friendly

# output format if the output terminal is a TTY, but the format is not as

# easily machine-readable. When the output is a non-TTY, auto will use

# logfmt.

# format = "auto"

# Determines which level of logs will be emitted. The available levels

# are error, warn, info, and debug. Logs that are equal to or above the

# specified level will be emitted.

# level = "info"

# Suppresses the logo output that is printed when the program is started.

# The logo is always suppressed if STDOUT is not a TTY.

# suppress-logo = false

###

### [subscriber]

###

### Controls the subscriptions, which can be used to fork a copy of all data

### received by the InfluxDB host.

###

[subscriber]

# Determines whether the subscriber service is enabled.

# enabled = true

# The default timeout for HTTP writes to subscribers.

# http-timeout = "30s"

# Allows insecure HTTPS connections to subscribers. This is useful when testing with self-

# signed certificates.

# insecure-skip-verify = false

# The path to the PEM encoded CA certs file. If the empty string, the default system certs will be used

# ca-certs = ""

# The number of writer goroutines processing the write channel.

# write-concurrency = 40

# The number of in-flight writes buffered in the write channel.

# write-buffer-size = 1000

###

### [[graphite]]

###

### Controls one or many listeners for Graphite data.

###

[[graphite]]

# Determines whether the graphite endpoint is enabled.

# enabled = false

# database = "graphite"

# retention-policy = ""

# bind-address = ":2003"

# protocol = "tcp"

# consistency-level = "one"

# These next lines control how batching works. You should have this enabled

# otherwise you could get dropped metrics or poor performance. Batching

# will buffer points in memory if you have many coming in.

# Flush if this many points get buffered

# batch-size = 5000

# number of batches that may be pending in memory

# batch-pending = 10

# Flush at least this often even if we haven''t hit buffer limit

# batch-timeout = "1s"

# UDP Read buffer size, 0 means OS default. UDP listener will fail if set above OS max.

# udp-read-buffer = 0

### This string joins multiple matching ''measurement'' values providing more control over the final measurement name.

# separator = "."

### Default tags that will be added to all metrics. These can be overridden at the template level

### or by tags extracted from metric

# tags = ["region=us-east", "zone=1c"]

### Each template line requires a template pattern. It can have an optional

### filter before the template and separated by spaces. It can also have optional extra

### tags following the template. Multiple tags should be separated by commas and no spaces

### similar to the line protocol format. There can be only one default template.

# templates = [

# "*.app env.service.resource.measurement",

# # Default template

# "server.*",

# ]

###

### [collectd]

###

### Controls one or many listeners for collectd data.

###

[[collectd]]

# enabled = false

# bind-address = ":25826"

# database = "collectd"

# retention-policy = ""

#

# The collectd service supports either scanning a directory for multiple types

# db files, or specifying a single db file.

# typesdb = "/usr/local/share/collectd"

#

# security-level = "none"

# auth-file = "/etc/collectd/auth_file"

# These next lines control how batching works. You should have this enabled

# otherwise you could get dropped metrics or poor performance. Batching

# will buffer points in memory if you have many coming in.

# Flush if this many points get buffered

# batch-size = 5000

# Number of batches that may be pending in memory

# batch-pending = 10

# Flush at least this often even if we haven''t hit buffer limit

# batch-timeout = "10s"

# UDP Read buffer size, 0 means OS default. UDP listener will fail if set above OS max.

# read-buffer = 0

# Multi-value plugins can be handled two ways.

# "split" will parse and store the multi-value plugin data into separate measurements

# "join" will parse and store the multi-value plugin as a single multi-value measurement.

# "split" is the default behavior for backward compatability with previous versions of influxdb.

# parse-multivalue-plugin = "split"

###

### [opentsdb]

###

### Controls one or many listeners for OpenTSDB data.

###

[[opentsdb]]

# enabled = false

# bind-address = ":4242"

# database = "opentsdb"

# retention-policy = ""

# consistency-level = "one"

# tls-enabled = false

# certificate= "/etc/ssl/influxdb.pem"

# Log an error for every malformed point.

# log-point-errors = true

# These next lines control how batching works. You should have this enabled

# otherwise you could get dropped metrics or poor performance. Only points

# metrics received over the telnet protocol undergo batching.

# Flush if this many points get buffered

# batch-size = 1000

# Number of batches that may be pending in memory

# batch-pending = 5

# Flush at least this often even if we haven''t hit buffer limit

# batch-timeout = "1s"

###

### [[udp]]

###

### Controls the listeners for InfluxDB line protocol data via UDP.

###

[[udp]]

# enabled = false

# bind-address = ":8089"

# database = "udp"

# retention-policy = ""

# InfluxDB precision for timestamps on received points ("" or "n", "u", "ms", "s", "m", "h")

# precision = ""

# These next lines control how batching works. You should have this enabled

# otherwise you could get dropped metrics or poor performance. Batching

# will buffer points in memory if you have many coming in.

# Flush if this many points get buffered

# batch-size = 5000

# Number of batches that may be pending in memory

# batch-pending = 10

# Will flush at least this often even if we haven''t hit buffer limit

# batch-timeout = "1s"

# UDP Read buffer size, 0 means OS default. UDP listener will fail if set above OS max.

# read-buffer = 0

###

### [continuous_queries]

###

### Controls how continuous queries are run within InfluxDB.

###

[continuous_queries]

# Determines whether the continuous query service is enabled.

# enabled = true

# Controls whether queries are logged when executed by the CQ service.

# log-enabled = true

# Controls whether queries are logged to the self-monitoring data store.

# query-stats-enabled = false

# interval for how often continuous queries will be checked if they need to run

# run-interval = "1s"

###

### [tls]

###

### Global configuration settings for TLS in InfluxDB.

###

[tls]

# Determines the available set of cipher suites. See https://golang.org/pkg/crypto/tls/#pkg-constants

# for a list of available ciphers, which depends on the version of Go (use the query

# SHOW DIAGNOSTICS to see the version of Go used to build InfluxDB). If not specified, uses

# the default settings from Go''s crypto/tls package.

# ciphers = [

# "TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305",

# "TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256",

# ]

# Minimum version of the tls protocol that will be negotiated. If not specified, uses the

# default settings from Go''s crypto/tls package.

# min-version = "tls1.2"

# Maximum version of the tls protocol that will be negotiated. If not specified, uses the

# default settings from Go''s crypto/tls package.

# max-version = "tls1.2"7、rsyslog配置

cat >/etc/rsyslog.d/influxd.conf <<EOF

:programname, contains, "influxd" /var/log/influxdb/influxd.log

:programname, contains, "influxd" ~

EOFsystemctl restart rsyslog

- rsyslog配置说明

举例

Mar 10 14:47:04 vm-hp LogHeader[8261]: Hello, Logger支持的模板属性变量输出如下

msg: Hello, Logger,

rawmsg: <175>Mar 10 15:52:49 LogHeader[13845]: Hello, Logger,

HOSTNAME: vm-28-234-pro01-hp,

FROMHOST: vm-28-234-pro01-hp,

syslogtag: LogHeader[13845]:,

programname: LogHeader,

PRI: 175,

PRI-text: local5.debug,

IUT: 1,

syslogfacility: 21,

syslogfacility-text: local5,

syslogseverity: 7,

syslogseverity-text: debug,

timereported: Mar 10 15:52:49,

TIMESTAMP: Mar 10 15:52:49,

timegenerated: Mar 10 15:52:49,

PROTOCOL-VERSION: 0,

STRUCTURED-DATA: -,

APP-NAME: LogHeader,

PROCID: 13845,

MSGID: -参考:https://aicode.cc/shi-yongrsyslog-guan-li-ri-zhi.html

8、启动服务

启动服务 systemctl start influxd

停止服务 systemctl stop influxd

重启服务 systemctl restart influxd

2.InfluxDB-InfluxQL基础语法教程--目录

本文翻译自官网,官方文档地址:(https://docs.influxdata.com/influxdb/v1.7/query_language/data_exploration/)

<kbd>InfluxQL</kbd>是用于在InfluxDB中进行数据探索的类似于SQL的查询语法。下面将详细介绍在InfluxDB中使用select语句的情形。

接下来介绍的语法如下面的表格所示。

| The Basics | Configure Query Results | General Tips on Query Syntax |

|---|---|---|

| The SELECT statement | ORDER BY time DESC | Time Syntax |

| The WHERE clause | The LIMIT and SLIMIT clauses | Regular Expressions |

| The GROUP BY clause | The OFFSET and SOFFSET clauses | Data types and cast operations |

| The INTO clause | The Time Zone clause | Merge behavior |

| Multiple statements | ||

| Subqueries |

目录

- InfluxDB-InfluxQL基础语法教程--数据说明

- InfluxDB-InfluxQL基础语法教程--基本select语句

- InfluxDB-InfluxQL基础语法教程--WHERE子句

- InfluxDB-InfluxQL基础语法教程--GROUP BY子句

- InfluxDB-InfluxQL基础语法教程--INTO子句

- InfluxDB-InfluxQL基础语法教程--ORDER BY子句

- InfluxDB-InfluxQL基础语法教程--LIMIT and SLIMIT 子句

- InfluxDB-InfluxQL基础语法教程--OFFSET 和SOFFSET子句

4.InfluxDB-InfluxQL基础语法教程--基本select语句

本文翻译自官网,官网地址:(https://docs.influxdata.com/influxdb/v1.7/query_language/data_exploration/)

基本语法如下:

SELECT <field_key>[,<field_key>,<tag_key>] FROM <measurement_name>[,<measurement_name>]

可见,select语句是由SELECT子句和FROM子句组成的。

一、SELECT子句

在SELECT字句中,有如下几种形式,分别用于查询各种指定的数据:

| 语法 | 意思 |

|---|---|

| SELECT * | 查询measurement中所有的fields和 tags。示例sql:<kbd>select * from h2o_feet;</kbd> |

| SELECT "<field_key>" | 查询指定的一个field。示例sql:<kbd>select water_level from h2o_feet;</kbd> |

| SELECT "<field_key>","<field_key>" | 查询多个field。示例sql:<kbd>select "level description", "water_level" from h2o_feet;</kbd> |

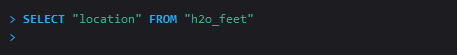

| SELECT "<field_key>","<tag_key>" | 查询指定的field和tag。示例sql:<kbd>select water_level,location from h2o_feet;</kbd> 注:在SELECT子句中,如果包含了tag,那么此时就必须指定至少一个field。比如如下的sql就是错误的,因为它只select了一个tag,而没有field:<kbd>select location from h2o_feet;</kbd> |

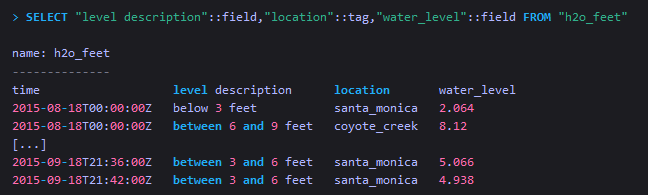

| SELECT "<field_key>"::field,"<tag_key>"::tag | 跟上面一样,也是查询指定的field和tag。 ::[field | tag]语法用来指定标识符的类型,因为有时候tag和field有可能同名,因此用 ::[field | tag]语法来加以区分。 |

在SELECT子句中,还包含数学运算、聚合函数、基本的类型转换、正则表达式等。

二、FROM子句

FROM子句用于指定要查询的measurement,支持的语法如下:

| 语法 | 意思 |

|---|---|

| FROM <measurement_name> | 从指定measurement中查询数据。这种方式会从当前DB、默认retention policy的measurement中查询数据。 |

| FROM <measurement_name>,<measurement_name> | 从多个measurement中查询数据 |

| FROM <database_name>.<retention_policy_name>.<measurement_name> | 从指定DB、指定retention policy的measurement中查询数据 |

| FROM <database_name>..<measurement_name> | 从指定DB、默认retention policy的measurement 中查询数据 |

FROM子句中还支持正则表达式。

关于引号

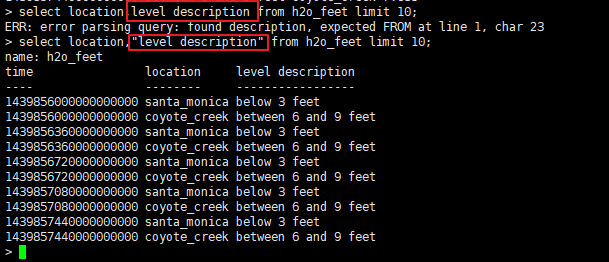

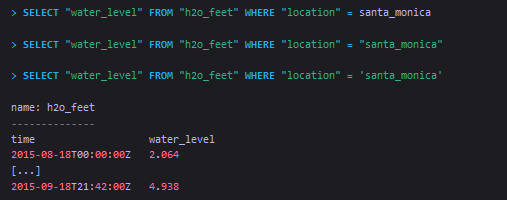

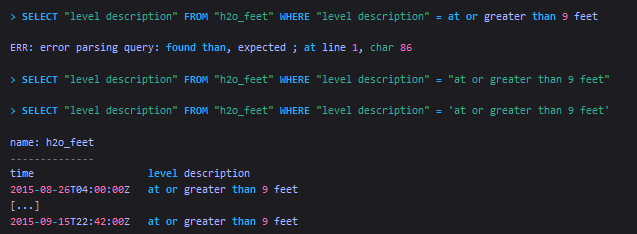

如果measurement、tag、field等的标识符除了[A-z,0-9,_]之外,还有其他字符,或者标识符是keyword关键字,那么在引用的时候必须加上双引号。比如在表 h2o_feet 中,"level description"就是一个带有空格的field,如此一来在查询到的时候,就必须加上双引号了。如下图,在查询level description时若不加双引号,则会报错。

<font color=DarkRed size=4>官方推荐,虽然有些标识符不是必须使用双引号,但是推荐对所有标识符使用双引号!</font>

示例sql

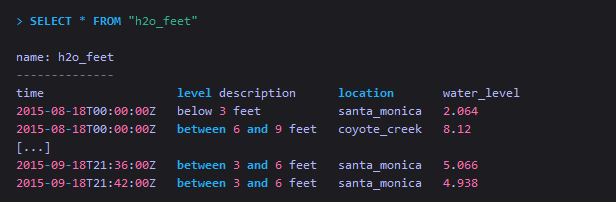

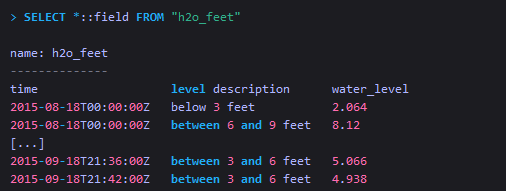

-

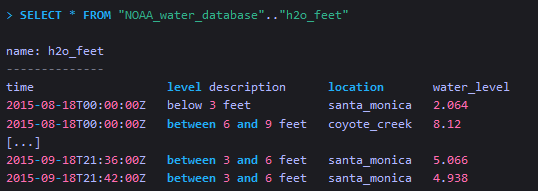

从单个measurement中查询该measurement所有的tag和field

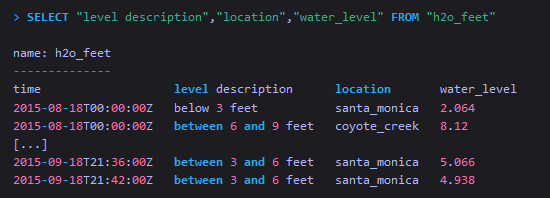

-

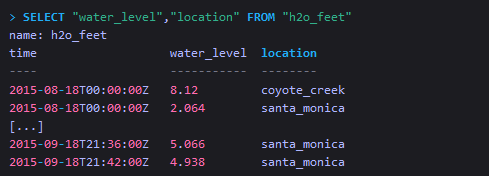

从单个measurement中查询指定的tag和field

-

从单个measurement中查询指定的tag和field,并指定它们的标识类型

这种方式一般使用较少。 -

从measurement中查询所有的field

The SELECT clause supports combining the * syntax with the :: syntax. -

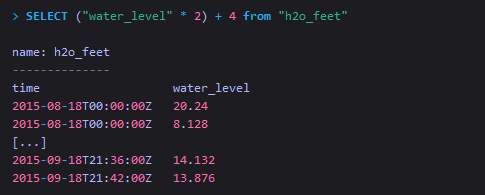

在查询时进行基本的数学运算

InfluxDB遵循标准的四则运算规则。更多操作详见Mathematical Operators。 -

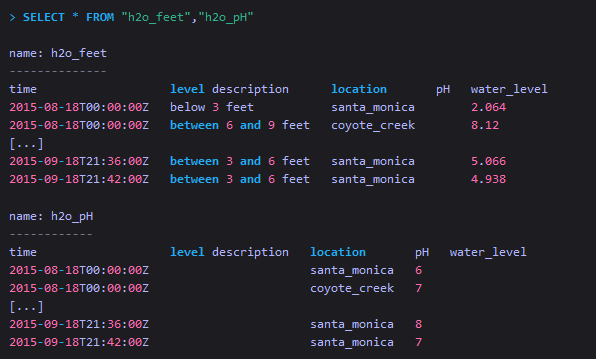

同时从多个measurement中查询它们的所有数据

-

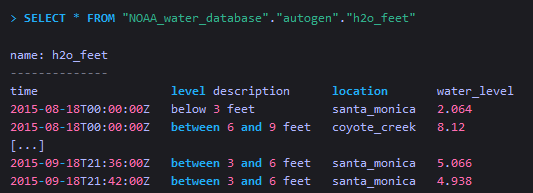

从一个全路径的measurement中查询数据

可见,所谓的全路径,其实就是指在FROM子句中,指定了measurement所在的DB,以及要查询数据所在的retention policy。 -

查询指定数据库中的measurement的数据

The query selects data in the NOAA_water_database, the DEFAULT retention policy, and the h2o_feet measurement. The .. indicates the DEFAULT retention policy for the specified database.

关于SELECT语句的常见疑问

在SELECT 子句中,必须要有至少一个field key!如果在SELECT子句中只有一个或多个tag key,那么该查询会返回空。这是由InfluxDB底层存储数据的方式所导致的结果。

示例:

上面的查询结果返回为空,是因为在它的SELECT子句中,只查询了location这个tag key。

如果想要查询跟location这个tag key有关的任何数据,则在SELECT字句中必须至少要包含一个field key,如下:

5.InfluxDB-InfluxQL基础语法教程--WHERE子句

本文翻译自官网,官网地址:(https://docs.influxdata.com/influxdb/v1.7/query_language/data_exploration/)

WHERE子句

语法:

SELECT_clause FROM_clause WHERE <conditional_expression> [(AND|OR) <conditional_expression> [...]]

注:在WHERE子句中,支持在fields, tags, and timestamps上进行条件表达式的运算。

注:在InfluxDB的WHERE子句中,不支持使用 OR 来指定不同的time区间,如下面的sql将会返回空:

SELECT * FROM "absolutismus" WHERE time = ''2016-07-31T20:07:00Z'' OR time = ''2016-07-31T23:07:17Z''

Fields

语法如下:

field_key <operator> [''string'' | boolean | float | integer]

在WHERE子句中,支持对string, boolean, float 和 integer类型的field values进行比较。

注意 :<font color=Red size=3>在WHERE子句中,如果是string类型的field value,一定要用单引号括起来。如果不适用引号括起来,或者使用的是双引号,将不会返回任何数据,有时甚至都不报错! </font>

WHERE支持的运算符如下:

| Operator | Meaning |

|---|---|

| = | equal to |

| <> | not equal to |

| != | not equal to |

| > | greater than |

| >= | greater than or equal to |

| < | less than |

| <= | less than or equal to |

支持的更多运算符详见: Arithmetic Operations, Regular Expressions

Tags

语法如下:

tag_key <operator> [''tag_value'']

<font color=Red size=3>对于在WHERE子句中的tag values,也要用单引号括起来。如果不用引号括起来,或者使用双引号,则查询不会返回任务数据。甚至不会报错。</font>

Tag支持的运算符如下:

| Operator | Meaning |

|---|---|

| = | equal to |

| <> | not equal to |

| != | not equal to |

还支持正则运算: Regular Expressions

Timestamps

对于大部分的SELECT 语句来说,默认的时间区间是1677-09-21 00:12:43.145224194 到 2262-04-11T23:47:16.854775806Z UTC. 对于有GROUP BY time() 的SELECT 语句,默认的时间区间是1677-09-21 00:12:43.145224194 UTC 到 now()。

在 Time Syntax 小节将会介绍如何在WHERE子句中指定时间 区间。

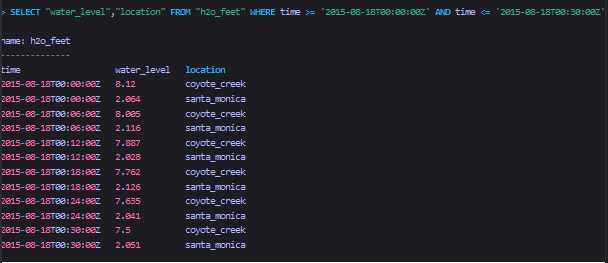

WHERE示例sql

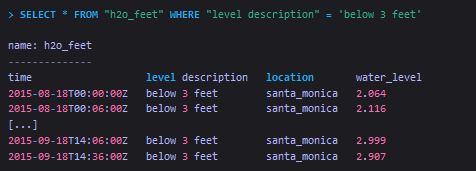

-

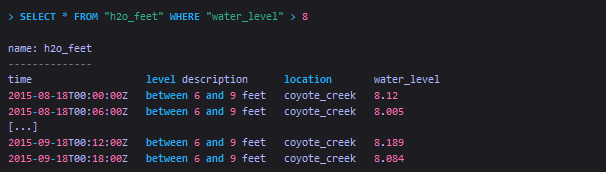

Select data that have specific field key-values

-

Select data that have a specific string field key-value

InfluxQL requires single quotes around string field values in the WHERE clause.

InfluxQL requires single quotes around string field values in the WHERE clause. -

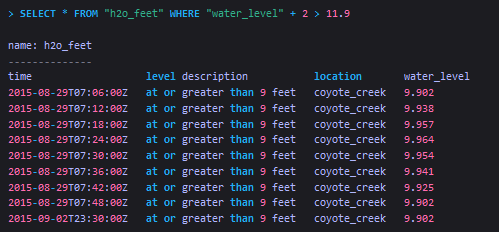

Select data that have a specific field key-value and perform basic arithmetic

-

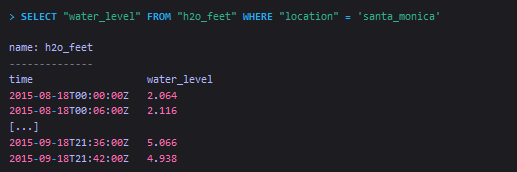

Select data that have a specific tag key-value

InfluxQL requires single quotes around tag values in the WHERE clause.

InfluxQL requires single quotes around tag values in the WHERE clause. -

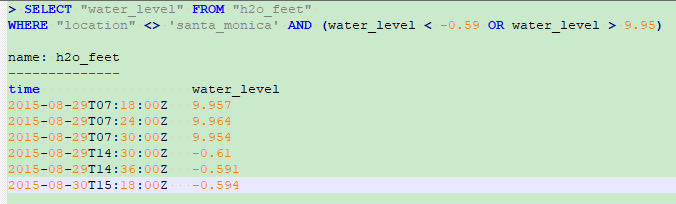

Select data that have specific field key-values and tag key-values

The WHERE clause supports the operators AND and OR, and supports separating logic with parentheses.

The WHERE clause supports the operators AND and OR, and supports separating logic with parentheses.

- Select data that have specific timestamps

该sql将查询h2o_feet中在7天以内的所有数据

关于WHERE语句的常见疑问

问题 :where子句查询意外地未返回任何数据。

答 :通常情况,出现该问题是因为在WHERE子句中没有对tag values或string类型的field values使用单引号括起来的缘故。对于WHERE子句中的tag values或string类型的field values,如果没有用引号括起来,或者是用的双引号,这种时候,查询不会返回任何结果,有时甚至也不会报错。

在下面的示例sql中,对tag value的引号使用做说明。第一个sql没有对tag value使用引号,第二个sql对tag value使用了双引号,第三个sql则对tag value使用了单引号。可以看到,第一和第二个sql都没有返回任何查询结果,而第三个sql返回了预期中的结果。

在下面的sql对string类型的field value的引号情况做说明,其中field value为“at or greater than 9 feet”。第一个sql没有对field value使用引号,第二个sql对field value使用了双引号,第三个sql则对field value使用了单引号。可以看到,第一个sql报错了,因为field valus中包含了空格。第二个sql虽然没报错,但是查询结果为空。第三个sql返回了预期中的结果。

6.InfluxDB-InfluxQL基础语法教程--GROUP BY子句

本文翻译自官网,官网地址:(https://docs.influxdata.com/influxdb/v1.7/query_language/data_exploration/)

GROUP BY子句通过用户自己制定的tags set或time区间,来将查询结果进行分组。

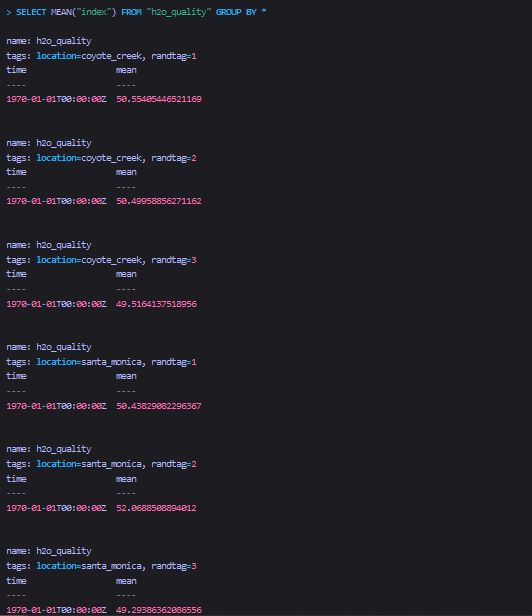

一、GROUP BY tags

GROUP BY <tag> 通过用户指定的tag set,来对查询结果进行分组。

语法:

SELECT_clause FROM_clause [WHERE_clause]

GROUP BY [* | <tag_key>[,<tag_key]]

| GROUP BY子句 | 意义 |

|---|---|

| GROUP BY * | 使用所有tag对查询结果进行分组 |

| GROUP BY <tag_key> | 使用指定tag对查询结果进行分组 |

| GROUP BY <tag_key>,<tag_key> | 使用指定的多个tag对查询结果进行分组,其中tag之间的顺序是无关的。 |

注 :如果在sql中同时存在WHERE子句和GROUP BY子句,则GROUP BY子句一定要在WHERE子句之后!

Other supported features: Regular Expressions

GROUP BY tags 示例sql

-

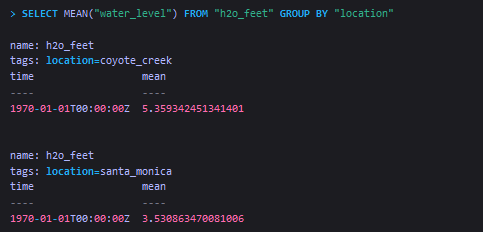

Group query results by a single tag

上面的sql使用了MEAN函数,来对h2o_feet这个measurement中的location这个tag进行分组求平均值。

上面的sql使用了MEAN函数,来对h2o_feet这个measurement中的location这个tag进行分组求平均值。

注:在InfluxDB中,<font color=Red size=2>0纪元1970-01-01T00:00:00Z</font>这个时间经常被用来表示timestamp的NULL值。如果你的查询中没有显示指定返回一个timestamp,比如上面在调用聚合函数时,就没有指定时间区间,因此InfluxDB最后返回0纪元来作为timestamp。 -

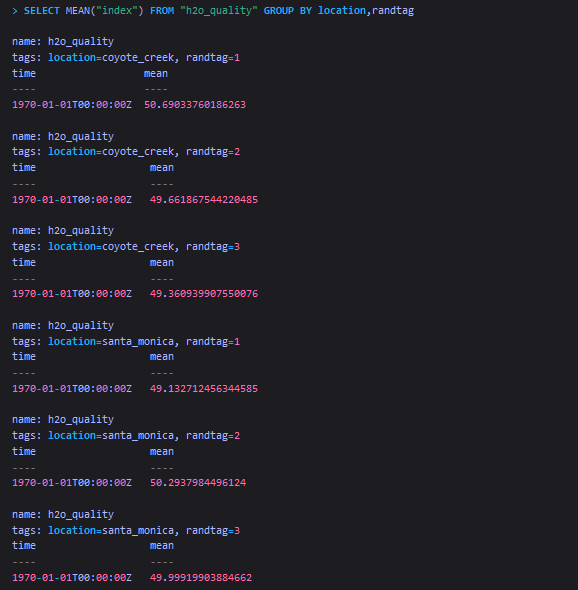

Group query results by more than one tag

-

Group query results by all tags

二、基础GROUP BY time intervals

GROUP BY time() 查询会将查询结果按照用户指定的时间区间来进行分组。

语法:

SELECT <function>(<field_key>) FROM_clause

WHERE <time_range>

GROUP BY time(<time_interval>),[tag_key] [fill(<fill_option>)]

基本的 GROUP BY time() 查询用法需要在SELECT子句中调用相关函数,并且在WHERE子句中调用time时间区间。

-

time(time_interval)

在GROUP BY time()子句中的time_interval是个连续的时间区间,该时间区间决定了InfluxDB如何通过时间来对查询结果进行分组。比如,如果time_interval为5m,那么它会将查询结果分为5分钟一组(如果在WHERE子句中指定了time区间,那么就是将WHERE中指定的time区间划分为没5分钟一组)。 -

fill(<fill_option>)

fill(<fill_option>) 是可选的。它可以填充那些没有数据的时间区间的值。 从 [GROUP BY time intervals and fill() ] (https://docs.influxdata.com/influxdb/v1.7/query_language/data_exploration/#group-by-time-intervals-and-fill) 部分可查看到关于这部分的更多信息。注:基本的GROUP BY time()查询通过当前InfluxDB数据库的预设时间边界来确定每个时间间隔中包含的原始数据和查询返回的时间戳。

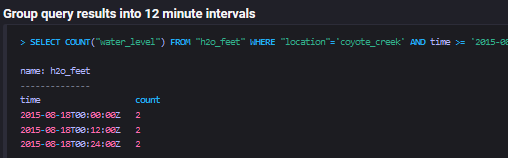

基本用法示例sql

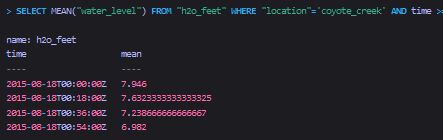

先看一个WHERE查询

下面的GROUP BY time(time_interval)示例是在上面的sql基础上进行改进的,sql为:

SELECT COUNT("water_level") FROM "h2o_feet"

WHERE "location"=''coyote_creek''

AND time >= ''2015-08-18T00:00:00Z''

AND time <= ''2015-08-18T00:30:00Z''

GROUP BY time(12m)

查询结果:

该sql将h2o_feet表中tag=“coyote_creek”,且在''2015-08-18T00:00:00Z''和''2015-08-18T00:30:00Z''时间区间内的数据查询出来,并对其划分为每12分钟一组,对water_level值进行count计算。

该sql将h2o_feet表中tag=“coyote_creek”,且在''2015-08-18T00:00:00Z''和''2015-08-18T00:30:00Z''时间区间内的数据查询出来,并对其划分为每12分钟一组,对water_level值进行count计算。

注意:在查询结果中,时间区间是左闭右开的。拿第一行查询结果数据来说,2015-08-18T00:00:00Z表示的时间区间是[2015-08-18T00:00:00, 2015-08-18T00:12:00Z )

常见问题

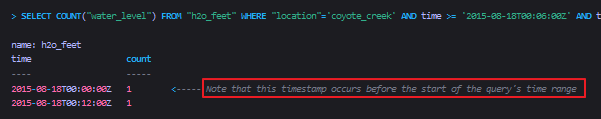

问题:查询结果中有预期之外的时间区间和值。

在基本用法中,GROUP BY time()查询通过当前InfluxDB数据库的预设时间边界来确定每个时间间隔中包含的原始数据和查询返回的时间戳,这有可能会导致预期之外的结果值。

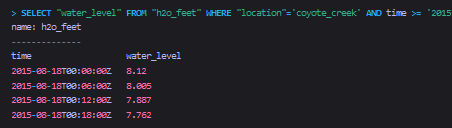

比如,通过如下sql:

SELECT "water_level" FROM "h2o_feet"

WHERE "location"=''coyote_creek''

AND time >= ''2015-08-18T00:00:00Z''

AND time <= ''2015-08-18T00:18:00Z''

我们查询到原始数据如下所示:

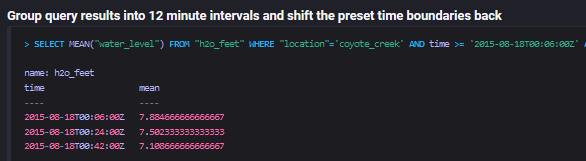

在接下来的查询中,我们通过WHERE子句,指定查询12分钟内的数据,并通过GROUP BY子句,将查询结果按12分钟的时间区间进行分组。

SELECT COUNT("water_level") FROM "h2o_feet"

WHERE "location"=''coyote_creek''

AND time >= ''2015-08-18T00:06:00Z''

AND time < ''2015-08-18T00:18:00Z''

GROUP BY time(12m)

按照预想,因为查询的是12分钟内的数据,并且group by时是按照12分钟来进行分组的,所以最后的查询结果应该只有一行而已。然后实际的查询结果却有两行:

解释 : influxdb使用预设的整数时间边界来作为GROUP BY的时间间隔,这些间隔独立于WHERE子句中的任何时间条件。在计算结果时,所有返回的数据都必须出现在WHERE查询的显式时间范围内,但当按间隔作为GROUP BY分组时是基于预设的时间边界。

(这里翻译的不好,下面是原版英文:

InfluxDB uses preset round-number time boundaries for GROUP BY intervals that are independent of any time conditions in the WHERE clause. When it calculates the results, all returned data must occur within the query’s explicit time range but the GROUP BY intervals will be based on the preset time boundaries. )

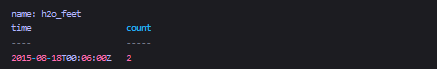

高级的GROUP BY time()语法允许用户自定义预设时间边界的开始时间。在高级语法小节的示例sql3中,将展示这种用法,它查询的结果如下:

三、高级GROUP BY time() 语法

语法如下:

SELECT <function>(<field_key>)

FROM_clause

WHERE <time_range>

GROUP BY time(<time_interval>,<offset_interval>),[tag_key] [fill(<fill_option>)]

在GROUP BY time()高级语法中,需要在SELECT子句中调用InfluxDB的函数,并在WHERE子句中指定时间区间。并且需要注意到的是,GROUP BY子句必须在WHERE子句之后!

-

time(time_interval,offset_interval)

在GROUP BY time()子句中的通过time_interval和offset_interval来表示一个连续的时间区间,该时间区间决定了InfluxDB如何通过时间来对查询结果进行分组。比如,如果时间区间为5m,那么它会将查询结果分为5分钟一组(如果在WHERE子句中指定了time区间,那么就是将WHERE中指定的time区间划分为没5分钟一组)。

offset_interval是持续时间文本。它向前或向后移动InfluxDB数据库的预设时间边界。offset_interval可以为正或负。 -

fill(<fill_option>)

fill(<fill_option>)是可选的。 它可以填充那些没有数据的时间区间的值。 从 GROUP BY time intervals and fill() 部分可查看到关于这部分的更多信息。注:高级 GROUP BY time() 语法依赖于time_interval、offset_interval、以及 InfluxDB 数据库的预设时间边界来确定每组内的数据条数、以及查询结果的时间戳。

高级用法示例sql

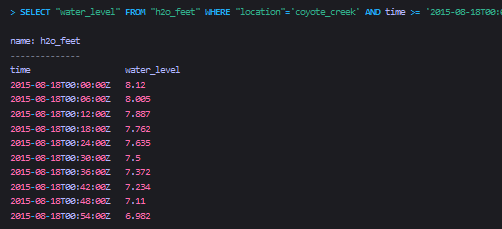

先看如下查询sql

SELECT "water_level" FROM "h2o_feet"

WHERE "location"=''coyote_creek''

AND time >= ''2015-08-18T00:00:00Z''

AND time <= ''2015-08-18T00:54:00Z''

查询结果:

接下来将使用上面的样例数据的子集来进行演示。以下sql将按照每18m对数据进行进组,并将预设的时间界限前移。

ELECT MEAN("water_level") FROM "h2o_feet"

WHERE "location"=''coyote_creek''

AND time >= ''2015-08-18T00:06:00Z''

AND time <= ''2015-08-18T00:54:00Z''

GROUP BY time(18m,6m)

查询结果:

可见上面sql将查询结果按照每18m为一组进行了分组,并且将预设的时间界限偏移了6分钟。

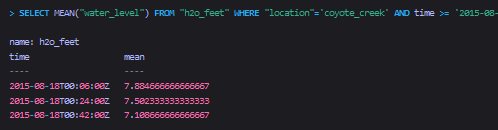

注意,对于没有offset_interval的group by time(),它的查询结果的时间边界和返回的时间戳遵循influxdb数据库的预设时间边界。下面我们看offset_interval的group by time()的查询结果:

SELECT MEAN("water_level") FROM "h2o_feet"

WHERE "location"=''coyote_creek''

AND time >= ''2015-08-18T00:06:00Z''

AND time <= ''2015-08-18T00:54:00Z''

GROUP BY time(18m)

再看如下sql:

再看如下sql:

SELECT MEAN("water_level") FROM "h2o_feet"

WHERE "location"=''coyote_creek''

AND time >= ''2015-08-18T00:06:00Z''

AND time <= ''2015-08-18T00:54:00Z''

GROUP BY time(18m,-12m);

查询结果

注 :该sql使用的是time(18m,-12m),offset_interval是负数,它的查询结果跟使用time(18m,6m)是一样的。<font color=Red size=2>因此在决定正负偏移间隔时,请随意选择最直观的选项。</font>

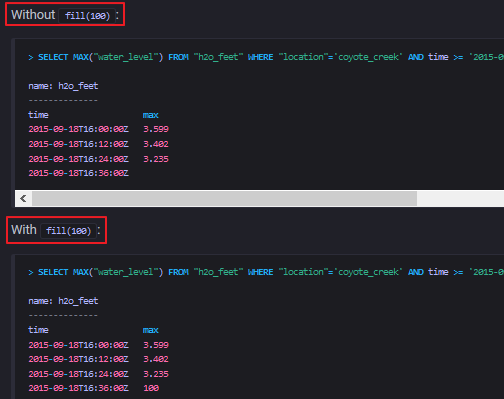

GROUP BY time intervals and fill()

Fill() 可以填充那些没有数据的时间区间的值。

语法:

SELECT <function>(<field_key>) FROM_clause

WHERE <time_range>

GROUP BY time(time_interval,[<offset_interval])[,tag_key] [fill(<fill_option>)]

默认情况下,在GROUP BY time()查询结果中,若某个时间区间没有数据,则该时间区间对应的值为null。通过fill(),就可以填充那些没有数据的时间区间的值。

需要注意的是,fill()必须出现在GROUP BY子句的最后。

Fill选项

- 任何数学数值

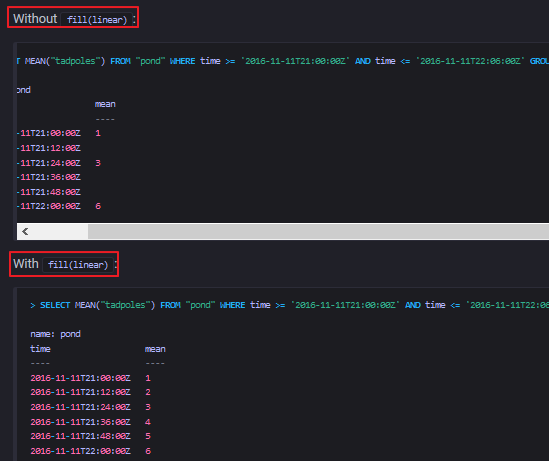

使用给定的数学数值进行填充 - linear

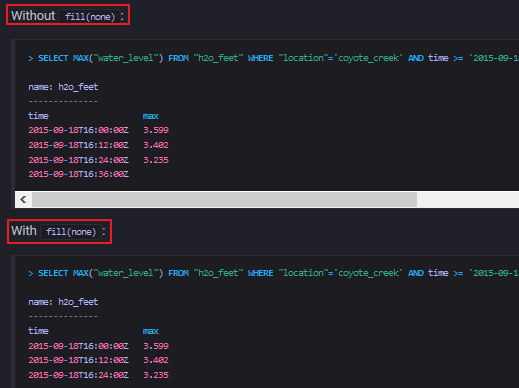

为没有数据值的时间区间线性插入数值,使得插入之后的数值,跟其他本来就有数据的区间的值成线性。(这里翻译的不是很好,看示例就能明白了) - none

若某个时间区间内没有数据,则在查询结果中该区间对应的时间戳将不显示出来 - null

没有值的区间,显示为null。这也是默认的选项。 - previous

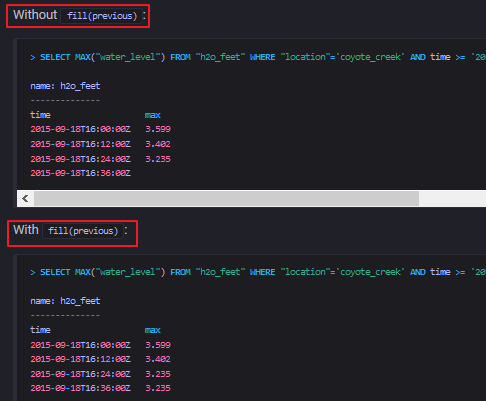

用前一个区间的数值来填充当前没有数据的区间的值。

示例:

-

fill(100)

-

fill(linear)

-

fill(none)

-

fill(null)

-

fill(previous)

我们今天的关于influxdb-1.7.8和centos 7) 部署的分享就到这里,谢谢您的阅读,如果想了解更多关于2.InfluxDB-InfluxQL基础语法教程--目录、4.InfluxDB-InfluxQL基础语法教程--基本select语句、5.InfluxDB-InfluxQL基础语法教程--WHERE子句、6.InfluxDB-InfluxQL基础语法教程--GROUP BY子句的相关信息,可以在本站进行搜索。

本文标签:

![[转帖]Ubuntu 安装 Wine方法(ubuntu如何安装wine)](https://www.gvkun.com/zb_users/cache/thumbs/4c83df0e2303284d68480d1b1378581d-180-120-1.jpg)