本文将介绍Python中的ListComprehension以及Generator的详细情况,特别是关于list.reverse.python的相关信息。我们将通过案例分析、数据研究等多种方式,帮助您

本文将介绍Python中的 List Comprehension 以及 Generator的详细情况,特别是关于list.reverse. python的相关信息。我们将通过案例分析、数据研究等多种方式,帮助您更全面地了解这个主题,同时也将涉及一些关于Attention-over-Attention Neural Networks for Reading Comprehension论文总结、Comprehensive Guide to build a Recommendation Engine from scratch (in Python) / 从0开始搭建推荐系统、Comprehensive learning path – Data Science in Python深度学习路径-用python进行数据学习、HeadFirstPython 学习笔记(0)--list comprehension(列表推导)的知识。

本文目录一览:- Python中的 List Comprehension 以及 Generator(list.reverse. python)

- Attention-over-Attention Neural Networks for Reading Comprehension论文总结

- Comprehensive Guide to build a Recommendation Engine from scratch (in Python) / 从0开始搭建推荐系统

- Comprehensive learning path – Data Science in Python深度学习路径-用python进行数据学习

- HeadFirstPython 学习笔记(0)--list comprehension(列表推导)

Python中的 List Comprehension 以及 Generator(list.reverse. python)

11行代码就写出了一个配置文件的解析器。

def loadUserInfo(fileName):

userinfo = {}

file = open(fileName, "r")

while file:

line = file.readline()

if len(line) == 0:

break

if line.startswith(''#''):

continue

key, value = line.split("=")

userinfo[key.strip()] = value.strip()

return userinfo最近正在跟同事学习python在数据挖掘中的应用,又专门学习了一下python本身,然后用list comprehension简化了以下上面的代码:

def loadUserInfo(file):

return dict([line.strip().split("=")

for line in open(file, "r")

if len(line) > 0 and not line.startswith("#")])这个函数和上面的函数的功能一样,都是读取一个指定的key=value格式的文件,然后构建出来一个映射(当然,在Python中叫做字典)对象,该函数还会跳过空行和#开头的行。

比如,我想要查看一下.wgetrc配置文件:

if __name__ == "__main__":

print(loadUserInfo("/Users/jtqiu/.wgetrc"))假设我的.wgetrc文件配置如下:

http-proxy=10.18.0.254:3128

ftp-proxy=10.18.0.254:3128

#http_proxy=10.1.1.28:3128

use_proxy=yes则上面的函数会产生这样的输出:

{''use_proxy'': ''yes'', ''ftp-proxy'': ''10.18.0.254:3128'', ''http-proxy'': ''10.18.0.254:3128''}list comprehension(列表推导式)

在python中,list comprehension(或译为列表推导式)可以很容易的从一个列表生成另外一个列表,从而完成诸如map, filter等的动作,比如:

要把一个字符串数组中的每个字符串都变成大写:

names = ["john", "jack", "sean"]

result = []

for name in names:

result.append(name.upper())如果用列表推导式,只需要一行:

[name.upper() for name in names]结果都是一样:

[''JOHN'', ''JACK'', ''SEAN'']另外一个例子,如果想要过滤出一个数字列表中的所有偶数:

numbers = [1, 2, 3, 4, 5, 6]

result = []

for number in numbers:

if number % 2 == 0:

result.append(number)如果写成列表推导式:

[x for x in numbers if x%2 == 0]结果也是一样:

[2, 4, 6]显然,列表推导更加短小,也更加表意。

迭代器

在了解generator之前,我们先来看一个迭代器的概念。有时候我们不需要将整个列表都放在内存中,特别是当列表的尺寸比较大的时候。

比如我们定义一个函数,它会返回一个连续的整数的列表:

def myrange(n):

num, nums = 0, []

while num < n:

nums.append(num)

num += 1

return nums当我们计算诸如myrange(50)或者myrange(100)时,不会有任何问题,但是当获取诸如myrange(10000000000)的时候,由于这个函数的内部会将数字保存在一个临时的列表中,因此会有很多的内存占用。

因此在python有了迭代器的概念:

class myrange(object):

def __init__(self, n):

self.i = 0

self.n = n

def __iter__(self):

return self

# for python 3

def __next__(self):

return self.next()

def next(self):

if self.i < self.n:

i = self.i

self.i += 1

return i

else:

raise StopIteration()这个对象其实实现了两个特殊的方法:__iter__(对于python3来说,是__next__)和next方法。其中next每次只返回一个值,如果迭代已经结束,就抛出一个StopIteration的异常。实现了这两个方法的类都可以算作是一个迭代器,他们可以被用于可迭代的上下文中,比如:

>>> from myrange import myrange

>>> x = myrange(10)

>>> x.next()

0

>>> x.next()

1

>>> x.next()

2但是可以看到这个函数中有很多的样板代码,因此我们有了生成器表达式来简化这个过程:

def myrange(n):

num = 0

while num < n:

yield num

num += 1注意此处的yield关键字,每次使用next来调用这个函数时都会求值一次num并返回,具体的细节可以参考这里。

区别

简单来说,两者都可以在迭代器上下文中使用,看起来几乎是一样的。不同的地方是generator可以节省内存空间,从而提高执行速度。generator更适合一次性的列表处理,比如只是需要一个中间列表作为转换。而列表推导则更适合要将列表保存下来,以备后续使用的场景。

这里也有一些讨论,可以一并参看。

参考

- Iterators & Generators

- Generators Wiki

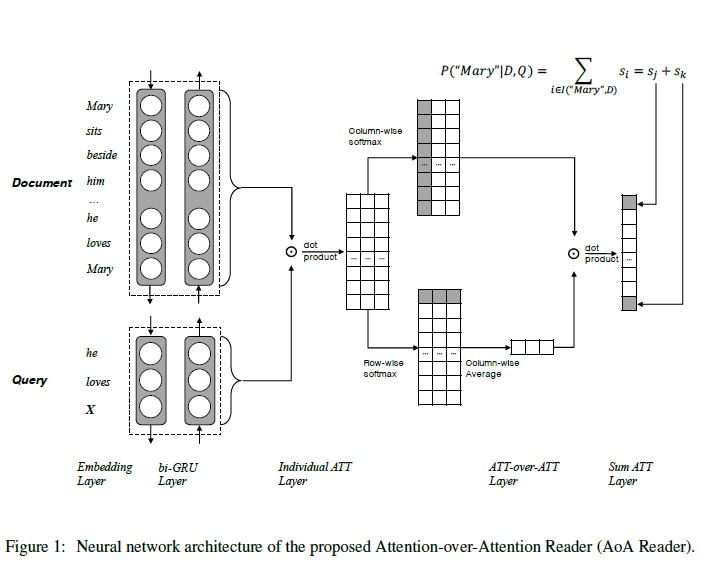

Attention-over-Attention Neural Networks for Reading Comprehension论文总结

Attention-over-Attention Neural Networks for Reading Comprehension

论文地址:https://arxiv.org/pdf/1607.04423.pdf

0 摘要

任务:完形填空是阅读理解是挖掘文档和问题关系的一个代表性问题。

模型:提出一个简单但是新颖的模型A-O-A模型,在文档级的注意力机制上增加一层注意力来确定最后答案

(什么是文档级注意力?就是每阅读问题中的一个词,该词对文档中的所有单词都会形成一个分布,从而形成文档级别的分布)

模型优点:(1)更简单,性能更好 (2)提出N-best重新排序策略去二次检验候选词的有效性(3)相比之前模型,实验结果性能更好

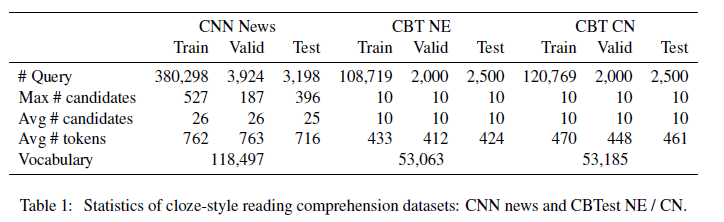

数据集:CNN&Daily Mail以及儿童故事(Children’s Book Test,CBT)数据集介绍

1 引言

(1)引出任务:

阅读和理解人类语言是机器的挑战性任务,他需要理解自然语言和推理线索的能力。其中,填充式阅读理解问题已成为社区的热门任务,填充式查询( cloze-style query)是一个问题,即在考虑上下文信息的同时,在给定的句子中填写适当的单词。这个单词答案是文档中的一个单词,它需利用文档和问题中的上下文信息推理得来。

形式上,一般的Cloze-style式阅读理解问题可以被解释为一个三元组:D,Q,A三元组:

- 文档D :Mary sits beside him … he loves Mary

- 查询Q:he loves __

- 查询答案A:Mary

(2)对机器进行填充式任务的阅读理解,需要大规模数据集,现有两个数据集:

1)CNN /每日邮报新闻数据集(2015年),文档D由新闻报道组成,查询Q由文章摘要构成,将文章摘要中的一个实体词被特殊的占位符替换以指示缺失的词。被替换的实体词将是查询的答案。

2)儿童图书测试数据集(2016),文档D由书籍的连续20个句子生成的,并且查询由第21句形成。

(3)基于上述数据集的相关工作:

在这个数据集上,提出了很多神经网络的方法,而且大多数都是基于注意力机制的神经网络,由于注意力机制在学习对于输入上的重要分布的能力,已经成为了大多数NLP任务的固定模式。

(4)本文思路:

本文提出一种新的神经网络,称为attention-over-attention注意模型。我们可以从字面上理解含义:我们的模型旨在将另一种注意机制置于现有文档级别的注意力机制之上。

与以前的作品不同,之前的模型通常使用启发式归并的思想或者设置许多预定义的不可训练的项,我们的模型可以自动在多个文档级别的“注意力”上生成一个集中注意力,所以,模型不仅可以从查询Q到文档D;还可以文档D到查询Q进行交互查看,这将受益于交互式信息。

(5)本文创新点:

综上所述,我们的工作主要贡献如下:

-

据我们所知,这是第一次提出将注意力覆盖在现有关注点上的机制之上,即attention-over-attention mechanism。

-

与以往有关向模型引入复杂体系结构或许多不可训练的超参数的工作不同,我们的模型要简单得多,但要大幅优于各种最先进的系统。

-

我们还提出了N-best排名策略,以重新评估候选词的各个方面并进一步提高表现。

接下来的文章结构:第2节简明介绍完形填空式阅读理解任务以及相关的数据集;第3节详细介绍所提出的attention-over-attention的阅读器;第4节将介绍N-BEST排名策略;第5节和第6节介绍实验结果和实验分析;最后在第7节中给出总结与展望。

2 完形填空式阅读理解

2.1 任务描述

<D, Q, A>,其中

D代表文档(document),

Q代表问题(query),

A则代表问题的答案(Answer),

答案是文档中某个词,答案词的类型为固定搭配中的介词或命名实体。

作者:损失函数

链接:https://www.imooc.com/article/29985

来源:慕课网

本文原创发布于慕课网 ,转载请注明出处,谢谢合作

<D, Q, A>,其中

D代表文档(document),

Q代表问题(query),

A则代表问题的答案(Answer),

答案是文档中某个词,答案词的类型为固定搭配中的介词或命名实体。

作者:损失函数

链接:https://www.imooc.com/article/29985

来源:慕课网

本文原创发布于慕课网 ,转载请注明出处,谢谢合作

一个完形填空式阅读理解样本可描述为一个三元组:<D, Q, A>,其中D代表文档(document),Q代表问题(query),A则代表问题的答案(Answer),答案是文档中某个词,答案词的类型为固定搭配中的介词或命名实体。

2.2 现有公共数据集

- CNN/DailyMail

<D, Q, A>,其中D代表文档(document),Q代表问题(query),A则代表问题的答案(Answer),答案是文档中某个词,答案词的类型为固定搭配中的介词或命名实体。

作者:损失函数

链接:https://www.imooc.com/article/29985

来源:慕课网

本文原创发布于慕课网 ,转载请注明出处,谢谢合作

<D, Q, A>,其中D代表文档(document),Q代表问题(query),A则代表问题的答案(Answer),答案是文档中某个词,答案词的类型为固定搭配中的介词或命名实体。

作者:损失函数

链接:https://www.imooc.com/article/29985

来源:慕课网

本文原创发布于慕课网 ,转载请注明出处,谢谢合作

<D, Q, A>,其中D代表文档(document),Q代表问题(query),A则代表问题的答案(Answer),答案是文档中某个词,答案词的类型为固定搭配中的介词或命名实体。

作者:损失函数

链接:https://www.imooc.com/article/29985

来源:慕课网

本文原创发布于慕课网 ,转载请注明出处,谢谢合作

这是一个完形填空式的机器阅读理解数据集,从美国有线新闻网(CNN)和每日邮报网抽取近一百万篇文章,每篇文章作为一个文档(document),在文档的summary中剔除一个实体类单词,并作为问题(question),剔除的实体类单词即作为答案(answer),该文档中所有的实体类单词均可为候选答案(candidate answers)。其中每个样本使用命名实体识别方法将文本中所有的命名实体用类似“@entity1”替代,并随机打乱表示。

- Children‘s Book Test

一个完形填空式阅读理解样本可描述为一个三元组:<D, Q, A>,其中D代表文档(document),Q代表问题(query),A则代表问题的答案(Answer),答案是文档中某个词,答案词的类型为固定搭配中的介词或命名实体。

作者:损失函数

链接:https://www.imooc.com/article/29985

来源:慕课网

本文原创发布于慕课网 ,转载请注明出处,谢谢合作

由于没有摘要,所以从每一个儿童故事中提取20个连续的句子作为文档(document),第21个句子作为问题(question),并从中剔除一个实体类单词作为答案(answer)。在CBTest数据集中,有四种类型的子数据集可用,它们通过应答词的词性和命名实体标签分类,包含命名实体(NE),常用名词(CN),动词和介词。 在研究中,他们发现动词和介词的回答相对较少地依赖于文档的内容,并且人类甚至可以在没有文档存在的情况下进行介词填空。 回答动词和介词较少依赖于文档的存在。 因此,大多数相关工作都集中在解决NE问题上和CN类型。

3 本文模型Attention-over-Attention Reader

我们的模型基于“Text understanding with the attention sum reader network.”论文提出的模型,但是他主要计算文档级别的关注,而不是计算文档的混合程度。而我们这篇文章中提出了一个新的方法,在原来的注意力上新增加一层注意力,来描述每一个注意力的重要性。

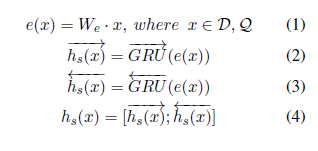

- Contextual Embedding

首先将文档D和问题Q转化为one-hot向量,然后将one-hot向量输入embedding层,然后用共享嵌入矩阵We将它们转换为连续表示。通过共享词嵌入,文档和问题都可以参与嵌入的学习,它们都将从这种机制中获益。然后,我们使用两个双向RNNS来获取文档的上下文表示形式并分别进行查询,其中每个单词的表示形式是通过连接向前和向后输出状态形成的。在权衡模型性能和训练复杂度之后,我们选择门控递归单元(GRU) 作为递归单元实现。也就是说,用 Bi-GRU 将 query 和 document 分别 encode,将两个方向的hidden state 拼接起来作为该词的 state,此时 document 和 query 可以分别用一个 Dxd 和 Qxd 的矩阵来表示,这里 D 是 document 的词数,Q 是 query 的词数,d 是embedding 的维度。

1 embedding = tf.get_variable(''embedding'',

2 [FLAGS.vocab_size, FLAGS.embedding_size],

3 initializer=tf.random_uniform_initializer(minval=-0.05, maxval=0.05))

4

5 regularizer = tf.nn.l2_loss(embedding)

6

7 doc_emb = tf.nn.dropout(tf.nn.embedding_lookup(embedding, documents), FLAGS.dropout_keep_prob)

8 doc_emb.set_shape([None, None, FLAGS.embedding_size])

9

10 query_emb = tf.nn.dropout(tf.nn.embedding_lookup(embedding, query), FLAGS.dropout_keep_prob)

11 query_emb.set_shape([None, None, FLAGS.embedding_size])

12

13 with tf.variable_scope(''document'', initializer=orthogonal_initializer()):

14 fwd_cell = tf.contrib.rnn.GRUCell(FLAGS.hidden_size)

15 back_cell = tf.contrib.rnn.GRUCell(FLAGS.hidden_size)

16

17 doc_len = tf.reduce_sum(doc_mask, reduction_indices=1)

18 h, _ = tf.nn.bidirectional_dynamic_rnn(

19 fwd_cell, back_cell, doc_emb, sequence_length=tf.to_int64(doc_len), dtype=tf.float32)

20 #h_doc = tf.nn.dropout(tf.concat(2, h), FLAGS.dropout_keep_prob)

21 h_doc = tf.concat(h, 2)

22

23 with tf.variable_scope(''query'', initializer=orthogonal_initializer()):

24 fwd_cell = tf.contrib.rnn.GRUCell(FLAGS.hidden_size)

25 back_cell = tf.contrib.rnn.GRUCell(FLAGS.hidden_size)

26

27 query_len = tf.reduce_sum(query_mask, reduction_indices=1)

28 h, _ = tf.nn.bidirectional_dynamic_rnn(

29 fwd_cell, back_cell, query_emb, sequence_length=tf.to_int64(query_len), dtype=tf.float32)

30 #h_query = tf.nn.dropout(tf.concat(2, h), FLAGS.dropout_keep_prob)

31 h_query = tf.concat(h, 2)

文档的Contextual Embedding表示为h_doc,维度为|D| * 2d,问题的Contextual Embedding表示为h_query,维度为|Q| * 2d,d为GRU的节点数。

D和问题Q转化为one-hot向量,然后将one-hot向量输入embedding层,

作者:损失函数

链接:https://www.imooc.com/article/29985

来源:慕课网

本文原创发布于慕课网 ,转载请注明出处,谢谢合作

- Pair-wise Matching Score

在获得文档h_doc和查询h_query 的contextual embeddings之后,我们计算成对匹配矩阵,其表示一个文档词和一个查询词的成对匹配程度。 也就是,当给出第i个单词和第j个单词时,我们可以通过它们的点积来计算匹配分数

1 M = tf.matmul(h_doc, h_query, adjoint_b=True)

2 M_mask = tf.to_float(tf.matmul(tf.expand_dims(doc_mask, -1), tf.expand_dims(query_mask, 1)))通过这种方式,我们可以计算每个文档和查询词之间的每一对匹配分数,形成一个矩阵M∈R| D | * | Q | ,其中第i行第j列的值由M(i,j)填充。行数为文档D的长度,宽度为查询Q的长度。

这一步本质上就是对两个矩阵做矩阵乘法,得到所谓的Matching Score矩阵M,这里的M矩阵的维度是DxQ,矩阵中的每个元素表示对应 document 和 query 中的词之间的matching score。

- Individual Attentions

对 M 矩阵中的每一列做 softmax 归一化,其中当考虑单个查询词时,每列是单独的文档级关注,得到所谓的 query-to-document attention的关注,即给定一个query词,对 document 中每个词的 attention,本文用下式进行表示:

每一列都是一个文档级别的注意力,我们表示α(t)∈R |D| 作为在第t个查询词的文档级关注

- Attention-over-Attention

前三个步骤都是很多模型采用的通用做法,这一步是本文的亮点。首先,上一步是对 M 矩阵的每一列做了 softmax 归一化,这里对 M 矩阵的每一行做 softmax 归一化,即得到所谓的document-to-query attention。

我们引入另一种注意机制来自动确定每个individual的注意力的重要性,而不是使用简单的启发式方法(如求和或平均)来将这些个体注意力集中到最后的注意力上。

首先,我们计算反向注意力,即对于时间t的每个文档词,我们计算查询中的“重要性”分布,即给定单个文档词时哪些查询词更重要。 我们将逐行方式的softmax函数应用于成对匹配矩阵M,以获得查询级别的关注。 我们表示β(t)∈R | Q | 作为关于时间t处的文档词的查询级关注,这可以被视为文档到查询的关注。(对M横向求softmax)

到目前为止,我们已经获得了查询到文档的关注度α(把M竖着soft)和文档到查询的关注度β(把M横着soft)。 我们的动机是利用文档和查询之间的交互信息。

1 # Softmax over axis

2 def softmax(target, axis, mask, epsilon=1e-12, name=None):

3 with tf.op_scope([target], name, ''softmax''):

4 max_axis = tf.reduce_max(target, axis, keep_dims=True)

5 target_exp = tf.exp(target-max_axis) * mask

6 normalize = tf.reduce_sum(target_exp, axis, keep_dims=True)

7 softmax = target_exp / (normalize + epsilon)

8 return softmax

9

10 alpha = softmax(M, 1, M_mask)##mask矩阵,非零位置为1,axis=0为batch

11 beta = softmax(M, 2, M_mask)然后我们对所有β(t)进行平均以得到平均的查询级别关注β。 请注意,我们不会将另一个softmax应用于β,因为平均个别关注不会破坏正常化条件。

这里的α的维度为|D| * |Q|,β的维度为|Q| * 1,我们在看问题的时候,并不是问题的每个单词我们都需要用到解题中,即问题中的单词的重要性是不一样的,这一步我们主要分析问题中每个单词的贡献,先定位贡献最大的单词,然后再在文档中定位和这个贡献最大的单词相关性最高的词作为问题的答案。

最后,我们计算α和β的点积得到“attended document-level attention”s∈R| D | 即attention-over-attention机制。 直观地说,该操作是在时间t查看查询词时(此时根据上步所得的每个问题词的贡献程度)计算每个单独文档级关注度α(t)的加权总和。通过每个查询词的重要性通过投票结果来做出最终决定(文档级关注)。

直观上看,就像把每个query 的word的权重去衡量每个document−level 的权重,由此学习出document 中哪个词更有可能为answer。

1 query_importance = tf.expand_dims(tf.reduce_mean(beta, 1) / tf.to_float(tf.expand_dims(doc_len, -1)), -1)

2 s = tf.squeeze(tf.matmul(alpha, query_importance), [2])- Final Predictions

上面我们可以得到一个s 向量,这个s 向量和document长度相等,因此若某个词在document 出现多次,则该词也应该在s 中出现多次,该词的概率应该等于其在s 出现的概率之和。计算单词w是答案的条件概率,文档D的单词组成单词空间V,单词w可能在单词空间V中出现了多次,其出现的位置i组成一个集合I(w, D),对每个单词w,我们通过计算它在s中的得分并求和得到单词w是答案的条件概率,计算公式如下所示:

1 unpacked_s = zip(tf.unstack(s, FLAGS.batch_size), tf.unstack(documents, FLAGS.batch_size))

2 y_hat = tf.stack([tf.unsorted_segment_sum(attentions, sentence_ids, FLAGS.vocab_size) for (attentions, sentence_ids) in unpacked_s])

##unsorted_segment_sum函数是用来分割求和的,第二个参数就是分割的index,index相同的作为一个整体求和

##注意这里面y_hat也就是上面所讲的s向量,但是其经过unsorted_segment_sum操作后,其长度变为vocab_size.

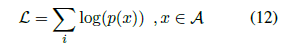

那在train 时,object_function 具体是怎样呢?

实现代码:

下面代码中的一波操作不太好理解,其在nlpnlp 代码中很常见,值得好好琢磨。

1 index = tf.range(0, FLAGS.batch_size) * FLAGS.vocab_size + tf.to_int32(answer)##这里面为啥乘以vocab_size,看下面解释,求答案在全文中的索引,也就是I(W,D)

2

3 flat = tf.reshape(y_hat, [-1])## 注意每个样本的y_hat长度为vocab_size,直接将batch_size个flat reshape成一维。

4 relevant = tf.gather(flat, index)##以index为准,找到flat中对应的值,也就是answer中的词在s向量中的概率值。

5

6 loss = -tf.reduce_mean(tf.log(relevant))

7

8 accuracy = tf.reduce_mean(tf.to_float(tf.equal(tf.argmax(y_hat, 1), answer)))4 N最佳重新排名策略 N-best Re-ranking Strategy

- N-best解码

我们在解码过程中提取后续候选项,而形成N个最佳列表。而不是仅挑选出具有最高可能性的候选项作为回答。

- 将候选词填入查询

作为填充式问题的一个特征,每个候选词都可以填充到查询的空白处以形成一个完整的句子。 这使我们能够根据其上下文来检查候选词。

- 特征打分

能够从很多方面为候选句子打分,本文中我们选取三种特征来进行打分形成N-best表。

(1)Global N-gram LM:这是评分句子的基本指标,旨在评估其流利程度。 基于训练集中所有的document训练8-gram模型,判断候选答案所在句子的合理性;

(2)Local N-gram LM:与全局LM不同,局部LM旨在通过给定文档来探索信息,因此统计信息是从测试时间文档中获得的。 应该注意的是,局部LM是逐个样本进行训练的, 它并未在整个测试集上进行训练,这在真实测试案例中是不合法的。 当测试样本中有许多未知单词时,此模型非常有用。基于问题所对应的的document训练8-gram模型;

(3)Word-class LM:与全局LM类似,词类LM也在训练数据的文档部分进行训练,但是这些词被转换为它的词类ID。可以通过使用聚类方法获得该词类。 在本文中,我们简单地使用mkcls工具来生成1000个词类。

- 微调权重和 重新打分和重新排名

采用K-best MIRA算法对上述三种特征进行权值训练,最后累加权值,选择损失函数最小的值作为最终的正确答案。

mkcls是一种通过使用最大似然准则来训练单词类的工具。生成的词类特别适合于语言模型或统计翻译模型。

MIRA算法是一种超保守算法,在分类,排序,回归等应用领域都取得不错的成绩。

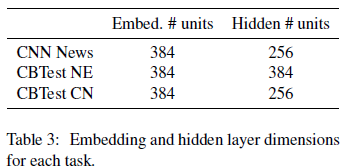

5 实验

5.1实验设置

- 嵌入层(使用未预训练词向量)权值初始化:[−0.05, 0.05];

- l2正则化:0.0001;

- dropout:0.1;

- 隐含层:GRU由随机正交矩阵初始化

- 优化函数:Adam;

- 学习率初始化:0.001;

- gradient clip:5;

- batch size:32;

- 5-Bestlist(影响不大)

对于不同语料,嵌入层维度和隐含层单元数:

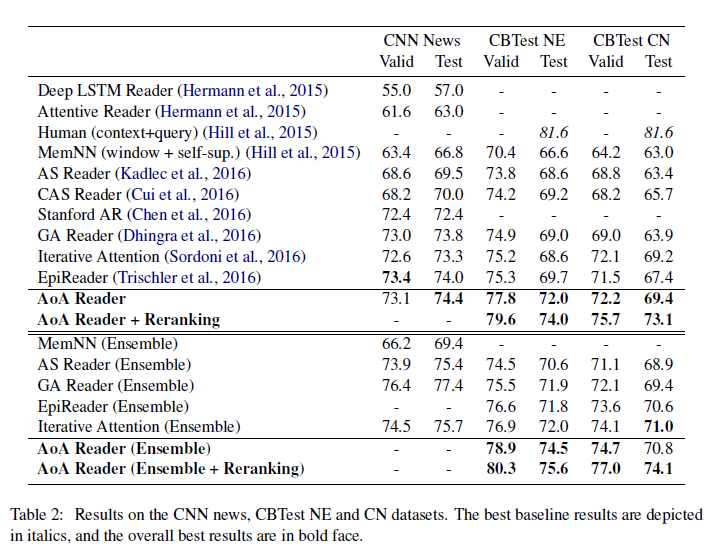

5.2 实验结果

我们的实验是在公共数据集上CNN news和CBTest NE/CN开展:

CNN News大概包括90k文档和380k问题;DailyMail包含197k 文档 和 879k 问题;CBT Test将文本根据单词属性分为NE,CN,P,V,这四种的验证集和测试集都是2000和2500的问题。

实验结果:

我们的AoA阅读器在当前先进的系统上有很大的优势,在CBTest NE和CN测试集中,相比EpiReader有2.3%和2.0%的绝对改进,证明了我们的模型的有效性。此外,通过在重新排序步骤中添加额外的特性,在CBTest NE/CN测试中,相对于AoA阅读器还有2.0%到3.7%的显著提升集。我们还发现,我们的单一模型可以保持与以前最好的集成系统的性能,甚至我们有0.9%的绝对改善超过最佳集成模型(迭代注意)在CBTest NE验证集。当涉及到集成模型时,我们的AoA读者还显示了显著改善了以前最好的集成模型,并建立了一个新的先进的系统。

为了调查采用attention-over-attention机制的有效性,我们也比较模型CAS模型(使用预定义的合并启发式,比如sum或avg等)。本文不使用预定义的合并启发式,而是让模型显式地学习个体之间的权重关注,使性能比CAS显著提高4.1%和3.7%可以在CNN的验证和测试集上 。

6 感悟

- 我看过一些QA、QG等方面的论文,感觉大部分都做了类似论文所说的document−level attention 操作,也就是结合query去attention documentt, 这篇创新的也做了query−level attention 操作。

- 感觉这篇论文实际上做了两层attention,在第一层中不仅做了document−level attention ,也做了query−level attention,第二层中,把结合query−level attention的信息对document−level attention 又做了attention 操作。

- 考虑在阅读理解其他方面的应用; 考虑模型是否有别的改进;研究语料库的应用;

- 单个模型和ensembel模型

Comprehensive Guide to build a Recommendation Engine from scratch (in Python) / 从0开始搭建推荐系统

https://www.analyticsvidhya.com/blog/2018/06/comprehensive-guide-recommendation-engine-python/, 一篇详细的入门级的推荐系统的文章,这篇文章内容详实,格式漂亮,推荐给大家.

下面是翻译,翻译关注的是意思,不是直译哈,大家将就着看, 如果英文好,推荐看原文,原文的排版比我这个舒服多了.

NOTE: 原文中发现一个有误的地方,下面我会用 红色 标出来. 同时,我在翻译的过程中,有疑虑或者值得商榷的地方,我会用 蓝色 标出来.

Comprehensive Guide to build a Recommendation Engine from scratch (in Python)

从0开始搭建推荐系统

Introduction / 简介

In today’s world, every customer is faced with multiple choices. For example, If I’m looking for a book to read without any specific idea of what I want, there’s a wide range of possibilities how my search might pan out. I might waste a lot of time browsing around on the internet and trawling through various sites hoping to strike gold. I might look for recommendations from other people.

现如今,每个顾客都面临多种选择. 比如,假如我想读书又不知道看点什么,那就有无数种可能的选择。我可能会浪费大把的时间在网上搜索或者盲目地在各个书店撒网希望淘到喜欢的书。我也可能需求其他人的推荐.

But if there was a site or app which could recommend me books based on what I have read previously, that would be a massive help. Instead of wasting time on various sites, I could just log in and voila! 10 recommended books tailored to my taste.

但是如果有这么一个网站或者APP能基于我以前读过什么来给我推荐书籍,那该多好啊. 我不是在各个网站浪费时间,而是直接找到10本满足我口味的书细细品味.

This is what recommendation engines do and their power is being harnessed by most businesses these days. From Amazon to Netflix, Google to Goodreads, recommendation engines are one of the most widely used applications of machine learning techniques.

这就是推荐引擎要做的事情,并且它的功用也得到的大多数商业行为的使用. 从 Amazon 到 Netfix, Google 到 Goodreads, 推荐引擎成为了最常见的机器学习技术的一种.

In this article, we will cover various types of recommendation engine algorithms and fundamentals of creating them in Python. We will also see the mathematics behind the workings of these algorithms. Finally, we will create our own recommendation engine using matrix factorization.

在这篇文章里,我们将讲到各种不同的推荐引擎算法和原理并用python 创建他们. 我们也将看到这些算法背后的数学知识. 最后,我们将用 matrix factorization 来创建我们自己的推荐引擎.

Table of Contents 目录

- What are recommendation engines? 什么是推荐引擎

- How does a recommendation engine work? 推荐引擎是怎么工作的Case study in Python using the MovieLens dataset 基于MovieLens数据集用python实现的案例

- Data collection 数据收集

- Data storage 数据存储 Filtering the data 数据过滤

- Content based filtering 基于内容的过滤

- Collaborative filtering 协同过滤

- Case study in Python using the MovieLens dataset / 基于MovieLens 数据集的案例学习python实现

- Building collaborative filtering model from scratch / 从0开始创建协同过滤模型

- Building Simple popularity and collaborative filtering model using Turicreate / 使用 Turicreate 创建简单的基于欢迎度的模型和协同过滤模型

- Introduction to matrix factorization / 介绍 matrix factorization

- Building a recommendation engine using matrix factorization / 使用 matrix factorization 创建推荐引擎 Evaluation metrics for recommendation engines / 推荐引擎效果评估

- Recall

- Precision

- RMSE (Root Mean Squared Error)

- Mean Reciprocal Rank

- MAP at k (Mean Average Precision at cutoff k)

- NDCG (Normalized Discounted Cumulative Gain)

- What else can be tried? / 其他有哪些可以尝试?

1. What are recommendation engines? / 推荐引擎是什么?

Till recently, people generally tended to buy products recommended to them by their friends or the people they trust. This used to be the primary method of purchase when there was any doubt about the product. But with the advent of the digital age, that circle has expanded to include online sites that utilize some sort of recommendation engine.

人们一般倾向于在买东西时寻求他们的朋友或者他们相信的人推荐的产品. 这是过去最常见的购买方式尤其是当他们对要买的产品有疑虑时. 但是随着数字时代的到来,这个过程就包含了使用推荐引擎来在线的推荐产品.

A recommendation engine filters the data using different algorithms and recommends the most relevant items to users. It first captures the past behavior of a customer and based on that, recommends products which the users might be likely to buy.

推荐引擎使用不同的算法过滤数据,并推荐给用户最相关的东西. 它捕捉客户的历史行为,并且基于这些历史行为给用户推荐他们最可能买的东西.

If a completely new user visits an e-commerce site, that site will not have any past history of that user. So how does the site go about recommending products to the user in such a scenario? One possible solution could be to recommend the best selling products, i.e. the products which are high in demand. Another possible solution could be to recommend the products which would bring the maximum profit to the business.

如果是一个全新的用户访问电商网站,这个站点没有这个用户的任何历史信息. 那么这种情况下网站怎么推荐呢?一个可能的方案是推荐热销的商品,比如,高需求量的商品; 另一个方案是推荐那些给公司带来高收益的产品.

If we can recommend a few items to a customer based on their needs and interests, it will create a positive impact on the user experience and lead to frequent visits. Hence, businesses nowadays are building smart and intelligent recommendation engines by studying the past behavior of their users.

如果我们能根据用户的需求和兴趣推荐一些产品给他们,这将产生有益的效果并且带来更多的访问. 因此,现代商业活动正在基于他们用户的行为建立只能推荐引擎.

Now that we have an intuition of recommendation engines, let’s now look at how they work.

现在我们对推荐引擎有了一个直观的认识,让我们来看看它是怎么工作的

2. How does a recommendation engine work? / 推荐引擎怎么工作的?

Before we deep dive into this topic, first we’ll think of how we can recommend items to users:

在我们深入研究之前,我们先想一下我们怎么推荐产品给用户

- We can recommend items to a user which are most popular among all the users / 我们可以给所有用户推荐正在热销的产品

- We can divide the users into multiple segments based on their preferences (user features) and recommend items to them based on the segment they belong to / 我们也可以按照用户的喜好特征把用户分组,然后按照分组分别推荐不同的东西给他们

Both of the above methods have their drawbacks. In the first case, the most popular items would be the same for each user so everybody will see the same recommendations. While in the second case, as the number of users increases, the number of features will also increase. So classifying the users into various segments will be a very difficult task.

以上两种方法都有缺陷.第一种方法,所有人都收到的是一样的产品的推荐. 第二种方法,如果用户不断增加,用户的喜好特征也会增加,再次给用户归类是一个困难的工作.

The main problem here is that we are unable to tailor recommendations based on the specific interest of the users. It’s like Amazon is recommending you buy a laptop just because it’s been bought by the majority of the shoppers. But thankfully, Amazon (or any other big firm) does not recommend products using the above mentioned approach. They use some personalized methods which help them in recommending products more accurately.

这里的主要问题是我们不能根据用户各自的兴趣来定制化的推荐. 就像Amanzon 因为其他很多人买了笔记本电脑也给你推荐了笔记本电脑. 但是幸运的是,Amazon并不是这样推荐的, 他们使用的个性化的推荐方法,这样的推荐更加精确.

Let’s now focus on how a recommendation engine works by going through the following steps.

现在我们更深入的按步骤讲解推荐引擎的工作方式.

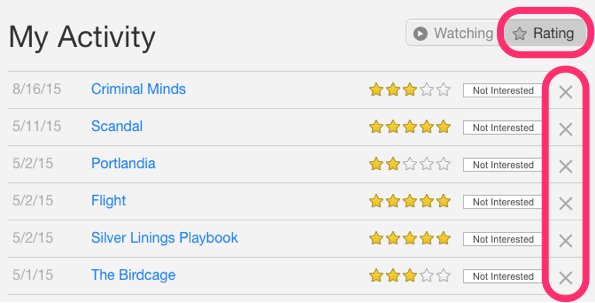

2.1 Data collection / 数据收集

This is the first and most crucial step for building a recommendation engine. The data can be collected by two means: explicitly and implicitly. Explicit data is information that is provided intentionally, i.e. input from the users such as movie ratings. Implicit data is information that is not provided intentionally but gathered from available data streams like search history, clicks, order history, etc.

这是创建推荐引擎第一步也是最重要的一步。两种方法可以收集收据:明采和暗采。明采就是让用户主动输入反馈,比如对所购商品的评价打分;暗采就是系统在前台收集后台记录用户的行为数据,常见的有用户的鼠标点击行为,搜索记录, 购买记录等.

In the above image, Netflix is collecting the data explicitly in the form of ratings given by user to different movies.

上图中,Netfix 使用的就用明采的方法让用户主动给电影打分评级.

Here the order history of a user is recorded by Amazon which is an example of implicit mode of data collection.

这是一个Amazon 网站的用户购买记录,属于暗采方法.

2.2 Data storage / 数据存储

The amount of data dictates how good the recommendations of the model can get. For example, in a movie recommendation system, the more ratings users give to movies, the better the recommendations get for other users. The type of data plays an important role in deciding the type of storage that has to be used. This type of storage could include a standard SQL database, a NoSQL database or some kind of object storage.

数据量的多少会影响推荐模型的精度. 比如,一个电影推荐系统,用户给的打分越多,推荐就会越准确. 数据的类型决定了存储类型. 存储类型有标准的SQL数据库,NoSQL 数据库和对象存储.

2.3 Filtering the data / 数据过滤

After collecting and storing the data, we have to filter it so as to extract the relevant information required to make the final recommendations.

收集到数据并存储好以后,我们要过滤数据来找到相关信息,然后做出最终推荐.

Source: intheshortestrun

There are various algorithms that help us make the filtering process easier. In the next section, we will go through each algorithm in detail.

有各种各样的算法可以帮我们的过滤流程更加容易. 接下来,我们就一个一个来看看.

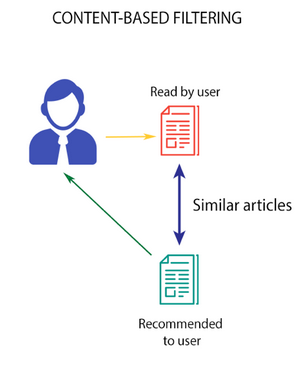

2.3.1 Content based filtering / 基于内容的过滤

This algorithm recommends products which are similar to the ones that a user has liked in the past.

这个算法推荐给用户那些他以前喜欢过的东西的类似产品.

Source: Medium

For example, if a person has liked the movie “Inception”, then this algorithm will recommend movies that fall under the same genre. But how does the algorithm understand which genre to pick and recommend movies from?

比如,如果一个人喜欢电影《盗梦空间》,那么这个算法就会推荐相同类型的电影. 但是,这个算法怎么知道根据电影的哪个属性来推荐呢?

Consider the example of Netflix. They save all the information related to each user in a vector form. This vector contains the past behavior of the user, i.e. the movies liked/disliked by the user and the ratings given by them. This vector is known as the profile vector. All the information related to movies is stored in another vector called the item vector. Item vector contains the details of each movie, like genre, cast, director, etc.

考虑Netflix 的例子,他们保存了所有的用户信息在向量里,这个向量包含了这个用户的过往行为,比如他喜欢或者不喜欢那些电影,给电影评了多少分. 这个向量叫做用户属性向量。所有与电影本身相关的属性,比如电影类型,导演,年代信息等会保存在另一个向量里,叫产品属性向量.

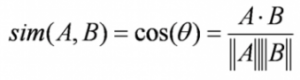

The content-based filtering algorithm finds the cosine of the angle between the profile vector and item vector, i.e. cosine similarity. Suppose A is the profile vector and B is the item vector, then the similarity between them can be calculated as:

基于内容的过滤算法找出用户属性向量和产品属性向量的夹角的cos值,比如 cos 相似性. 假如A是用户属性向量,B是产品属性向量,他们两者的相似性可以计算如下:

(译者注:我觉得A和B是两种完全不同的类型,不应该去计算相似性,这里值得商榷)

Based on the cosine value, which ranges between -1 to 1, the movies are arranged in descending order and one of the two below approaches is used for recommendations:

基于cos值(-1 ~ 1),把电影按照从大到小的值排列,然后使用下面两种方法推荐:

- Top-n approach: where the top n movies are recommended (Here n can be decided by the business) / 推荐最大的几个,至于是几个,取决于业务需求

- Rating scale approach: Where a threshold is set and all the movies above that threshold are recommended / 设定一个阈值,凡是>阈值的都推荐

Other methods that can be used to calculate the similarity are: / 除了cos相似性,其他计算相似性的方法有:

- Euclidean Distance: Similar items will lie in close proximity to each other if plotted in n-dimensional space. So, we can calculate the distance between items and based on that distance, recommend items to the user. The formula for the euclidean distance is given by: / 欧几里得距离: 没啥说头,自己看公式

![]()

- Pearson’s Correlation: It tells us how much two items are correlated. Higher the correlation, more will be the similarity. Pearson’s correlation can be calculated using the following formula: / Pearson相关性(译者注:我理解就是cos相关性的一个变种,先求mean,在算cos 相似性,为的是消除人为喜好造成的参数分布差异,比如有些人容易满足对产品总是打高分,有些人比较挑剔容易打低分)

A major drawback of this algorithm is that it is limited to recommending items that are of the same type. It will never recommend products which the user has not bought or liked in the past. So if a user has watched or liked only action movies in the past, the system will recommend only action movies. It’s a very narrow way of building an engine.

这个算法主要的缺点是只能推荐用户买过的相似的东西。如果用户没有买过东西,那就无能为力了. 如果用户只看过动作片,那么它就只会推动作片给用户. 这种推荐相对比较low.

To improve on this type of system, we need an algorithm that can recommend items not just based on the content, but the behavior of users as well.

为了改善这个系统,我们需要一个算法,既能基于内容,也能基于用户行为来推荐.

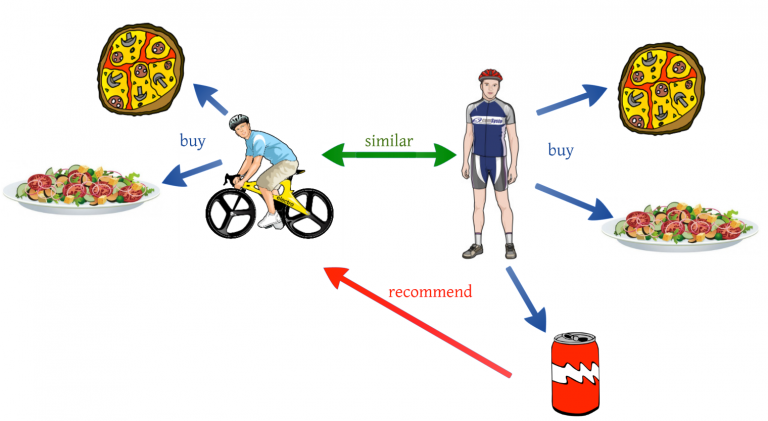

2.3.2 Collaborative filtering / 协同过滤

Let us understand this with an example. If person A likes 3 movies, say Interstellar, Inception and Predestination, and person B likes Inception, Predestination and The Prestige, then they have almost similar interests. We can say with some certainty that A should like The Prestige and B should like Interstellar. The collaborative filtering algorithm uses “User Behavior” for recommending items. This is one of the most commonly used algorithms in the industry as it is not dependent on any additional information. There are different types of collaborating filtering techniques and we shall look at them in detail below.

举一个例子来理解. 如果一个人A喜欢3个电影 <星际>,<盗梦空间>,和<前目的地>, 另一个人B喜欢<盗梦空间>,<前目的地>和<致命魔术>. 我们一定程度上可以说A应该会喜欢<致命魔术>,B应该会喜欢<星际>. 协同过滤使用“用户行为“来推荐. 这是业界常用的算法因为他不依赖与任何其他信息. 有几种不同的协同过滤技术,我们接下来仔细来了解下.

User-User collaborative filtering / 用户-用户 协同过滤

This algorithm first finds the similarity score between users. Based on this similarity score, it then picks out the most similar users and recommends products which these similar users have liked or bought previously.

这个算法先找到用户之间的相似性. 基于这个相似性数据,给用户推荐那些和他有最像的行为的用户买过的东西.

Source: Medium

In terms of our movies example from earlier, this algorithm finds the similarity between each user based on the ratings they have previously given to different movies. The prediction of an item for a user u is calculated by computing the weighted sum of the user ratings given by other users to an item i.

根据我们之前的电影例子,这个算法找出给过评分的用户的相似性. 用户u 给商品 i 打分的预测公式如下:

The prediction Pu,i is given by:

Here, / 这里

- Pu,i is the prediction of an item / 用户u 给商品 i 评分的预测值

- Rv,i is the rating given by a user v to a movie i

- Su,v is the similarity between users

Now, we have the ratings for users in profile vector and based on that we have to predict the ratings for other users. Following steps are followed to do so:

现在,我们有了用户属性向量,基于这个我们就可以做预测了. 具体步骤如下:

- For predictions we need the similarity between the user u and v. We can make use of Pearson correlation. / 我们选的是Pearson相关性

- First we find the items rated by both the users and based on the ratings, correlation between the users is calculated. / 那就算吧

- The predictions can be calculated using the similarity values. This algorithm, first of all calculates the similarity between each user and then based on each similarity calculates the predictions. Users having higher correlation will tend to be similar. / 算出一个大的矩阵,矩阵里面的数字越接近1,越相似.

- Based on these prediction values, recommendations are made. Let us understand it with an example: / 下面例子讲的很清楚

Consider the user-movie rating matrix:

这是一个user-movie 矩阵:

| User/Movie | x1 | x2 | x3 | x4 | x5 | Mean User Rating |

| A | 4 | 1 | – | 4 | – | 3 |

| B | – | 4 | – | 2 | 3 | 3 |

| C | – | 1 | – | 4 | 4 | 3 |

Here we have a user movie rating matrix. To understand this in a more practical manner, let’s find the similarity between users (A, C) and (B, C) in the above table. Common movies rated by A/[ and C are movies x2 and x4 and by B and C are movies x2, x4 and x5.

A和C对电影x2,x4 都打分了,B和C对 x2,x4,x5 都打分了. 下面的算式pearson 相关性算式.

The correlation between user A and C is more than the correlation between B and C. Hence users A and C have more similarity and the movies liked by user A will be recommended to user C and vice versa.

可以看出, A 和C相关性很高,如果A喜欢什么,那就推荐给C. 相反亦然.

This algorithm is quite time consuming as it involves calculating the similarity for each user and then calculating prediction for each similarity score. One way of handling this problem is to select only a few users (neighbors) instead of all to make predictions, i.e. instead of making predictions for all similarity values, we choose only few similarity values. There are various ways to select the neighbors:

这个算法非常耗时间因为它要先计算相似性矩阵然后计算预测值. 一个解决这个问题的方法是求出相似度矩阵后选择一部分邻居用户来计算预测值.

- Select a threshold similarity and choose all the users above that value / 把相似性大于一定阈值的的用户选进来

- Randomly select the users / 随机选用户

- Arrange the neighbors in descending order of their similarity value and choose top-N users / 选前几个最相似的用户

- Use clustering for choosing neighbors / 使用聚类算法找邻居用户

This algorithm is useful when the number of users is less. Its not effective when there are a large number of users as it will take a lot of time to compute the similarity between all user pairs. This leads us to item-item collaborative filtering, which is effective when the number of users is more than the items being recommended.

这个算法如果用户数量少还可以,如果用户太多了,相似性矩阵就会很大,计算量太大,所有如果用户数量大于商品数量的情况下,采用的是接下来讲到的 产品-产品 协同过滤.

Item-Item collaborative filtering / 产品-产品协同过滤

In this algorithm, we compute the similarity between each pair of items.

这个算法,我们技术产品之间的相似性

Source: Medium

So in our case we will find the similarity between each movie pair and based on that, we will recommend similar movies which are liked by the users in the past. This algorithm works similar to user-user collaborative filtering with just a little change – instead of taking the weighted sum of ratings of “user-neighbors”, we take the weighted sum of ratings of “item-neighbors”. The prediction is given by:

基本思想是,你喜欢过一种商品,那就把相似的商品推给你. 预测公式如下.

Now we will find the similarity between items. / 相似性用cos 相似性

Now, as we have the similarity between each movie and the ratings, predictions are made and based on those predictions, similar movies are recommended. Let us understand it with an example. / 看例子很清楚

| User/Movie | x1 | x2 | x3 | x4 | x5 |

| A | 4 | 1 | 2 | 4 | 4 |

| B | 2 | 4 | 4 | 2 | 1 |

| C | – | 1 | – | 3 | 4 |

| Mean Item Rating | 3 | 2 | 3 | 3 | 3 |

Here the mean item rating is the average of all the ratings given to a particular item (compare it with the table we saw in user-user filtering). Instead of finding the user-user similarity as we saw earlier, we find the item-item similarity.

To do this, first we need to find such users who have rated those items and based on the ratings, similarity between the items is calculated. Let us find the similarity between movies (x1, x4) and (x1, x5). Common users who have rated movies x1 and x4 are A and B while the users who have rated movies x1 and x5 are also A and B.

(译者注: 本来说好用 cos 相似性的,结果作者忽悠我们,他用了 pearson 相似性,因为每个数组都借去了mean. 其实没必要, 直接cos相似性就挺好.)

The similarity between movie x1 and x4 is more than the similarity between movie x1 and x5. So based on these similarity values, if any user searches for movie x1, they will be recommended movie x4 and vice versa. Before going further and implementing these concepts, there is a question which we must know the answer to – what will happen if a new user or a new item is added in the dataset? It is called a Cold Start. There can be two types of cold start:

这里有个有意思的概念注意一下,叫冷启动,就是一个新用户和新产品加入了我们的系统

- Visitor Cold Start

- Product Cold Start

Visitor Cold Start means that a new user is introduced in the dataset. Since there is no history of that user, the system does not know the preferences of that user. It becomes harder to recommend products to that user. So, how can we solve this problem? One basic approach could be to apply a popularity based strategy, i.e. recommend the most popular products. These can be determined by what has been popular recently overall or regionally. Once we know the preferences of the user, recommending products will be easier.

新用户加入,我不知道你的过往行为,那就可以给你推送最近流行的产品

On the other hand, Product Cold Start means that a new product is launched in the market or added to the system. User action is most important to determine the value of any product. More the interaction a product receives, the easier it is for our model to recommend that product to the right user. We can make use of Content based filtering to solve this problem. The system first uses the content of the new product for recommendations and then eventually the user actions on that product.

新产品加入,我不知道你和其他产品的相似性,那就人工来标注新产品的属性,比如给产品打上日用品,家电等标签,然后基于内容推送, 有人正在关注家电那就推荐给他.

Now let’s solidify our understanding of these concepts using a case study in Python. Get your machines ready because this is going to be fun!

现在我们通过python 的例子来加深理解. 准备好机器,开干吧!

3. Case study in Python using the MovieLens Dataset / 基于MovieLens数据集的案例学习,使用python语言

We will work on the MovieLens dataset and build a model to recommend movies to the end users. This data has been collected by the GroupLens Research Project at the University of Minnesota. The dataset can be downloaded from here. This dataset consists of:

我们将基于明尼苏达大学的GroupLens 研究项目采集的MovieLens数据集来创建一个模型. 这个数据集有943个用户1682个电影的数据,总共100k的rating 数据. 数据集可以在这里下载

- 100,000 ratings (1-5) from 943 users on 1682 movies

- Demographic information of the users (age, gender, occupation, etc.)

First, we’ll import our standard libraries and read the dataset in Python.

首先,导入python常用库并读取数据集

import pandas as pd

%matplotlib inline

import matplotlib

import matplotlib.pyplot as plt

import numpy as np

# pass in column names for each CSV as the column name is not given in the file and read them using pandas.

# You can check the column names from the readme file

#Reading users file:

u_cols = [''user_id'', ''age'', ''sex'', ''occupation'', ''zip_code'']

users = pd.read_csv(''ml-100k/u.user'', sep=''|'', names=u_cols,encoding=''latin-1'')

#Reading ratings file:

r_cols = [''user_id'', ''movie_id'', ''rating'', ''unix_timestamp'']

ratings = pd.read_csv(''ml-100k/u.data'', sep=''\t'', names=r_cols,encoding=''latin-1'')

#Reading items file:

i_cols = [''movie id'', ''movie title'' ,''release date'',''video release date'', ''IMDb URL'', ''unknown'', ''Action'', ''Adventure'',

''Animation'', ''Children\''s'', ''Comedy'', ''Crime'', ''Documentary'', ''Drama'', ''Fantasy'',

''Film-Noir'', ''Horror'', ''Musical'', ''Mystery'', ''Romance'', ''Sci-Fi'', ''Thriller'', ''War'', ''Western'']

items = pd.read_csv(''ml-100k/u.item'', sep=''|'', names=i_cols,

encoding=''latin-1'')

After loading the dataset, we should look at the content of each file (users, ratings, items).

- Users

print(users.shape)

users.head()

So, we have 943 users in the dataset and each user has 5 features, i.e. user_ID, age, sex, occupation and zip_code. Now let’s look at the ratings file.

- Ratings

print(ratings.shape)

ratings.head()

We have 100k ratings for different user and movie combinations. Now finally examine the items file.

- Items

print(items.shape)

items.head()

This dataset contains attributes of 1682 movies. There are 24 columns out of which last 19 columns specify the genre of a particular movie. These are binary columns, i.e., a value of 1 denotes that the movie belongs to that genre, and 0 otherwise.

这个数据集包含了1682部电影. 24列中的最后19列表示的是电影的特定类型, 这些类型都是用二进制0和1表示的,1表示具有这种类型,0表示没有.

The dataset has already been divided into train and test by GroupLens where the test data has 10 ratings for each user, i.e. 9,430 rows in total. We will read both these files into our Python environment.

这个数据集的所有者GroupLens 已经分好了训练集和测试集, 测试集里面每个用户有10个打分,所有总共有9430个打分.

r_cols = [''user_id'', ''movie_id'', ''rating'', ''unix_timestamp'']

ratings_train = pd.read_csv(''ml-100k/ua.base'', sep=''\t'', names=r_cols, encoding=''latin-1'')

ratings_test = pd.read_csv(''ml-100k/ua.test'', sep=''\t'', names=r_cols, encoding=''latin-1'')

ratings_train.shape, ratings_test.shape

![]()

It’s finally time to build our recommend engine! / 最后我们来创建我们的推荐引擎!

4. Building collaborative filtering model from scratch / 从0开始创建协同过滤模型

We will recommend movies based on user-user similarity and item-item similarity. For that, first we need to calculate the number of unique users and movies.

我们将基于 用户-用户 相似性和 产品-产品 相似性来推荐电影. 因为我们先算出有多少不同的用户和电影.

n_users = ratings.user_id.unique().shape[0]

n_items = ratings.movie_id.unique().shape[0]

Now, we will create a user-item matrix which can be used to calculate the similarity between users and items.

现在我们建立一个用户-产品 矩阵, 用它来算用户之间和产品之间的相似性.

data_matrix = np.zeros((n_users, n_items))

for line in ratings.itertuples():

data_matrix[line[1]-1, line[2]-1] = line[3]

Now, we will calculate the similarity. We can use the pairwise_distance function from sklearn to calculate the cosine similarity.

现在,我们来算相似性. 我们用 sklearn 里的pairwise_distance 函数来算cos相似性. (译者注:我觉得这里不对,所有改成了下面的红色代码,具体原因看代码注释)

from sklearn.metrics.pairwise import pairwise_distances

user_similarity = pairwise_distances(data_matrix, metric=''cosine'')

item_similarity = pairwise_distances(data_matrix.T, metric=''cosine'')

# NOTE: why use pairwise_distances? why not cosine_similarity? cosine_distance = 1-cosine_similarity. i believe cosine_similarity is right for here.

# let''s change it to consine_similarity

user_similarity = cosine_similarity(data_matrix) item_similarity = cosine_similarity(data_matrix.T)

This gives us the item-item and user-user similarity in an array form. The next step is to make predictions based on these similarities. Let’s define a function to do just that.

算出了产品-产品相似性和 用户-用户相似性后. 下一步就是基于相似性做预测. 预测函数定义如下:

def predict(ratings, similarity, type=''user''):

if type == ''user'':

mean_user_rating = ratings.mean(axis=1)

#We use np.newaxis so that mean_user_rating has same format as ratings

ratings_diff = (ratings - mean_user_rating[:, np.newaxis])

pred = mean_user_rating[:, np.newaxis] + similarity.dot(ratings_diff) / np.array([np.abs(similarity).sum(axis=1)]).T

elif type == ''item'':

pred = ratings.dot(similarity) / np.array([np.abs(similarity).sum(axis=1)])

return pred

Finally, we will make predictions based on user similarity and item similarity.

最后,我们调用预测函数来预测.

user_prediction = predict(data_matrix, user_similarity, type=''user'')

item_prediction = predict(data_matrix, item_similarity, type=''item'')

As it turns out, we also have a library which generates all these recommendations automatically. Let us now learn how to create a recommendation engine using turicreate in Python. To get familiar with turicreate and to install it on your machine, refer here.

上面我们是自己实现的协同过滤,其实有现成的库来做这些. 接下来我们一起学习用Apple的turicreate 机器学习库来创建推荐引擎. 想了解turicreate 可以看这里

5. Building a simple popularity and collaborative filtering model using Turicreate / 使用Turicreate 创建简单的基于流行度和协同过滤的模型

After installing turicreate, first let’s import it and read the train and test dataset in our environment. Since we will be using turicreate, we will need to convert the dataset in SFrames.

安装好turicreate后,我们把dataframe 格式转成turicreate的 SFrame格式

import turicreate

train_data = turicreate.SFrame(ratings_train)

test_data = turicreate.Sframe(ratings_test)

We have user behavior as well as attributes of the users and movies, so we can make content based as well as collaborative filtering algorithms. We will start with a simple popularity model and then build a collaborative filtering model.

我们有用户行为还有用户和电影的属性,所有我们能基于内容推荐,也能做协同过滤. 我们先从基于流行度的模型开始,然后再会创建一个协同过滤模型.

First we’ll build a model which will recommend movies based on the most popular choices, i.e., a model where all the users receive the same recommendation(s). We will use the turicreate recommender function popularity_recommender for this.

首先我们创建一个基于流行度的模型,就是看什么东西大家都喜欢就推荐什么

popularity_model = turicreate.popularity_recommender.create(train_data, user_id=''user_id'', item_id=''movie_id'', target=''rating'') Various arguments which we have used are:

这些是要用到的参数: 看名字就很直观了,不解释了

- train_data: the SFrame which contains the required training data

- user_id: the column name which represents each user ID

- item_id: the column name which represents each item to be recommended (movie_id)

- target: the column name representing scores/ratings given by the user

It’s prediction time! We will recommend the top 5 items for the first 5 users in our dataset.

现在来看预测! 我们给前5个用户每人推荐5个产品.

popularity_recomm = popularity_model.recommend(users=[1,2,3,4,5],k=5)

popularity_recomm.print_rows(num_rows=25)

Note that the recommendations for all users are the same – 1467, 1201, 1189, 1122, 814. And they’re all in the same order! This confirms that all the recommended movies have an average rating of 5, i.e. all the users who watched the movie gave it a top rating. Thus our popularity system works as expected.

不管是哪个用户,推荐给他的东西都是一样的,就是最流行的5个产品.

After building a popularity model, we will now build a collaborative filtering model. Let’s train the item similarity model and make top 5 recommendations for the first 5 users.

现在我们来创建一个协同过滤模型。我们来选择创建协同过滤里边的item-item 相似度模型.

#Training the model

item_sim_model = turicreate.item_similarity_recommender.create(train_data, user_id=''user_id'', item_id=''movie_id'', target=''rating'', similarity_type=''cosine'')

#Making recommendations

item_sim_recomm = item_sim_model.recommend(users=[1,2,3,4,5],k=5)

item_sim_recomm.print_rows(num_rows=25)

Here we can see that the recommendations (movie_id) are different for each user. So personalization exists, i.e. for different users we have a different set of recommendations.

看,每个用于得到的推荐不一样,个性化产品推荐来了!!!

In this model, we do not have the ratings for each movie given by each user. We must find a way to predict all these missing ratings. For that, we have to find a set of features which can define how a user rates the movies. These are called latent features. We need to find a way to extract the most important latent features from the the existing features. Matrix factorization, covered in the next section, is one such technique which uses the lower dimension dense matrix and helps in extracting the important latent features.

CF 模型是基于相似度的,还有另外一种模型 matrix factorization 和 content based 模型很类似,区别在于content based 是用户手动打了一些属性标签,而matrix factorization 是尝试自动去找出一些隐含的属性标签,虽然这些标签没有具体的名字,我们也不知道它发现了什么属性. 接下来就是matrix factorization的介绍.

暂时翻译到这里,有空继续

6. Introduction to matrix factorization

Let’s understand matrix factorization with an example. Consider a user-movie ratings matrix (1-5) given by different users to different movies.

Here user_id is the unique ID of different users and each movie is also assigned a unique ID. A rating of 0.0 represents that the user has not rated that particular movie (1 is the lowest rating a user can give). We want to predict these missing ratings. Using matrix factorization, we can find some latent features that can determine how a user rates a movie. We decompose the matrix into constituent parts in such a way that the product of these parts generates the original matrix.

Let us assume that we have to find k latent features. So we can divide our rating matrix R(MxN) into P(MxK) and Q(NxK) such that P x QT (here QT is the transpose of Q matrix) approximates the R matrix:

![]() , where:

, where:

- M is the total number of users

- N is the total number of movies

- K is the total latent features

- R is MxN user-movie rating matrix

- P is MxK user-feature affinity matrix which represents the association between users and features

- Q is NxK item-feature relevance matrix which represents the association between movies and features

- Σ is KxK diagonal feature weight matrix which represents the essential weights of features

Choosing the latent features through matrix factorization removes the noise from the data. How? Well, it removes the feature(s) which does not determine how a user rates a movie. Now to get the rating ruifor a movie qik rated by a user puk across all the latent features k, we can calculate the dot product of the 2 vectors and add them to get the ratings based on all the latent features.

![]()

This is how matrix factorization gives us the ratings for the movies which have not been rated by the users. But how can we add new data to our user-movie rating matrix, i.e. if a new user joins and rates a movie, how will we add this data to our pre-existing matrix?

Let me make it easier for you through the matrix factorization method. If a new user joins the system, there will be no change in the diagonal feature weight matrix Σ, as well as the item-feature relevance matrix Q. The only change will occur in the user-feature affinity matrix P. We can apply some matrix multiplication methods to do that.

We have,

![]()

Let’s multiply with Q on both sides.

![]()

Now, we have

![]()

So,

![]()

Simplifying it further, we can get the P matrix:

![]()

This is the updated user-feature affinity matrix. Similarly, if a new movie is added to the system, we can follow similar steps to get the updated item-feature relevance matrix Q.

Remember, we decomposed R matrix into P and Q. But how do we decide which P and Q matrix will approximate the R matrix? We can use the gradient descent algorithm for doing this. The objective here is to minimize the squared error between the actual rating and the one estimated using P and Q. The squared error is given by:

![]()

Here,

- eui is the error

- rui is the actual rating given by user u to the movie i

- řui is the predicted rating by user u for the movie i

Our aim was to decide the p and q value in such a way that this error is minimized. We need to update the p and q values so as to get the optimized values of these matrices which will give the least error. Now we will define an update rule for puk and qki. The update rule in gradient descent is defined by the gradient of the error to be minimized.

![]()

![]()

As we now have the gradients, we can apply the update rule for puk and qki.

![]()

![]()

Here α is the learning rate which decides the size of each update. The above updates can be repeated until the error is minimized. Once that’s done, we get the optimal P and Q matrix which can be used to predict the ratings. Let us quickly recap how this algorithm works and then we will build the recommendation engine to predict the ratings for the unrated movies.

Below is how matrix factorization works for predicting ratings:

# for f = 1,2,....,k :

# for rui ε R :

# predict rui

# update puk and qki

So based on each latent feature, all the missing ratings in the R matrix will be filled using the predicted rui value. Then puk and qki are updated using gradient descent and their optimal value is obtained. It can be visualized as shown below:

Now that we have understood the inner workings of this algorithm, we’ll take an example and see how a matrix is factorized into its constituents.

Consider a 2 X 3 matrix, A2X3 as shown below:

![]()

Here we have 2 users and their corresponding ratings for 3 movies. Now, we will decompose this matrix into sub parts, such that:

![]()

The eigenvalues of AAT will give us the P matrix and the eigenvalues of ATA will give us the Q matrix. Σ is the square root of the eigenvalues from AAT or ATA.

Calculate the eigenvalues for AAT.

![]()

![]()

So, the eigenvalues of AAT are 25, 9. Similarly, we can calculate the eigenvalues of ATA. These values will be 25, 9, 0. Now we have to calculate the corresponding eigenvectors for AAT and ATA.

For λ = 25, we have:

It can be row reduced to:

A unit-length vector in the kernel of that matrix is:

Similarly, for λ = 9 we have:

It can be row reduced to:

A unit-length vector in the kernel of that matrix is:

For the last eigenvector, we could find a unit vector perpendicular to q1 and q2. So,

Σ2X3 matrix is the square root of eigenvalues of AAT or ATA, i.e. 25 and 9.

![]()

Finally, we can compute P2X2 by the formula σpi = Aqi, or pi = 1/σ(Aqi). This gives:

![]()

So, the decomposed form of A matrix is given by:

![]()

![]()

Since we have the P and Q matrix, we can use the gradient descent approach to get their optimized versions. Let us build our recommendation engine using matrix factorization.

7. Building a recommendation engine using matrix factorization

Let us define a function to predict the ratings given by the user to all the movies which are not rated by him/her.

class MF():

# Initializing the user-movie rating matrix, no. of latent features, alpha and beta.

def __init__(self, R, K, alpha, beta, iterations):

self.R = R

self.num_users, self.num_items = R.shape

self.K = K

self.alpha = alpha

self.beta = beta

self.iterations = iterations

# Initializing user-feature and movie-feature matrix

def train(self):

self.P = np.random.normal(scale=1./self.K, size=(self.num_users, self.K))

self.Q = np.random.normal(scale=1./self.K, size=(self.num_items, self.K))

# Initializing the bias terms

self.b_u = np.zeros(self.num_users)

self.b_i = np.zeros(self.num_items)

self.b = np.mean(self.R[np.where(self.R != 0)])

# List of training samples

self.samples = [

(i, j, self.R[i, j])

for i in range(self.num_users)

for j in range(self.num_items)

if self.R[i, j] > 0

]

# Stochastic gradient descent for given number of iterations

training_process = []

for i in range(self.iterations):

np.random.shuffle(self.samples)

self.sgd()

mse = self.mse()

training_process.append((i, mse))

if (i+1) % 20 == 0:

print("Iteration: %d ; error = %.4f" % (i+1, mse))

return training_process

# Computing total mean squared error

def mse(self):

xs, ys = self.R.nonzero()

predicted = self.full_matrix()

error = 0

for x, y in zip(xs, ys):

error += pow(self.R[x, y] - predicted[x, y], 2)

return np.sqrt(error)

# Stochastic gradient descent to get optimized P and Q matrix

def sgd(self):

for i, j, r in self.samples:

prediction = self.get_rating(i, j)

e = (r - prediction)

self.b_u[i] += self.alpha * (e - self.beta * self.b_u[i])

self.b_i[j] += self.alpha * (e - self.beta * self.b_i[j])

self.P[i, :] += self.alpha * (e * self.Q[j, :] - self.beta * self.P[i,:])

self.Q[j, :] += self.alpha * (e * self.P[i, :] - self.beta * self.Q[j,:])

# Ratings for user i and moive j

def get_rating(self, i, j):

prediction = self.b + self.b_u[i] + self.b_i[j] + self.P[i, :].dot(self.Q[j, :].T)

return prediction

# Full user-movie rating matrix

def full_matrix(self):

return mf.b + mf.b_u[:,np.newaxis] + mf.b_i[np.newaxis:,] + mf.P.dot(mf.Q.T)

Now we have a function that can predict the ratings. The input for this function are:

- R – The user-movie rating matrix

- K – Number of latent features

- alpha – Learning rate for stochastic gradient descent

- beta – Regularization parameter for bias

- iterations – Number of iterations to perform stochastic gradient descent

We have to convert the user item ratings to matrix form. It can be done using the pivot function in python.

R= np.array(ratings.pivot(index = ''user_id'', columns =''movie_id'', values = ''rating'').fillna(0))

fillna(0) will fill all the missing ratings with 0. Now we have the R matrix. We can initialize the number of latent features, but the number of these features must be less than or equal to the number of original features.

Now let us predict all the missing ratings. Let’s take K=20, alpha=0.001, beta=0.01 and iterations=100.

mf = MF(R, K=20, alpha=0.001, beta=0.01, iterations=100)

training_process = mf.train()

print()

print("P x Q:")

print(mf.full_matrix())

print()

This will give us the error value corresponding to every 20th iteration and finally the complete user-movie rating matrix. The output looks like this:

We have created our recommendation engine. Let’s focus on how to evaluate a recommendation engine in the next section.

8. Evaluation metrics for recommendation engines

For evaluating recommendation engines, we can use the following metrics

8.1 Recall:

- What proportion of items that a user likes were actually recommended

- It is given by:

-

- Here tp represents the number of items recommended to a user that he/she likes and tp+fnrepresents the total items that a user likes

- If a user likes 5 items and the recommendation engine decided to show 3 of them, then the recall will be 0.6

- Larger the recall, better are the recommendations

8.2 Precision:

-

- Out of all the recommended items, how many did the user actually like?

- It is given by:

-

- Here tp represents the number of items recommended to a user that he/she likes and tp+fprepresents the total items recommended to a user

- If 5 items were recommended to the user out of which he liked 4, then precision will be 0.8

- Larger the precision, better the recommendations

- But consider this case: If we simply recommend all the items, they will definitely cover the items which the user likes. So we have 100% recall! But think about precision for a second. If we recommend say 1000 items and user likes only 10 of them, then precision is 0.1%. This is really low. So, our aim should be to maximize both precision and recall.

8.3 RMSE (Root Mean Squared Error):

- It measures the error in the predicted ratings:

-

- Here, Predicted is the rating predicted by the model and Actual is the original rating

- If a user has given a rating of 5 to a movie and we predicted the rating as 4, then RMSE is 1

- Lesser the RMSE value, better the recommendations

The above metrics tell us how accurate our recommendations are but they do not focus on the order of recommendations, i.e. they do not focus on which product to recommend first and what follows after that. We need some metric that also considers the order of the products recommended. So, let’s look at some of the ranking metrics:

8.4 Mean Reciprocal Rank:

- Evaluates the list of recommendations

-

- Suppose we have recommended 3 movies to a user, say A, B, C in the given order, but the user only liked movie C. As the rank of movie C is 3, the reciprocal rank will be 1/3

- Larger the mean reciprocal rank, better the recommendations

8.5 MAP at k (Mean Average Precision at cutoff k):

- Precision and Recall don’t care about ordering in the recommendations

- Precision at cutoff k is the precision calculated by considering only the subset of your recommendations from rank 1 through k

-

- Suppose we have made three recommendations [0, 1, 1]. Here 0 means the recommendation is not correct while 1 means that the recommendation is correct. Then the precision at k will be [0, 1/2, 2/3], and the average precision will be (1/3)*(0+1/2+2/3) = 0.38

- Larger the mean average precision, more correct will be the recommendations

8.6 NDCG (Normalized Discounted Cumulative Gain):

- The main difference between MAP and NDCG is that MAP assumes that an item is either of interest (or not), while NDCG gives the relevance score

- Let us understand it with an example: suppose out of 10 movies – A to J, we can recommend the first five movies, i.e. A, B, C, D and E while we must not recommend the other 5 movies, i.e., F, G, H, I and J. The recommendation was [A,B,C,D]. So the NDCG in this case will be 1 as the recommended products are relevant for the user

- Higher the NDCG value, better the recommendations

9. What else can be tried?

Up to this point we have learnt what is a recommendation engine, its different types and their workings. Both content-based filtering and collaborative filtering algorithms have their strengths and weaknesses.

In some domains, generating a useful description of the content can be very difficult. A content-based filtering model will not select items if the user’s previous behavior does not provide evidence for this. Additional techniques have to be used so that the system can make suggestions outside the scope of what the user has already shown an interest in.

A collaborative filtering model doesn’t have these shortcomings. Because there is no need for a description of the items being recommended, the system can deal with any kind of information. Furthermore, it can recommend products which the user has not shown an interest in previously. But, collaborative filtering cannot provide recommendations for new items if there are no user ratings upon which to base a prediction. Even if users start rating the item, it will take some time before the item has received enough ratings in order to make accurate recommendations.

A system that combines content-based filtering and collaborative filtering could potentially take advantage from both the representation of the content as well as the similarities among users. One approach to combine collaborative and content-based filtering is to make predictions based on a weighted average of the content-based recommendations and the collaborative recommendations. Various means of doing so are:

- Combining item scores

- In this approach, we combine the ratings obtained from both the filtering methods. The simplest way is to take the average of the ratings

- Suppose one method suggested a rating of 4 for a movie while the other suggested a rating of 5 for the same movie. So the final recommendation will be the average of both ratings, i.e. 4.5

- We can assign different weights to different methods as well

- Combining item ranks:

- Suppose collaborative filtering recommended 5 movies A, B, C, D and E in the following order: A, B, C, D, E while content based filtering recommended them in the following order: B, D, A, C, E

- The rank for the movies will be:

Collaborative filtering

| Movie | Rank |

| A | 1 |

| B | 0.8 |

| C | 0.6 |

| D | 0.4 |

| E | 0.2 |

Content Based Filtering:

| Movie | Rank |

| B | 1 |

| D | 0.8 |

| A | 0.6 |

| C | 0.4 |

| E | 0.2 |

So, a hybrid recommender engine will combine these ranks and make final recommendations based on the combined rankings. The combined rank will be:

| Movie | New Rank |

| A | 1+0.6 = 1.6 |

| B | 0.8+1 = 1.8 |

| C | 0.6+0.4 = 1 |

| D | 0.4+0.8 = 1.2 |

| E | 0.2+0.2 = 0.4 |

The recommendations will be made based on these rankings. So, the final recommendations will look like this: B, A, D, C, E.

In this way, two or more techniques can be combined to build a hybrid recommendation engine and to improve their overall recommendation accuracy and power.

End Notes

This was a very comprehensive article on recommendation engines. This tutorial should be good enough to get you started with this topic. We not only covered basic recommendation techniques but also saw how to implement some of the more advanced techniques available in the industry today.

We also covered some key facts associated with each technique. As somebody who wants to learn how to make a recommendation engine, I’d advise you to learn the techniques discussed in this tutorial and later implement them in your models.

Did you find this article useful? Share your opinions / views in the comments section below!

Ref:

- 另一篇很好的相关文章推荐 Implementing your own recommender systems in Python

- http://cis.csuohio.edu/~sschung/CIS660/CollaborativeFilteringSuhua.pdf

- https://www.math.uci.edu/icamp/courses/math77b/lecture_12w/pdfs/Chapter%2002%20-%20Collaborative%20recommendation.pdf

- http://wulc.me/2016/02/22/%E3%80%8AProgramming%20Collective%20Intelligence%E3%80%8B%E8%AF%BB%E4%B9%A6%E7%AC%94%E8%AE%B0(2)--%E5%8D%8F%E5%90%8C%E8%BF%87%E6%BB%A4/ 也是好文章,讲到了 Adjusted cosine 和 Pearson correlation的区别

Comprehensive learning path – Data Science in Python深度学习路径-用python进行数据学习

http://blog.csdn.net/pipisorry/article/details/44245575

关于怎么学习python,并将python用于数据科学、数据分析、机器学习中的一篇很好的文章

Comprehensive learning path – Data Science in Python

深度学习路径-用python进行数据学习

Journey from a Pythonnoob(新手) to a Kaggler on Python

So, you want to become a data scientist or may be you are already one and want toexpand(扩张) your toolrepository(贮藏室). You have landed at the right place. The aim of this page is to provide a comprehensive learning path to people new to python for data analysis. This path provides a comprehensiveoverview(综述) of steps you need to learn to use Python for data analysis. If you already have some background, or don’t need all thecomponents(成分), feel free toadapt(适应) your own paths and let us know how you made changes in the path.

Step 0: Warming up

Before starting your journey, the first question to answer is:

Why use Python?

or

How would Python be useful?

Watch the first 30 minutes of this talk from Jeremy, Founder of DataRobot at PyCon 2014, Ukraine to get an idea of how useful Python could be.

Step 1: Setting up your machine

Now that you have made up your mind, it is time to set up your machine. The easiest way toproceed(开始) is to justdownload Anaconda from Continuum.io . It comes packaged with most of the things you will need ever. The majordownside(下降趋势) of taking thisroute(路线) is that you will need to wait for Continuum to update their packages, even when there might be an update available to theunderlying(潜在的) libraries. If you are a starter, that should hardly matter.

If you face any challenges in installing(安装), you can find moredetailed instructions for various OS here

Step 2: Learn the basics of Python language

You should start by understanding the basics of the language, libraries and datastructure(结构). The python track fromCodecademy is one of the best places to start your journey. By end of this course, you should be comfortable writing small scripts on Python, but also understand classes and objects.

Specifically learn: Lists, Tuples, Dictionaries, List comprehensions(理解), Dictionary comprehensions

Assignment: Solve the python tutorial(辅导的) questions on HackerRank. These should get your brain thinking on Python scripting

Alternate resources: If interactive(交互式的) coding is not your style of learning, you can also look at TheGoogle Class for Python. It is a 2 day class series and also covers some of the parts discussed later.

Step 3: Learn Regular Expressions in Python

You will need to use them a lot for data cleansing(净化), especially if you are working on text data. The best way to learn Regular expressions is to go through the Google class and keep this cheat sheet handy.

Assignment: Do the baby names exercise

If you still need more practice, follow this tutorial(个别指导) for text cleaning. It will challenge you on various stepsinvolved(包含) in datawrangling(争论).

Step 4: Learn Scientific libraries in Python – NumPy, SciPy, Matplotlib and Pandas

This is where fun begins! Here is a brief introduction to various libraries. Let’s start practicing some common operations.

- Practice the NumPy tutorial thoroughly, especially NumPy arrays(数组). This will form a goodfoundation(基础) for things to come.

- Next, look at the SciPy tutorials. Go through the introduction and the basics and do the remaining onesbasis(基础) your needs.

- If you guessed Matplotlib tutorials next, you are wrong! They are too comprehensive(综合的) for our need here. Instead look at thisipython notebook till Line 68 (i.e. till animations(活泼))

- Finally, let us look at Pandas. Pandas provide DataFrame functionality(功能) (like R) for Python. This is also where you should spend good time practicing. Pandas would become the mosteffective(有效的) tool for all mid-size data analysis. Start with a short introduction,10 minutes to pandas. Then move on to a more detailedtutorial on pandas.

You can also look at Exploratory(勘探的) Data Analysis with Pandas andData munging with Pandas

Additional Resources:

- If you need a book on Pandas and NumPy, “Python(巨蟒) for Data Analysis by Wes McKinney”

- There are a lot of tutorials(个别指导) as part of Pandasdocumentation(文件材料). You can have a look at themhere

Assignment: Solve this assignment(分配) from CS109 course from Harvard.

Step 5: Effective Data Visualization

Go through this lecture form CS109. You can ignore(驳回诉讼) the initial 2 minutes, but what follows after that isawesome(可怕的)! Follow this lecture up withthis assignment

Step 6: Learn Scikit-learn and Machine Learning

Now, we come to the meat of this entire process. Scikit-learn is the most useful library onpython(巨蟒) for machine learning. Here is abriefoverview(综述) of the library. Go through lecture 10 to lecture 18 fromCS109 course from Harvard. You will go through an overview of machine learning, Supervised learningalgorithms(算法) likeregressions(回归), decision trees,ensemble(全体) modeling and non-supervised learning algorithms likeclustering(聚集). Followindividual(个人的) lectures with theassignments from those lectures.

Additional Resources:

- If there is one book, you must read, it is Programming Collective Intelligence – a classic(经典的), but still one of the best books on the subject.

- Additionally(附加的), you can also follow one of the best courses onMachine Learning course from Yaser Abu-Mostafa. If you need more lucid(明晰的) explanation for the techniques, you can opt for theMachine learning course from Andrew Ng and follow the exercises on Python.

- Tutorials(个别指导) on Scikit learn

Assignment: Try out this challenge on Kaggle

Step 7: Practice, practice and Practice

Congratulations, you made it!

You now have all what you need in technical skills. It is a matter of practice and what better place to practice than compete with fellow Data Scientists on Kaggle. Go, dive into one of the live competitions currently running onKaggle and give all what you have learnt a try!

Step 8: Deep Learning

Now that you have learnt most of machine learning techniques, it is time to give Deep Learning a shot. There is a good chance that you already know what is Deep Learning, but if you still need a briefintro(介绍),here it is.

I am myself new to deep learning, so please take these suggestions with apinch(匮乏) of salt. The mostcomprehensive(综合的) resource isdeeplearning.net. You will find everything here – lectures, datasets, challenges, tutorials. You can also try thecourse from Geoff Hinton a try in a bid to understand the basics of Neural Networks.

P.S. In case you need to use Big Data libraries, give Pydoop and PyMongo a try. They are not included here as Big Data learning path is an entire topic in itself.

from: http://blog.csdn.net/pipisorry/article/details/44245575ref:http://www.analyticsvidhya.com/learning-paths-data-science-business-analytics-business-intelligence-big-data/learning-path-data-science-python/

全中文

HeadFirstPython 学习笔记(0)--list comprehension(列表推导)

clean_james = []

clean_sarah = []

clean_julie = []

clean_mikey = []

for each_time in james:

clean_james.append(sanitize(each_time))

for each_time in sarah:

clean_sarah.append(sanitize(each_time))

for each_time in julie:

clean_julie.append(sanitize(each_time))

for each_time in mikey:

clean_mikey.append(sanitize(each_time))

#使用代码推导,可以替换为:

clean_james = [sanitize(each_time) for each_time in james]

clean_sarah = [sanitize(each_time) for each_time in sarah]

clean_julie = [sanitize(each_time) for each_time in julie]

clean_mikey = [sanitize(each_time) for each_time in mikey]

#其它例子:

Start by transforming a list of minutes into a list of seconds:

>>> mins = [1, 2, 3]

>>> secs = [m * 60 for m in mins]

>>> secs

[60, 120, 180]

How about meters into feet?

>>> meters = [1, 10, 3]

>>> feet = [m * 3.281 for m in meters]

>>> feet

[3.281, 32.81, 9.843]

Given a list of strings in mixed and lowercase, it’s a breeze to transform the strings to UPPERCASE:

>>> lower = ["I", "don''t", "like", "spam"]

>>> upper = [s.upper() for s in lower]

>>> upper

[''I'', "DON''T", ''LIKE'', ''SPAM'']

Let’s use your sanitize() function to transform some list data into correctly formatted times:

>>> dirty = [''2-22'', ''2:22'', ''2.22'']

>>> clean = [sanitize(t) for t in dirty]

>>> clean

[''2.22'', ''2.22'', ''2.22'']

It’s also possible to assign the results of the list transformation back onto the original target identifier. This

example transforms a list of strings into floating point numbers, and then replaces the original list data:

>>> clean = [float(s) for s in clean]

>>> clean

[2.22, 2.22, 2.22]

And, of course, the transformation can be a function chain, if that’s what you need:

>>> clean = [float(sanitize(t)) for t in [''2-22'', ''3:33'', ''4.44'']]

>>> clean

[2.22, 3.33, 4.44]今天的关于Python中的 List Comprehension 以及 Generator和list.reverse. python的分享已经结束,谢谢您的关注,如果想了解更多关于Attention-over-Attention Neural Networks for Reading Comprehension论文总结、Comprehensive Guide to build a Recommendation Engine from scratch (in Python) / 从0开始搭建推荐系统、Comprehensive learning path – Data Science in Python深度学习路径-用python进行数据学习、HeadFirstPython 学习笔记(0)--list comprehension(列表推导)的相关知识,请在本站进行查询。

本文标签:

![[转帖]Ubuntu 安装 Wine方法(ubuntu如何安装wine)](https://www.gvkun.com/zb_users/cache/thumbs/4c83df0e2303284d68480d1b1378581d-180-120-1.jpg)