This article looks at the error "[simple type, class io.druid.indexing.kafka.supervisor.KafkaSupervisorTuningConfig] value failed:" and also collects notes on java.lang.NoClassDefFoundError: org/apache/spark/streaming/kafka/KafkaUtils, Caused by: java.lang.ClassNotFoundException: org.apache.spark.streaming.kafka.KafkaUtils, CDH-Kafka-SparkStreaming exception: org.apache.kafka.clients.consumer.KafkaConsumer.subscribe(Ljava/uti, and Django notes: production deployment with gunicorn+nginx+supervisor c&b supervisor store supervisor piping superviso.

Contents:

- [simple type, class io.druid.indexing.kafka.supervisor.KafkaSupervisorTuningConfig] value failed:

- java.lang.NoClassDefFoundError: org/apache/spark/streaming/kafka/KafkaUtils

- Caused by: java.lang.ClassNotFoundException: org.apache.spark.streaming.kafka.KafkaUtils

- CDH-Kafka-SparkStreaming exception: org.apache.kafka.clients.consumer.KafkaConsumer.subscribe(Ljava/uti

- Django notes: production deployment with gunicorn+nginx+supervisor c&b supervisor store supervisor piping superviso


[simple type, class io.druid.indexing.kafka.supervisor.KafkaSupervisorTuningConfig] value failed:

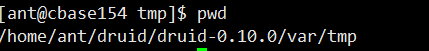

"error": "Instantiation of [simple type, class io.druid.indexing.kafka.supervisor.KafkaSupervisorTuningConfig] value failed: Failed to create directory within 10000 attempts (tried 1508304116732-0 to 1508304116732-9999)"

This exception occurs because the tmp directory has not been created under var/.

To fix it, create the tmp directory.
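The message "Failed to create directory within 10000 attempts" appears to match what Guava's `Files.createTempDir()` raises when the JVM's temporary directory (`java.io.tmpdir`) is missing or not writable. As a minimal sketch, assuming Druid is started from its install directory with a temp dir of `var/tmp` (an assumption based on the fix above; check your own `jvm.config`), the directory can be created before the services start. The function name `ensure_tmp_dir` is hypothetical.

```python
import os


def ensure_tmp_dir(druid_home):
    """Create <druid_home>/var/tmp if it is missing and return its path."""
    tmp_dir = os.path.join(druid_home, "var", "tmp")
    # exist_ok=True makes the call safe to repeat on every startup.
    os.makedirs(tmp_dir, exist_ok=True)
    return tmp_dir
```

Calling `ensure_tmp_dir` with the Druid install directory before launching Druid is enough; the call does nothing if the directory already exists.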

java.lang.NoClassDefFoundError: org/apache/spark/streaming/kafka/KafkaUtils

WARN streaming.StreamingContext: spark.master should be set as local[n], n > 1 in local mode if you have receivers to get data, otherwise Spark jobs will not get resources to process the received data.

java.lang.NoClassDefFoundError: org/apache/spark/streaming/kafka/KafkaUtils

at org.apache.spark.examples.streaming.JavaKafkaWordCount.main(JavaKafkaWordCount.java:95)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:738)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:187)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:212)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:126)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Caused by: java.lang.ClassNotFoundException: org.apache.spark.streaming.kafka.KafkaUtils

at java.net.URLClassLoader.findClass(URLClassLoader.java:381)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 10 more

17/06/29 18:33:37 INFO spark.SparkContext: Invoking stop() from shutdown hook

17/06/29 18:33:37 INFO server.ServerConnector: Stopped ServerConnector@2a76b80a{HTTP/1.1}{0.0.0.0:4040}

17/06/29 18:33:37 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@1601e47{/stages/stage/kill,null,UN

Caused by: java.lang.ClassNotFoundException: org.apache.spark.streaming.kafka.KafkaUtils

Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 37493.

17/06/29 18:10:40 INFO netty.NettyBlockTransferService: Server created on 192.168.8.29:37493

17/06/29 18:10:40 INFO storage.BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

17/06/29 18:10:40 INFO storage.BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 192.168.8.29, 37493, None)

17/06/29 18:10:40 INFO storage.BlockManagerMasterEndpoint: Registering block manager 192.168.8.29:37493 with 912.3 MB RAM, BlockManagerId(driver, 192.168.8.29, 37493, None)

17/06/29 18:10:40 INFO storage.BlockManagerMaster: Registered BlockManager BlockManagerId(driver, 192.168.8.29, 37493, None)

17/06/29 18:10:40 INFO storage.BlockManager: Initialized BlockManager: BlockManagerId(driver, 192.168.8.29, 37493, None)

17/06/29 18:10:40 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4aeaadc1{/metrics/json,null,AVAILABLE}

17/06/29 18:10:40 WARN streaming.StreamingContext: spark.master should be set as local[n], n > 1 in local mode if you have receivers to get data, otherwise Spark jobs will not get resources to process the received data.

Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/spark/streaming/kafka/KafkaUtils

at org.apache.spark.examples.streaming.JavaKafkaWordCount.main(JavaKafkaWordCount.java:95)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:738)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:187)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:212)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:126)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Caused by: java.lang.ClassNotFoundException: org.apache.spark.streaming.kafka.KafkaUtils

at java.net.URLClassLoader.findClass(URLClassLoader.java:381)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 10 more

17/06/29 18:10:40 INFO spark.SparkContext: Invoking stop() from shutdown hook

17/06/29 18:10:40 INFO server.ServerConnector: Stopped ServerConnector@2a76b80a{HTTP/1.1}{0.0.0.0:4040}

17/06/29 18:10:40 INFO handler.ContextHandler: Stopped o.s.j.s.ServletContextHandler@1601e47{/stages/stage/kill,null,UNAVAILABLE}
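A `ClassNotFoundException` like the one in the traces above means that no jar on the driver's classpath contains `org.apache.spark.streaming.kafka.KafkaUtils`. That class is not part of Spark core; it normally comes from a separate Spark Streaming Kafka integration jar that has to be supplied to `spark-submit` (for example with `--jars`). As a rough diagnostic sketch, the helper below scans a list of jars for the class file. The function name `jars_containing` is hypothetical, and which jars to scan depends on your installation.

```python
import zipfile


def jars_containing(class_name, jar_paths):
    """Return the jars from jar_paths that contain the given class."""
    # A class a.b.C is stored in a jar as the entry a/b/C.class.
    entry = class_name.replace(".", "/") + ".class"
    matches = []
    for path in jar_paths:
        with zipfile.ZipFile(path) as jar:
            if entry in jar.namelist():
                matches.append(path)
    return matches
```

If the result is empty for every jar passed to the job, that would be consistent with the error shown above.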

CDH-Kafka-SparkStreaming exception: org.apache.kafka.clients.consumer.KafkaConsumer.subscribe(Ljava/uti

Reference article:

Cluster error after integrating Flume, Kafka, and Spark Streaming: org.apache.kafka.clients.consumer.KafkaConsumer.subscribe(Ljava/ut

https://blog.csdn.net/u010936936/article/details/77247075?locationNum=2&fps=1

I recently ran into a problem when submitting a Kafka-Spark Streaming job in a CDH environment, and I am recording it here.

The main error message was:

Exception in thread "streaming-start" java.lang.NoSuchMethodError: org.apache.kafka.clients.consumer.KafkaConsumer.subscribe(Ljava/util/Collection;


Just before the error, the log shows that the kafka-client in use is version 0.9.0:

18/11/01 16:48:04 INFO AppInfoParser: Kafka version : 0.9.0.0

18/11/01 16:48:04 INFO AppInfoParser: Kafka commitId : cb8625948210849f

However, the kafka-client bundled in our application package is a newer version than this.
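The mismatch can be confirmed from the driver log itself. As a minimal sketch, the helpers below pull the version reported by `AppInfoParser` out of log text in the format shown above and turn it into a tuple for comparison against the version you packaged. The names `kafka_client_versions` and `version_tuple` are hypothetical.

```python
import re

_VERSION_LINE = re.compile(r"AppInfoParser: Kafka version\s*:\s*(\S+)")


def kafka_client_versions(log_text):
    """Return every Kafka client version reported by AppInfoParser, in order."""
    return _VERSION_LINE.findall(log_text)


def version_tuple(version):
    """Turn '0.9.0.0' into (0, 9, 0, 0); any non-numeric suffix is ignored."""
    numeric = re.match(r"\d+(?:\.\d+)*", version)
    if numeric is None:
        raise ValueError("not a dotted version: %r" % version)
    return tuple(int(part) for part in numeric.group(0).split("."))
```

A reported version that compares lower than the packaged one suggests the cluster's older client jar is taking precedence on the classpath, which is what a `NoSuchMethodError` on `KafkaConsumer.subscribe` points to.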

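Root cause analysis

This is actually covered in the official documentation, at the following address:

https://www.cloudera.com/documentation/spark2/latest/topics/spark2_kafka.html#running_jobs

In short, integrating Kafka with Spark 2 requires a setting in CDH. The official documentation describes two methods; here I used the second one, changing the configuration in CDH.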

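The steps are as follows:

1. Open the Spark 2 configuration page in CDH and enter SPARK_KAFKA_VERSION in the search box, which brings up the corresponding setting.

2. Select the matching version. In my case the right choice was None,

that is, use the Kafka-client version uploaded to the cluster.

3. Save the configuration and restart for it to take effect.

4. Rerun the Spark Streaming job; the problem is resolved.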
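The steps above use the Cloudera Manager route. The other method is not shown in this article; the sketch below assumes it consists of setting the `SPARK_KAFKA_VERSION` environment variable for the process that launches the job, so check the Cloudera page linked above before relying on it. The function name `spark_submit_env`, the value `0.10`, and the class and jar names in the commented usage are all placeholders.

```python
import os


def spark_submit_env(kafka_version, base_env=None):
    """Return a copy of the environment with SPARK_KAFKA_VERSION set."""
    env = dict(os.environ if base_env is None else base_env)
    env["SPARK_KAFKA_VERSION"] = kafka_version
    return env


# Hypothetical usage; the class and jar names are placeholders:
# import subprocess
# subprocess.run(
#     ["spark2-submit", "--class", "com.example.StreamingJob", "job.jar"],
#     env=spark_submit_env("0.10"),
#     check=True,
# )
```

Building a copy of the environment keeps the setting scoped to the one job instead of changing it for the whole shell or cluster.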

Django notes: production deployment with gunicorn+nginx+supervisor c&b supervisor store supervisor piping superviso
