
Recently many readers have been asking about My Algorithm to Calculate Position of Smartphone - GPS and Sensors, so this article answers that question in detail. It also covers these related topics: (Pixel2PixelGANs)Image-to-Image translation with conditional adversarial networks, A Benchmark Comparison of Monocular Visual-Inertial Odometry Algorithms for Flying Robots (paper notes), Algorithm - Sorting a partially sorted List, and Algorithms (Princeton) key points to memorize - Analysis of Algorithms. Let's get started!

Contents:

- My Algorithm to Calculate Position of Smartphone - GPS and Sensors

- (Pixel2PixelGANs)Image-to-Image translation with conditional adversarial networks

- A Benchmark Comparison of Monocular Visual-Inertial Odometry Algorithms for Flying Robots (paper notes)

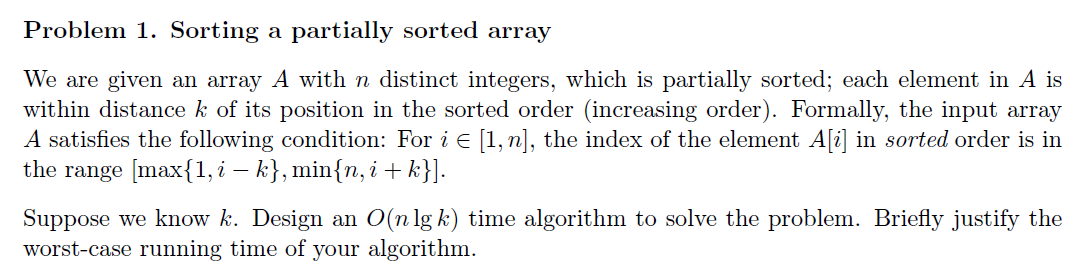

- Algorithm - Sorting a partially sorted List

- Algorithms (Princeton) key points to memorize - Analysis of Algorithms

My Algorithm to Calculate Position of Smartphone - GPS and Sensors

I am developing an Android application that calculates position from sensor data:

- Accelerometer -> calculate linear acceleration

- Magnetometer + Accelerometer -> direction of movement

The initial position is taken from GPS (latitude + longitude).

Based on the sensor readings, I now need to calculate the new position of the smartphone.

My algorithm is below, but it does not calculate an accurate position. Please help me improve it.

Note: my algorithm code is in C#. I send the sensor data to a server, store it in a database there, and calculate the position on the server.

All DateTime objects have been calculated from timestamps (counted from 01-01-1970).

```csharp
var prevLocation = ServerHandler.getLatestPosition(IMEI);
var newLocation = new ReceivedDataDTO()
{
    LocationDataDto = new LocationDataDTO(),
    UsersDto = new UsersDTO(),
    DeviceDto = new DeviceDTO(),
    SensorDataDto = new SensorDataDTO()
};

// First reading
if (prevLocation.Latitude == null)
{
    // Save GPS readings
    newLocation.LocationDataDto.DeviceId = ServerHandler.GetDeviceIdByIMEI(IMEI);
    newLocation.LocationDataDto.Latitude = Latitude;
    newLocation.LocationDataDto.Longitude = Longitude;
    newLocation.LocationDataDto.Acceleration = float.Parse(currentAcceleration);
    newLocation.LocationDataDto.Direction = float.Parse(currentDirection);
    newLocation.LocationDataDto.Speed = (float) 0.0;
    newLocation.LocationDataDto.ReadingDateTime = date;
    newLocation.DeviceDto.IMEI = IMEI;
    // Saving to database
    ServerHandler.SaveReceivedData(newLocation);
    return;
}

// If previous position is not NULL --> calculate new position
// Algorithm starts HERE
var oldLatitude = Double.Parse(prevLocation.Latitude);
var oldLongitude = Double.Parse(prevLocation.Longitude);
var direction = Double.Parse(currentDirection);
Double initialVelocity = prevLocation.Speed;

// Get current time to calculate time travelling - in seconds
var secondsTravelling = date - tripStartTime;
var t = secondsTravelling.TotalSeconds;

// Calculate distance using physics formula, s = Vi * t + 0.5 * a * t^2
// distanceTravelled = initialVelocity * timeTravelling + 0.5 * currentAcceleration * timeTravelling * timeTravelling;
var distanceTravelled = initialVelocity * t + 0.5 * Double.Parse(currentAcceleration) * t * t;

// Calculate the final velocity / speed of the device.
// This final velocity is the initial velocity of the next reading
// Physics formula: Vf = Vi + a * t
var finalvelocity = initialVelocity + Double.Parse(currentAcceleration) * t;

// Convert from degrees to radians (for formula)
oldLatitude = Math.PI * oldLatitude / 180;
oldLongitude = Math.PI * oldLongitude / 180;
direction = Math.PI * direction / 180.0;

// Calculate the new longitude and latitude
var newLatitude = Math.Asin(Math.Sin(oldLatitude) * Math.Cos(distanceTravelled / earthRadius) +
                            Math.Cos(oldLatitude) * Math.Sin(distanceTravelled / earthRadius) * Math.Cos(direction));
var newLongitude = oldLongitude + Math.Atan2(Math.Sin(direction) * Math.Sin(distanceTravelled / earthRadius) * Math.Cos(oldLatitude),
                                             Math.Cos(distanceTravelled / earthRadius) - Math.Sin(oldLatitude) * Math.Sin(newLatitude));

// Convert from radians to degrees/decimal
newLatitude = 180 * newLatitude / Math.PI;
newLongitude = 180 * newLongitude / Math.PI;
```

This is the result I get -> the phone was not moving. As you can see, the speed is 27.3263111114502, so something is wrong in the speed calculation, but I don't know what.
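For comparison, here is a minimal Java sketch (not the asker's code) of one dead-reckoning step. The last lines of the C# above are the standard great-circle destination-point formula. In this sketch the kinematic formulas use the time elapsed since the *previous* reading rather than since `tripStartTime`. The class and method names are hypothetical, and the mean Earth radius of 6,371,000 m is an assumed value. On its own, this does not cure the gravity and drift problems discussed in the answers below.

```java
// Hypothetical helper class: one dead-reckoning step on a spherical Earth.
final class DeadReckoningStep {
    // Assumed mean Earth radius in metres.
    static final double EARTH_RADIUS_M = 6_371_000.0;

    // Distance covered during dtSeconds (time since the PREVIOUS reading) at constant acceleration a.
    static double distance(double v0, double a, double dtSeconds) {
        return v0 * dtSeconds + 0.5 * a * dtSeconds * dtSeconds;
    }

    // Speed at the end of the interval; it becomes v0 for the next reading.
    static double speed(double v0, double a, double dtSeconds) {
        return v0 + a * dtSeconds;
    }

    // Great-circle destination point: returns {latDeg, lonDeg} after travelling
    // distanceM metres along bearingDeg (0 = north, 90 = east).
    static double[] destination(double latDeg, double lonDeg, double bearingDeg, double distanceM) {
        double lat1 = Math.toRadians(latDeg);
        double lon1 = Math.toRadians(lonDeg);
        double brg = Math.toRadians(bearingDeg);
        double delta = distanceM / EARTH_RADIUS_M; // angular distance [rad]
        double lat2 = Math.asin(Math.sin(lat1) * Math.cos(delta)
                + Math.cos(lat1) * Math.sin(delta) * Math.cos(brg));
        double lon2 = lon1 + Math.atan2(Math.sin(brg) * Math.sin(delta) * Math.cos(lat1),
                Math.cos(delta) - Math.sin(lat1) * Math.sin(lat2));
        return new double[] {Math.toDegrees(lat2), Math.toDegrees(lon2)};
    }

    public static void main(String[] args) {
        // Start at rest, accelerate at 1 m/s^2 heading east for one 0.5 s interval.
        double dt = 0.5;
        double s = distance(0.0, 1.0, dt); // 0.125 m
        double v = speed(0.0, 1.0, dt);    // 0.5 m/s, carried over to the next reading
        double[] p = destination(0.0, 0.0, 90.0, s);
        System.out.printf("s=%.3f m, v=%.2f m/s, lat=%.9f, lon=%.9f%n", s, v, p[0], p[1]);
    }
}
```

The point of the design is that each reading only advances the state by its own interval. Reusing the whole trip duration together with the carried-over speed double counts motion at every step.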

ANSWER:

I found a solution to calculate the position based on the sensors; I have posted an answer below.

If you need any help, please leave a comment.

These are the results compared to GPS (note: GPS is in red).

Answer 1

As some of you mentioned, you got the equations wrong, but that is just a part of the error.

Newton-D'Alembert physics for non-relativistic speeds dictates this:

```cpp
// init values
double ax = 0.0, ay = 0.0, az = 0.0; // acceleration [m/s^2]
double vx = 0.0, vy = 0.0, vz = 0.0; // velocity [m/s]
double x = 0.0, y = 0.0, z = 0.0;    // position [m]

// iteration inside some timer (dt [seconds] period) ...
//   ax, ay, az = accelerometer values
vx += ax * dt; // update speed via integration of acceleration
vy += ay * dt;
vz += az * dt;
x += vx * dt;  // update position via integration of velocity
y += vy * dt;
z += vz * dt;
```

The sensor can rotate, so the direction must be applied:

```cpp
// init values
double gx = 0.0, gy = -9.81, gz = 0.0; // [edit1] background gravity in map coordinate system [m/s^2]
double ax = 0.0, ay = 0.0, az = 0.0;   // acceleration [m/s^2]
double vx = 0.0, vy = 0.0, vz = 0.0;   // velocity [m/s]
double x = 0.0, y = 0.0, z = 0.0;      // position [m]
double dev[9]; // actual device transform matrix ... local coordinate system
// (x, y, z) <- GPS position;

// iteration inside some timer (dt [seconds] period) ...
//   dev <- compass direction
//   ax, ay, az = accelerometer values (measured in device space)
//   (ax, ay, az) = dev(ax, ay, az); // transform acceleration from device space to global map space
//                                   // without any translation to preserve vector magnitude
// [edit1] remove background gravity (in map coordinate system).
// At rest an Android-style accelerometer reads +9.81 m/s^2 upward (reading = a - g),
// so adding g = (0, -9.81, 0) cancels it.
ax += gx;
ay += gy;
az += gz;
vx += ax * dt; // update speed (in map coordinate system)
vy += ay * dt;
vz += az * dt;
x += vx * dt;  // update position (in map coordinate system)
y += vy * dt;
z += vz * dt;
```

- gx, gy, gz is the global gravity vector (~9.81 m/s^2 on Earth). In my code the global Y axis points up, so gy = -9.81 and the rest are 0.0.

- Measurement timings are critical. The accelerometer must be checked as often as possible (a second is a very long time). I recommend a timer period of no more than 10 ms to preserve accuracy. From time to time you should also override the calculated position with the GPS value. The compass direction can be checked less often, but it needs proper filtering.

- The compass is not correct all the time. Compass values should be filtered for peak values: sometimes it reads bad values, and it can also be thrown off by electromagnetic pollution or a metal environment. In that case the direction can be checked by GPS during movement and corrected. For example, check GPS every minute and compare the GPS direction with the compass. If the compass is consistently off by some angle, add or subtract that offset.

- Why do simple computations on the server??? I hate wasting online traffic. Yes, you can log data on the server (though I still think a file on the device would be better), but why the heck limit the positioning functionality by an internet connection??? Not to mention the delays...
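Here is a Java sketch of the loop above for a single map frame with the Y axis up. It rests on several assumptions. The device-to-map rotation `rot` (the answer's `dev`) is taken as given and row-major; obtaining it from the compass or a sensor-fusion API is outside the sketch. The accelerometer follows the Android convention, reading about +9.81 m/s² upward at rest, so gravity is removed by adding the downward vector g = (0, -9.81, 0). All names are hypothetical.

```java
// Hypothetical Java rendering of the answer's loop for one map frame with the Y axis up.
final class InertialIntegrator {
    // Gravity vector in the map frame, as in the answer: gy = -9.81, the rest 0.
    static final double[] G = {0.0, -9.81, 0.0};

    final double[] v = new double[3]; // velocity [m/s]
    final double[] p = new double[3]; // position [m]

    // One timer tick (keep dt small, e.g. <= 0.01 s).
    // rot: row-major 3x3 device-to-map rotation, assumed to come from elsewhere.
    // accDevice: raw accelerometer reading in device space [m/s^2].
    void step(double[] rot, double[] accDevice, double dt) {
        double[] a = new double[3];
        for (int r = 0; r < 3; r++) {
            // Rotation only, no translation, so the vector keeps its magnitude.
            a[r] = rot[3 * r] * accDevice[0]
                    + rot[3 * r + 1] * accDevice[1]
                    + rot[3 * r + 2] * accDevice[2];
            // Assumed Android convention: reading = a - g, so adding g leaves the kinematic acceleration.
            a[r] += G[r];
        }
        for (int i = 0; i < 3; i++) {
            v[i] += a[i] * dt; // integrate acceleration -> velocity
            p[i] += v[i] * dt; // integrate velocity -> position
        }
    }

    // Periodically override the dead-reckoned position with a GPS fix (already converted to map metres).
    void resetPosition(double x, double y, double z) {
        p[0] = x;
        p[1] = y;
        p[2] = z;
    }
}
```

Converting GPS latitude/longitude into these map metres (for example through a local east-north-up frame) is deliberately left out.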

[Edit 1] additional notes

I edited the code above a little. The orientation must be as precise as possible to minimize cumulative errors.

Gyros would be better than a compass (or, even better, use both).

Acceleration should be filtered; some low-pass filtering should be OK. After gravity removal I would limit ax, ay, az to usable values and throw away values that are too small. Near low speed, also do a full stop (unless it is a train or motion in a vacuum). That should lower the drift but increase other errors, so a compromise has to be found between them.
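The filtering advice above could be sketched per axis as follows. The exponential moving average is only one possible low-pass filter. `alpha`, the dead-zone threshold, the clamp limit and `minSpeed` are placeholder parameters to tune; they are not numbers from the answer. The names are hypothetical.

```java
// Hypothetical helper: conditions one axis of gravity-free acceleration as the notes suggest.
final class AccelFilter {
    private final double alpha;    // low-pass smoothing factor in (0, 1]; smaller = smoother (assumed value)
    private final double deadZone; // |a| below this is treated as 0 [m/s^2] (assumed threshold)
    private final double maxAbs;   // clamp to a "usable" range [m/s^2] (assumed limit)
    private double state;          // low-pass filter memory
    private boolean primed;        // false until the first sample arrives

    AccelFilter(double alpha, double deadZone, double maxAbs) {
        this.alpha = alpha;
        this.deadZone = deadZone;
        this.maxAbs = maxAbs;
    }

    // First-order low-pass (exponential moving average), then dead zone, then clamp.
    double apply(double a) {
        state = primed ? state + alpha * (a - state) : a;
        primed = true;
        if (Math.abs(state) < deadZone) {
            return 0.0; // throw away values that are too small
        }
        return Math.max(-maxAbs, Math.min(maxAbs, state));
    }

    // "Full stop" near low speed: snap a small velocity component to zero to limit drift.
    static double zeroSmallSpeed(double v, double minSpeed) {
        return Math.abs(v) < minSpeed ? 0.0 : v;
    }
}
```

Raising `deadZone` or `minSpeed` reduces drift but hides slow real motion, which is exactly the compromise the note describes.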

Add calibration on the fly. When the filtered acceleration magnitude (before gravity removal) is 9.81 or very close to it, the device is probably standing still (unless it is a flying machine). The orientation/direction can then be corrected using the actual gravity direction.
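One way to sketch the stand-still check, assuming the Android sign convention and an arbitrary tolerance `tol` (names hypothetical). A reading whose magnitude is close to 9.81 also occurs during steady straight-line motion, so the result only means "probably still".

```java
// Hypothetical helper for "calibration on the fly".
final class StillDetector {
    static final double G0 = 9.81; // gravity magnitude used in the answer [m/s^2]

    // True if the filtered accelerometer reading (gravity NOT removed) has magnitude within tol of 9.81.
    static boolean probablyStill(double ax, double ay, double az, double tol) {
        double mag = Math.sqrt(ax * ax + ay * ay + az * az);
        return Math.abs(mag - G0) <= tol;
    }

    // Unit vector along the reading. At rest an Android-style accelerometer points it "up",
    // opposite to gravity, so it can be used to correct the tilt part of the orientation.
    static double[] upDirection(double ax, double ay, double az) {
        double mag = Math.sqrt(ax * ax + ay * ay + az * az);
        return new double[] {ax / mag, ay / mag, az / mag};
    }
}
```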

(Pixel2PixelGANs)Image-to-Image translation with conditional adversarial networks

Introduction

1. Develop a common framework for all problems whose task is predicting pixels from pixels.

2. CNNs learn to minimize a loss function (an objective that scores the quality of results). Although the learning process is automatic, a lot of manual effort still goes into designing effective losses.

3. If the CNN is asked to minimize the Euclidean (L2) distance between predicted and ground-truth pixels, it will tend to produce blurry results.

Why? Because the L2 distance is minimized by averaging all plausible outputs, which causes blurring.

4. GANs learn a loss that tries to classify whether the output image is real or fake. Blurry images will not be tolerated because they look obviously fake!

5. They apply cGANs, which suit image-to-image translation tasks: they condition on an input image and generate a corresponding output image.
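To see why averaging blurs, consider a single pixel whose plausible ground-truth values disagree. The toy Java sketch below finds, by brute force, the constant prediction that minimizes each loss; the class and method names are hypothetical. L2 lands on the mean, a grey blend that matches none of the plausible outputs. L1 lands on the median, which is one reason the method section prefers L1.

```java
// Toy, single-pixel illustration: the best constant prediction under L2 vs L1.
final class BlurDemo {
    // Mean squared error of predicting p for every target.
    static double l2(double p, double[] targets) {
        double sum = 0.0;
        for (double t : targets) sum += (p - t) * (p - t);
        return sum / targets.length;
    }

    // Mean absolute error of predicting p for every target.
    static double l1(double p, double[] targets) {
        double sum = 0.0;
        for (double t : targets) sum += Math.abs(p - t);
        return sum / targets.length;
    }

    // Brute-force minimizer over the grid 0.000, 0.001, ..., 1.000.
    static double argmin(boolean useL2, double[] targets) {
        double best = 0.0;
        double bestLoss = Double.POSITIVE_INFINITY;
        for (int i = 0; i <= 1000; i++) {
            double p = i / 1000.0;
            double loss = useL2 ? l2(p, targets) : l1(p, targets);
            if (loss < bestLoss) {
                bestLoss = loss;
                best = p;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // A pixel that is black (0) in one plausible output and white (1) in three others.
        double[] targets = {0.0, 1.0, 1.0, 1.0};
        System.out.println("L2 optimum: " + argmin(true, targets));  // the mean, a grey blend
        System.out.println("L1 optimum: " + argmin(false, targets)); // the median
    }
}
```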

Related work

1. Image-to-image translation problems are formulated as per-pixel classification or regression. However, these formulations treat the output space as "unstructured": each output pixel is considered conditionally independent from all others given the input image (independence!).

2. Conditional GANs learn a structured loss.

3. cGANs differ in that the loss is learned and can, in theory, penalize any possible structure that differs between output and target.

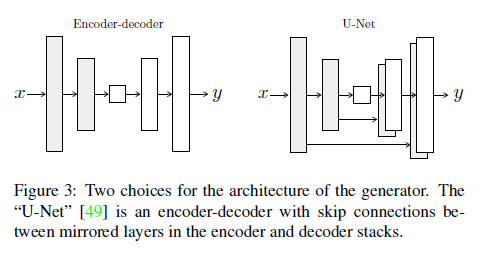

4. The choices for the generator and discriminator architectures:

For G: a "U-Net".

For D: a PatchGAN classifier, which penalizes structure at the scale of image patches.

The purpose of the PatchGAN is to capture local style statistics.

Method

1. The overall framework: conditional GANs learn a mapping from an observed image $x$ and a random noise vector $z$ to $y$ (the ground truth), $G:\{x,z\}\rightarrow y$.

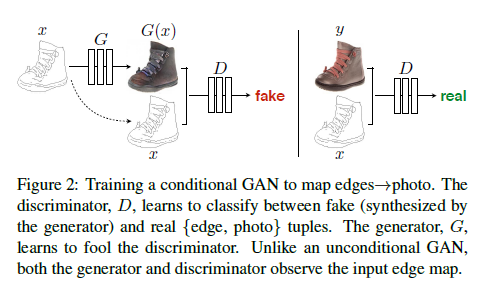

2. Unlike an unconditional GAN, both the generator and discriminator observe the input edge map.

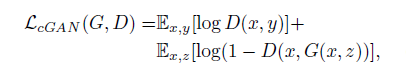

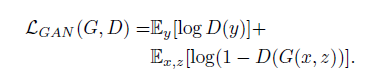

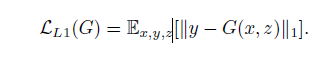

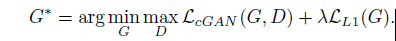

3. Objective function:

G tries to minimize this objective against an adversarial D that tries to maximize it.

4. They test the importance of conditioning the discriminator with an unconditional variant, in which the discriminator does not observe $x$ (the edge map):

5. It is beneficial to mix the GAN objective with a more traditional loss, such as the L2 distance.

6. G is tasked not only with fooling the discriminator but also with staying near the ground-truth output in an L2 sense.

7. The L1 distance is used for the additional loss rather than L2, because L1 encourages less blurring (remember it!).

8. Final objective:

9. Without $z$ (the random noise vector), the net could still learn a mapping from $x$ to $y$. However, it would produce deterministic outputs and therefore fail to match any distribution other than a delta function.

10. Regarding $z$: Gaussian noise was often used in the past, but the authors found this strategy ineffective because G simply learned to ignore the noise. Instead, noise is provided only in the form of dropout, yet they observe only minor stochasticity in the output of their nets.
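The objectives in items 3, 4, 7 and 8 were images in the original notes. As we read them in the pix2pix paper, with $\lambda$ weighting the L1 term, they are:

$$
\begin{aligned}
\mathcal{L}_{cGAN}(G,D) &= \mathbb{E}_{x,y}\left[\log D(x,y)\right] + \mathbb{E}_{x,z}\left[\log\left(1 - D(x, G(x,z))\right)\right] \\
\mathcal{L}_{GAN}(G,D) &= \mathbb{E}_{y}\left[\log D(y)\right] + \mathbb{E}_{x,z}\left[\log\left(1 - D(G(x,z))\right)\right] \\
\mathcal{L}_{L1}(G) &= \mathbb{E}_{x,y,z}\left[\lVert y - G(x,z) \rVert_1\right] \\
G^{*} &= \arg\min_{G}\max_{D}\ \mathcal{L}_{cGAN}(G,D) + \lambda\,\mathcal{L}_{L1}(G)
\end{aligned}
$$

Line by line:

- The first line is item 3's conditional objective.
- The second line is item 4's unconditional variant, where D sees only the output.
- The third line is item 7's L1 term.
- The last line is item 8's final objective.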

Network Architecture

1. Both the generator and discriminator architectures are adapted from DCGANs.

For G: U-Net; DCGAN; encoder-decoder; bottleneck; shuttling the information.

The job:

1. Mapping a high-resolution input grid to a high-resolution output grid.

2. Although the input and output differ in surface appearance, both are renderings of the same underlying structure.

The characteristic:

Structure in the input is roughly aligned with structure in the output.

Previous approaches:

1. An encoder-decoder network is used.

2. The input is progressively downsampled until a bottleneck layer, after which the process is reversed and the features are upsampled.

Q:

1. A great deal of low-level information is shared between the input and output, and shuttling this information directly across the net is desirable. For example, in image colorization, the input and output share the location of prominent edges.

END:

To give the generator a means to circumvent the bottleneck for information like this, skip connections are added; this architecture is called a "U-Net".

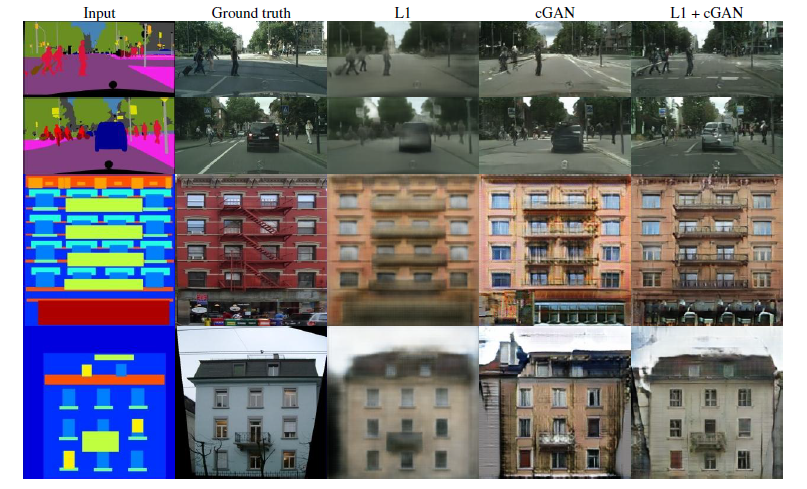

The results of different loss functions:

L1 or L2 losses produce blurry results on image generation problems.

For D:

1. Both L1 and L2 produce blurry results on image generation problems.

2. L1 and L2 fail to encourage high-frequency crispness, but they still accurately capture the low frequencies.

3. To model high frequencies, it is sufficient to restrict attention to the structure in local image patches.

4. This discriminator tries to classify whether each N×N patch in an image is real or fake. The discriminator is run convolutionally across the image, and all responses are averaged to provide the ultimate output of D.

5. N can be much smaller than the full size of the image and still produce high-quality results. A smaller PatchGAN has several advantages: fewer parameters, faster runtime, and applicability to arbitrarily large images.

6. D effectively models the image as a Markov random field, so the PatchGAN can be understood as a form of texture/style loss!
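How large is the N×N patch that each output of D judges? It is the receptive field of one output unit, which can be computed layer by layer. The Java sketch below assumes five 4×4 convolutions with strides 2, 2, 2, 1, 1. That is how the commonly cited 70×70 PatchGAN is usually described, but treat the layout as an assumption rather than something stated in these notes. The sketch also shows the averaging of patch responses from item 4; the names are hypothetical.

```java
// Hypothetical helper: receptive field of a conv stack, and the averaging of patch scores.
final class PatchGanMath {
    // Walk from one output unit back to the input: r = (r - 1) * stride + kernel.
    static int receptiveField(int[] kernels, int[] strides) {
        int r = 1;
        for (int i = kernels.length - 1; i >= 0; i--) {
            r = (r - 1) * strides[i] + kernels[i];
        }
        return r;
    }

    // D's final output: the mean of all per-patch real/fake scores.
    static double average(double[][] patchScores) {
        double sum = 0.0;
        int count = 0;
        for (double[] row : patchScores) {
            for (double s : row) {
                sum += s;
                count++;
            }
        }
        return sum / count;
    }

    public static void main(String[] args) {
        // Assumed layout: five 4x4 convolutions with strides 2, 2, 2, 1, 1.
        int[] kernels = {4, 4, 4, 4, 4};
        int[] strides = {2, 2, 2, 1, 1};
        System.out.println(receptiveField(kernels, strides)); // 70
    }
}
```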

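For Optimization:

1. When optimizing D, the objective is divided by 2, which slows down the rate at which D learns relative to G.

2. When the batch size is set to 1, this batch normalization approach is called instance normalization, and it has been shown to be effective at image generation tasks.

The batch size is set between 1 and 10.

3. Instance normalization (IN) vs. batch normalization (BN): IN is adopted in this paper because it has been demonstrated to be effective at image generation tasks.

BN uses the mean and standard deviation of all images in a batch, while IN computes the mean and standard deviation of a single image. Shuffling makes batches unstable, which effectively introduces noise already. In image generation tasks IN outperforms BN, because the style of each generated image is fairly independent and should not be strongly tied to the other samples in the batch. Conversely, in image and video classification tasks BN outperforms IN.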
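A toy Java sketch of the difference, using a single channel with no learned scale and shift. `EPS` is an assumed numerical stabilizer and the names are hypothetical. With a batch of one image the two functions return the same result, which is the point of item 2.

```java
// Toy normalization over a batch of "images" flattened to 1-D arrays (one channel, no learned scale/shift).
final class NormDemo {
    static final double EPS = 1e-5; // assumed stabilizer inside the square root

    // Instance norm: each image is normalized with its own mean and variance.
    static double[][] instanceNorm(double[][] batch) {
        double[][] out = new double[batch.length][];
        for (int b = 0; b < batch.length; b++) {
            double mean = 0.0, var = 0.0;
            for (double v : batch[b]) mean += v;
            mean /= batch[b].length;
            for (double v : batch[b]) var += (v - mean) * (v - mean);
            var /= batch[b].length;
            out[b] = new double[batch[b].length];
            for (int i = 0; i < batch[b].length; i++) out[b][i] = (batch[b][i] - mean) / Math.sqrt(var + EPS);
        }
        return out;
    }

    // Batch norm: one mean and variance shared by every value in the whole batch.
    static double[][] batchNorm(double[][] batch) {
        double mean = 0.0, var = 0.0;
        int n = 0;
        for (double[] img : batch) for (double v : img) { mean += v; n++; }
        mean /= n;
        for (double[] img : batch) for (double v : img) var += (v - mean) * (v - mean);
        var /= n;
        double[][] out = new double[batch.length][];
        for (int b = 0; b < batch.length; b++) {
            out[b] = new double[batch[b].length];
            for (int i = 0; i < batch[b].length; i++) out[b][i] = (batch[b][i] - mean) / Math.sqrt(var + EPS);
        }
        return out;
    }
}
```

With BN, one image's normalized values depend on whichever other images share its batch; with IN they do not.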

For Experiments

1. Removing conditioning from D gives very poor performance, because the loss then does not penalize mismatch between the input and output; it only cares that the output looks realistic.

2. L1 + cGAN creates realistic renderings: L1 penalizes the distance between ground-truth outputs, which correctly match the input, and synthesized outputs, which may not.

3. An advantage of the PatchGAN is that a fixed-size patch discriminator can be applied to arbitrarily large images.

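A Benchmark Comparison of Monocular Visual-Inertial Odometry Algorithms for Flying Robots (paper notes)

Abstract:

This paper compares the performance of monocular VIO algorithms running on flying robots, using the EuRoC dataset as a common benchmark. The evaluation metrics are:

- pose estimation accuracy
- per-frame processing time
- CPU and memory load
- RMSE (a metric comparing the estimated trajectory with the ground-truth trajectory)

The monocular VIO algorithms compared are MSCKF, OKVIS, ROVIO, VINS-Mono, SVO+MSF and SVO+GTSAM. Four test platforms are used: Intel NUC (desktop PC), a laptop, UP Board (an embedded system for flying robots) and ODROID (an embedded PC containing a hybrid processing unit).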
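As a rough illustration of the RMSE metric, here is a hypothetical Java sketch. It assumes the estimated and ground-truth positions are already time-associated and expressed in the same aligned frame. Benchmarks normally perform an alignment step first, which this sketch omits, and the notes do not specify the paper's exact protocol.

```java
// Hypothetical helper: RMSE of position errors between an estimated and a ground-truth trajectory.
final class TrajectoryRmse {
    // est and gt are N x 3 arrays of positions [m], assumed already associated and aligned.
    static double rmse(double[][] est, double[][] gt) {
        if (est.length != gt.length || est.length == 0) {
            throw new IllegalArgumentException("trajectories must be non-empty and of equal length");
        }
        double sum = 0.0;
        for (int i = 0; i < est.length; i++) {
            double dx = est[i][0] - gt[i][0];
            double dy = est[i][1] - gt[i][1];
            double dz = est[i][2] - gt[i][2];
            sum += dx * dx + dy * dy + dz * dz; // squared Euclidean error at pose i
        }
        return Math.sqrt(sum / est.length);
    }
}
```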

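Introduction:

The paper chooses monocular setups for two reasons:

- A single camera is the minimal sensor unit required for reliable state estimation.
- Compared with other sensors, a monocular camera better suits the payload and power constraints of flying robots.

Existing performance comparisons of monocular VIO algorithms are incomplete, and none of them consider limited computing power. This paper therefore benchmarks all of them together under a unified setup.

Contributions:

1. A comprehensive comparison of publicly available monocular VIO algorithms.

2. Performance results for the comparison.

Algorithms:

1) MSCKF (Multi-State Constraint Kalman Filter): its main feature is a measurement model that expresses the geometric constraints among the poses of all camera frames that observed a particular image feature. It does this without maintaining an estimate of the feature's 3D position. The extended Kalman filter backend implements the MSCKF formulation for event-based camera input, which was later adapted to standard camera tracking.

2) OKVIS (Open Keyframe-based Visual-Inertial SLAM) is built around keyframe processing and performs nonlinear optimization over a sliding window that contains keyframe poses. Its cost function combines weighted reprojection errors of visual landmarks with weighted inertial error terms. The frontend uses multi-scale Harris corner detection with BRISK descriptors, and the backend uses Ceres for nonlinear optimization.

3) ROVIO (Robust Visual Inertial Odometry) is an EKF-based SLAM algorithm. It extracts FAST corner features and parameterizes their 3D positions with a robot-centric bearing vector and distance. It also extracts multi-level patch features from the image stream around these features. A photometric error term is used in the state update.

4) VINS-Mono uses a sliding-window nonlinear optimization estimator, and its frontend tracks robust corner features. It proposes a loosely coupled sensor-fusion initialization procedure that can bootstrap the estimator from arbitrary initial states. IMU measurements are preintegrated before a tightly coupled optimization. It also adds 4-DoF pose-graph optimization and a loop-closure thread.

5) SVO+MSF (Semi-Direct Visual Odometry + Multi-Sensor Fusion): MSF is a general EKF framework for fusing different sensors in state estimation. SVO's estimated pose is fed to MSF as the output of a generic pose sensor, and MSF then fuses it with the IMU data. Because the fusion is loosely coupled, the scale of the pose must be approximately correct, and the initial values must be set manually.

6) SVO+GTSAM: the backend performs full smoothing with an online factor graph using iSAM2, and uses preintegrated IMU factors in the pose-graph optimization.

Conclusion:

Extra computing power improves accuracy and robustness, but resource-constrained systems need a suitable balance between computing power and performance. On the computationally constrained platform (ODROID), SVO+MSF performs best and yields robust trajectories, but at the cost of accuracy. Without computational limits, VINS-Mono shows high accuracy and good robustness. ROVIO is a compromise between the two: it is more accurate than SVO+MSF on ODROID and needs less computation than VINS-Mono. However, ROVIO is sensitive to per-frame processing time and cannot be used on some onboard systems of flying robots.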

Algorithm - Sorting a partially sorted List

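Given a partially sorted list: every element lies within distance [0, k] of its position in the fully sorted list.

```
set i = max(n - 2k, 0);
loop:
    sort the elements of List in the range [i, min(i + 2k, n));
    if i == 0: stop;
    i = max(i - k, 0);
```

Time complexity: (n/k) · (2k · log 2k) = O(n log k).

Explanation: each sort of a 2k-element window [i, i + 2k) guarantees that its upper half [i + k, i + 2k) is in its final sorted position. Think it through: every element that belongs in that half is at most k positions away, so it must already lie inside the window. Every larger element has already been placed to the right of the window.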
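A Java sketch of the windowed procedure above, with each window sorted by `Arrays.sort` on a subrange (the class name is hypothetical). A min-heap of k + 1 elements is the usual alternative and also runs in O(n log k).

```java
import java.util.Arrays;

// Hypothetical helper: sorts an array in which every element is at most k positions from its sorted position.
final class KSortedSort {
    static void sort(int[] a, int k) {
        int n = a.length;
        if (n < 2 || k <= 0) {
            return; // nothing to do: empty, single element, or already sorted (k = 0)
        }
        int window = 2 * k;
        int i = Math.max(n - window, 0);
        while (true) {
            // Sort [i, i + 2k); afterwards its upper half [i + k, i + 2k) is final.
            Arrays.sort(a, i, Math.min(i + window, n));
            if (i == 0) {
                break;
            }
            i = Math.max(i - k, 0); // slide the window left by k
        }
    }
}
```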

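Algorithms (Princeton) key points to memorize - Analysis of Algorithms

Why analyze algorithms?

- To predict performance

- To compare algorithms

- To provide guarantees

- To understand the theoretical basis

Plot the data on log-log axes (base 2): the x-axis is the log of the input size and the y-axis is the log of the running time. A slope of k means that each time the input size doubles, the running time grows by a factor of about 2^k. For example, with slope 3, if 1K items take 1 s, then 2K items take about 1 × 2^3 s = 8 s.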

Power law: T(N) = a N^b

a depends on the computer's hardware, software, etc.

b depends on the algorithm.
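The log-log reasoning can be turned into the doubling-ratio estimate: b = lg(T(2N) / T(N)), then a = T(N) / N^b. Here is a small Java sketch (names hypothetical) using the post's own numbers, 1 s for 1K items and 8 s for 2K.

```java
// Hypothetical helper: estimate a and b in T(N) = a * N^b from two timings at N and 2N.
final class PowerLaw {
    // b = lg(T(2N) / T(N)): the slope of the log-log plot.
    static double exponent(double timeN, double time2N) {
        return Math.log(time2N / timeN) / Math.log(2.0);
    }

    // a = T(N) / N^b, once b is known.
    static double constant(double n, double timeN, double b) {
        return timeN / Math.pow(n, b);
    }

    // Predicted running time for input size n.
    static double predict(double a, double b, double n) {
        return a * Math.pow(n, b);
    }

    public static void main(String[] args) {
        double b = exponent(1.0, 8.0);       // about 3
        double a = constant(1000.0, 1.0, b); // about 1e-9 s
        System.out.printf("b = %.2f, a = %.3e, T(4000) = %.1f s%n", b, a, predict(a, b, 4000.0));
    }
}
```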

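Mathematical models

Count the number of array accesses in the following code fragment:

```java
int sum = 0;
for (int i = 0; i < n; i++)
    for (int j = i+1; j < n; j++)
        for (int k = 1; k < n; k = k*2)
            if (a[i] + a[j] >= a[k]) sum++;
```

The first two loops together execute n(n-1)/2 times (once per pair i < j).

In the third loop, k takes the values 1, 2, 4, ..., 2^m, where 2^m is the largest power of two below n (for n ≥ 2).

Note: the loop stops at the first power of two that is ≥ n.

So the third loop executes ⌈lg n⌉ ~ lg n times in total.

So the total number of array accesses = (iterations of the first two loops) × (iterations of the third loop) × (array accesses per iteration) = n(n-1)/2 × ⌈lg n⌉ × 3 ~ (3/2) n² lg n.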
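The count can be checked by brute force. This Java sketch (names hypothetical) adds 3 to a counter wherever the fragment reads `a[i]`, `a[j]` and `a[k]`. It then compares the total with the closed form 3 · n(n-1)/2 · ⌈lg n⌉.

```java
// Hypothetical helper: counts the fragment's array accesses and compares them with the closed form.
final class AccessCount {
    // Same loop structure as the fragment; each innermost iteration reads a[i], a[j], a[k].
    static long countAccesses(int n) {
        long accesses = 0;
        for (int i = 0; i < n; i++)
            for (int j = i + 1; j < n; j++)
                for (int k = 1; k < n; k = k * 2)
                    accesses += 3;
        return accesses;
    }

    // Closed form: 3 * n(n-1)/2 * ceil(lg n), valid for n >= 1.
    static long formula(int n) {
        long pairs = (long) n * (n - 1) / 2;
        int ceilLg = 0;
        while ((1L << ceilLg) < n) ceilLg++; // smallest m with 2^m >= n
        return 3 * pairs * ceilLg;
    }

    public static void main(String[] args) {
        int n = 1024;
        double tilde = 1.5 * n * (double) n * (Math.log(n) / Math.log(2)); // (3/2) n^2 lg n
        System.out.println(countAccesses(n) / tilde); // close to 1 for large n
    }
}
```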

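Order of growth

Binary search analysis

```java
// Returns the index of key in the ascending sorted array a, or -1 if key is absent.
public static int binarySearch(int[] a, int key)
{
    int lo = 0, hi = a.length-1;
    while (lo <= hi) {
        // key is in a[lo..hi] or not present; this form of mid avoids int overflow of lo + hi
        int mid = lo + (hi - lo) / 2;
        if (key < a[mid]) hi = mid - 1;
        else if (key > a[mid]) lo = mid + 1;
        else return mid;
    }
    return -1;
}
```

Improved 3-sum algorithm (order of growth N² log N)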
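The improved 3-sum code itself is not shown. A minimal Java sketch of the usual sort-then-binary-search idea follows (class name hypothetical). It assumes the integers are distinct and ignores int overflow in `a[i] + a[j]`. It uses `java.util.Arrays.binarySearch`; the `binarySearch` method above would work the same way on the sorted array. Sorting costs ~N lg N, and each of the ~N²/2 pairs costs one ~lg N search, so the total is N² log N.

```java
import java.util.Arrays;

// Hypothetical helper: counts triples that sum to 0 in ~N^2 lg N time (assumes distinct integers).
final class ThreeSumFast {
    static int count(int[] input) {
        int[] a = input.clone();
        Arrays.sort(a); // ~N lg N
        int n = a.length;
        int count = 0;
        for (int i = 0; i < n; i++) {
            for (int j = i + 1; j < n; j++) {
                int k = Arrays.binarySearch(a, -(a[i] + a[j])); // negative if absent
                if (k > j) {
                    count++; // count each triple once: i < j < k
                }
            }
        }
        return count;
    }
}
```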

Theory of Algorithms

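O: upper bound on the order of growth

Ω: lower bound on the order of growth

Θ: both an upper and a lower bound on the order of growth

On the quiz question "Which of the following functions is O(n^3)?"

Why is the answer "all of them"? Coursera mentor Beau Dobbin answered as follows: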

Getting familiar with Big "O" notation takes a little while. The key to remember is that Big "O" defines an upper bound for a growth rate. It can seem confusing that such different functions have the same Big "O" _order_.

In other words, as all three functions approach infinity, they are all less than or equal to "n^3". You could also say that all three are O(n^789457398). As long as the Big "O" function grows faster (or equal), the notation holds.

Similarly, Big Omega "Ω" and Theta "Θ" notations represent lower bounds and _simultaneous upper and lower bounds_.

In other words:

If your algorithm "runs in O(n^2) time", it takes at most (n^2) time.

If your algorithm "runs in Ω(n^2) time", it takes at least (n^2) time.

If your algorithm "runs in Θ(n^2) time", it takes at most (n^2) time and at least (n^2) time. This is similar to saying "it runs in exactly (n^2) time," but it's not exact. It's the asymptotic time as n approaches infinity.

https://en.wikipedia.org/wiki/Big_O_notation : Look for "A description of a function in terms of big O notation usually only provides an upper bound on the growth rate of the function."

https://en.wikipedia.org/wiki/Big_O_notation#Family_of_Bachmann.E2.80.93Landau_notations : A comparison of a few common notations.

https://www.desmos.com/calculator/7vmsklh8o9 : A graph of the three functions.

Memory (typical memory usage for objects in Java, 64-bit)

- Object overhead: 16 bytes.

- Reference: 8 bytes.

- Padding: each object uses a multiple of 8 bytes.
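Putting the three rules together gives a rough size estimate, sketched in Java below (names hypothetical). Real JVMs differ: HotSpot, for example, commonly uses compressed 4-byte references on 64-bit heaps. Treat these numbers as the course's model, not as measurements.

```java
// Hypothetical helper: object size under the model above (16-byte header, 8-byte references, 8-byte padding).
final class MemoryEstimate {
    static final int OBJECT_OVERHEAD = 16; // bytes
    static final int REFERENCE = 8;        // bytes per reference field

    // primitiveBytes: total size of primitive fields (int = 4, double = 8, ...); refs: number of reference fields.
    static int objectBytes(int primitiveBytes, int refs) {
        int raw = OBJECT_OVERHEAD + primitiveBytes + refs * REFERENCE;
        return (raw + 7) / 8 * 8; // round up to a multiple of 8 (padding)
    }

    public static void main(String[] args) {
        System.out.println(objectBytes(4, 0));  // one int field: 16 + 4 = 20, padded to 24
        System.out.println(objectBytes(12, 0)); // three int fields: 16 + 12 = 28, padded to 32
    }
}
```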

That concludes today's discussion of My Algorithm to Calculate Position of Smartphone - GPS and Sensors. Thanks for reading! To learn more about (Pixel2PixelGANs)Image-to-Image translation with conditional adversarial networks, A Benchmark Comparison of Monocular Visual-Inertial Odometry Algorithms for Flying Robots (paper notes), Algorithm - Sorting a partially sorted List, and Algorithms (Princeton) key points to memorize - Analysis of Algorithms, please search this site.
