If you are interested in "Introducing Makisu: Uber’s Fast, Reliable Docker Image Builder for Apache Mesos and Kubernetes", this article is for you. We walk through Makisu in detail and also cover practical tips on deploying kube-controller-manager from binaries (010. Kubernetes binary deployment), on ABAP package interfaces, Android's manifest.xml, and Kubernetes capabilities, and on fixing a kube-controller-manager pod stuck in CrashLoopBackOff after kubeadm init on AWS Kubernetes/k8s.

Contents:

- Introducing Makisu: Uber’s Fast, Reliable Docker Image Builder for Apache Mesos and Kubernetes

- 010. Kubernetes binary deployment of kube-controller-manager

- ABAP Package interface, Android's manifest.xml, and Kubernetes Capabilities

- AWS Kubernetes/k8s: kube-controller-manager pod in CrashLoopBackOff after kubeadm init

Introducing Makisu: Uber’s Fast, Reliable Docker Image Builder for Apache Mesos and Kubernetes

Reposted from: https://eng.uber.com/makisu/?amp

To ensure the stable, scalable growth of our diverse tech stack, we leverage a microservices-oriented architecture, letting engineers deploy thousands of services on a dynamic, high-velocity release cycle. These services enable new features to greatly improve the experiences of riders, drivers, and eaters on our platform.

Although this paradigm supported hypergrowth in both scale and application complexity, it resulted in serious growing pains given the size and scope of our business. To make microservices easier to maintain and upgrade, we adopted Docker in 2015 to enforce resource constraints and to encapsulate executables with their dependencies.

As part of the Docker migration, the core infrastructure team developed a pipeline that quickly and reliably generates Dockerfiles and builds application code into Docker images for Apache Mesos and Kubernetes-based container ecosystems. Giving back to the growing stack of microservice technologies, we open sourced its core component, Makisu, to enable other organizations to leverage the same benefits for their own architectures.

Docker migration at Uber

In early 2015, we were deploying 400 services to bare metal hosts across our infrastructure. These services shared dependencies and config files that were put directly on the hosts, and had few resource constraints. As the engineering organization grew, so too did the need for setting up proper dependency management and resource isolation. To address this, the core infrastructure team started migrating services to Docker containers. As part of this effort, we built an automated service to standardize and streamline our Docker image build process.

The new containerized runtime brought significant improvements in speed and reliability to our service lifecycle management operations. However, secrets (credentials such as passwords, keys, and certificates) used to access service dependencies were being built into images, raising security concerns. In short, once a file is created or copied into a Docker image, it is persisted into a layer. While it is possible to mask files using special whiteout files so that secrets do not appear in the resulting image, Docker does not provide a mechanism to actually remove the files from intermediate layers.
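Here is a small hypothetical sketch showing why whiteouts are not enough. It assumes a POSIX shell and GNU or BSD `tar`, and it builds two layer tarballs by hand. The first layer adds a secret file. The second "deletes" it with a whiteout entry, which under the Docker/OCI layer convention is an empty file named `.wh.<name>`. A container's merged view would hide the file, but the first layer still carries its bytes.

```shell
#!/bin/sh
# Sketch: a whiteout in a later layer hides a file but does not erase it from an earlier layer.
set -eu

work=$(mktemp -d)
mkdir -p "$work/l1" "$work/l2"

# Layer 1: a build step copies in a (hypothetical) secret.
printf 'hunter2\n' > "$work/l1/secret.txt"
tar -C "$work/l1" -cf "$work/layer1.tar" .

# Layer 2: a later step removes it, so the layer records only a whiteout marker.
: > "$work/l2/.wh.secret.txt"
tar -C "$work/l2" -cf "$work/layer2.tar" .

echo "layer2 entries:"
tar -tf "$work/layer2.tar"

# Anyone holding the image can still read the secret straight out of layer 1.
echo "secret recovered from layer1:"
tar -xOf "$work/layer1.tar" ./secret.txt
```

Squashing and build-time mounts, described next, both attack this by keeping the secret out of every layer rather than masking it afterwards.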

The first solution we tried for mitigating this security risk was docker-squash, an open source tool that combines Docker layers by deleting files only used in intermediate build steps. Once part of our workflow, docker-squash removed secrets, but doubled image build time. Building Docker images is already a lengthy process, and the addition of docker-squash negated the benefits of increased developer velocity from having a microservice-based architecture.

Unsatisfied with our post-hoc squashing solution, we decided to fork Docker to add the support we needed: if we could mount volumes at build time that weren’t included in the resulting image, we could use this mount to access secrets while building and leave no trace of the secret in the final container image. The approach worked well: there was no additional latency, and the necessary code changes were relatively minimal, leading to quick implementation. Both the infrastructure team and service owners were satisfied at the time, and the Docker migration was able to progress smoothly.

Building container images at scale

By 2017, this setup was no longer sufficient for our scale. As Uber grew, the size and scope of our infrastructure similarly burgeoned. Building upwards of 3,000 services each a few times per day resulted in a much more time-consuming image build process. Some of these builds took up to two hours and produced images whose sizes exceeded 10GB, consuming significant storage space and bandwidth, and hurting developer productivity.

At this point, we realized that we had to take a step back and rethink how we should be building container images at scale. In doing so, we arrived at three major requirements for our next-generation build solution: portable building, distributed cache support, and image size optimization.

Portable building

In 2017, the core infrastructure team began an effort to move Uber’s compute workload to a unified platform that would provide greater automation, additional scalability, and more elastic resource management. To accommodate this, the Dockerfile build process also needed to be able to run in a generic container in shared clusters.

Unfortunately, Docker’s build logic relies on a copy-on-write file system to determine the differences between layers at build time. This logic requires elevated privileges to mount and unmount directories inside build containers, a behavior that we wanted to prevent on our new platform to ensure optimal security. This meant that, for our needs, fully-portable building was not an option with Docker.

Distributed cache support

Layer caching allows users to create builds consisting of the same build steps as previous builds and reuse formerly generated layers, thereby reducing execution redundancy. Docker offers some distributed cache support for individual builds, but this support does not generally extend between branches or services. We relied primarily on Docker’s local layer cache, but the sheer volume of builds spread across even a small cluster of our machines forced us to perform cleanup frequently, and resulted in a low cache hit rate which largely contributed to the inflated build times.

In the past, there had been efforts at Uber to improve this hit rate by performing subsequent builds of the same service on the same machine. However, this method was insufficient because the builds consisted of a diverse set of services rather than just a few. This process also increased the complexity of build scheduling, a nontrivial problem given the thousands of services we leverage on a daily basis.

It became apparent to us that greater cache support was a necessity for our new solution. After conducting some research, we determined that implementing a distributed layer cache across services addresses both issues by improving builds across different services and machines without imposing any constraints on the location of the build.

Image size optimization

Smaller images save storage space and take less time to transfer, decompress, and start.

To optimize image size, we looked into using multi-stage builds in our solution. This is a common practice among Docker image build solutions: performing build steps in an intermediate image, then copying runtime files to a slimmer final image. Although this feature does require more complicated Dockerfiles, we found that it can drastically reduce final image sizes, thereby making it a requirement for building and deploying images at scale.

Another optimization tactic we explored for decreasing image size is to reasonably reduce the number of layers in an image. Fat images are sometimes the result of files being created and deleted or updated by intermediate layers. As mentioned earlier, even when a temporary file is removed in a later step, it remains in the creation layer, taking up precious space. Having fewer layers in an image decreases the chance of deleted or updated files remaining in previous layers, hence reducing the image size.

Introducing Makisu

To address the above considerations, we built our own image building tool, Makisu, a solution that allows for more flexible, faster container image building at scale. Specifically, Makisu:

- requires no elevated privileges, making the build process portable.

- uses a distributed layer cache to improve performance across a build cluster.

- provides flexible layer generation, preventing unnecessary files in images.

- is Docker-compatible, supporting multi-stage builds and common build commands.

Below, we go into greater detail about these features.

Building without elevated privileges

One of the reasons that a Docker image is lightweight compared to other deployment units, such as virtual machines (VMs), is that its intermediate layers consist only of the file system differences before and after running the current build step. Docker computes differences between steps and generates layers using a copy-on-write (CoW) file system, which requires elevated privileges.

To generate layers without elevated privileges, Makisu performs file system scans and keeps an in-memory representation of the file system. After running a build step, it scans the file system again, updates the in-memory view, and adds each changed file (i.e., an added, modified, or removed file) to a new layer.
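The scan-and-diff idea can be approximated with standard tools. The sketch below is a hypothetical illustration, not Makisu's code (Makisu itself is written in Go). It assumes GNU coreutils' `sha256sum` and file paths without whitespace. It also uses content hashes as the only change signal, so a change to only permissions or ownership would go unnoticed.

```shell
#!/bin/sh
# Sketch: approximate "scan, run a step, rescan, diff" layer generation without privileges.
# Assumes GNU coreutils (sha256sum) and file paths that contain no whitespace.
set -eu

# snapshot DIR: print "path hash" for every regular file under DIR, sorted by path.
snapshot() {
  (cd "$1" && find . -type f -exec sha256sum {} + | awk '{print $2, $1}' | LC_ALL=C sort)
}

# layer_diff BEFORE AFTER: list the files a new layer would need.
#   A/M = added or modified (copied into the layer)
#   D   = removed (would be recorded as a whiteout entry)
layer_diff() {
  awk 'FILENAME == ARGV[1] { old[$1] = $2; next }
       { seen[$1] = 1; if (!($1 in old) || old[$1] != $2) print "A/M", $1 }
       END { for (p in old) if (!(p in seen)) print "D", p }' "$1" "$2" |
    LC_ALL=C sort -k2,2
}

# Demo on a scratch directory standing in for the container's root file system.
root=$(mktemp -d); before=$(mktemp); after=$(mktemp)
echo one > "$root/keep.txt"
echo tmp > "$root/secret.txt"
snapshot "$root" > "$before"

# Simulated build step: modify one file, delete one, add one.
echo two >> "$root/keep.txt"
rm "$root/secret.txt"
echo new > "$root/added.txt"
snapshot "$root" > "$after"

layer_diff "$before" "$after"
```

Only the files listed by `layer_diff` would go into the new layer, which is what keeps scan-based layers as small as CoW-based ones without needing mount privileges.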

Makisu also compresses layers quickly, which is an important consideration at scale. Docker uses Go’s default gzip library to compress layers, and that library does not meet our performance requirements for large image layers containing many plain-text files. Despite the time cost of scanning, Makisu is faster than Docker in many scenarios, even without a cache. The P90 build time decreased by almost 50 percent after we migrated the build process from Docker to Makisu.

Distributed cache

Makisu uses a key-value store to map lines of a given Dockerfile to the digests of the layers stored in a Docker registry. The key-value store can be backed by either Redis or a file system. Makisu also enforces a cache TTL, making sure cache entries do not become too stale.

The cache key is generated using the current build command and the keys of previous build commands within a stage. The cache value is the content hash of the generated layer, which is also used to identify the actual layer stored in the Docker registry.
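Chaining can be sketched in a few lines. This is a hypothetical illustration that assumes SHA-256 over the previous key followed by the current directive text; the source does not describe Makisu's actual key format. Because each key folds in its predecessor, editing an early line changes every later key in the stage. Two builds that share the same leading lines produce the same keys regardless of which service or machine runs them.

```shell
#!/bin/sh
# Sketch: derive a cache key per Dockerfile directive by chaining hashes within one stage.
# Assumes GNU coreutils' sha256sum; the exact key format here is hypothetical.
set -eu

# cache_keys: read one stage's directives on stdin, print "key directive" per line.
cache_keys() {
  prev=""
  while IFS= read -r line; do
    if [ -z "$line" ]; then continue; fi   # blank lines do not produce keys
    key=$(printf '%s\n%s' "$prev" "$line" | sha256sum | cut -d' ' -f1)
    printf '%s %s\n' "$key" "$line"
    prev=$key                              # the next key depends on this one
  done
}

printf 'FROM debian:8\nRUN apt-get install wget\n' | cache_keys
```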

During the initial build of a Dockerfile, layers are generated and asynchronously pushed to the Docker registry and the key-value store. Subsequent builds that share the same build steps can then fetch and unpack the cached layers, preventing redundant executions.
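The lookup flow can be sketched with a file-system-backed store, which is one of the two backends mentioned above. Everything in it is hypothetical: the directory layout, the `CACHE_DIR` and `CACHE_TTL_MIN` variables, and the placeholder digests, which stand in for real layer content hashes. A real builder would also push and pull the layer blobs to and from the registry.

```shell
#!/bin/sh
# Sketch: a file-system key-value store mapping cache keys to layer digests, with a TTL.
# Assumes find(1) supports -mmin (GNU and BSD find do).
set -eu

CACHE_DIR=${CACHE_DIR:-$(mktemp -d)}   # hypothetical store location
CACHE_TTL_MIN=${CACHE_TTL_MIN:-60}     # entries older than this many minutes count as misses

kv_put() {   # kv_put KEY DIGEST
  printf '%s\n' "$2" > "$CACHE_DIR/$1"
}

kv_get() {   # kv_get KEY: print DIGEST, or return 1 on a miss or a stale entry
  f="$CACHE_DIR/$1"
  [ -f "$f" ] || return 1
  if [ -n "$(find "$f" -mmin +"$CACHE_TTL_MIN")" ]; then
    return 1   # older than the TTL, so treat it as a miss
  fi
  cat "$f"
}

# build_step KEY: reuse the cached layer if possible, otherwise "build" it and record it.
build_step() {
  if digest=$(kv_get "$1"); then
    echo "hit  $1 -> $digest (fetch and unpack layer from registry)"
  else
    digest="sha256:placeholder-for-$1"   # hypothetical; really the layer's content hash
    kv_put "$1" "$digest"
    echo "miss $1 -> $digest (run step, push layer)"
  fi
}

build_step key-1   # first build: miss
build_step key-1   # later build sharing the step: hit
```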

Flexible layer generation

To provide control over image layers that are generated during the build process, Makisu uses a new optional commit annotation, #!COMMIT, that specifies which lines in a Dockerfile can generate a new layer. This simple mechanism allows for reduced container image sizes (as some layers might delete or modify files added by previous layers) and solves the secret-inclusion problem without post-hoc layer squashing. Each of the committed layers is also uploaded to a distributed cache, meaning that it can be used by builds on other machines in the cluster.

Below, we share an example Dockerfile that leverages Makisu’s layer generation annotation:

```dockerfile
FROM debian:8 AS build_phase
RUN apt-get install wget #!COMMIT
RUN apt-get install go1.10 #!COMMIT
COPY git-repo git-repo
RUN cd git-repo && make

FROM debian:8 AS run_phase
RUN apt-get install wget #!COMMIT
LABEL service-name=test
COPY --from=build_phase git-repo/binary /binary
ENTRYPOINT /binary
```

In this instance, the multi-stage build installs some build-time requirements (wget and go1.10), commits each in its own layer, and proceeds to build the service code. As soon as these committed layers are built, they are uploaded to the distributed cache and available to other builds, providing significant speedups due to redundancy in application dependencies.

In the second stage, the build once again installs wget, but this time Makisu reuses the layer committed during the build phase. Finally, the service binary is copied from the build stage and the final image is generated. This common paradigm results in a slim, final image that is cheaper to construct (due to the benefits of distributed caching) and takes up less space, depicted in Figure 1, below:

Figure 1. While Docker starts a new container for each step and generates three layers, Makisu orchestrates all steps in one container and skips unnecessary layer generations.

Docker compatibility

Given that a Docker image is just composed of a few plain tar files that are unpacked in sequential order and two configuration files, the images generated by Makisu are fully compatible with both the Docker daemon and registry.

Makisu also has full support for multi-stage builds. With smart uses of #!COMMIT, stages can be optimized so those that are unlikely to be reused can avoid layer generations, saving storage space.

Additionally, because the commit annotations are formatted as comments, Dockerfiles used by Makisu can be built by Docker without issue.
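Here is why this works for lines like `RUN apt-get install wget #!COMMIT`. Docker treats only lines that begin with `#` as Dockerfile comments, but a shell-form `RUN` hands the rest of its line to `/bin/sh -c`. The shell then discards everything from an unquoted `#` that starts a word. The quick check below assumes any POSIX `sh` and uses `echo` in place of `apt-get`.

```shell
#!/bin/sh
# Sketch: how /bin/sh -c (used by shell-form RUN) sees a line carrying the Makisu annotation.
set -eu

# echo stands in for apt-get so the check runs anywhere without root or network access.
annotated='echo apt-get install wget #!COMMIT'

# The shell strips the unquoted "#!COMMIT" as a comment; only the echo's arguments remain.
sh -c "$annotated"
```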

Using Makisu

To get started with Makisu, users can download the binary directly onto their laptops and build simple Dockerfiles without the RUN directive. Users can also download Makisu and run it inside a container locally for full Docker compatibility or implement it as part of a Kubernetes or Apache Mesos continuous integration (CI) workflow:

- Using the Makisu binary locally

- Using the Makisu image locally

- Example workflow for using Makisu on Kubernetes

Other solutions in the open source community

There are various other open source projects that facilitate Docker image builds, including:

- Bazel was one of the first tools that could build Docker-compatible images without using Docker or any other form of containerizer. It works very well with a subset of Docker build scenarios given a Bazel build file. We were inspired by its approach of building images, but we found that it does not support the RUN directive, which means users cannot install some dependencies (for example, apt-get install wget), making it hard to replace most Docker build workflows.

- Kaniko is an image build tool that provides robust compatibility with Docker and executes build commands in user space without the need for a Docker daemon. Kaniko offers smooth integration with Kubernetes and multiple registry implementations, making it a competent tool for Kubernetes users. However, Makisu provides a solution that better handles large images, especially those with node_modules, allows cache to expire, and offers more control over cache generation, three features critical for handling more complex workflows.

- BuildKit, a Dockerfile-agnostic builder toolkit, depends on runC and containerd, and supports parallel stage executions. BuildKit needs access to /proc to launch nested containers, which is not doable in our production environments.

The abundance and diversity of these solutions shows the need for alternative image builders in the Docker ecosystem.

Moving forward

Compared to our previous build process, Makisu reduced Docker image build time by up to 90 percent and on average 40 percent, saving precious developer time and CPU resources. These improvements depend on how a given Dockerfile is structured and how useful distributed cache is to its specific set-up. Makisu also produces images up to 50 percent smaller in size by getting rid of temporary and cache files, as well as build-time only dependencies, which saves space in our compute cluster and speeds up container deployments.

We are actively working on additional performance improvements and adding more features for better integration with container ecosystems. We also plan to add support for Open Container Initiative (OCI) image formats and integrate Makisu with existing CI/CD solutions.

We encourage you to try out Makisu for your container CI/CD workflow and provide us with your feedback! Please contact us via our Slack and GitHub channels.

If you’re interested in challenges with managing containers at scale, consider applying for a role on our team!

010. Kubernetes binary deployment of kube-controller-manager

1 Deploy a highly available kube-controller-manager

1.1 Overview of the highly available kube-controller-manager

- Communicates with the secure port of kube-apiserver;

- Exposes Prometheus-format metrics on the secure port (HTTPS, 10252).

1.2 Create the kube-controller-manager certificate and private key

```shell
# Create the certificate signing request (CSR) file for kube-controller-manager
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# cat > kube-controller-manager-csr.json <<EOF
{
  "CN": "system:kube-controller-manager",
  "hosts": [
    "127.0.0.1",
    "172.24.8.71",
    "172.24.8.72",
    "172.24.8.73"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Shanghai",
      "L": "Shanghai",
      "O": "system:kube-controller-manager",
      "OU": "System"
    }
  ]
}
EOF
```

```shell
# Sign the CSR with the cluster CA, producing kube-controller-manager.pem and kube-controller-manager-key.pem
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# cfssl gencert -ca=/opt/k8s/work/ca.pem \
  -ca-key=/opt/k8s/work/ca-key.pem -config=/opt/k8s/work/ca-config.json \
  -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager
```

1.3 Distribute the certificate and private key
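Before copying the files out, it can help to confirm the certificate subject. Kubernetes' x509 client-certificate authentication takes the user name from the Common Name (CN) and group memberships from the Organization (O) fields. That is why `system:kube-controller-manager` in the CSR matches the built-in `system:kube-controller-manager` ClusterRoleBinding. The helper `cert_field` below is hypothetical and assumes OpenSSL 1.1 or later. The demo uses a throwaway self-signed certificate in place of `kube-controller-manager.pem`.

```shell
#!/bin/sh
# Sketch: print the Kubernetes user (CN) and groups (O) that a client certificate maps to.
# Assumes OpenSSL 1.1 or later; values containing escaped commas are not handled.
set -eu

cert_field() {   # cert_field FILE FIELD: print the value(s) of FIELD (e.g. CN or O) in the subject
  openssl x509 -in "$1" -noout -subject -nameopt RFC2253 |
    sed 's/^subject= *//' | tr ',' '\n' | sed -n "s/^$2=//p"
}

# Demo: a throwaway self-signed certificate standing in for kube-controller-manager.pem.
tmp=$(mktemp -d)
openssl req -x509 -newkey rsa:2048 -nodes -days 1 \
  -keyout "$tmp/key.pem" -out "$tmp/cert.pem" \
  -subj "/CN=system:kube-controller-manager/O=system:kube-controller-manager" 2>/dev/null

echo "user:   $(cert_field "$tmp/cert.pem" CN)"
echo "groups: $(cert_field "$tmp/cert.pem" O)"
```

On the master, `cert_field kube-controller-manager.pem CN` run in `/opt/k8s/work` should print the CN from the CSR above.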

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  scp kube-controller-manager*.pem root@${master_ip}:/etc/kubernetes/cert/
done
```

1.4 Create and distribute the kubeconfig

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/k8s/work/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-controller-manager.kubeconfig

[root@k8smaster01 work]# kubectl config set-credentials system:kube-controller-manager \
  --client-certificate=kube-controller-manager.pem \
  --client-key=kube-controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-controller-manager.kubeconfig

[root@k8smaster01 work]# kubectl config set-context system:kube-controller-manager \
  --cluster=kubernetes \
  --user=system:kube-controller-manager \
  --kubeconfig=kube-controller-manager.kubeconfig

[root@k8smaster01 work]# kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  scp kube-controller-manager.kubeconfig root@${master_ip}:/etc/kubernetes/
done
```

1.5 Create the kube-controller-manager systemd unit

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# cat > kube-controller-manager.service.template <<EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]
WorkingDirectory=${K8S_DIR}/kube-controller-manager
ExecStart=/opt/k8s/bin/kube-controller-manager \\
--profiling \\
--cluster-name=kubernetes \\
--controllers=*,bootstrapsigner,tokencleaner \\
--kube-api-qps=1000 \\
--kube-api-burst=2000 \\
--leader-elect \\
--use-service-account-credentials \\
--concurrent-service-syncs=2 \\
--bind-address=##MASTER_IP## \\
--secure-port=10252 \\
--tls-cert-file=/etc/kubernetes/cert/kube-controller-manager.pem \\
--tls-private-key-file=/etc/kubernetes/cert/kube-controller-manager-key.pem \\
--port=0 \\
--authentication-kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\
--client-ca-file=/etc/kubernetes/cert/ca.pem \\
--requestheader-allowed-names="" \\
--requestheader-client-ca-file=/etc/kubernetes/cert/ca.pem \\
--requestheader-extra-headers-prefix="X-Remote-Extra-" \\
--requestheader-group-headers=X-Remote-Group \\
--requestheader-username-headers=X-Remote-User \\
--authorization-kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\
--cluster-signing-cert-file=/etc/kubernetes/cert/ca.pem \\
--cluster-signing-key-file=/etc/kubernetes/cert/ca-key.pem \\
--experimental-cluster-signing-duration=8760h \\
--horizontal-pod-autoscaler-sync-period=10s \\
--concurrent-deployment-syncs=10 \\
--concurrent-gc-syncs=30 \\
--node-cidr-mask-size=24 \\
--service-cluster-ip-range=${SERVICE_CIDR} \\
--pod-eviction-timeout=6m \\
--terminated-pod-gc-threshold=10000 \\
--root-ca-file=/etc/kubernetes/cert/ca.pem \\
--service-account-private-key-file=/etc/kubernetes/cert/ca-key.pem \\
--kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \\
--logtostderr=true \\
--v=2
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
```

1.6 Distribute the systemd unit

```shell
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# for (( i=0; i < 3; i++ ))
do
  sed -e "s/##MASTER_NAME##/${MASTER_NAMES[i]}/" -e "s/##MASTER_IP##/${MASTER_IPS[i]}/" kube-controller-manager.service.template > kube-controller-manager-${MASTER_IPS[i]}.service
done  # render one unit file per master, substituting that master's IP
[root@k8smaster01 work]# ls kube-controller-manager*.service
[root@k8smaster01 ~]# cd /opt/k8s/work
[root@k8smaster01 work]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 work]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  scp kube-controller-manager-${master_ip}.service root@${master_ip}:/etc/systemd/system/kube-controller-manager.service
done  # distribute the systemd unit to every master
```

2 Start and verify

2.1 Start the kube-controller-manager service

1 [root@k8smaster01 ~]# cd /opt/k8s/work

2 [root@k8smaster01 work]# source /opt/k8s/bin/environment.sh

3 [root@k8smaster01 work]# for master_ip in ${MASTER_IPS[@]}

4 do

5 echo ">>> ${master_ip}"

6 ssh root@${master_ip} "mkdir -p ${K8S_DIR}/kube-controller-manager"

7 ssh root@${master_ip} "systemctl daemon-reload && systemctl enable kube-controller-manager && systemctl restart kube-controller-manager"

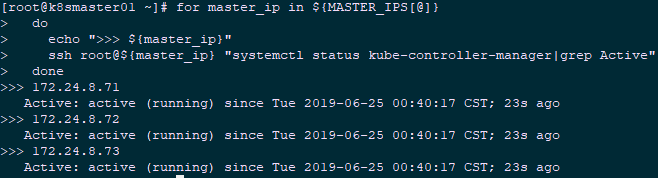

8 done2.2 检查kube-controller-manager 服务

```shell
[root@k8smaster01 ~]# source /opt/k8s/bin/environment.sh
[root@k8smaster01 ~]# for master_ip in ${MASTER_IPS[@]}
do
  echo ">>> ${master_ip}"
  ssh root@${master_ip} "systemctl status kube-controller-manager|grep Active"
done
```

2.3 View the exported metrics

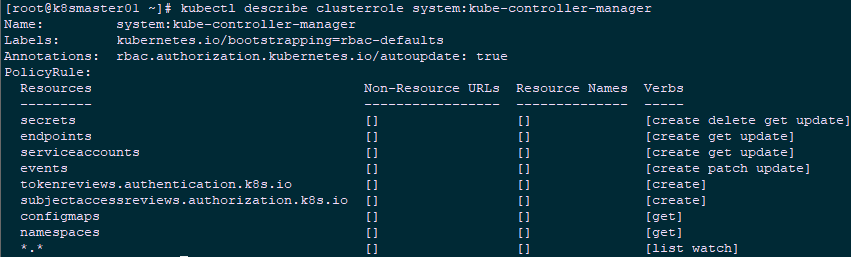

```shell
[root@k8smaster01 ~]# curl -s --cacert /opt/k8s/work/ca.pem --cert /opt/k8s/work/admin.pem --key /opt/k8s/work/admin-key.pem https://172.24.8.71:10252/metrics | head
```

2.4 Check permissions

```shell
[root@k8smaster01 ~]# kubectl describe clusterrole system:kube-controller-manager
```

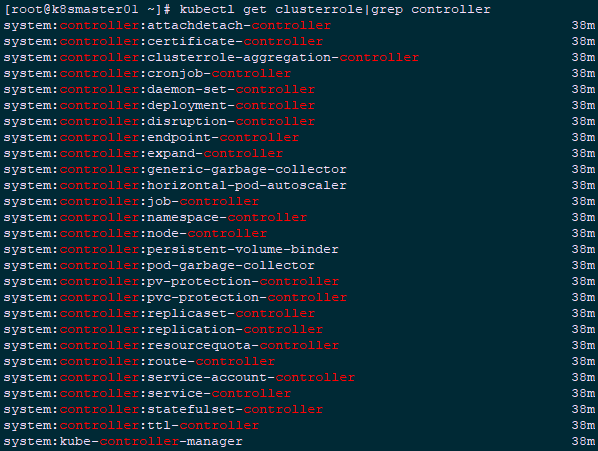

```shell
[root@k8smaster01 ~]# kubectl get clusterrole|grep controller
```

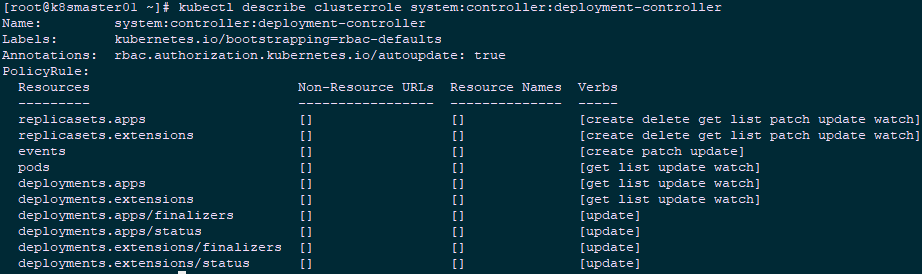

```shell
[root@k8smaster01 ~]# kubectl describe clusterrole system:controller:deployment-controller
```

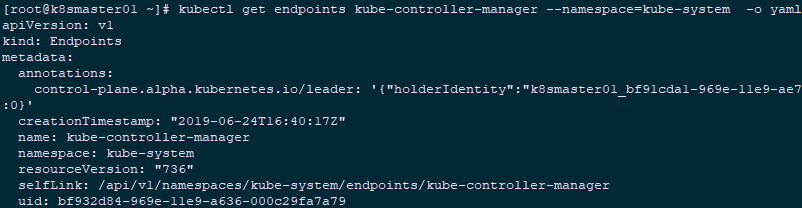

2.5 Check the current leader

```shell
[root@k8smaster01 ~]# kubectl get endpoints kube-controller-manager --namespace=kube-system -o yaml
```
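In the endpoints object, the leader is recorded in the `control-plane.alpha.kubernetes.io/leader` annotation, whose JSON value has a `holderIdentity` field. The hypothetical helper below extracts that field from YAML read on stdin; the sample line is made up. On newer releases, depending on the `--leader-elect-resource-lock` setting, this record lives in a Lease object instead. In that case, `kubectl -n kube-system get lease kube-controller-manager -o jsonpath='{.spec.holderIdentity}'` reads it directly.

```shell
#!/bin/sh
# Sketch: extract the current leader from `kubectl get endpoints ... -o yaml` output on stdin.
set -eu

leader_identity() {
  sed -n 's/.*"holderIdentity":"\([^"]*\)".*/\1/p' | head -n 1
}

# Demo with a made-up annotation line of the kind the endpoints object carries.
sample="    control-plane.alpha.kubernetes.io/leader: '{\"holderIdentity\":\"k8smaster01_0a1b2c\",\"leaseDurationSeconds\":15}'"
printf '%s\n' "$sample" | leader_identity

# Real use (requires cluster access):
# kubectl get endpoints kube-controller-manager --namespace=kube-system -o yaml | leader_identity
```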

Original source: https://www.cnblogs.com/itzgr/p/11876066.html

ABAP Package interface, Android's manifest.xml, and Kubernetes Capabilities

ABAP

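Transaction code SE21 creates an ABAP package interface, which is how ABAP implements access control at the package level. A package can contain many ABAP objects. If developers want to expose certain objects to programs outside the package, they place those objects on the Visible Elements tab of the package interface.

Note that if an object is not listed on the Visible Elements tab, code outside the package can still access it at runtime; the static syntax check only reports a warning.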

Android manifest.xml

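Every Android app must include a manifest file named AndroidManifest.xml. The file name is fixed, and the file sits in the root directory of each Android app. Much like the access control of ABAP package interfaces, manifest.xml holds an Android app's permission-related configuration:

- It declares the permissions the app needs in order to use certain protected APIs or to interact with other apps.

- It declares the permissions other apps must hold in order to interact with this app.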

The operations a given Android application is allowed to perform are declared in manifest.xml.
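The minimal, hypothetical manifest below illustrates both roles. `<uses-permission>` requests a permission the app needs. `<permission>` defines one that other apps must hold, enforced here through the `android:permission` attribute of an exported service. The package, permission, and service names are made up. The file is written with a shell heredoc, the same way configuration files are created elsewhere on this page.

```shell
#!/bin/sh
# Sketch: write a minimal, hypothetical AndroidManifest.xml showing both permission roles.
set -eu

cat > AndroidManifest.xml <<'EOF'
<?xml version="1.0" encoding="utf-8"?>
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.example.demo">

    <!-- Role 1: a permission this app needs in order to use a protected API. -->
    <uses-permission android:name="android.permission.INTERNET" />

    <!-- Role 2: a permission other apps must hold to interact with this app. -->
    <permission
        android:name="com.example.demo.permission.READ_DATA"
        android:protectionLevel="signature" />

    <application android:label="Demo">
        <!-- Only callers holding READ_DATA may start or bind to this exported service. -->
        <service
            android:name=".DataService"
            android:exported="true"
            android:permission="com.example.demo.permission.READ_DATA" />
    </application>
</manifest>
EOF
```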

Capabilities in Kubernetes

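By default, containers run in non-privileged mode. For example, creating or configuring virtual networks is not allowed inside a container.

Kubernetes provides a mechanism for modifying capabilities, so that they can be added to or dropped from a container as needed. For example, a container can be granted CAP_NET_ADMIN while having CAP_KILL dropped.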
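The hypothetical Pod spec below matches that description. Kubernetes expects capability names without the `CAP_` prefix, so `CAP_NET_ADMIN` and `CAP_KILL` are written as `NET_ADMIN` and `KILL`. The Pod name, image, and command are placeholders.

```shell
#!/bin/sh
# Sketch: write a Pod manifest that adds NET_ADMIN and drops KILL for one container.
set -eu

cat > cap-demo.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: cap-demo              # hypothetical name
spec:
  containers:
  - name: app
    image: busybox:1.36       # hypothetical image
    command: ["sleep", "3600"]
    securityContext:
      capabilities:
        add: ["NET_ADMIN"]    # CAP_NET_ADMIN
        drop: ["KILL"]        # CAP_KILL
EOF

# Apply it to a cluster (requires kubectl access):
# kubectl apply -f cap-demo.yaml
```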

For more original articles by Jerry, follow the WeChat official account "汪子熙".

AWS Kubernetes/k8s: kube-controller-manager pod in CrashLoopBackOff after kubeadm init

The problem occurred on versions 1.22 and 1.21; the same configuration worked without issues on 1.19 and 1.20.

```shell
kubeadm init
```

Initialization reported success, but running kubeadm join on the second master node failed with:

Could not find a JWS signature

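So I went back to the first master to inspect the cluster-info ConfigMap:

```shell
kubectl get configmap cluster-info --namespace=kube-public -o yaml
```

Strangely, there was no jws section. The jws entries are JSON Web Signatures over the cluster-info data, computed with bootstrap tokens. A joining node uses its token to verify that the cluster information it received (including the CA certificate) is authentic. As an aside, reusing a single token for every node is not secure, so it is better to avoid it; additional tokens can be created with `kubeadm token create`.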

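A missing jws section suggests that there is no valid token, yet

```shell
kubeadm token list
```

showed that every token was present and valid, which made the problem puzzling.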
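The two observations can be compared more directly. In the cluster-info ConfigMap, each signature is stored under a key named `jws-kubeconfig-<token-id>`, and a bootstrap token ID is six characters from `[a-z0-9]`. The hypothetical helper below lists the token IDs that currently have a signature; the demo input is made up. An empty result while `kubeadm token list` shows valid tokens reproduces the symptom described here.

```shell
#!/bin/sh
# Sketch: list bootstrap token IDs that have a JWS signature in the cluster-info ConfigMap.
# Input: `kubectl get configmap cluster-info --namespace=kube-public -o yaml` on stdin.
set -eu

signed_token_ids() {
  sed -n 's/^ *jws-kubeconfig-\([a-z0-9]\{6\}\):.*/\1/p'
}

# Demo with made-up ConfigMap data containing one signed token.
printf '%s\n' 'data:' '  jws-kubeconfig-abcdef: placeholder-signature' '  kubeconfig: |' |
  signed_token_ids

# Real use (requires cluster access):
# kubectl get configmap cluster-info --namespace=kube-public -o yaml | signed_token_ids
```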

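After reading the documentation on bootstrap-token authentication and on kubeadm's implementation details, I found that the jws entries in cluster-info are only created once kube-controller-manager is running, because its bootstrapsigner controller is what signs cluster-info with the bootstrap tokens.

Only then did I notice that the kube-controller-manager pod had not come up. The kubeadm documentation says that if control-plane pods are not running after init, you should open an issue, because that would mean kubeadm itself is broken. That conclusion was very unlikely to hold here; the cause was almost certainly an error in the kubeadm configuration.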

```shell
kubectl describe pod -n kube-system -l component=kube-controller-manager
kubectl logs -n kube-system -l component=kube-controller-manager
```

The output showed:

Error: unknown flag: --horizontal-pod-autoscaler-use-rest-clients

It turns out that kube-controller-manager 1.21 no longer supports this flag.

After the flag was removed, the pod started successfully.
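On a kubeadm control plane, such a flag usually ends up in the static Pod manifest `/etc/kubernetes/manifests/kube-controller-manager.yaml`, for example via `controllerManager.extraArgs` in the kubeadm configuration. The kubelet recreates the static Pod when that file changes. Below is a minimal sketch of the removal, assuming that layout and one flag per line; `drop_flag` is a hypothetical helper. If the flag came from the kubeadm configuration, remove it there as well so that a later `kubeadm init` or upgrade does not add it back.

```shell
#!/bin/sh
# Sketch: strip a no-longer-supported flag from a kube-controller-manager static Pod manifest.
# Assumes kubeadm's manifest layout, with one flag per line in the form "- --flag" or "- --flag=value".
set -eu

drop_flag() {   # drop_flag MANIFEST FLAG
  backup="${TMPDIR:-/tmp}/$(basename "$1").bak"
  # Back up outside the manifests directory, because the kubelet scans every file there.
  cp "$1" "$backup"
  sed "/^[[:space:]]*- $2\(=.*\)\{0,1\}\$/d" "$backup" > "$1"
}

# Real use on a control-plane node (the kubelet picks up the edited manifest automatically):
# drop_flag /etc/kubernetes/manifests/kube-controller-manager.yaml --horizontal-pod-autoscaler-use-rest-clients
```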
