
This article covers subroutes in the Kubernetes NGINX VirtualServer resource (virtual paths with NGINX Ingress). It also collects material on Kubernetes - k8s - nginx-ingress, launching a single-node Kubernetes cluster, the Kubernetes Nginx Ingress Controller, and installing and using Kubernetes Nginx Ingress, to help you understand the topic better.

Contents:

- Kubernetes NGINX VirtualServer subroutes (NGINX Ingress virtual paths)
- Kubernetes - - k8s - nginx-ingress
- Kubernetes - Launch Single Node Kubernetes Cluster
- Kubernetes Nginx Ingress Controller
- Installing and using Kubernetes Nginx Ingress

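Kubernetes NGINX VirtualServer subroutes (NGINX Ingress virtual paths)

How do subroutes work in a Kubernetes NGINX VirtualServer?

I'm a bit confused about subroutes in the Kubernetes NGINX VirtualServer. https://docs.nginx.com/nginx-ingress-controller/configuration/virtualserver-and-virtualserverroute-resources/#virtualserverroute-subroute

"In the case of a prefix, the path must start with the same path as the path of the route of the VirtualServer that references this resource"

path: /coffee
action:
  pass: coffee

Will /coffee be passed on to the application?

I ask because when I deploy a VirtualServer with the route below, it does not work:

path: /one
action:
  pass: hellok8s

However, this route, which I used before, does work:

path: /
action:
  pass: hellok8s

For example, if I have app-1 and app-2, should I distinguish them by host or by subpath?

- app-1: helloworld.test.com
- app-2: helloworld2.test.com

Or is there a way to distinguish them by path, as below?

- app-1: helloworld.test.com/appone
- app-2: helloworld.test.com/apptwo

--- Edit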

apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: hellok8s-app-vs
spec:
  host: helloworld.moonshot.com
  tls:
    secret: nginx-tls-secret
    # basedOn: scheme
    redirect:
      enable: true
      code: 301
  upstreams:
  - name: hellok8s
    service: hellok8s-service
    port: 8080
  routes:
  - path: /one
    action:
      proxy:
        upstream: hellok8s
        rewritePath: /

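Solution

The path is the URL that NGINX exposes to the outside world. What happens to that path internally depends on the sub-properties of action. Some examples:

Here /coffee is what the end user sees, and the request is passed to the coffee upstream. So if coffee is a service in K8s running on 8080, the request lands at coffee:8080. Note that pass on its own forwards the request URI as received (still /coffee); to change the path the application sees, use action.proxy with rewritePath.

path: /coffee
action:
  pass: coffee

There are more actions, though. With action.proxy you can define at a finer-grained level what should happen to the path. In the example below we forward to the coffee service, but the request path is rewritten to /filtercoffee:

proxy:
  upstream: coffee
  rewritePath: /filtercoffee

You can also use redirect or return in place of pass, but each action must use exactly one of the four types listed in the documentation (pass, redirect, return, proxy).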
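To answer the app-1 / app-2 part of the question, the following is a minimal sketch of a single VirtualServer that separates two applications by path on one host. The resource name, upstream names, Service names, and ports are hypothetical placeholders, not taken from the original question. The trailing slash on each route path is a deliberate choice so that a request such as /appone/x reaches the application as /x; whether that mapping suits a given application is an assumption to verify.

```yaml
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: helloworld-vs            # hypothetical name
spec:
  host: helloworld.test.com
  upstreams:
  - name: app-one                # hypothetical upstream for app-1
    service: app-1-service       # hypothetical Service name
    port: 8080                   # assumed Service port
  - name: app-two                # hypothetical upstream for app-2
    service: app-2-service
    port: 8080
  routes:
  - path: /appone/
    action:
      proxy:
        upstream: app-one
        rewritePath: /           # strip the /appone/ prefix before proxying
  - path: /apptwo/
    action:
      proxy:
        upstream: app-two
        rewritePath: /
```

Separating the applications by host instead (helloworld.test.com and helloworld2.test.com) would take two VirtualServer resources, one per host, each with a single route on /.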

Kubernetes - - k8s - nginx-ingress

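1. Introduction

- Kubernetes can expose services in three ways: LoadBalancer Service, NodePort Service, and Ingress.

- To load-balance across service instances and to support service discovery between services, Kubernetes provides the Service object; to allow access from outside the cluster, it provides the Ingress object.

1.1 NodePort type

- If type is set to "NodePort", the Kubernetes master allocates a port from the configured range (default: 30000-32767), and every Node proxies that port (the same port on every Node) to the Service. The port is reported in the Service's spec.ports[*].nodePort field.

- To request a specific port number, set the nodePort value; the system either allocates that port or the API call fails (in other words, you have to take care of possible port conflicts yourself).

- This gives developers the freedom to set up their own load balancers, to configure environments that Kubernetes does not fully support, or to expose the IP addresses of one or more Nodes directly.

- Note that the Service is then visible both as <NodeIP>:spec.ports[*].nodePort and as spec.clusterIP:spec.ports[*].port.

- A NodePort Service exposes a port on the nodes and maps it to a specific service. This is simple and intuitive, but as the number of Services in the cluster grows, more and more ports are needed, port conflicts become likely, and the ports become hard to manage. For small clusters it still works well.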

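1.2 LoadBalancer type

- A LoadBalancer Service integrates Kubernetes with cloud platform components (for example GCE and AWS abroad, or Alibaba Cloud in China): Kubernetes asks the underlying cloud platform to create a load balancer. This is convenient for clusters that run on such a platform, but it is limited to them.

- On cloud providers that support external load balancers, setting type to "LoadBalancer" provisions a load balancer for the Service. The load balancer is created asynchronously, and information about it is published in the Service's status.loadBalancer field.

- Traffic from the external load balancer is directed at the backend Pods, though exactly how that works depends on the cloud provider. Some cloud providers allow loadBalancerIP to be specified; in those cases the load balancer is created with the user-specified loadBalancerIP. If loadBalancerIP is not specified, the load balancer is assigned an ephemeral IP. If loadBalancerIP is specified but the cloud provider does not support the feature, the value is ignored.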
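As a rough illustration of the LoadBalancer type described above, a Service manifest might look like the sketch below. The name my-nginx-lb is hypothetical, and the selector assumes pods labeled run: my-nginx, as in the test Deployment used later in this article. It only provisions anything on a cluster whose cloud provider supports external load balancers.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-nginx-lb        # hypothetical name
spec:
  type: LoadBalancer       # ask the cloud provider for an external load balancer
  selector:
    run: my-nginx          # assumes pods carrying this label
  ports:
  - port: 80               # port exposed by the load balancer
    targetPort: 80         # container port on the pods
    protocol: TCP
```

Once the provider has finished creating the load balancer, its address is reported under status.loadBalancer of the Service.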

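1.3 Ingress explained

- Ingress is the entry point for traffic coming from outside the Kubernetes cluster; it forwards users' URL requests to different services. Ingress plays the role of a load-balancing reverse proxy such as nginx or apache, together with the rule definitions, that is, the URL routing information. The Ingress controller keeps that routing information up to date.

- Normally the IPs of services and pods are reachable only from inside the cluster. Requests from outside have to be forwarded by a load balancer to the NodePort that the service exposes on the Nodes, and kube-proxy then forwards them to the relevant Pods.

- Ingress can give services externally reachable URLs, load balancing, SSL termination, HTTP routing, and so on. To apply these Ingress rules, the cluster administrator must deploy an Ingress controller, which watches Ingress and service changes, configures the load balancer according to the rules, and provides the access entry point.

- The Ingress Controller is essentially a watcher. It keeps talking to the Kubernetes API and tracks changes to backend services and pods in real time (pods or services being added or removed). With that information and the Ingress rules described below, it generates configuration, updates the reverse-proxy load balancer, and reloads its configuration, which achieves service discovery.

1.3.1 Nginx Ingress

- nginx-ingress can proxy at layer 7 and layer 4 (layer 4 proxying is configured through a ConfigMap; layer 7 is the Nginx reverse proxy). Its main job is to expose services externally, with load balancing and other extras.

- The reverse-proxy load balancer is usually exposed through a Service port; it receives requests and forwards them according to the rules defined in the ingress. It is typically nginx, haproxy, traefik, or similar.

- ingress is a Kubernetes resource object used to define rules: requests for a given domain name are forwarded to a specified Service in the cluster. It is defined in a YAML file, and one or more Ingress rules can be defined for one or more Services.

- The Ingress Controller is the controller: it continuously watches the Kubernetes API, picks up changes to backend Services and Pods (additions, deletions, and so on) in real time, combines them with the rules defined in the Ingress to generate configuration, then dynamically updates the Nginx load balancer above and reloads it so the configuration takes effect. This is how automatic service discovery is achieved.

- At runtime the nginx-ingress module consists of three main parts: NginxController, Store, and SyncQueue.

- Store collects runtime information from the Kubernetes APIServer, watches for changes to resources (ingress, service, and so on), and writes update events into a ring channel.

- The SyncQueue goroutine periodically scans the syncQueue. When it finds a task it performs the update: it pulls the latest runtime data through Store and generates a new nginx configuration according to the rules (some updates require a reload, in which case the new configuration is written locally and a reload is executed). It then performs the dynamic update by building POST data and sending a POST request to the local Nginx Lua service module.

- NginxController is the intermediary: it listens on updateChannel and, whenever a configuration update event arrives, writes an update request into the syncQueue.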

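2. Deploying Nginx ingress

- Download: https://github.com/kubernetes/ingress-nginx/archive/nginx-0.22.0.tar.gz

- The ingress-nginx manifests are in the deploy directory. The purpose of each file:

- configmap.yaml: provides the ConfigMaps used to update the nginx configuration online, including the ConfigMaps for L4 load-balancing configuration

- namespace.yaml: creates a dedicated namespace, ingress-nginx

- rbac.yaml: creates the roles and role bindings used for RBAC

- with-rbac.yaml: the nginx-ingress-controller component with RBAC applied

- mandatory.yaml: all of the other manifests combined

2.1 Modify with-rbac.yaml or mandatory.yaml

- kind: DaemonSet: the upstream file uses a Deployment with replicas set to 1, so a single nginx-ingress-controller pod starts on one node. External traffic reaches that node and is balanced from there to the internal services. To avoid a single point of failure in the test environment, change it to a DaemonSet and delete replicas. Combined with a node selector, nginx-ingress-controller pods then start on designated nodes, so several nodes run the controller, and these nodes can later be added to an external hardware load-balancer group for high availability.

- hostNetwork: true: add this field to expose the service port (80) of the nginx-ingress-controller pod on the node

- nodeSelector: restricts scheduling so that only nodes labeled custom/ingress-controller-ready run the DaemonSet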

# with-rbac.yaml
apiVersion: apps/v1
# kind: Deployment
kind: DaemonSet
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  # replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      serviceAccountName: nginx-ingress-serviceaccount
      hostNetwork: true
      containers:
        - name: nginx-ingress-controller
          image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.22.0
          args:
            - /nginx-ingress-controller
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --publish-service=$(POD_NAMESPACE)/ingress-nginx
            - --annotations-prefix=nginx.ingress.kubernetes.io
          securityContext:
            allowPrivilegeEscalation: true
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            # www-data -> 33
            runAsUser: 33
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - name: http
              containerPort: 80
            - name: https
              containerPort: 443
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
      nodeSelector:
        custom/ingress-controller-ready: "true"
---

2.3 Label the nodes that should run nginx-ingress-controller

kubectl label nodes 192.168.2.101 custom/ingress-controller-ready=true

kubectl label nodes 192.168.2.102 custom/ingress-controller-ready=true

kubectl label nodes 192.168.2.103 custom/ingress-controller-ready=true

2.4 Create nginx-ingress

kubectl create -f mandatory.yaml

- or

kubectl create -f configmap.yaml

kubectl create -f namespace.yaml

kubectl create -f rbac.yaml

kubectl create -f with-rbac.yaml

3. Testing ingress

3.1 Create an apache Service

# cat > my-apache.yaml << EOF
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: my-apache
spec:
  replicas: 2
  template:
    metadata:
      labels:
        run: my-apache
    spec:
      containers:
      - name: my-apache
        image: httpd
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: my-apache
  labels:
    run: my-apache
spec:
  type: NodePort
  ports:
  - port: 80
    targetPort: 80
    nodePort: 30002
  selector:
    run: my-apache
EOF

3.2 Create an nginx Service

# cat > my-nginx.yaml << EOF
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: my-nginx
spec:
  replicas: 2
  template:
    metadata:
      labels:
        run: my-nginx
    spec:
      containers:
      - name: my-nginx
        image: nginx
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: my-nginx
  labels:
    run: my-nginx
spec:
  type: NodePort
  ports:
  - port: 80
    targetPort: 80
    nodePort: 30001
  selector:
    run: my-nginx
EOF

3.3 Configure the ingress forwarding rules

- host: the domain name

- path: the URL context path

- backend: the backend to forward to, given as serviceName: and servicePort:

# cat > test-ingress.yaml << EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: test-ingress
  namespace: default
spec:
  rules:
  - host: test.apache.ingress
    http:
      paths:
      - path: /
        backend:
          serviceName: my-apache
          servicePort: 80
  - host: test.nginx.ingress
    http:
      paths:
      - path: /
        backend:
          serviceName: my-nginx
          servicePort: 80
EOF
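The manifest above uses the extensions/v1beta1 Ingress API, which matches the ingress-nginx 0.22.0 era of this walkthrough but was removed in Kubernetes 1.22. On a newer cluster, the same two host rules could be sketched with networking.k8s.io/v1 roughly as follows. The ingressClassName value nginx is an assumption about how the controller was installed; the hosts and Service names are the ones used above.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: test-ingress
  namespace: default
spec:
  ingressClassName: nginx      # assumption: the controller's IngressClass is named "nginx"
  rules:
  - host: test.apache.ingress
    http:
      paths:
      - path: /
        pathType: Prefix       # pathType is required in networking.k8s.io/v1
        backend:
          service:
            name: my-apache
            port:
              number: 80
  - host: test.nginx.ingress
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: my-nginx
            port:
              number: 80
```

The structural differences are the required pathType field and the nested backend.service.name / backend.service.port.number in place of serviceName and servicePort.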

3.4 Check status

# kubectl get ingress

NAME HOSTS ADDRESS PORTS AGE

test-ingress test.apache.ingress,test.nginx.ingress 80 23s

# kubectl get deploy,pod,svc

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deployment.extensions/my-apache 2 2 2 1 12s

deployment.extensions/my-nginx 2 2 2 2 12s

NAME READY STATUS RESTARTS AGE

pod/my-apache-57874fd49c-dc4vx 1/1 Running 0 12s

pod/my-apache-57874fd49c-lfhld 0/1 ContainerCreating 0 12s

pod/my-nginx-756f645cd7-fvq9d 1/1 Running 0 11s

pod/my-nginx-756f645cd7-ngj99 1/1 Running 0 12s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.254.0.1 <none> 443/TCP 10d

service/my-apache NodePort 10.254.95.131 <none> 80:30002/TCP 12s

service/my-nginx NodePort 10.254.92.19 <none> 80:30001/TCP 11s

3.5 Resolve the domain names to the ipvs virtual IP (VIP) and test access

3.5.1 Use -H to set the Host header for the domain

- test.apache.ingress

# curl -v http://192.168.2.100 -H 'host: test.apache.ingress'

* About to connect() to 192.168.2.100 port 80 (#0)

* Trying 192.168.2.100...

* Connected to 192.168.2.100 (192.168.2.100) port 80 (#0)

> GET / HTTP/1.1

> User-Agent: curl/7.29.0

> Accept: */*

> host: test.apache.ingress

>

< HTTP/1.1 200 OK

< Server: nginx/1.15.8

< Date: Fri, 25 Jan 2019 08:24:37 GMT

< Content-Type: text/html

< Content-Length: 45

< Connection: keep-alive

< Last-Modified: Mon, 11 Jun 2007 18:53:14 GMT

< ETag: "2d-432a5e4a73a80"

< Accept-Ranges: bytes

<

<html><body><h1>It works!</h1></body></html>

* Connection #0 to host 192.168.2.100 left intact

- test.nginx.ingress

# curl -v http://192.168.2.100 -H 'host: test.nginx.ingress'

* About to connect() to 192.168.2.100 port 80 (#0)

* Trying 192.168.2.100...

* Connected to 192.168.2.100 (192.168.2.100) port 80 (#0)

> GET / HTTP/1.1

> User-Agent: curl/7.29.0

> Accept: */*

> host: test.nginx.ingress

>

< HTTP/1.1 200 OK

< Server: nginx/1.15.8

< Date: Fri, 25 Jan 2019 08:24:53 GMT

< Content-Type: text/html

< Content-Length: 612

< Connection: keep-alive

< Vary: Accept-Encoding

< Last-Modified: Tue, 25 Dec 2018 09:56:47 GMT

< ETag: "5c21fedf-264"

< Accept-Ranges: bytes

<

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

body {

width: 35em;

margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif;

}

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

* Connection #0 to host 192.168.2.100 left intact

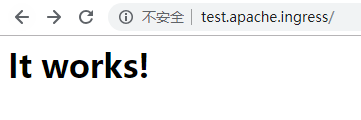

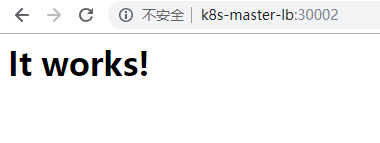

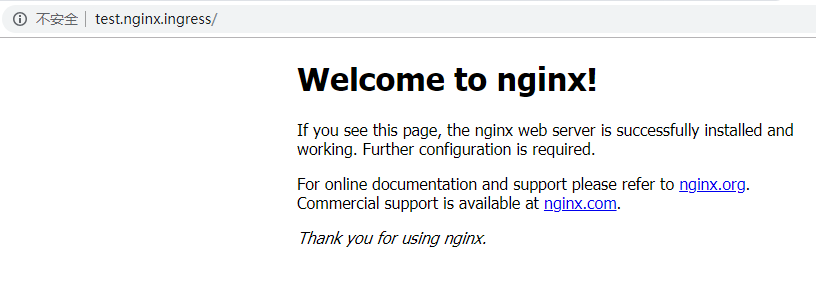

3.5.2 Access from a browser

- http://test.apache.ingress/

- http://k8s-master-lb:30002/

- http://test.nginx.ingress/

- http://k8s-master-lb:30001/

4. Deploying monitoring

4.1 Configure the ingress

# cat > monitoring/prometheus-grafana-ingress.yaml << EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: prometheus-grafana-ingress
  namespace: ingress-nginx
spec:
  rules:
  - host: grafana.k8s.ing
    http:
      paths:
      - path: /
        backend:
          serviceName: grafana
          servicePort: 3000
  - host: prometheus.k8s.ing
    http:
      paths:
      - path: /
        backend:
          serviceName: prometheus-server
          servicePort: 9090
EOF

4.2 Download the YAML files from the deploy/monitoring directory of the ingress-nginx repository

# ls monitoring/

configuration.yaml grafana.yaml prometheus-grafana-ingress.yaml prometheus.yaml

4.3 Deploy the services

# kubectl apply -f monitoring/

4.4 Check status

# kubectl get pod,svc,ingress -n ingress-nginx

NAME READY STATUS RESTARTS AGE

pod/grafana-5ccff7668d-7lk6q 1/1 Running 0 2d14h

pod/prometheus-server-7f87788f6-7zcfx 1/1 Running 0 2d14h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/glusterfs-dynamic-pvc-grafana ClusterIP 10.254.146.102 <none> 1/TCP 2d14h

service/glusterfs-dynamic-pvc-prometheus ClusterIP 10.254.160.58 <none> 1/TCP 2d14h

service/grafana NodePort 10.254.244.77 <none> 3000:30303/TCP 2d14h

service/prometheus-server NodePort 10.254.168.143 <none> 9090:32090/TCP 2d14h

NAME HOSTS ADDRESS PORTS AGE

ingress.extensions/prometheus-grafana-ingress grafana.k8s.ing,prometheus.k8s.ing 80 2d14h

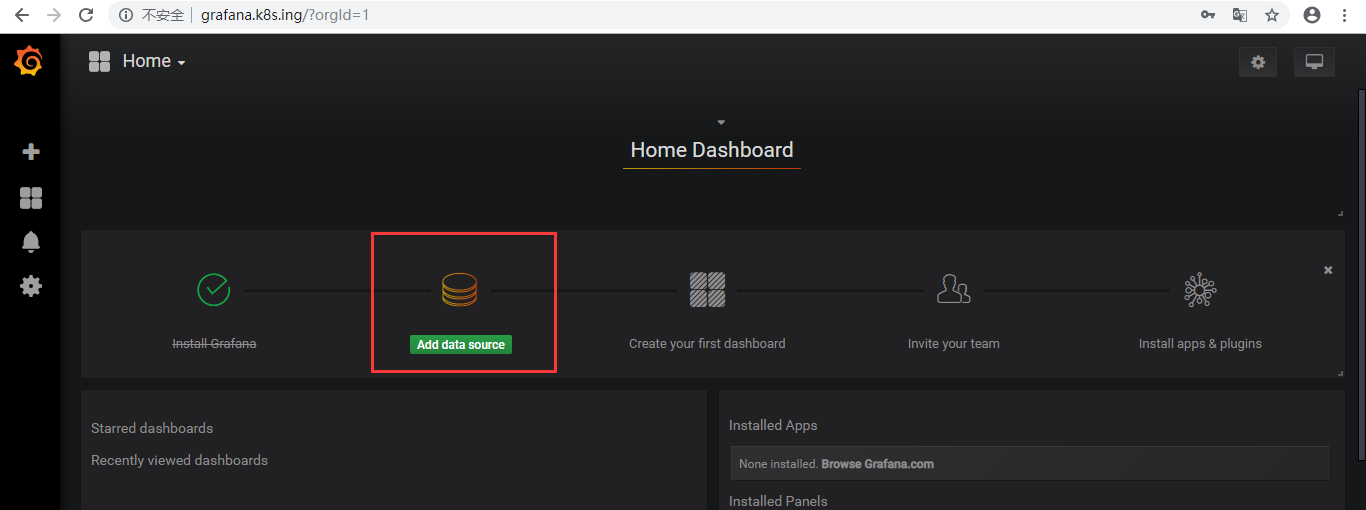

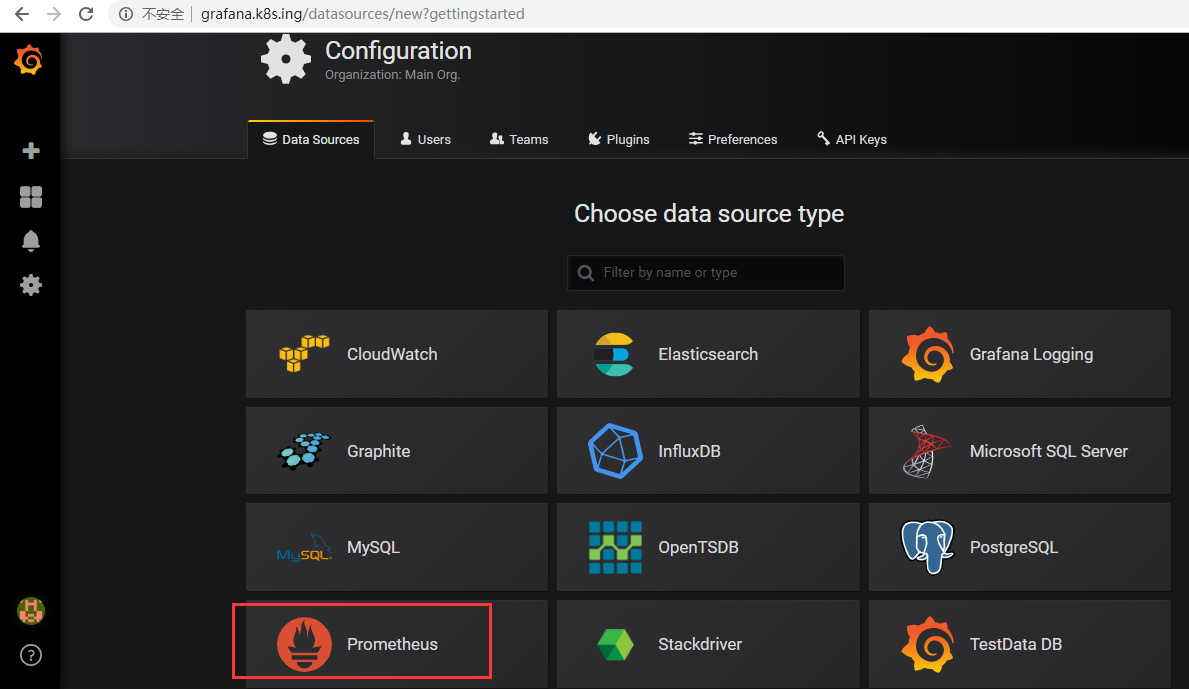

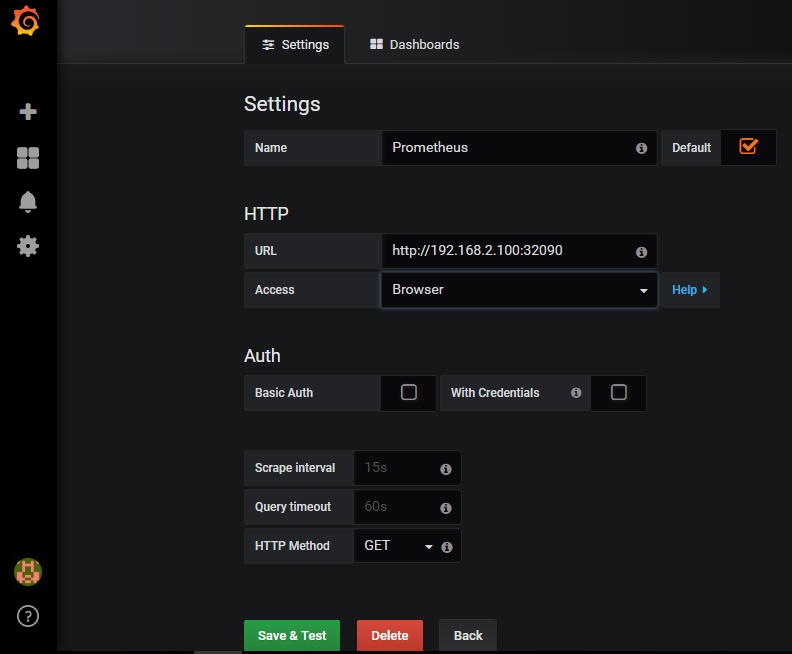

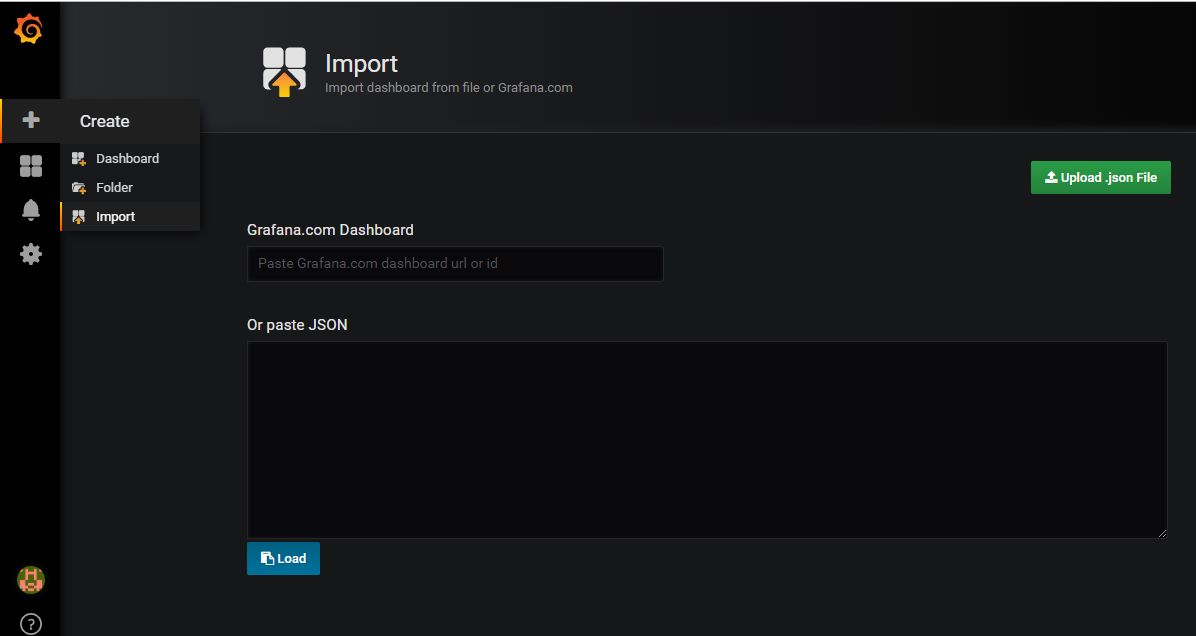

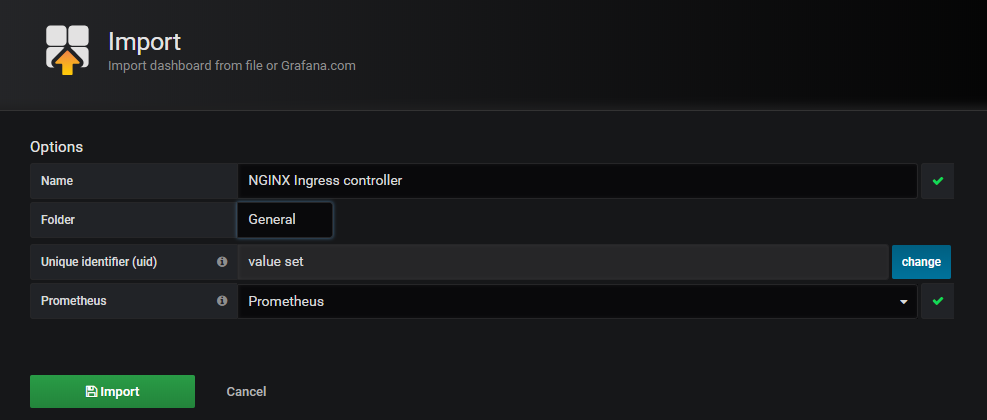

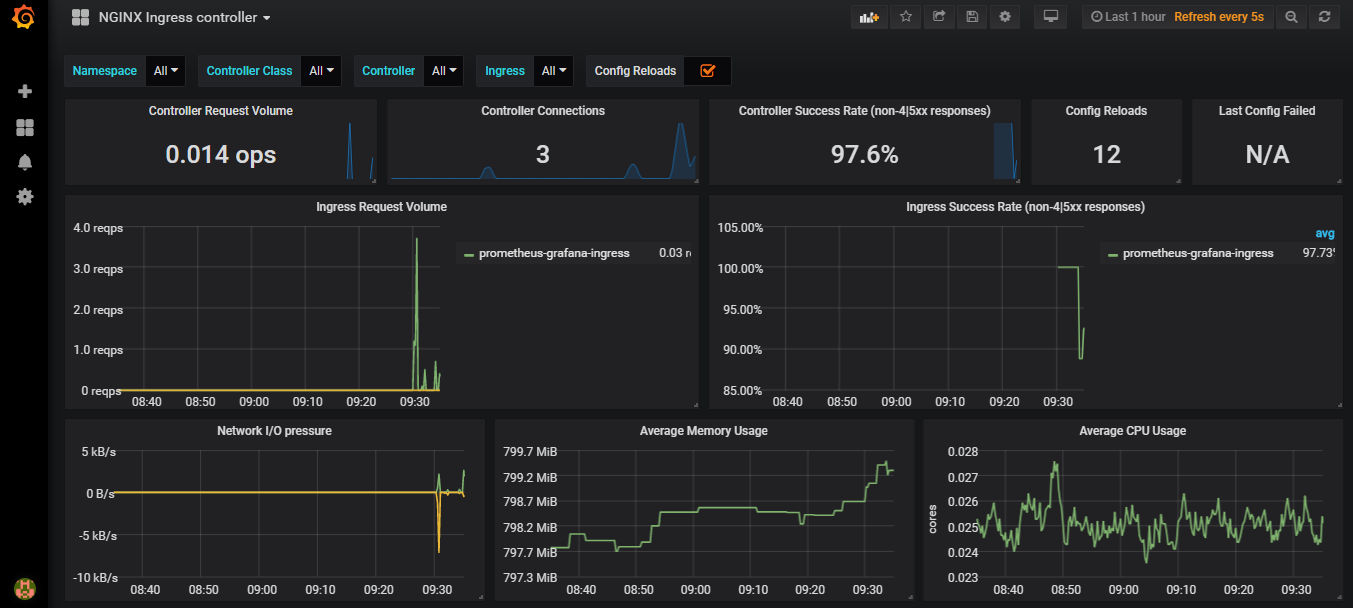

4.5 Configure grafana

- Resolve the domain name and open the page: http://grafana.k8s.ing

- Import the JSON file

- Download nginx.json from the deploy/grafana/dashboards directory of the ingress-nginx repository

- References:

- https://blog.csdn.net/shida_csdn/article/details/84032019

- http://blog.51cto.com/devingeng/2149377

- https://www.jianshu.com/p/ed97007604d7

Kubernetes - Launch Single Node Kubernetes Cluster

Minikube is a tool that makes it easy to run Kubernetes locally. Minikube runs a single-node Kubernetes cluster inside a VM on your laptop for users looking to try out Kubernetes or develop with it day-to-day.

More details can be found at https://github.com/kubernetes/minikube

Step 1 - Start Minikube

Minikube has been installed and configured in the environment. Check that it is properly installed by running the minikube version command:

minikube version

Start the cluster by running the minikube start command:

minikube start

Great! You now have a running Kubernetes cluster in your online terminal. Minikube started a virtual machine for you, and a Kubernetes cluster is now running in that VM.

Step 2 - Cluster Info

The cluster can be interacted with using the kubectl CLI. This is the main approach used for managing Kubernetes and the applications running on top of the cluster.

Details of the cluster and its health status can be discovered via

kubectl cluster-info

To view the nodes in the cluster, use

kubectl get nodes

If the node is marked as NotReady then it is still starting the components.

This command shows all nodes that can be used to host our applications. Now we have only one node, and we can see that its status is Ready (it is ready to accept applications for deployment).

Step 3 - Deploy Containers

With a running Kubernetes cluster, containers can now be deployed.

The kubectl run command allows containers to be deployed onto the cluster:

kubectl run first-deployment --image=katacoda/docker-http-server --port=80

The status of the deployment can be discovered via the running Pods:

kubectl get pods

Once the container is running it can be exposed via different networking options, depending on requirements. One possible solution is NodePort, which provides a dynamic port to a container.

kubectl expose deployment first-deployment --port=80 --type=NodePort

The commands below find the allocated port and execute an HTTP request.

export PORT=$(kubectl get svc first-deployment -o go-template='{{range.spec.ports}}{{if .nodePort}}{{.nodePort}}{{"\n"}}{{end}}{{end}}')
echo "Accessing host01:$PORT"
curl host01:$PORT

The result is the response from the container that processed the request.

Step 4 - Dashboard

The Kubernetes dashboard allows you to view your applications in a UI. In this deployment, the dashboard has been made available on port 30000.

The URL to the dashboard is https://2886795296-30000-ollie02.environments.katacoda.com/

Kubernetes Nginx Ingress Controller

Last update: January 17, 2019

Ingress is the built‑in Kubernetes load‑balancing framework for HTTP traffic. With Ingress, you control the routing of external traffic. When running on public clouds like AWS or GKE, the load-balancing feature is available out of the box. You don't need to define Ingress rules. In this post, I will focus on creating a Kubernetes Nginx Ingress controller running on Vagrant or any other non-cloud based solution, like bare metal deployments. I deployed my test cluster on Vagrant, with kubeadm.

Create a Sample App Deployment

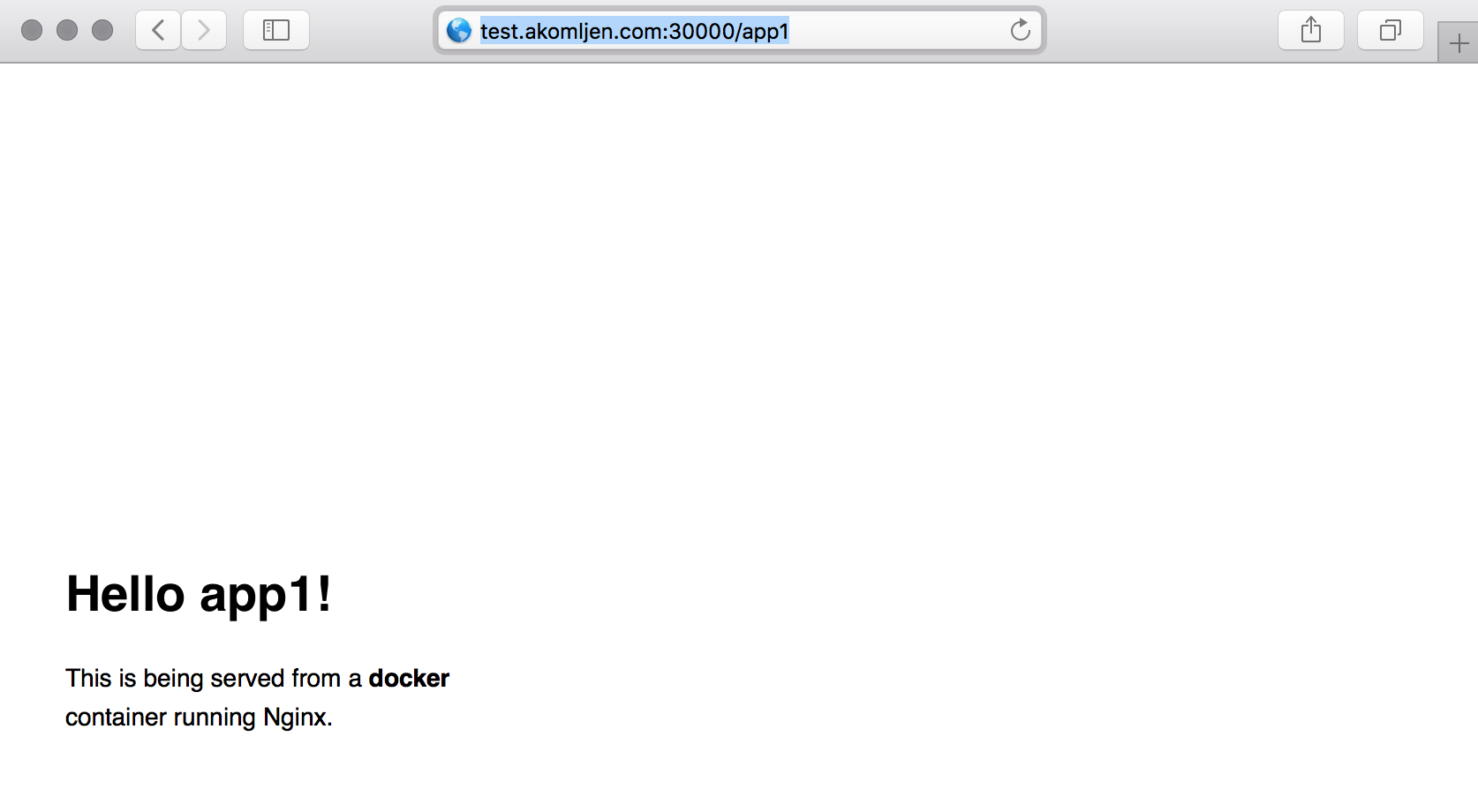

For this lab, let's create two simple web apps based on the dockersamples/static-site docker image. Those are Nginx containers that display the application name, which will help us identify which app we are accessing. The result, both apps accessible through the load balancer:

Here is the app deployment resource, the same web app twice with a different name and two replicas for each:

⚡ cat > app-deployment.yaml <<EOF
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: app1
spec:
  replicas: 2
  template:
    metadata:
      labels:
        app: app1
    spec:
      containers:
      - name: app1
        image: dockersamples/static-site
        env:
        - name: AUTHOR
          value: app1
        ports:
        - containerPort: 80
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: app2
spec:
  replicas: 2
  template:
    metadata:
      labels:
        app: app2
    spec:
      containers:
      - name: app2
        image: dockersamples/static-site
        env:
        - name: AUTHOR
          value: app2
        ports:
        - containerPort: 80
EOF

And same for services:

⚡ cat > app-service.yaml <<EOF
apiVersion: v1
kind: Service
metadata:
  name: appsvc1
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: app1
---
apiVersion: v1
kind: Service
metadata:
  name: appsvc2
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: app2
EOF

Next, we'll create the above resources:

⚡ kubectl create -f app-deployment.yaml -f app-service.yaml

Create Nginx Ingress Controller

If you prefer Helm, installation of the Nginx Ingress controller is easier. This article is the hard way, but you will understand the process better.

All resources for Nginx Ingress controller will be in a separate namespace, so let's create it:

⚡ kubectl create namespace ingress

The first step is to create a default backend endpoint. Default endpoint redirects all requests which are not defined by Ingress rules:

⚡ cat > default-backend-deployment.yaml <<EOF
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: default-backend
spec:
  replicas: 2
  template:
    metadata:
      labels:
        app: default-backend
    spec:
      terminationGracePeriodSeconds: 60
      containers:
      - name: default-backend
        image: gcr.io/google_containers/defaultbackend:1.0
        livenessProbe:
          httpGet:
            path: /healthz
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 30
          timeoutSeconds: 5
        ports:
        - containerPort: 8080
        resources:
          limits:
            cpu: 10m
            memory: 20Mi
          requests:
            cpu: 10m
            memory: 20Mi
EOF

And to create a default backend service:

⚡ cat > default-backend-service.yaml <<EOF
apiVersion: v1
kind: Service
metadata:
  name: default-backend
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 8080
  selector:
    app: default-backend
EOF

We will create those resources in ingress namespace:

⚡ kubectl create -f default-backend-deployment.yaml -f default-backend-service.yaml -n=ingress

Then, we need to create a Nginx config to show a VTS page on our load balancer:

⚡ cat > nginx-ingress-controller-config-map.yaml <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-ingress-controller-conf
  labels:
    app: nginx-ingress-lb
data:
  enable-vts-status: 'true'
EOF

⚡ kubectl create -f nginx-ingress-controller-config-map.yaml -n=ingress

And here is the actual Nginx Ingress controller deployment:

⚡ cat > nginx-ingress-controller-deployment.yaml <<EOF
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx-ingress-controller
spec:
  replicas: 1
  revisionHistoryLimit: 3
  template:
    metadata:
      labels:
        app: nginx-ingress-lb
    spec:
      terminationGracePeriodSeconds: 60
      serviceAccount: nginx
      containers:
        - name: nginx-ingress-controller
          image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.9.0
          imagePullPolicy: Always
          readinessProbe:
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
          livenessProbe:
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            timeoutSeconds: 5
          args:
            - /nginx-ingress-controller
            - --default-backend-service=\$(POD_NAMESPACE)/default-backend
            - --configmap=\$(POD_NAMESPACE)/nginx-ingress-controller-conf
            - --v=2
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
            - containerPort: 80
            - containerPort: 18080
EOF

Notice the --v=2 argument, which sets the log level and shows the Nginx config diff on start. Don't create the Nginx controller yet.

Kubernetes and RBAC

Before we create Ingress controller and move forward you might need to create RBAC rules. Clusters deployed with kubeadm have RBAC enabled by default:

⚡ cat > nginx-ingress-controller-roles.yaml <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: nginx-role
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - endpoints
  - nodes
  - pods
  - secrets
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - services
  verbs:
  - get
  - list
  - update
  - watch
- apiGroups:
  - extensions
  resources:
  - ingresses
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - extensions
  resources:
  - ingresses/status
  verbs:
  - update
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: nginx-role
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-role
subjects:
- kind: ServiceAccount
  name: nginx
  namespace: ingress
EOF

⚡ kubectl create -f nginx-ingress-controller-roles.yaml -n=ingress

So now you can create the Ingress controller as well:

⚡ kubectl create -f nginx-ingress-controller-deployment.yaml -n=ingress

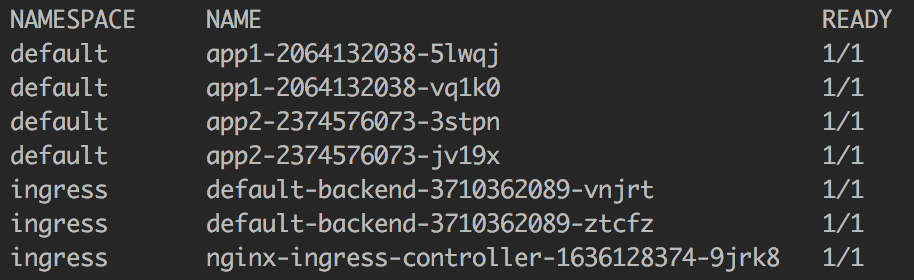

If you check your pods, you should get something like this:

Create Ingress Rules for Applications

Everything is ready now. The last step is to define Ingress rules for load balancer status page:

⚡ cat > nginx-ingress.yaml <<EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: nginx-ingress
spec:
  rules:
  - host: test.akomljen.com
    http:
      paths:
      - backend:
          serviceName: nginx-ingress
          servicePort: 18080
        path: /nginx_status
EOF

And Ingress rules for sample web apps:

⚡ cat > app-ingress.yaml <<EOF
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
  name: app-ingress
spec:
  rules:
  - host: test.akomljen.com
    http:
      paths:
      - backend:
          serviceName: appsvc1
          servicePort: 80
        path: /app1
      - backend:
          serviceName: appsvc2
          servicePort: 80
        path: /app2
EOF

Notice the nginx.ingress.kubernetes.io/rewrite-target: / annotation. We are using the /app1 and /app2 paths, but the apps don't serve anything there. This annotation rewrites the request path to / before the request reaches the app. You can create both ingress rules now:

⚡ kubectl create -f nginx-ingress.yaml -n=ingress

⚡ kubectl create -f app-ingress.yaml
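The plain rewrite-target: / form shown above fits the 0.9.0 controller image used in this post. From ingress-nginx 0.22.0 onward, rewrite targets are expressed with regular-expression capture groups, so on a newer controller and cluster the same /app1 and /app2 routing might be sketched as follows. The ingressClassName value is an assumption about the installation; the host and Service names are the ones used above.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: app-ingress
  annotations:
    nginx.ingress.kubernetes.io/use-regex: "true"
    # $2 is the second capture group in each path below,
    # i.e. whatever follows /app1 or /app2
    nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  ingressClassName: nginx        # assumption: the controller's IngressClass is named "nginx"
  rules:
  - host: test.akomljen.com
    http:
      paths:
      - path: /app1(/|$)(.*)
        pathType: ImplementationSpecific
        backend:
          service:
            name: appsvc1
            port:
              number: 80
      - path: /app2(/|$)(.*)
        pathType: ImplementationSpecific
        backend:
          service:
            name: appsvc2
            port:
              number: 80
```

With this form a request for /app1/index.html is forwarded to appsvc1 as /index.html, and a bare /app1 is forwarded as /.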

Expose Nginx Ingress Controller

The last step is to expose nginx-ingress-lb deployment for external access. We will expose it with NodePort, but we could also use ExternalIPs here:

⚡ cat > nginx-ingress-controller-service.yaml <<EOF
apiVersion: v1
kind: Service
metadata:
  name: nginx-ingress
spec:
  type: NodePort
  ports:
    - port: 80
      nodePort: 30000
      name: http
    - port: 18080
      nodePort: 32000
      name: http-mgmt
  selector:
    app: nginx-ingress-lb
EOF

⚡ kubectl create -f nginx-ingress-controller-service.yaml -n=ingress

If you are running everything on VirtualBox, as I do, forward ports 30000 and 32000 from one Kubernetes worker node to localhost:

⚡ VBoxManage modifyvm "worker_node_vm_name" --natpf1 "nodeport,tcp,127.0.0.1,30000,,30000"

⚡ VBoxManage modifyvm "worker_node_vm_name" --natpf1 "nodeport2,tcp,127.0.0.1,32000,,32000"

Also add the test.akomljen.com domain to the hosts file:

⚡ echo "127.0.0.1 test.akomljen.com" | sudo tee -a /etc/hosts

You can verify everything by accessing at those endpoints:

http://test.akomljen.com:30000/app1

http://test.akomljen.com:30000/app2

http://test.akomljen.com:32000/nginx_status

NOTE: You can access apps using DNS name only, not IP directly!

Any other endpoint redirects the request to default backend. Ingress controller is functional now, and you could add more apps to it. For any problems during the setup, please leave a comment. Don’t forget to share this post if you find it useful.

Summary

Having an Ingress is the first step towards more automation on Kubernetes. Now you can also add automatic SSL with Let's Encrypt to increase security. If you don't want to manage all those configuration files manually, I suggest you look into Helm. Installing the Ingress controller would be only one command. Stay tuned for the next one.

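Installing and using Kubernetes Nginx Ingress

Table of Contents

- Overview

- Download the images needed for the environment

- Configure default-http-backend

- Configure ingress-controller

- Configure the services to test

- Configure ingress

- Problems

Overview

Anyone who has used Kubernetes knows that a Service has three network types: ClusterIP, NodePort, and LoadBalancer. What each type does is not covered here. A Service that has to be exposed externally can use NodePort or LoadBalancer, but with NodePort you need to know the IP of the node running the Service's pods, and LoadBalancer usually needs support from a third-party cloud provider; without such a provider it is not an option. There are many other alternatives; here I mainly explain how to expose a Service externally through ingress.

Download the images needed for the environment

- gcr.io/google_containers/nginx-ingress-controller:0.8.3 (the ingress controller image)

- gcr.io/google_containers/defaultbackend:1.0 (the image for the default-route service)

- gcr.io/google_containers/echoserver:1.0 (the service image used to test ingress; you can substitute your own service)

Note: if the images cannot be downloaded because of the GFW, they can be fetched from TenxCloud instead (just replace gcr.io with index.tenxcloud.com). I use my own private image registry, so the images shown below are the ones I tagged and pushed to that registry.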

Configure default-http-backend

First, edit default-http-backend.yaml:

apiVersion: v1
kind: ReplicationController
metadata:
  name: default-http-backend
spec:
  replicas: 1
  selector:
    app: default-http-backend
  template:
    metadata:
      labels:
        app: default-http-backend
    spec:
      terminationGracePeriodSeconds: 60
      containers:
      - name: default-http-backend
        # Any image is permissible as long as:
        # 1. It serves a 404 page at /
        # 2. It serves 200 on a /healthz endpoint
        image: hub.yfcloud.io/google_containers/defaultbackend:1.0
        livenessProbe:
          httpGet:
            path: /healthz
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 30
          timeoutSeconds: 5
        ports:
        - containerPort: 8080
        resources:
          limits:
            cpu: 10m
            memory: 20Mi
          requests:
            cpu: 10m
            memory: 20Mi

Then create the default-http-backend rc:

kubectl create -f default-http-backend.yaml

Finally, create the default-http-backend service. There are two ways: write a default-http-backend-service.yaml, or create it with the following command:

kubectl expose rc default-http-backend --port=80 --target-port=8080 --name=default-http-backend

Then check that the rc and the service were created successfully, and test that they can be reached: request the pod's IP at /healthz, or the service's IP at / (for the service, use its IP plus port).
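The text mentions a default-http-backend-service.yaml as the alternative to kubectl expose but does not show it. A minimal sketch of what such a file could contain, assuming it should mirror the command above (port 80 forwarding to container port 8080, selecting the rc's app: default-http-backend label):

```yaml
apiVersion: v1
kind: Service
metadata:
  name: default-http-backend
spec:
  selector:
    app: default-http-backend   # same label the ReplicationController puts on its pods
  ports:
  - port: 80                    # matches --port=80
    targetPort: 8080            # matches --target-port=8080
    protocol: TCP
```

It would be created with kubectl create -f default-http-backend-service.yaml, in the same namespace as the rc.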

Configure ingress-controller

First, edit the ingress-controller.yaml file:

apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx-ingress-controller
  labels:
    k8s-app: nginx-ingress-lb
spec:
  replicas: 1
  selector:
    k8s-app: nginx-ingress-lb
  template:
    metadata:
      labels:
        k8s-app: nginx-ingress-lb
        name: nginx-ingress-lb
    spec:
      terminationGracePeriodSeconds: 60
      containers:
      - image: hub.yfcloud.io/google_containers/nginx-ingress-controller:0.8.3
        name: nginx-ingress-lb
        imagePullPolicy: Always
        readinessProbe:
          httpGet:
            path: /healthz
            port: 80
            scheme: HTTP
        livenessProbe:
          httpGet:
            path: /healthz
            port: 80
            scheme: HTTP
          initialDelaySeconds: 10
          timeoutSeconds: 1
        # use downward API
        env:
          - name: POD_NAME
            valueFrom:
              fieldRef:
                fieldPath: metadata.name
          - name: POD_NAMESPACE
            valueFrom:
              fieldRef:
                fieldPath: metadata.namespace
          - name: KUBERNETES_MASTER
            value: http://183.131.19.231:8080
        ports:
        - containerPort: 80
          hostPort: 80
        - containerPort: 443
          hostPort: 443
        args:
        - /nginx-ingress-controller
        - --default-backend-service=$(POD_NAMESPACE)/default-http-backend
      imagePullSecrets:
      - name: hub.yfcloud.io.key

Create the ingress-controller rc:

kubectl create -f ingress-controller.yaml

Finally, verify that ingress-controller started correctly: find the IP of the ingress-controller pod and request ip:80/healthz. On success it returns ok.

Configure the services to test

First, edit test.yaml, which is used for testing:

apiVersion: v1
kind: ReplicationController
metadata:
  name: echoheaders
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: echoheaders
    spec:
      containers:
      - name: echoheaders
        image: hub.yfcloud.io/google_containers/echoserver:test
        ports:
        - containerPort: 8080

Several Service YAML files are created for testing:

sv-alp-default.yaml:

apiVersion: v1
kind: Service
metadata:
  name: echoheaders-default
  labels:
    app: echoheaders
spec:
  type: NodePort
  ports:
  - port: 80
    nodePort: 30302
    targetPort: 8080
    protocol: TCP
    name: http
  selector:
    app: echoheaders

sv-alp-x.yaml:

apiVersion: v1
kind: Service
metadata:
  name: echoheaders-x
  labels:
    app: echoheaders
spec:
  type: NodePort
  ports:
  - port: 80
    nodePort: 30301
    targetPort: 8080
    protocol: TCP
    name: http
  selector:
    app: echoheaders

sv-alp-y.yaml:

apiVersion: v1
kind: Service
metadata:
  name: echoheaders-y
  labels:
    app: echoheaders
spec:
  type: NodePort
  ports:
  - port: 80
    nodePort: 30284
    targetPort: 8080
    protocol: TCP
    name: http
  selector:
    app: echoheaders

Configure ingress

Edit ingress-alp.yaml:

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: echomap
spec:
  rules:
  - host: foo.bar.com
    http:
      paths:
      - path: /foo
        backend:
          serviceName: echoheaders-x
          servicePort: 80
  - host: bar.baz.com
    http:
      paths:
      - path: /bar
        backend:
          serviceName: echoheaders-y
          servicePort: 80
      - path: /foo
        backend:
          serviceName: echoheaders-x
          servicePort: 80

Finally, test it. There are two ways to test:

Method 1:

curl -v http://nodeip:80/foo -H 'host: foo.bar.com'

The result:

* About to connect() to 183.131.19.232 port 80 (#0)

* Trying 183.131.19.232...

* Connected to 183.131.19.232 (183.131.19.232) port 80 (#0)

> GET /foo HTTP/1.1

> User-Agent: curl/7.29.0

> Accept: */*

> host: foo.bar.com

>

< HTTP/1.1 200 OK

< Server: nginx/1.11.3

< Date: Wed, 12 Oct 2016 10:04:40 GMT

< Transfer-Encoding: chunked

< Connection: keep-alive

<

CLIENT VALUES:

client_address=(''10.1.58.3'', 57364) (10.1.58.3)

command=GET

path=/foo

real path=/foo

query=

request_version=HTTP/1.1

SERVER VALUES:

server_version=BaseHTTP/0.6

sys_version=Python/3.5.0

protocol_version=HTTP/1.0

HEADERS RECEIVED:

Accept=*/*

Connection=close

Host=foo.bar.com

User-Agent=curl/7.29.0

X-Forwarded-For=183.131.19.231

X-Forwarded-Host=foo.bar.com

X-Forwarded-Port=80

X-Forwarded-Proto=http

X-Real-IP=183.131.19.231

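* Connection #0 to host 183.131.19.232 left intact

To test the other routes, change the URL accordingly.

**Note:** nodeip is the IP of the node that runs the ingress-controller pod.

Method 2:

Add the following entries to the hosts file of the machine that makes the requests:

nodeip foo.bar.com

nodeip bar.baz.com

Then open these directly in a browser:

foo.bar.com/foo

bar.baz.com/bar

bar.baz.com/foo

All of them work.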

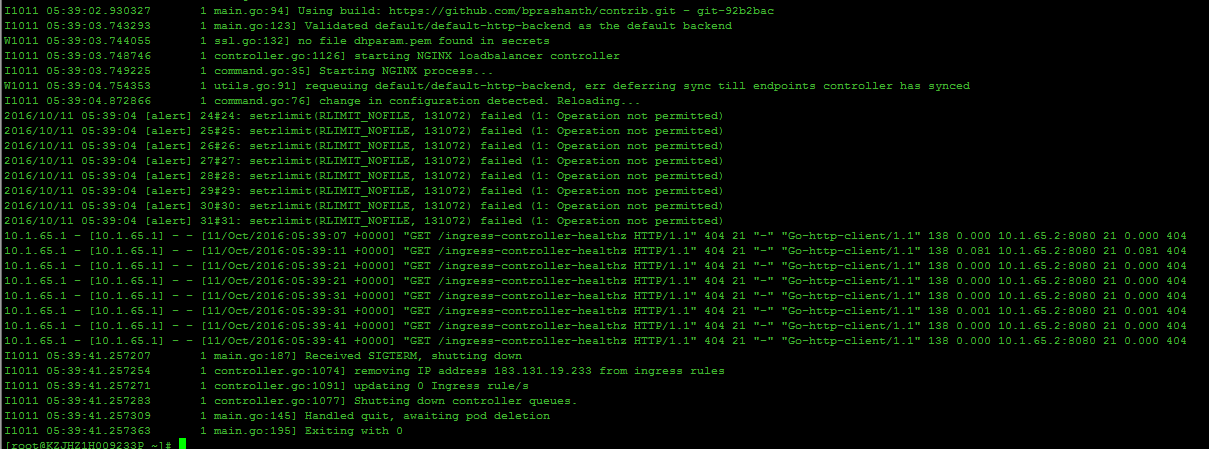

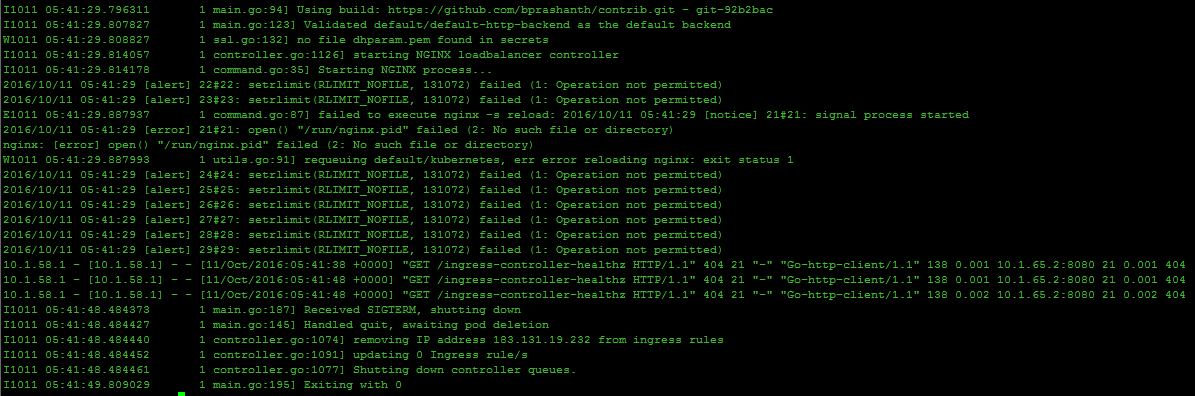

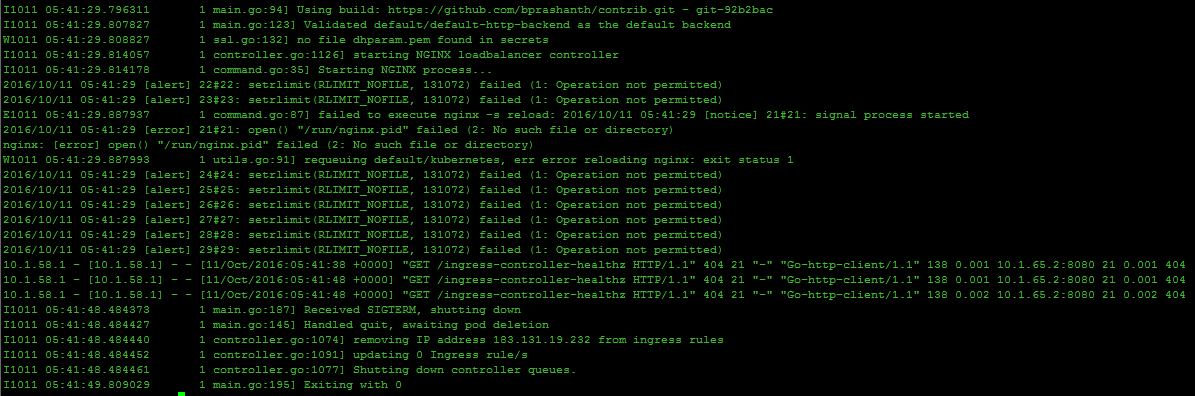

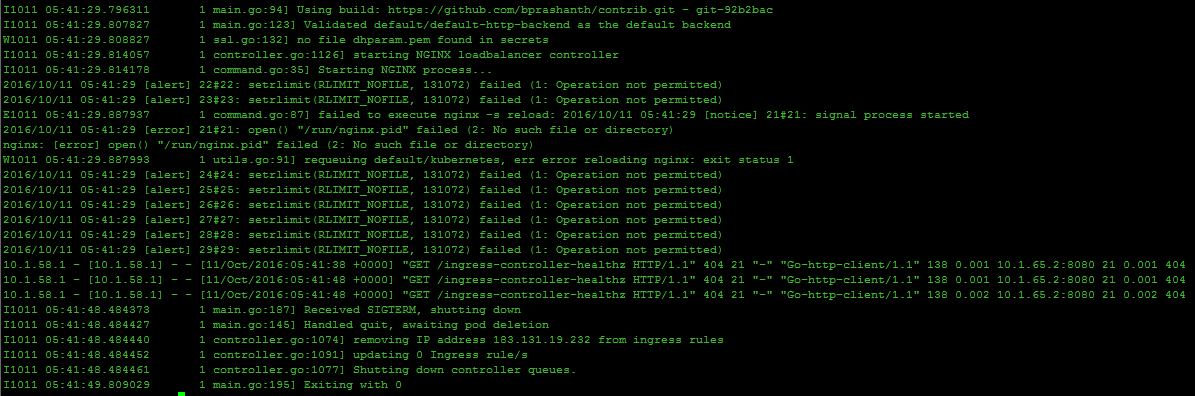

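Troubleshooting

Creating the ingress-controller produced errors (the YAML came from the official documentation; I only changed the image names):

Problem 1:

The error message above is why the ingress-controller failed to start. It was found by using docker logs to view the log of the ingress-controller container created by the RC.

Cause: judging from the error, the ingress controller connects to the master's apiserver at localhost:8080 by default. On a single-node Kubernetes setup this error does not occur. In a multi-node cluster it occurs whenever the ingress-controller pod's container runs on a node other than the master. (In clusters where the master also acts as a node, a pod that lands on the master does not hit the error either.)

Fix: add the following environment variable to ingress-controller.yaml:

    - name: KUBERNETES_MASTER
      value: http://183.131.19.231:8080

The resulting YAML file is the one shown earlier.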

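Problem 2:

This error appeared when I inspected the container of the RC's pod immediately after the ingress RC had been created successfully.

It led to a related follow-on problem, shown in the figure below:

Related problem 1:

This error appeared after the ingress RC had been created successfully: the first container failed, the stopped container was garbage-collected, and a new container was started. The error showed up when inspecting that restarted container of the RC's pod.

Cause: the health-check URL in the ingress controller source code was changed to /healthz, while the YAML in the official documentation still probes /ingress-controller-healthz. This URL is the controller's self-check endpoint; if the check still reports unhealthy after more than 8 attempts, the ingress container is killed.

Fix: change the health-check path in the YAML to /healthz:

    readinessProbe:
      httpGet:
        path: /healthz
        port: 80
        scheme: HTTP
    livenessProbe:
      httpGet:
        path: /healthz
        port: 80
        scheme: HTTP
      initialDelaySeconds: 10
      timeoutSeconds: 1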

[TOCM]

- 概述

- 下载搭建环境所需要的镜像

- 配置default-http-bankend

- 配置inrgess-controller

- 配置需要测试的service

- 配置ingress

- 问题集

概述

用过kubernetes的人都知道,kubernetes的service的网络类型有三种:cluertip,nodeport,loadbanlance,各种类型的作用就不在这里描述了。如果一个service想向外部暴露服务,有nodeport和loadbanlance类型,但是nodeport类型,你的知道service对应的pod所在的node的ip,而loadbanlance通常需要第三方云服务商提供支持。如果没有第三方服务商服务的就没办法做了。除此之外还有很多其他的替代方式,以下我主要讲解的是通过ingress的方式来实现service的对外服务的暴露。

下载搭建环境所需要的镜像

- gcr.io/google_containers/nginx-ingress-controller:0.8.3 (ingress controller 的镜像)

- gcr.io/google_containers/defaultbackend:1.0 (默认路由的servcie的镜像)

- gcr.io/google_containers/echoserver:1.0 (用于测试ingress的service镜像,当然这个你可以换成你自己的service都可以)

说明:由于GFW的原因下载不下来的,都可以到时速云去下载相应的镜像(只要把grc.io换成index.tenxcloud.com就可以了),由于我用的是自己私用镜像仓库,所以之后看到的镜像都是我自己tag之后上传到自己私有镜像仓库的。

配置default-http-bankend

首先便捷default-http-backend.yaml:

apiVersion: v1

kind: ReplicationController

metadata:

name: default-http-backend

spec:

replicas: 1

selector:

app: default-http-backend

template:

metadata:

labels:

app: default-http-backend

spec:

terminationGracePeriodSeconds: 60

containers:

- name: default-http-backend

# Any image is permissable as long as:

# 1. It serves a 404 page at /

# 2. It serves 200 on a /healthz endpoint

image: hub.yfcloud.io/google_containers/defaultbackend:1.0

livenessProbe:

httpGet:

path: /healthz

port: 8080

scheme: HTTP

initialDelaySeconds: 30

timeoutSeconds: 5

ports:

- containerPort: 8080

resources:

limits:

cpu: 10m

memory: 20Mi

requests:

cpu: 10m

memory: 20Mi然后创建创建default-http-backend的rc:

kubectl create -f default-http-backend .yaml最后创建default-http-backend的service,有两种方式创建,一种是:便捷default-http-backend-service.yaml,另一种通过如下命令创建:

kubectl expose rc default-http-backend --port=80 --target-port=8080 --name=default-http-backend最后查看相应的rc以及service是否成功创建:然后自己测试能否正常访问,访问方式如下,直接通过的pod的ip/healthz或者serive的ip/(如果是srevice的ip加port)

配置inrgess-controller

首先编辑ingress-controller.yaml文件:

apiVersion: v1

kind: ReplicationController

metadata:

name: nginx-ingress-controller

labels:

k8s-app: nginx-ingress-lb

spec:

replicas: 1

selector:

k8s-app: nginx-ingress-lb

template:

metadata:

labels:

k8s-app: nginx-ingress-lb

name: nginx-ingress-lb

spec:

terminationGracePeriodSeconds: 60

containers:

- image: hub.yfcloud.io/google_containers/nginx-ingress-controller:0.8.3

name: nginx-ingress-lb

imagePullPolicy: Always

readinessProbe:

httpGet:

path: /healthz

port: 80

scheme: HTTP

livenessProbe:

httpGet:

path: /healthz

port: 80

scheme: HTTP

initialDelaySeconds: 10

timeoutSeconds: 1

# use downward API

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

- name: KUBERNETES_MASTER

value: http://183.131.19.231:8080

ports:

- containerPort: 80

hostPort: 80

- containerPort: 443

hostPort: 443

args:

- /nginx-ingress-controller

- --default-backend-service=$(POD_NAMESPACE)/default-http-backend

imagePullSecrets:

- name: hub.yfcloud.io.key创建ingress-controller的rc:

kubectl create -f ingress-controller.yaml最后验证ingress-controller是否正常启动成功,方法 如下:查看ingress-controller中对应pod的ip,然后通过ip:80/healthz 访问,成功的话会返回 ok。

配置需要测试的service

首先编辑测试用的test.yaml:

apiVersion: v1

kind: ReplicationController

metadata:

name: echoheaders

spec:

replicas: 1

template:

metadata:

labels:

app: echoheaders

spec:

containers:

- name: echoheaders

image: hub.yfcloud.io/google_containers/echoserver:test

ports:

- containerPort: 8080测试service的yaml这里创建多个:

sv-alp-default.yaml:

apiVersion: v1

kind: Service

metadata:

name: echoheaders-default

labels:

app: echoheaders

spec:

type: NodePort

ports:

- port: 80

nodePort: 30302

targetPort: 8080

protocol: TCP

name: http

selector:

app: echoheaderssv-alp-x.yaml:

apiVersion: v1

kind: Service

metadata:

name: echoheaders-x

labels:

app: echoheaders

spec:

type: NodePort

ports:

- port: 80

nodePort: 30301

targetPort: 8080

protocol: TCP

name: http

selector:

app: echoheaderssv-alp-y.yaml:

apiVersion: v1

kind: Service

metadata:

name: echoheaders-y

labels:

app: echoheaders

spec:

type: NodePort

ports:

- port: 80

nodePort: 30284

targetPort: 8080

protocol: TCP

name: http

selector:

app: echoheaders接着是

配置ingress

编辑ingress-alp.yaml:

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: echomap

spec:

rules:

- host: foo.bar.com

http:

paths:

- path: /foo

backend:

serviceName: echoheaders-x

servicePort: 80

- host: bar.baz.com

http:

paths:

- path: /bar

backend:

serviceName: echoheaders-y

servicePort: 80

- path: /foo

backend:

serviceName: echoheaders-x

servicePort: 80最后测试,测试方法有两种,如下:

方法一:

curl -v http://nodeip:80/foo -H ''host: foo.bar.com''结果如下:

* About to connect() to 183.131.19.232 port 80 (#0)

* Trying 183.131.19.232...

* Connected to 183.131.19.232 (183.131.19.232) port 80 (#0)

> GET /foo HTTP/1.1

> User-Agent: curl/7.29.0

> Accept: */*

> host: foo.bar.com

>

< HTTP/1.1 200 OK

< Server: nginx/1.11.3

< Date: Wed, 12 Oct 2016 10:04:40 GMT

< Transfer-Encoding: chunked

< Connection: keep-alive

<

CLIENT VALUES:

client_address=(''10.1.58.3'', 57364) (10.1.58.3)

command=GET

path=/foo

real path=/foo

query=

request_version=HTTP/1.1

SERVER VALUES:

server_version=BaseHTTP/0.6

sys_version=Python/3.5.0

protocol_version=HTTP/1.0

HEADERS RECEIVED:

Accept=*/*

Connection=close

Host=foo.bar.com

User-Agent=curl/7.29.0

X-Forwarded-For=183.131.19.231

X-Forwarded-Host=foo.bar.com

X-Forwarded-Port=80

X-Forwarded-Proto=http

X-Real-IP=183.131.19.231

* Connection #0 to host 183.131.19.232 left intact其他路由的测试修改对应的url即可。

**注意:**nodeip是你的ingress-controller所对应的pod所在的node的ip。

方法二:

将访问url的主机的host中加上:

nodeip foo.bar.com

nodeip bar.baz.com在浏览器中直接访问:

foo.bar.com/foo

bar.baz.com/bar

bar.baz.com/foo都是可以的。

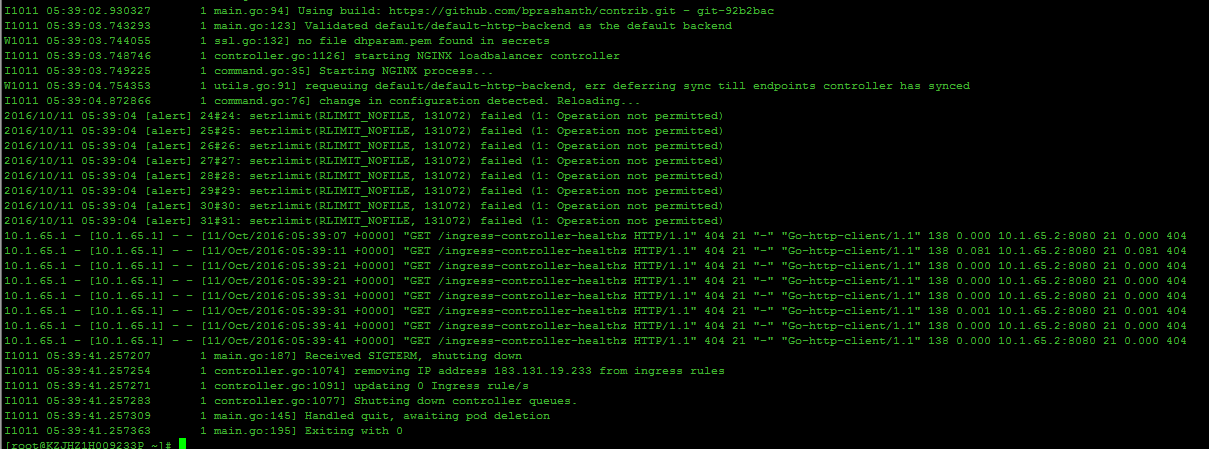

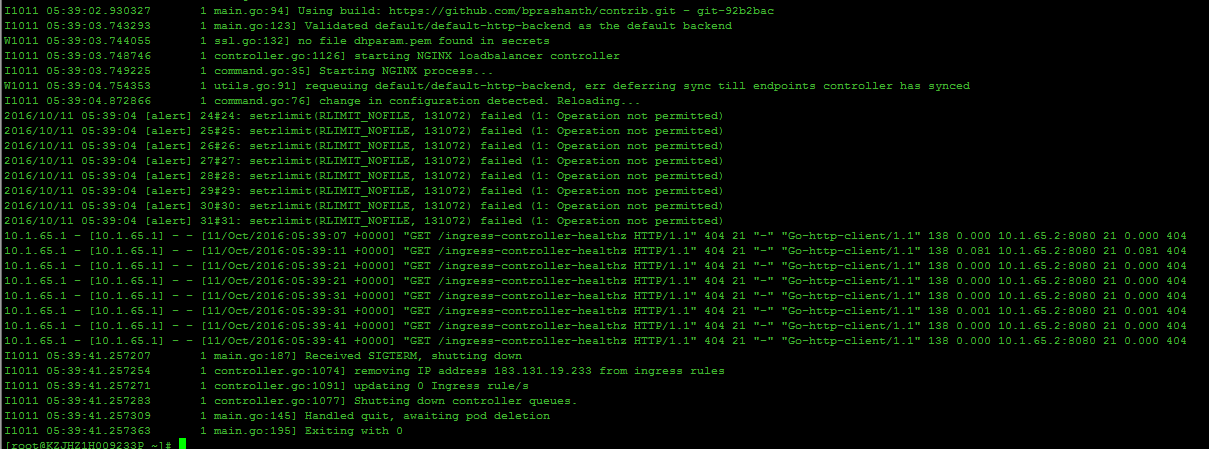

问题集

在创建ingress-controller的时候报错(ingress的yaml文件是官网中的yaml文件配置,我只改了相对应的镜像。):

问题一:

以上错误信息是ingress-controller创建失败的原因,通过docker log 查看rc中的对应的ingress-controller的容器的日志。

原因:从错误信息来看是因为ingress内部程序默认是通过localhost:8080连接master的apiserver,如果是单机版的k8s环境,就不会报这个错,如果是集群环境,并且你的ingress-controller的pod所对应的容器不是在master节点上(因为有的集群环境master也是node,这样的话,如果你的pod对应的容器在master节点上也不会报这个错),就会报这个错。

解决方法:在ingress-controller.yaml中加上如下参数,

- name: KUBERNETES_MASTER

value: http://183.131.19.231:8080之后的yaml文件就是如前面定义的yaml文件。

问题二:

这个问题是在创建ingress rc成功之后,马上查看rc对应的pod对应的容器时报的错!

从这个问题衍生出个连带的问题,如下图:

问题1:

这个问题是在创建ingress rc成功之后,当第一个容器失败,rc的垃圾机制回收第一次创建停止的容器后,重新启动的容器。查看rc对应的pod对应的容器时报的错!

原因:因为ingress中的源码的健康检查的url改成了 /healthz,而官方文档中的yaml中定义的健康检查的路由是/ingress-controller-healthz,这个url是ingress的自检url,当超过8次之后如果得到结果还是unhealthy,则会挂掉ingress对应的容器。

解决方法:将yaml中的健康检查的url改成:/healthz即可。:

readinessProbe:

httpGet:

path: /healthz

port: 80

scheme: HTTP

livenessProbe:

httpGet:

path: /healthz

port: 80

scheme: HTTP

initialDelaySeconds: 10

timeoutSeconds: 1目录 (Table of Contents)

[TOCM]

- 概述

- 下载搭建环境所需要的镜像

- 配置default-http-bankend

- 配置inrgess-controller

- 配置需要测试的service

- 配置ingress

- 问题集

概述

用过kubernetes的人都知道,kubernetes的service的网络类型有三种:cluertip,nodeport,loadbanlance,各种类型的作用就不在这里描述了。如果一个service想向外部暴露服务,有nodeport和loadbanlance类型,但是nodeport类型,你的知道service对应的pod所在的node的ip,而loadbanlance通常需要第三方云服务商提供支持。如果没有第三方服务商服务的就没办法做了。除此之外还有很多其他的替代方式,以下我主要讲解的是通过ingress的方式来实现service的对外服务的暴露。

下载搭建环境所需要的镜像

- gcr.io/google_containers/nginx-ingress-controller:0.8.3 (ingress controller 的镜像)

- gcr.io/google_containers/defaultbackend:1.0 (默认路由的servcie的镜像)

- gcr.io/google_containers/echoserver:1.0 (用于测试ingress的service镜像,当然这个你可以换成你自己的service都可以)

说明:由于GFW的原因下载不下来的,都可以到时速云去下载相应的镜像(只要把grc.io换成index.tenxcloud.com就可以了),由于我用的是自己私用镜像仓库,所以之后看到的镜像都是我自己tag之后上传到自己私有镜像仓库的。

配置default-http-bankend

首先便捷default-http-backend.yaml:

apiVersion: v1

kind: ReplicationController

metadata:

name: default-http-backend

spec:

replicas: 1

selector:

app: default-http-backend

template:

metadata:

labels:

app: default-http-backend

spec:

terminationGracePeriodSeconds: 60

containers:

- name: default-http-backend

# Any image is permissable as long as:

# 1. It serves a 404 page at /

# 2. It serves 200 on a /healthz endpoint

image: hub.yfcloud.io/google_containers/defaultbackend:1.0

livenessProbe:

httpGet:

path: /healthz

port: 8080

scheme: HTTP

initialDelaySeconds: 30

timeoutSeconds: 5

ports:

- containerPort: 8080

resources:

limits:

cpu: 10m

memory: 20Mi

requests:

cpu: 10m

memory: 20Mi然后创建创建default-http-backend的rc:

kubectl create -f default-http-backend .yaml最后创建default-http-backend的service,有两种方式创建,一种是:便捷default-http-backend-service.yaml,另一种通过如下命令创建:

kubectl expose rc default-http-backend --port=80 --target-port=8080 --name=default-http-backend最后查看相应的rc以及service是否成功创建:然后自己测试能否正常访问,访问方式如下,直接通过的pod的ip/healthz或者serive的ip/(如果是srevice的ip加port)

配置inrgess-controller

首先编辑ingress-controller.yaml文件:

apiVersion: v1

kind: ReplicationController

metadata:

name: nginx-ingress-controller

labels:

k8s-app: nginx-ingress-lb

spec:

replicas: 1

selector:

k8s-app: nginx-ingress-lb

template:

metadata:

labels:

k8s-app: nginx-ingress-lb

name: nginx-ingress-lb

spec:

terminationGracePeriodSeconds: 60

containers:

- image: hub.yfcloud.io/google_containers/nginx-ingress-controller:0.8.3

name: nginx-ingress-lb

imagePullPolicy: Always

readinessProbe:

httpGet:

path: /healthz

port: 80

scheme: HTTP

livenessProbe:

httpGet:

path: /healthz

port: 80

scheme: HTTP

initialDelaySeconds: 10

timeoutSeconds: 1

# use downward API

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

- name: KUBERNETES_MASTER

value: http://183.131.19.231:8080

ports:

- containerPort: 80

hostPort: 80

- containerPort: 443

hostPort: 443

args:

- /nginx-ingress-controller

- --default-backend-service=$(POD_NAMESPACE)/default-http-backend

imagePullSecrets:

- name: hub.yfcloud.io.key创建ingress-controller的rc:

kubectl create -f ingress-controller.yaml最后验证ingress-controller是否正常启动成功,方法 如下:查看ingress-controller中对应pod的ip,然后通过ip:80/healthz 访问,成功的话会返回 ok。

配置需要测试的service

首先编辑测试用的test.yaml:

apiVersion: v1

kind: ReplicationController

metadata:

name: echoheaders

spec:

replicas: 1

template:

metadata:

labels:

app: echoheaders

spec:

containers:

- name: echoheaders

image: hub.yfcloud.io/google_containers/echoserver:test

ports:

- containerPort: 8080测试service的yaml这里创建多个:

sv-alp-default.yaml:

apiVersion: v1

kind: Service

metadata:

name: echoheaders-default

labels:

app: echoheaders

spec:

type: NodePort

ports:

- port: 80

nodePort: 30302

targetPort: 8080

protocol: TCP

name: http

selector:

app: echoheaderssv-alp-x.yaml:

apiVersion: v1

kind: Service

metadata:

name: echoheaders-x

labels:

app: echoheaders

spec:

type: NodePort

ports:

- port: 80

nodePort: 30301

targetPort: 8080

protocol: TCP

name: http

selector:

app: echoheaderssv-alp-y.yaml:

apiVersion: v1

kind: Service

metadata:

name: echoheaders-y

labels:

app: echoheaders

spec:

type: NodePort

ports:

- port: 80

nodePort: 30284

targetPort: 8080

protocol: TCP

name: http

selector:

app: echoheaders接着是

配置ingress

编辑ingress-alp.yaml:

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: echomap

spec:

rules:

- host: foo.bar.com

http:

paths:

- path: /foo

backend:

serviceName: echoheaders-x

servicePort: 80

- host: bar.baz.com

http:

paths:

- path: /bar

backend:

serviceName: echoheaders-y

servicePort: 80

- path: /foo

backend:

serviceName: echoheaders-x

servicePort: 80最后测试,测试方法有两种,如下:

方法一:

curl -v http://nodeip:80/foo -H ''host: foo.bar.com''结果如下:

* About to connect() to 183.131.19.232 port 80 (#0)

* Trying 183.131.19.232...

* Connected to 183.131.19.232 (183.131.19.232) port 80 (#0)

> GET /foo HTTP/1.1

> User-Agent: curl/7.29.0

> Accept: */*

> host: foo.bar.com

>

< HTTP/1.1 200 OK

< Server: nginx/1.11.3

< Date: Wed, 12 Oct 2016 10:04:40 GMT

< Transfer-Encoding: chunked

< Connection: keep-alive

<

CLIENT VALUES:

client_address=(''10.1.58.3'', 57364) (10.1.58.3)

command=GET

path=/foo

real path=/foo

query=

request_version=HTTP/1.1

SERVER VALUES:

server_version=BaseHTTP/0.6

sys_version=Python/3.5.0

protocol_version=HTTP/1.0

HEADERS RECEIVED:

Accept=*/*

Connection=close

Host=foo.bar.com

User-Agent=curl/7.29.0

X-Forwarded-For=183.131.19.231

X-Forwarded-Host=foo.bar.com

X-Forwarded-Port=80

X-Forwarded-Proto=http

X-Real-IP=183.131.19.231

* Connection #0 to host 183.131.19.232 left intact其他路由的测试修改对应的url即可。

**注意:**nodeip是你的ingress-controller所对应的pod所在的node的ip。

方法二:

将访问url的主机的host中加上:

nodeip foo.bar.com

nodeip bar.baz.com在浏览器中直接访问:

foo.bar.com/foo

bar.baz.com/bar

bar.baz.com/foo都是可以的。

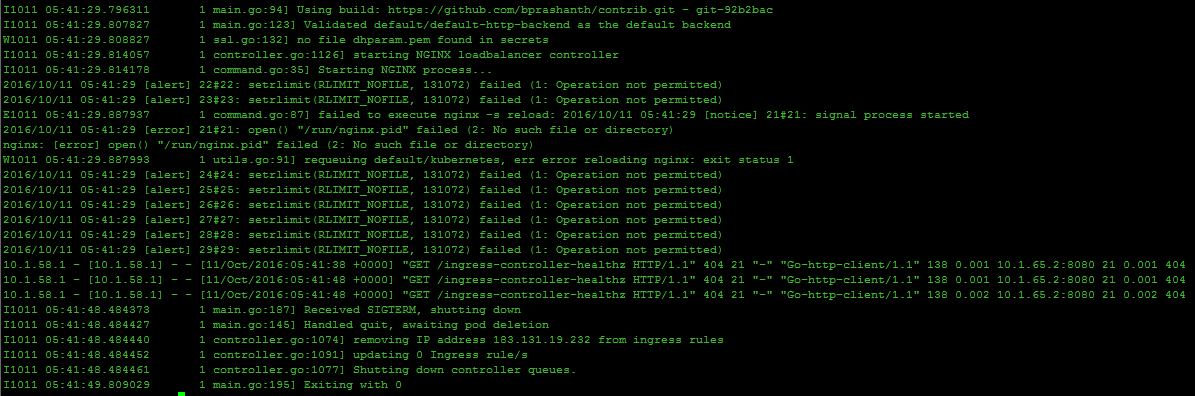

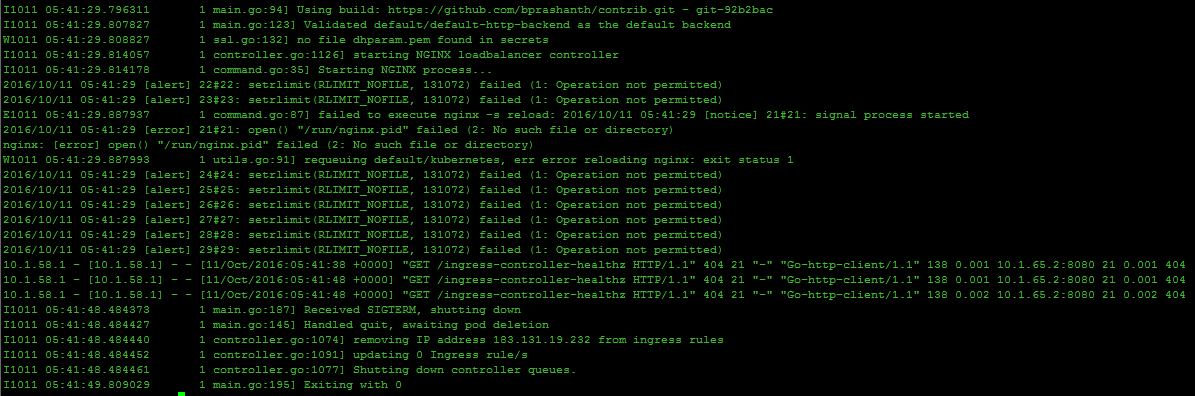

问题集

在创建ingress-controller的时候报错(ingress的yaml文件是官网中的yaml文件配置,我只改了相对应的镜像。):

问题一:

以上错误信息是ingress-controller创建失败的原因,通过docker log 查看rc中的对应的ingress-controller的容器的日志。

原因:从错误信息来看是因为ingress内部程序默认是通过localhost:8080连接master的apiserver,如果是单机版的k8s环境,就不会报这个错,如果是集群环境,并且你的ingress-controller的pod所对应的容器不是在master节点上(因为有的集群环境master也是node,这样的话,如果你的pod对应的容器在master节点上也不会报这个错),就会报这个错。

解决方法:在ingress-controller.yaml中加上如下参数,

- name: KUBERNETES_MASTER

value: http://183.131.19.231:8080之后的yaml文件就是如前面定义的yaml文件。

问题二:

这个问题是在创建ingress rc成功之后,马上查看rc对应的pod对应的容器时报的错!

从这个问题衍生出个连带的问题,如下图:

问题1:

这个问题是在创建ingress rc成功之后,当第一个容器失败,rc的垃圾机制回收第一次创建停止的容器后,重新启动的容器。查看rc对应的pod对应的容器时报的错!

原因:因为ingress中的源码的健康检查的url改成了 /healthz,而官方文档中的yaml中定义的健康检查的路由是/ingress-controller-healthz,这个url是ingress的自检url,当超过8次之后如果得到结果还是unhealthy,则会挂掉ingress对应的容器。

解决方法:将yaml中的健康检查的url改成:/healthz即可。:

readinessProbe:

httpGet:

path: /healthz

port: 80

scheme: HTTP

livenessProbe:

httpGet:

path: /healthz

port: 80

scheme: HTTP

initialDelaySeconds: 10

timeoutSeconds: 1

目录 (Table of Contents)

[TOCM]

- 概述

- 下载搭建环境所需要的镜像

- 配置default-http-bankend

- 配置inrgess-controller

- 配置需要测试的service

- 配置ingress

- 问题集

概述

用过kubernetes的人都知道,kubernetes的service的网络类型有三种:cluertip,nodeport,loadbanlance,各种类型的作用就不在这里描述了。如果一个service想向外部暴露服务,有nodeport和loadbanlance类型,但是nodeport类型,你的知道service对应的pod所在的node的ip,而loadbanlance通常需要第三方云服务商提供支持。如果没有第三方服务商服务的就没办法做了。除此之外还有很多其他的替代方式,以下我主要讲解的是通过ingress的方式来实现service的对外服务的暴露。

下载搭建环境所需要的镜像

- gcr.io/google_containers/nginx-ingress-controller:0.8.3 (ingress controller 的镜像)

- gcr.io/google_containers/defaultbackend:1.0 (默认路由的servcie的镜像)

- gcr.io/google_containers/echoserver:1.0 (用于测试ingress的service镜像,当然这个你可以换成你自己的service都可以)

说明:由于GFW的原因下载不下来的,都可以到时速云去下载相应的镜像(只要把grc.io换成index.tenxcloud.com就可以了),由于我用的是自己私用镜像仓库,所以之后看到的镜像都是我自己tag之后上传到自己私有镜像仓库的。

配置default-http-bankend

首先便捷default-http-backend.yaml:

apiVersion: v1

kind: ReplicationController

metadata:

name: default-http-backend

spec:

replicas: 1

selector:

app: default-http-backend

template:

metadata:

labels:

app: default-http-backend

spec:

terminationGracePeriodSeconds: 60

containers:

- name: default-http-backend

# Any image is permissable as long as:

# 1. It serves a 404 page at /

# 2. It serves 200 on a /healthz endpoint

image: hub.yfcloud.io/google_containers/defaultbackend:1.0

livenessProbe:

httpGet:

path: /healthz

port: 8080

scheme: HTTP

initialDelaySeconds: 30

timeoutSeconds: 5

ports:

- containerPort: 8080

resources:

limits:

cpu: 10m

memory: 20Mi

requests:

cpu: 10m

memory: 20Mi然后创建创建default-http-backend的rc:

kubectl create -f default-http-backend .yaml最后创建default-http-backend的service,有两种方式创建,一种是:便捷default-http-backend-service.yaml,另一种通过如下命令创建:

kubectl expose rc default-http-backend --port=80 --target-port=8080 --name=default-http-backend最后查看相应的rc以及service是否成功创建:然后自己测试能否正常访问,访问方式如下,直接通过的pod的ip/healthz或者serive的ip/(如果是srevice的ip加port)

配置inrgess-controller

首先编辑ingress-controller.yaml文件:

apiVersion: v1

kind: ReplicationController

metadata:

name: nginx-ingress-controller

labels:

k8s-app: nginx-ingress-lb

spec:

replicas: 1

selector:

k8s-app: nginx-ingress-lb

template:

metadata:

labels:

k8s-app: nginx-ingress-lb

name: nginx-ingress-lb

spec:

terminationGracePeriodSeconds: 60

containers:

- image: hub.yfcloud.io/google_containers/nginx-ingress-controller:0.8.3

name: nginx-ingress-lb

imagePullPolicy: Always

readinessProbe:

httpGet:

path: /healthz

port: 80

scheme: HTTP

livenessProbe:

httpGet:

path: /healthz

port: 80

scheme: HTTP

initialDelaySeconds: 10

timeoutSeconds: 1

# use downward API

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

- name: KUBERNETES_MASTER

value: http://183.131.19.231:8080

ports:

- containerPort: 80

hostPort: 80

- containerPort: 443

hostPort: 443

args:

- /nginx-ingress-controller

- --default-backend-service=$(POD_NAMESPACE)/default-http-backend

imagePullSecrets:

- name: hub.yfcloud.io.key创建ingress-controller的rc:

kubectl create -f ingress-controller.yaml最后验证ingress-controller是否正常启动成功,方法 如下:查看ingress-controller中对应pod的ip,然后通过ip:80/healthz 访问,成功的话会返回 ok。

配置需要测试的service

首先编辑测试用的test.yaml:

apiVersion: v1

kind: ReplicationController

metadata:

name: echoheaders

spec:

replicas: 1

template:

metadata:

labels:

app: echoheaders

spec:

containers:

- name: echoheaders

image: hub.yfcloud.io/google_containers/echoserver:test

ports:

- containerPort: 8080测试service的yaml这里创建多个:

sv-alp-default.yaml:

apiVersion: v1

kind: Service

metadata:

name: echoheaders-default

labels:

app: echoheaders

spec:

type: NodePort

ports:

- port: 80

nodePort: 30302

targetPort: 8080

protocol: TCP

name: http

selector:

app: echoheaderssv-alp-x.yaml:

apiVersion: v1

kind: Service

metadata:

name: echoheaders-x

labels:

app: echoheaders

spec:

type: NodePort

ports:

- port: 80

nodePort: 30301

targetPort: 8080

protocol: TCP

name: http

selector:

app: echoheaderssv-alp-y.yaml:

apiVersion: v1

kind: Service

metadata:

name: echoheaders-y

labels:

app: echoheaders

spec:

type: NodePort

ports:

- port: 80

nodePort: 30284

targetPort: 8080

protocol: TCP

name: http

selector:

app: echoheaders接着是

配置ingress

编辑ingress-alp.yaml:

apiVersion: extensions/v1beta1

kind: Ingress

metadata:

name: echomap

spec:

rules:

- host: foo.bar.com

http:

paths:

- path: /foo

backend:

serviceName: echoheaders-x

servicePort: 80

- host: bar.baz.com

http:

paths:

- path: /bar

backend:

serviceName: echoheaders-y

servicePort: 80

- path: /foo

backend:

serviceName: echoheaders-x

servicePort: 80最后测试,测试方法有两种,如下:

方法一:

curl -v http://nodeip:80/foo -H ''host: foo.bar.com''结果如下:

* About to connect() to 183.131.19.232 port 80 (#0)

* Trying 183.131.19.232...

* Connected to 183.131.19.232 (183.131.19.232) port 80 (#0)

> GET /foo HTTP/1.1

> User-Agent: curl/7.29.0

> Accept: */*

> host: foo.bar.com

>

< HTTP/1.1 200 OK

< Server: nginx/1.11.3

< Date: Wed, 12 Oct 2016 10:04:40 GMT

< Transfer-Encoding: chunked

< Connection: keep-alive

<

CLIENT VALUES:

client_address=(''10.1.58.3'', 57364) (10.1.58.3)

command=GET

path=/foo

real path=/foo

query=

request_version=HTTP/1.1

SERVER VALUES:

server_version=BaseHTTP/0.6

sys_version=Python/3.5.0

protocol_version=HTTP/1.0

HEADERS RECEIVED:

Accept=*/*

Connection=close

Host=foo.bar.com

User-Agent=curl/7.29.0

X-Forwarded-For=183.131.19.231

X-Forwarded-Host=foo.bar.com

X-Forwarded-Port=80

X-Forwarded-Proto=http

X-Real-IP=183.131.19.231

* Connection #0 to host 183.131.19.232 left intact其他路由的测试修改对应的url即可。

**注意:**nodeip是你的ingress-controller所对应的pod所在的node的ip。

方法二:

将访问url的主机的host中加上:

nodeip foo.bar.com

nodeip bar.baz.com在浏览器中直接访问:

foo.bar.com/foo

bar.baz.com/bar

bar.baz.com/foo都是可以的。

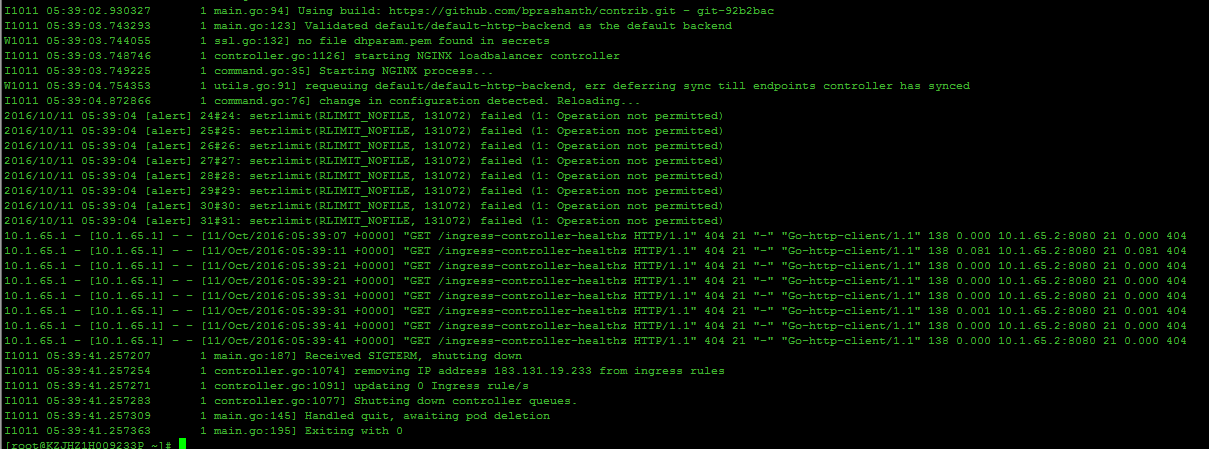

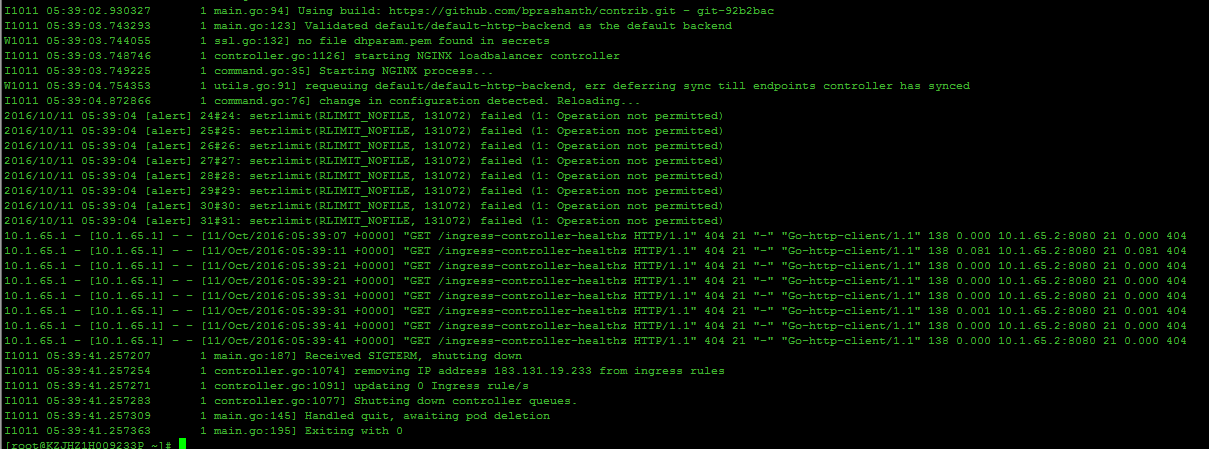

问题集

在创建ingress-controller的时候报错(ingress的yaml文件是官网中的yaml文件配置,我只改了相对应的镜像。):

问题一:

以上错误信息是ingress-controller创建失败的原因,通过docker log 查看rc中的对应的ingress-controller的容器的日志。

原因:从错误信息来看是因为ingress内部程序默认是通过localhost:8080连接master的apiserver,如果是单机版的k8s环境,就不会报这个错,如果是集群环境,并且你的ingress-controller的pod所对应的容器不是在master节点上(因为有的集群环境master也是node,这样的话,如果你的pod对应的容器在master节点上也不会报这个错),就会报这个错。

解决方法:在ingress-controller.yaml中加上如下参数,

- name: KUBERNETES_MASTER

value: http://183.131.19.231:8080之后的yaml文件就是如前面定义的yaml文件。

问题二:

这个问题是在创建ingress rc成功之后,马上查看rc对应的pod对应的容器时报的错!

从这个问题衍生出个连带的问题,如下图:

问题1:

这个问题是在创建ingress rc成功之后,当第一个容器失败,rc的垃圾机制回收第一次创建停止的容器后,重新启动的容器。查看rc对应的pod对应的容器时报的错!

原因:因为ingress中的源码的健康检查的url改成了 /healthz,而官方文档中的yaml中定义的健康检查的路由是/ingress-controller-healthz,这个url是ingress的自检url,当超过8次之后如果得到结果还是unhealthy,则会挂掉ingress对应的容器。

解决方法:将yaml中的健康检查的url改成:/healthz即可。:

readinessProbe:

httpGet:

path: /healthz

port: 80

scheme: HTTP

livenessProbe:

httpGet:

path: /healthz

port: 80

scheme: HTTP

initialDelaySeconds: 10

timeoutSeconds: 1目录 (Table of Contents)

http://blog.csdn.net/u013812710/article/details/52801656

That concludes today's look at Kubernetes NGINX virtual server subroutes and NGINX Ingress virtual paths. Thank you for reading. To learn more about Kubernetes - - k8s - nginx-ingress, Kubernetes - Launch Single Node Kubernetes Cluster, Kubernetes Nginx Ingress Controller, and Kubernetes Nginx Ingress 安装与使用 (installation and usage), search this site.
