This article collects Caffe installation notes for Windows and Ubuntu. It covers a Caffe installation tutorial for Windows, together with Build caffe using qmake (ubuntu&windows), Caffe installation (Ubuntu 14.04 + caffe + GTX 1080 + CUDA8.0 + cuDNN v5), a Caffe + Ubuntu 16.04 installation tutorial, and Caffe + Ubuntu14.04 + CUDA7.5 installation notes.

Contents:

- CAFFE installation notes [Windows and Ubuntu] (Caffe installation tutorial for Windows)

- Build caffe using qmake (ubuntu&windows)

- Caffe installation (Ubuntu 14.04 + caffe + GTX 1080 + CUDA8.0 + cuDNN v5)

- Caffe + Ubuntu 16.04 installation tutorial

- Caffe + Ubuntu14.04 + CUDA7.5 installation notes

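CAFFE installation notes [Windows and Ubuntu] (Caffe installation tutorial for Windows)

Part 1 covers what I learned installing Caffe on Windows; Part 2 covers what I learned installing Caffe on Ubuntu.

Part 1: Win10 + VS2017

The old version of Caffe can no longer be found on BVLC's GitHub. To get the old version, download Microsoft's Caffe fork: https://github.com/Microsoft/caffe

Most Caffe installation tutorials online are based on the old version.

For common errors, see: https://www.cnblogs.com/cxyxbk/p/5902034.html

Win10 + VS2017

If you only build the libcaffe project by following the usual online walkthroughs, it generally works.

If you also build other projects, the following problems may appear. The upgrade_net_proto_binary project is used as the example.

1. Error C2976 'std::array': too few template arguments caffe.managed \caffe-master-micorsoft\windows\caffe.managed\caffelib.cpp 68

For errors like this, double-click the error to jump to the source. The unqualified name array is being resolved as std::array, so change array in array<array<float>^>^ to cli::array,

that is: cli::array<cli::array<float>^>^

Reference: https://blog.csdn.net/m0_37287643/article/details/83020441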

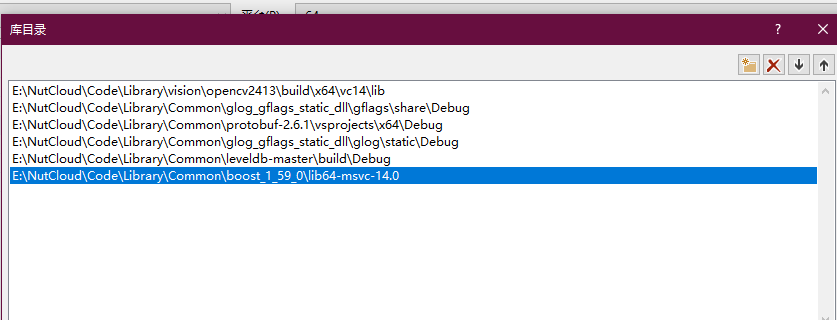

2. Error LNK1104 cannot open file 'libboost_date_time-vc140-mt-gd-1_59.lib' upgrade_net_proto_binary \caffe-master-micorsoft\windows\upgrade_net_proto_binary\LINK 1

The Boost library is missing. Note that the version must be 1.59.

Boost installation procedure: https://blog.csdn.net/u011054333/article/details/78648294

Adding the Boost library to the project: https://www.cnblogs.com/denggelin/p/5769480.html

3. Error LNK2019 unresolved external symbol "__declspec (dllimport) void __cdecl google::InitGoogleLogging (char const *)" (__imp_?InitGoogleLogging@google@@YAXPEBD@Z) referenced in function main upgrade_net_proto_binary \caffe-master-micorsoft\windows\upgrade_net_proto_binary\upgrade_net_proto_binary.obj 1

The Google glog library is not installed. A prebuilt package can be downloaded from https://download.csdn.net/download/colorsky100/10776566 (this package actually provides both the glog and gflags libraries).

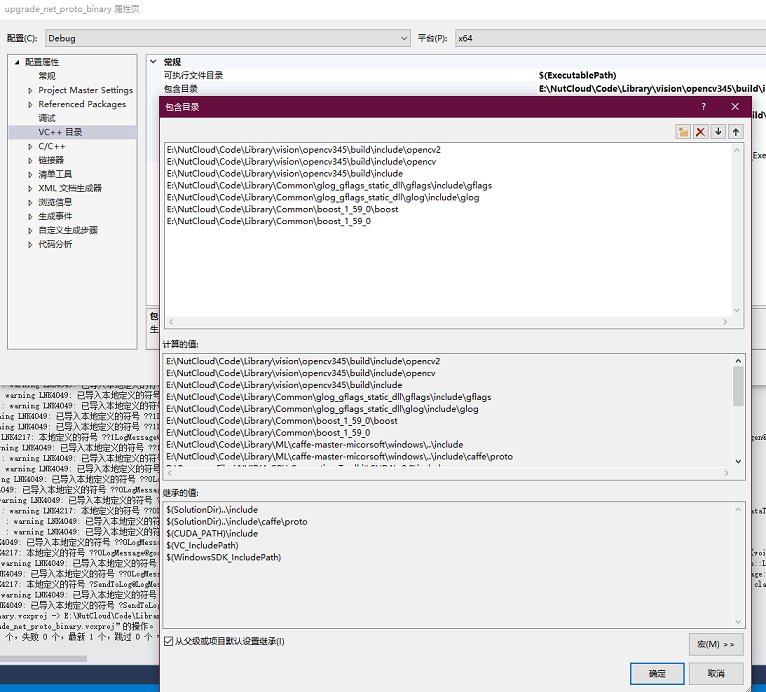

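After downloading, add the directories containing glog.dll and glog.lib under [Project Property Pages | VC++ Directories | Library Directories], add the include directory under [Project Property Pages | VC++ Directories | Include Directories], and add glog.lib under [Project Property Pages | Linker | Input | Additional Dependencies].

Note: the prebuilt glog package contains three directories: bin, share, and static. bin holds the DLL, share holds the lib that goes with the DLL in bin, and static holds a complete lib that needs no DLL. If you link against the glog.lib from share, you must also configure bin; if you use the glog.lib from static, the library directories only need the static directory.

Correction: the description above is wrong. The library directories only need the path that contains the lib. If that lib is a complete static library (the glog.lib under static), the build output can be used directly. If the lib needs a DLL at run time (the glog.lib under share), the build still succeeds, but at run time either the directory containing the DLL must be added to the PATH environment variable, or the DLL (glog.dll under bin) must be copied into the project directory. Either way, the DLL directory does not need to be listed in the library directories for the build to succeed.

Note that the debug builds of the static and dynamic libraries must be used; otherwise the following error is reported:

Error LNK2038 mismatch detected for '_ITERATOR_DEBUG_LEVEL': value '0' doesn't match value '2' in upgrade_net_proto_binary.obj upgrade_net_proto_binary \caffe-master-micorsoft\windows\upgrade_net_proto_binary\glog.lib (logging.obj) 1

Errors may remain after the configuration above. They are resolved by adding the macro GOOGLE_GLOG_DLL_DECL= (defined as empty) under [Project Property Pages | C/C++ | Preprocessor | Preprocessor Definitions]. Reference: https://www.cnblogs.com/21207-iHome/p/9297329.html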

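4. Error LNK2001 unresolved external symbol "public: __cdecl google::protobuf::internal::LogMessage::LogMessage (enum google::protobuf::LogLevel,char const *,int)" (??0LogMessage@internal@protobuf@google@@QEAA@W4LogLevel@23@PEBDH@Z) upgrade_net_proto_binary \caffe-master-micorsoft\windows\upgrade_net_proto_binary\sgd_solver.obj 1

The protobuf library is missing. Downloading and building protobuf is fairly tedious. Reference: https://blog.csdn.net/hp_cpp/article/details/81561310

After building it, go back to the Caffe upgrade_net_proto_binary project, add the directory containing libprotocd.lib and libprotobufd.lib to the library directories, and add libprotocd.lib and libprotobufd.lib to the additional dependencies.

If this error appears: LNK2038 mismatch detected for 'RuntimeLibrary': value 'MTd_StaticDebug' doesn't match value 'MDd_DynamicDebug' in upgrade_net_proto_binary.obj upgrade_net_proto_binary \caffe-master-micorsoft\windows\upgrade_net_proto_binary\libprotocd.lib (ruby_generator.obj) 1

then protobuf must not be configured in CMake the way the post https://blog.csdn.net/hp_cpp/article/details/81561310 recommends. Instead, tick the protobuf_BUILD_SHARED_LIBS checkbox, so that the debug directory contains DLL files after protobuf is built.

Even with the setup above, unresolved external symbol errors were still reported. Posts online attribute this to the protobuf version. Switching to 2.5.0 did not help; switching to 2.6.1 solved the problem.

Note: the 2.6.1 solution downloaded from the official site targets Win32 and must be changed to x64. The following changes are then needed.

Only the libprotobuf and libprotoc projects need to be built. Change these settings in their property pages:

1. [General | Windows SDK Version]: choose a suitable version from the drop-down list.

2. [General | Configuration Type]: set to static library.

3. [C/C++ | General | Additional Include Directories]: set to ../src;.;%(AdditionalIncludeDirectories)

4. [C/C++ | Code Generation | Runtime Library]: set to MDd.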

5. Error LNK2019 unresolved external symbol "unsigned int __cdecl google::ParseCommandLineFlags (int *,char * * *,bool)" (?ParseCommandLineFlags@google@@YAIPEAHPEAPEAPEAD_N@Z) referenced in function "void __cdecl caffe::GlobalInit (int *,char * * *)" (?GlobalInit@caffe@@YAXPEAHPEAPEAPEAD@Z) upgrade_net_proto_binary \caffe-master-micorsoft\windows\upgrade_net_proto_binary\common.obj 1

The gflags library is missing. The prebuilt package from item 3 contains both glog and gflags; configure it in the project in the usual way.

6. Error LNK2019 unresolved external symbol "public: __cdecl cv::Exception::Exception (int,class std::basic_string<char,struct std::char_traits<char>,class std::allocator<char> > const &,class std::basic_string<char,struct std::char_traits<char>,class std::allocator<char> > const &,class std::basic_string<char,struct std::char_traits<char>,class std::allocator<char> > const &,int)" (??0Exception@cv@@QEAA@HAEBV?$basic_string@DU?$char_traits@D@std@@V?$allocator@D@2@@std@@00H@Z) referenced in function "public: unsigned char * __cdecl cv::Mat::ptr<unsigned char>(int)" (??$ptr@E@Mat@cv@@QEAAPEAEH@Z) upgrade_net_proto_binary \caffe-master-micorsoft\windows\upgrade_net_proto_binary\data_transformer.obj 1

OpenCV is clearly missing. The frustrating part is that the official OpenCV 2.4.13 release only ships prebuilt binaries for VC14 (VS2015), so I downloaded the official OpenCV 3.4.5 release, which ships VC15 binaries. After configuring it, unresolved external symbol errors were still reported, even though the configuration itself was correct.

I then went back and used OpenCV 2.4.13 directly, and to my surprise it worked. The dependencies to add are opencv_core2413d.lib;opencv_highgui2413d.lib;opencv_imgproc2413d.lib;

7. Error LNK2019 unresolved external symbol "public: __cdecl leveldb::WriteBatch::~WriteBatch (void)" (??1WriteBatch@leveldb@@QEAA@XZ) referenced in function "int`public: __cdecl caffe::db::LevelDBTransaction::LevelDBTransaction (class leveldb::DB *)'::`1'::dtor$1" (?dtor$1@?0???0LevelDBTransaction@db@caffe@@QEAA@PEAVDB@leveldb@@@Z@4HA) upgrade_net_proto_binary \caffe-master-micorsoft\windows\upgrade_net_proto_binary\db.obj 1

Error LNK2001 unresolved external symbol "public: class std::basic_string<char,struct std::char_traits<char>,class std::allocator<char> > __cdecl leveldb::Status::ToString (void) const" (?ToString@Status@leveldb@@QEBA?AV?$basic_string@DU?$char_traits@D@std@@V?$allocator@D@2@@std@@XZ) upgrade_net_proto_binary \caffe-master-micorsoft\windows\upgrade_net_proto_binary\db_leveldb.obj 1

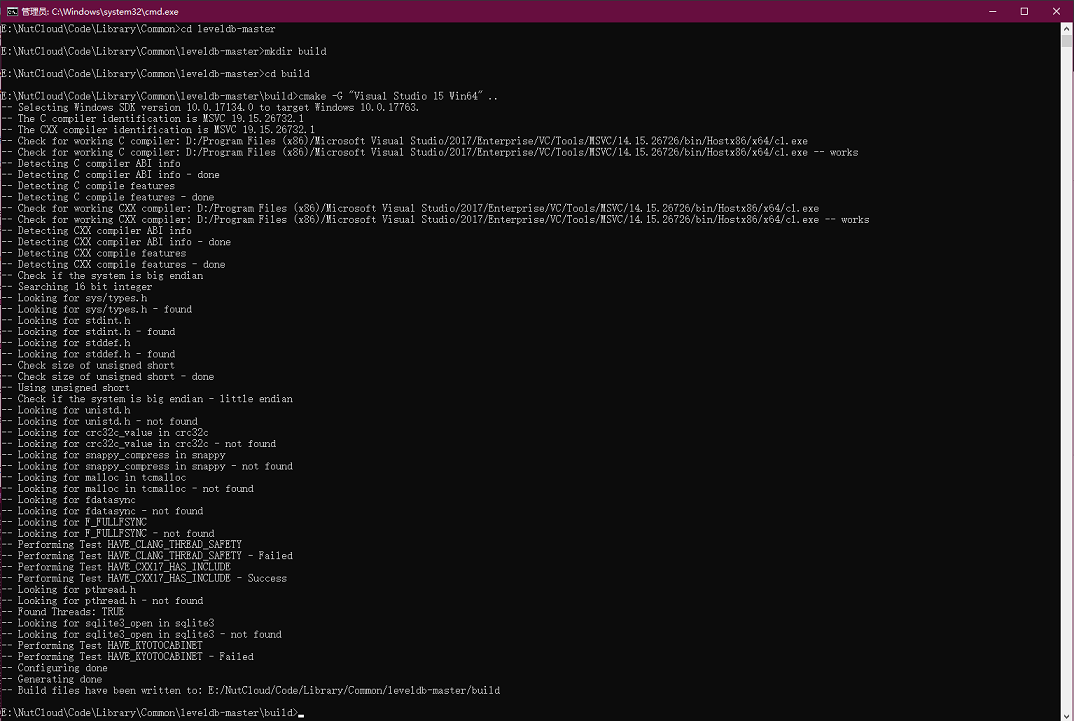

The LevelDB library is missing. After downloading it from https://github.com/google/leveldb/, configuring it with CMake-GUI kept failing with: The C++ compiler "D:/Program Files (x86)/Microsoft Visual Studio/2017/Enterprise/VC/Tools/MSVC/14.15.26726/bin/Hostx86/x64/cl.exe" is not able to compile a simple test program.

Running the cmake commands from cmd, as the official README instructs, then succeeded.

Official instructions:

Building for Windows

First generate the Visual Studio 2017 project/solution files:

mkdir build

cd build

cmake -G "Visual Studio 15" ..

The default will build for x86. For 64-bit run:

cmake -G "Visual Studio 15 Win64" ..

To compile the Windows solution from the command-line:

devenv /build Debug leveldb.sln

or open leveldb.sln in Visual Studio and build from within.

Please see the CMake documentation and CMakeLists.txt for more advanced usage.

Here build is a directory I created myself.

After running CMake, double-click leveldb.sln to open it in VS and build only the leveldb project. Then go back and configure Caffe's upgrade_net_proto_binary project. Done.

Summary:

The upgrade_net_proto_binary project needs the following additional libraries:

1. Boost 2. glog 3. protobuf 4. gflags 5. OpenCV 6. LevelDB, plus other third-party libraries

The final property page configuration is as follows:

Include directories:

Library directories:

Dependencies:

2019.3.24

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

Part 2: Ubuntu 16.04

The basic installation follows the post https://www.cnblogs.com/sunshineatnoon/p/4535329.html

1. Installing the glog library

Clone it directly: git clone https://github.com/google/glog/

Then enter the glog directory and run:

./autogen.sh && ./configure && make && make install

If this error is reported:

./autogen.sh: 5: ./autogen.sh: autoreconf: not found

follow the post https://blog.csdn.net/mybelief321/article/details/9208073 and run:

apt-get install autoconf

apt-get install automake

apt-get install libtool

2019.5.27

2. libhdf5 package errors

src/caffe/layers/hdf5_output_layer.cpp:4:18: fatal error: hdf5.h: No such file or directory

#include "hdf5.h"

I had not installed the libhdf5-serial-dev package using the steps in the post linked above.

I installed it the normal way with apt install libhdf5-serial-dev. The error was still reported after installation, so I followed the post https://www.cnblogs.com/xiangfeidemengzhu/p/7058391.html

First find where hdf5.h is located. In a terminal, run: locate hdf5.h

It turns out to be under /usr/include/hdf5/serial/. Add this path to INCLUDE_DIRS in Makefile.config:

INCLUDE_DIRS := $(PYTHON_INCLUDE) /usr/local/include /usr/include/hdf5/serial/

Running make all again reports:

AR -o .build_release/lib/libcaffe.a

LD -o .build_release/lib/libcaffe.so.1.0.0

/usr/bin/ld: cannot find -lhdf5_hl

/usr/bin/ld: cannot find -lhdf5

collect2: error: ld returned 1 exit status

Makefile:582: recipe for target '.build_release/lib/libcaffe.so.1.0.0' failed

make: *** [.build_release/lib/libcaffe.so.1.0.0] Error 1

The linker cannot find the hdf5 library files. This is the same problem as the missing header above: apt install libhdf5-serial-dev installs the library to a path that is not on Caffe's default build search path.

So the first step is again locate:

locate libhdf5

One of the results is

/usr/lib/x86_64-linux-gnu/hdf5/serial/libhdf5.so

Add this directory to Makefile.config:

LIBRARY_DIRS := $(PYTHON_LIB) /usr/local/lib /usr/lib /usr/lib/x86_64-linux-gnu/hdf5/serial/

Run make all again and the problem is solved.
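The two locate steps above can be sketched as a small script. This is only an illustration under stated assumptions: the helper names find_dirs_containing and makefile_line are made up for this example, and the search roots you pass in (for example /usr/include and /usr/lib) depend on your system.

```python
import os


def find_dirs_containing(filename, roots):
    """Return the sorted directories under any of `roots` that contain `filename`."""
    found = set()
    for root in roots:
        for dirpath, _dirnames, filenames in os.walk(root):
            if filename in filenames:
                found.add(dirpath)
    return sorted(found)


def makefile_line(variable, base_value, extra_dirs):
    """Build a Makefile.config assignment with the extra directories appended."""
    return "{} := {}".format(variable, " ".join([base_value] + list(extra_dirs)))
```

For example, makefile_line("INCLUDE_DIRS", "$(PYTHON_INCLUDE) /usr/local/include", find_dirs_containing("hdf5.h", ["/usr/include"])) would produce a line of the same shape as the INCLUDE_DIRS setting shown above; the same pattern applies to libhdf5.so and LIBRARY_DIRS.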

3. gflags package problem

AR -o .build_release/lib/libcaffe.a

LD -o .build_release/lib/libcaffe.so.1.0.0

/usr/bin/ld: /usr/local/lib/libgflags.a(gflags.cc.o): relocation R_X86_64_32 against `.rodata.str1.1' can not be used when making a shared object; recompile with -fPIC

/usr/local/lib/libgflags.a: error adding symbols: Bad value

collect2: error: ld returned 1 exit status

Makefile:572: recipe for target '.build_release/lib/libcaffe.so.1.0.0' failed

make: *** [.build_release/lib/libcaffe.so.1.0.0] Error 1

Starting from https://github.com/BVLC/caffe/issues/6317, I followed the trail to #6200 and #2171 and read through them in turn.

In the #2171 discussion, one reply says:

just recompile the gflags with CXXFLAGS += -fPIC makes things work

That reply received many responses.

Looking back at the earlier post https://blog.csdn.net/mybelief321/article/details/9208073

that post states explicitly that an environment variable must be exported when installing gflags, so that the static libgflags.a is compiled as position-independent code and can be linked into the shared libcaffe.so:

export CXXFLAGS="-fPIC"

2019.5.29

4. gcc version problem

[ 89%] Linking CXX executable compute_image_mean

CMakeFiles/compute_image_mean.dir/compute_image_mean.cpp.o: In function ‘std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >* google::MakeCheckOpString<int, int>(int const&, int const&, char const*)’:

compute_image_mean.cpp:(.text._ZN6google17MakeCheckOpStringIiiEEPNSt7__cxx1112basic_stringIcSt11char_traitsIcESaIcEEERKT_RKT0_PKc [_ZN6google17MakeCheckOpStringIiiEEPNSt7__cxx1112basic_stringIcSt11char_traitsIcESaIcEEERKT_RKT0_PKc]+0x50): undefined reference to ‘google::base::CheckOpMessageBuilder::NewString [abi:cxx11]()’

CMakeFiles/compute_image_mean.dir/compute_image_mean.cpp.o: In function ‘std::__cxx11::basic_string<char, std::char_traits<char>, std::allocator<char> >* google::MakeCheckOpString<unsigned long, int>(unsigned long const&, int const&, char const*)’:

compute_image_mean.cpp:(.text._ZN6google17MakeCheckOpStringImiEEPNSt7__cxx1112basic_stringIcSt11char_traitsIcESaIcEEERKT_RKT0_PKc [_ZN6google17MakeCheckOpStringImiEEPNSt7__cxx1112basic_stringIcSt11char_traitsIcESaIcEEERKT_RKT0_PKc]+0x50): undefined reference to ‘google::base::CheckOpMessageBuilder::NewString [abi:cxx11]()’

collect2: error: ld returned 1 exit status

tools/CMakeFiles/compute_image_mean.dir/build.make:133: recipe for target 'tools/compute_image_mean' failed

make[2]: *** [tools/compute_image_mean] Error 1

CMakeFiles/Makefile2:457: recipe for target 'tools/CMakeFiles/compute_image_mean.dir/all' failed

make[1]: *** [tools/CMakeFiles/compute_image_mean.dir/all] Error 2

Makefile:127: recipe for target 'all' failed

make: *** [all] Error 2

The root cause here is the gcc version: gcc had been downgraded earlier in order to build CUDA. gcc therefore needs to be raised to version 5 or later, using the method from this post (even though the post describes a downgrade): https://blog.csdn.net/xuezhisdc/article/details/48650015 That is:

cd /usr/bin

ls gcc*

sudo rm gcc

sudo ln -s gcc-5 gcc

sudo rm g++

sudo ln -s g++-5 g++

# check that the links point to version 5

ls -al gcc g++

gcc --version

g++ --version

After that, run make clean.

make all still reports the same error. This is consistent with glog having been built by the older gcc, so that the installed library lacks the [abi:cxx11] symbols named in the error.

Then reinstall glog using the method from the original post https://blog.csdn.net/mybelief321/article/details/9208073, that is:

cd glog-0.3.3

./configure

make

sudo make install

Then cd to the caffe directory:

make clean

make all

2019.6.2

5. make pycaffe problems

CXX/LD -o python/caffe/_caffe.so python/caffe/_caffe.cpp

python/caffe/_caffe.cpp:10:31: fatal error: numpy/arrayobject.h: No such file or directory

compilation terminated.

Makefile:517: recipe for target 'python/caffe/_caffe.so' failed

make: *** [python/caffe/_caffe.so] Error 1

First check whether NumPy is already installed on the machine. NumPy is a required package, so the cause is most likely a path setting again.

In a terminal, run locate arrayobject.h

which finds

/home/qian/.local/lib/python3.5/site-packages/numpy/core/include/numpy/arrayobject.h

Note that the error message says numpy/arrayobject.h is missing, not arrayobject.h. So when adding the path, leave off the trailing numpy component.

Modify Makefile.config as follows:

PYTHON_INCLUDE := /usr/include/python3.5m \

/usr/lib/python3.5/dist-packages/numpy/core/include \

/home/qian/.local/lib/python3.5/site-packages/numpy/core/include

Solved.
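The rule for trimming the located path can be written down as a short sketch. The function name include_dir_for is hypothetical and only illustrates the idea: the include directory is the located path with the components of the #include name removed from its end.

```python
from pathlib import PurePosixPath


def include_dir_for(located_path, include_name):
    """Return the directory to list in PYTHON_INCLUDE so that
    `#include <include_name>` resolves to `located_path`."""
    located = PurePosixPath(located_path)
    wanted = PurePosixPath(include_name)
    n = len(wanted.parts)
    if located.parts[-n:] != wanted.parts:
        raise ValueError("located path does not end with the include name")
    # Drop the trailing components that the #include directive already supplies.
    return str(PurePosixPath(*located.parts[:-n]))
```

If NumPy can be imported by the interpreter you are building for, numpy.get_include() should report the same directory without needing locate.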

Continuing with make, a library cannot be found:

CXX/LD -o python/caffe/_caffe.so python/caffe/_caffe.cpp

/usr/bin/ld: cannot find -lboost_python3

collect2: error: ld returned 1 exit status

Makefile:517: recipe for target 'python/caffe/_caffe.so' failed

make: *** [python/caffe/_caffe.so] Error 1

First search in a terminal: locate libboost_python3.so

No file is found.

Then run locate libboost_python

The results look roughly like this:

/usr/lib/x86_64-linux-gnu/libboost_python-py27.a

/usr/lib/x86_64-linux-gnu/libboost_python-py27.so

/usr/lib/x86_64-linux-gnu/libboost_python-py27.so.1.58.0

/usr/lib/x86_64-linux-gnu/libboost_python-py35.a

/usr/lib/x86_64-linux-gnu/libboost_python-py35.so

/usr/lib/x86_64-linux-gnu/libboost_python-py35.so.1.58.0

/usr/lib/x86_64-linux-gnu/libboost_python.a

/usr/lib/x86_64-linux-gnu/libboost_python.so

Since I am building the Python 3 version, I need to create a symbolic link named libboost_python3.so that points to libboost_python-py35.so:

root@qian-desktop:/usr/lib/x86_64-linux-gnu# ln -s libboost_python-py35.so libboost_python3.so

make pycaffe now succeeds.
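Choosing the right library from the locate output can be sketched as follows. The helper names are made up for this example, and the py<major><minor> naming is taken from the listing above; other distributions may name the Boost.Python libraries differently.

```python
import re


def boost_python_candidates(filenames, major, minor):
    """Return the shared-library names built for the given Python version,
    assuming the `libboost_python-py<major><minor>.so` naming seen above."""
    tag = "py{}{}".format(major, minor)
    pattern = re.compile(r"^libboost_python-" + re.escape(tag) + r"\.so$")
    return [name for name in filenames if pattern.match(name)]


def symlink_command(target, link_name):
    """Format the ln command that creates `link_name` pointing at `target`."""
    return "ln -s {} {}".format(target, link_name)
```

With the file names listed above and Python 3.5, the only candidate is libboost_python-py35.so, which gives the same ln -s command that was run by hand.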

6. pip3 install -r requirements.txt problems

ERROR: matplotlib 3.0.3 has requirement python-dateutil>=2.1, but you'll have python-dateutil 1.5 which is incompatible.

ERROR: pandas 0.24.2 has requirement python-dateutil>=2.5.0, but you'll have python-dateutil 1.5 which is incompatible.

Running pip3 install --upgrade python-dateutil in a terminal did not make the error go away, because requirements.txt itself pins python-dateutil below version 2.

Solution: https://blog.csdn.net/CAU_Ayao/article/details/83538024

gedit requirements.txt

python-dateutil>=1.4,<2

Change it to:

python-dateutil # with no version specifier, the latest version is installed

Run pip3 install -r requirements.txt again and the problem is solved.
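The manual edit of requirements.txt can also be expressed as a small text transformation. This is a sketch only; the function name unpin is invented for this example and it handles just the simple name-plus-specifier lines that appear in a requirements file like Caffe's.

```python
import re


def unpin(requirements_text, package):
    """Drop the version specifier from `package`, leaving other lines unchanged."""
    out = []
    for line in requirements_text.splitlines():
        # The package name ends at the first specifier character or whitespace.
        name = re.split(r"[<>=!~\s;]", line.strip(), maxsplit=1)[0]
        out.append(package if name.lower() == package.lower() else line)
    return "\n".join(out)
```

Applied to a file containing python-dateutil>=1.4,<2, this leaves a bare python-dateutil line, which lets pip3 pick a release that satisfies matplotlib and pandas.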

2019.6.4

If, after upgrading pip3, this error appears: from pip import main ImportError: cannot import name 'main'

Solution: https://blog.csdn.net/qq_38522539/article/details/80678412

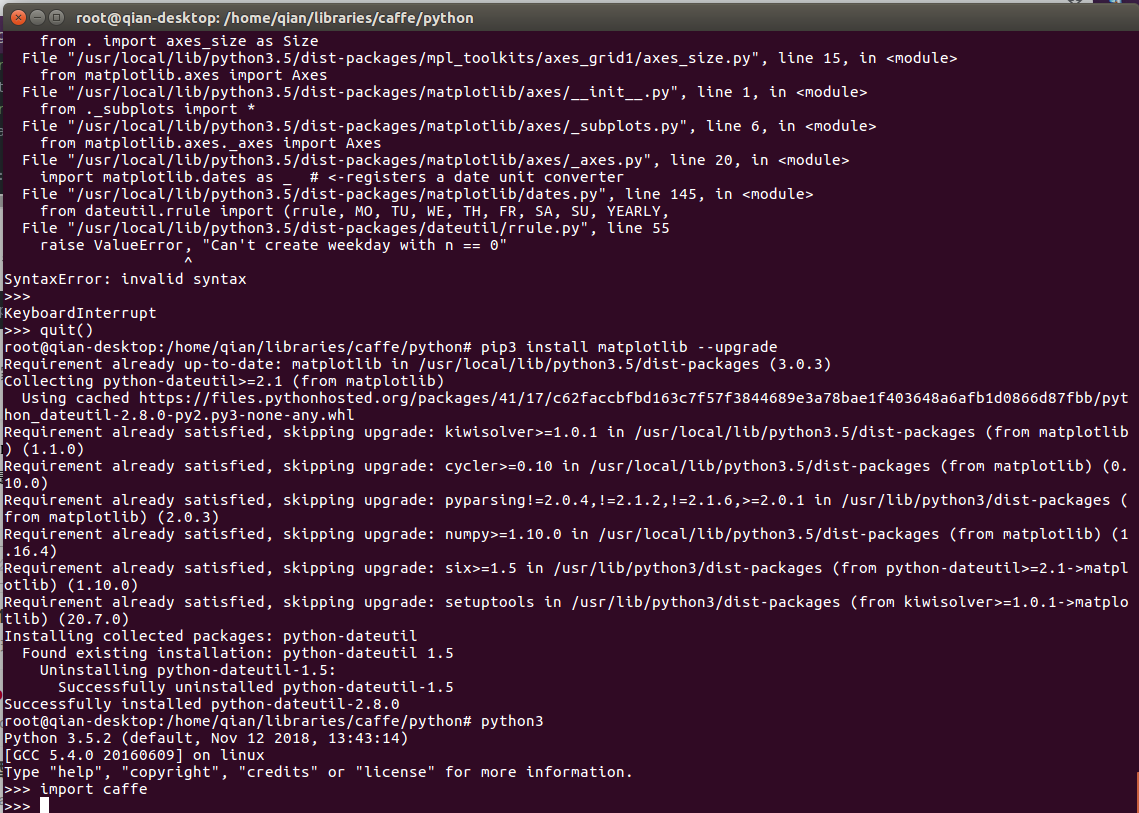

7. import caffe errors

Error: the caffe module cannot be found

Solution:

export PYTHONPATH=/home/qian/libraries/caffe/python
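The same effect can be had from inside a script, which is sometimes handy when the environment variable cannot be set. The sketch below assumes the Caffe checkout keeps its Python package in a python subdirectory, as the path above suggests; add_caffe_python_dir is a name made up for this example.

```python
import os
import sys


def add_caffe_python_dir(caffe_root):
    """Put <caffe_root>/python at the front of sys.path (once) and return it."""
    python_dir = os.path.join(caffe_root, "python")
    if python_dir not in sys.path:
        sys.path.insert(0, python_dir)
    return python_dir
```

After calling add_caffe_python_dir("/home/qian/libraries/caffe"), a following import caffe searches that directory first. Unlike the export, this only affects the current Python process.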

Error that requires upgrading matplotlib and its dependencies

>>> import caffe

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/home/qian/libraries/caffe/python/caffe/__init__.py", line 1, in <module>

from .pycaffe import Net, SGDSolver, NesterovSolver, AdaGradSolver, RMSPropSolver, AdaDeltaSolver, AdamSolver, NCCL, Timer

File "/home/qian/libraries/caffe/python/caffe/pycaffe.py", line 15, in <module>

import caffe.io

File "/home/qian/libraries/caffe/python/caffe/io.py", line 2, in <module>

import skimage.io

File "/usr/local/lib/python3.5/dist-packages/skimage/__init__.py", line 135, in <module>

from .data import data_dir

File "/usr/local/lib/python3.5/dist-packages/skimage/data/__init__.py", line 13, in <module>

from ..io import imread, use_plugin

File "/usr/local/lib/python3.5/dist-packages/skimage/io/__init__.py", line 15, in <module>

reset_plugins()

File "/usr/local/lib/python3.5/dist-packages/skimage/io/manage_plugins.py", line 91, in reset_plugins

_load_preferred_plugins()

File "/usr/local/lib/python3.5/dist-packages/skimage/io/manage_plugins.py", line 71, in _load_preferred_plugins

_set_plugin(p_type, preferred_plugins['all'])

File "/usr/local/lib/python3.5/dist-packages/skimage/io/manage_plugins.py", line 83, in _set_plugin

use_plugin(plugin, kind=plugin_type)

File "/usr/local/lib/python3.5/dist-packages/skimage/io/manage_plugins.py", line 254, in use_plugin

_load(name)

File "/usr/local/lib/python3.5/dist-packages/skimage/io/manage_plugins.py", line 298, in _load

fromlist=[modname])

File "/usr/local/lib/python3.5/dist-packages/skimage/io/_plugins/matplotlib_plugin.py", line 3, in <module>

from mpl_toolkits.axes_grid1 import make_axes_locatable

File "/usr/local/lib/python3.5/dist-packages/mpl_toolkits/axes_grid1/__init__.py", line 1, in <module>

from . import axes_size as Size

File "/usr/local/lib/python3.5/dist-packages/mpl_toolkits/axes_grid1/axes_size.py", line 15, in <module>

from matplotlib.axes import Axes

File "/usr/local/lib/python3.5/dist-packages/matplotlib/axes/__init__.py", line 1, in <module>

from ._subplots import *

File "/usr/local/lib/python3.5/dist-packages/matplotlib/axes/_subplots.py", line 6, in <module>

from matplotlib.axes._axes import Axes

File "/usr/local/lib/python3.5/dist-packages/matplotlib/axes/_axes.py", line 20, in <module>

import matplotlib.dates as _ # <-registers a date unit converter

File "/usr/local/lib/python3.5/dist-packages/matplotlib/dates.py", line 145, in <module>

from dateutil.rrule import (rrule, MO, TU, WE, TH, FR, SA, SU, YEARLY,

File "/usr/local/lib/python3.5/dist-packages/dateutil/rrule.py", line 55

raise ValueError, "Can't create weekday with n == 0"

^

SyntaxError: invalid syntax

Solution: https://blog.csdn.net/quantum7/article/details/83475530
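The traceback ends in a Python 2 style raise statement inside dateutil, which matches the python-dateutil 1.5 reported by pip earlier: the 1.x releases are written for Python 2, while the 2.x series supports Python 3. A minimal check on a version string might look like the sketch below; the function names are hypothetical, and the version string itself would come from something like pip3 show python-dateutil.

```python
def major_version(version):
    """Return the leading integer of a dotted version string such as '1.5'."""
    return int(version.split(".")[0])


def dateutil_supports_python3(version):
    """python-dateutil 1.x uses Python 2-only syntax; 2.x works on Python 3."""
    return major_version(version) >= 2
```

If the check fails for the installed version, upgrading python-dateutil under the same pip3 that serves this interpreter is the natural next step.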

2019.6.5

Finally done.

Build caffe using qmake (ubuntu&windows)

1. Problem

I want to build Caffe on Windows. The official Windows version is a little complex, since I need to change dependent libs frequently. For a cross-platform solution, Qt is an awesome choice.

2. Solution

Platform: Windows 10 (x64) + VS2013 (msvc120)

2.1 Prepare

Caffe uses a lot of C++ libs: boost, gflags, glog, hdf5, LevelDB, lmdb, openblas, opencv, protobuf. I uploaded an msvc120_x64 version here.

2.2 Code

Qt uses qmake to manage projects. All you need is a *.pro file, which plays the same role as CMakeLists.txt does for cmake.

Here is libcaffe.pro:

TEMPLATE = lib
CONFIG(debug, debug|release): TARGET = libcaffed
CONFIG(release, debug|release): TARGET = libcaffe
INCLUDEPATH += include src include/caffe/proto
DEFINES += USE_OPENCV CPU_ONLY
CONFIG += dll staticlib

# Input
HEADERS += \
    include/caffe/blob.hpp \
    include/caffe/caffe.hpp \
    include/caffe/common.hpp \
    include/caffe/data_reader.hpp \
    include/caffe/data_transformer.hpp \
    include/caffe/filler.hpp \
    include/caffe/internal_thread.hpp \
    include/caffe/layer.hpp \
    include/caffe/layers/absval_layer.hpp \
    include/caffe/layers/accuracy_layer.hpp \
    include/caffe/layers/argmax_layer.hpp \
    include/caffe/layers/base_conv_layer.hpp \
    include/caffe/layers/base_data_layer.hpp \
    include/caffe/layers/batch_norm_layer.hpp \
    include/caffe/layers/batch_reindex_layer.hpp \
    include/caffe/layers/bias_layer.hpp \
    include/caffe/layers/bnll_layer.hpp \
    include/caffe/layers/box_annotator_ohem_layer.hpp \
    include/caffe/layers/concat_layer.hpp \
    include/caffe/layers/contrastive_loss_layer.hpp \
    include/caffe/layers/conv_layer.hpp \
    include/caffe/layers/crop_layer.hpp \
    include/caffe/layers/cudnn_conv_layer.hpp \
    include/caffe/layers/cudnn_lcn_layer.hpp \
    include/caffe/layers/cudnn_lrn_layer.hpp \
    include/caffe/layers/cudnn_pooling_layer.hpp \
    include/caffe/layers/cudnn_relu_layer.hpp \
    include/caffe/layers/cudnn_sigmoid_layer.hpp \
    include/caffe/layers/cudnn_softmax_layer.hpp \
    include/caffe/layers/cudnn_tanh_layer.hpp \
    include/caffe/layers/data_layer.hpp \
    include/caffe/layers/deconv_layer.hpp \
    include/caffe/layers/dropout_layer.hpp \
    include/caffe/layers/dummy_data_layer.hpp \
    include/caffe/layers/eltwise_layer.hpp \
    include/caffe/layers/elu_layer.hpp \
    include/caffe/layers/embed_layer.hpp \
    include/caffe/layers/euclidean_loss_layer.hpp \
    include/caffe/layers/exp_layer.hpp \
    include/caffe/layers/filter_layer.hpp \
    include/caffe/layers/flatten_layer.hpp \
    include/caffe/layers/hdf5_data_layer.hpp \
    include/caffe/layers/hdf5_output_layer.hpp \
    include/caffe/layers/hinge_loss_layer.hpp \
    include/caffe/layers/im2col_layer.hpp \
    include/caffe/layers/image_data_layer.hpp \
    include/caffe/layers/infogain_loss_layer.hpp \
    include/caffe/layers/inner_product_layer.hpp \
    include/caffe/layers/input_layer.hpp \
    include/caffe/layers/log_layer.hpp \
    include/caffe/layers/loss_layer.hpp \
    include/caffe/layers/lrn_layer.hpp \
    include/caffe/layers/lstm_layer.hpp \
    include/caffe/layers/memory_data_layer.hpp \
    include/caffe/layers/multinomial_logistic_loss_layer.hpp \
    include/caffe/layers/mvn_layer.hpp \
    include/caffe/layers/neuron_layer.hpp \
    include/caffe/layers/parameter_layer.hpp \
    include/caffe/layers/pooling_layer.hpp \
    include/caffe/layers/power_layer.hpp \
    include/caffe/layers/prelu_layer.hpp \
    include/caffe/layers/psroi_pooling_layer.hpp \
    include/caffe/layers/python_layer.hpp \
    include/caffe/layers/recurrent_layer.hpp \
    include/caffe/layers/reduction_layer.hpp \
    include/caffe/layers/relu_layer.hpp \
    include/caffe/layers/reshape_layer.hpp \
    include/caffe/layers/rnn_layer.hpp \
    include/caffe/layers/roi_pooling_layer.hpp \
    include/caffe/layers/scale_layer.hpp \
    include/caffe/layers/sigmoid_cross_entropy_loss_layer.hpp \
    include/caffe/layers/sigmoid_layer.hpp \
    include/caffe/layers/silence_layer.hpp \
    include/caffe/layers/slice_layer.hpp \
    include/caffe/layers/smooth_l1_loss_layer.hpp \
    include/caffe/layers/smooth_l1_loss_ohem_layer.hpp \
    include/caffe/layers/softmax_layer.hpp \
    include/caffe/layers/softmax_loss_layer.hpp \
    include/caffe/layers/softmax_loss_ohem_layer.hpp \
    include/caffe/layers/split_layer.hpp \
    include/caffe/layers/spp_layer.hpp \
    include/caffe/layers/tanh_layer.hpp \
    include/caffe/layers/threshold_layer.hpp \
    include/caffe/layers/tile_layer.hpp \
    include/caffe/layers/window_data_layer.hpp \
    include/caffe/layer_factory.hpp \
    include/caffe/net.hpp \
    include/caffe/parallel.hpp \
    include/caffe/proto/caffe.pb.h \
    include/caffe/sgd_solvers.hpp \
    include/caffe/solver.hpp \
    include/caffe/solver_factory.hpp \
    include/caffe/syncedmem.hpp \
    include/caffe/util/benchmark.hpp \
    include/caffe/util/blocking_queue.hpp \
    include/caffe/util/cudnn.hpp \
    include/caffe/util/db.hpp \
    include/caffe/util/db_leveldb.hpp \
    include/caffe/util/db_lmdb.hpp \
    include/caffe/util/device_alternate.hpp \
    include/caffe/util/format.hpp \
    include/caffe/util/hdf5.hpp \
    include/caffe/util/im2col.hpp \
    include/caffe/util/insert_splits.hpp \
    include/caffe/util/io.hpp \
    include/caffe/util/math_functions.hpp \
    include/caffe/util/mkl_alternate.hpp \
    include/caffe/util/rng.hpp \
    include/caffe/util/signal_handler.h \
    include/caffe/util/upgrade_proto.hpp

SOURCES += \
    src/caffe/blob.cpp \
    src/caffe/common.cpp \
    src/caffe/data_reader.cpp \
    src/caffe/data_transformer.cpp \
    src/caffe/internal_thread.cpp \
    src/caffe/layer.cpp \
    src/caffe/layers/absval_layer.cpp \
    src/caffe/layers/accuracy_layer.cpp \
    src/caffe/layers/argmax_layer.cpp \
    src/caffe/layers/base_conv_layer.cpp \
    src/caffe/layers/base_data_layer.cpp \
    src/caffe/layers/batch_norm_layer.cpp \
    src/caffe/layers/batch_reindex_layer.cpp \
    src/caffe/layers/bias_layer.cpp \
    src/caffe/layers/bnll_layer.cpp \
    src/caffe/layers/box_annotator_ohem_layer.cpp \
    src/caffe/layers/concat_layer.cpp \
    src/caffe/layers/contrastive_loss_layer.cpp \
    src/caffe/layers/conv_layer.cpp \
    src/caffe/layers/crop_layer.cpp \
    src/caffe/layers/cudnn_conv_layer.cpp \
    src/caffe/layers/cudnn_lcn_layer.cpp \
    src/caffe/layers/cudnn_lrn_layer.cpp \
    src/caffe/layers/cudnn_pooling_layer.cpp \
    src/caffe/layers/cudnn_relu_layer.cpp \
    src/caffe/layers/cudnn_sigmoid_layer.cpp \
    src/caffe/layers/cudnn_softmax_layer.cpp \
    src/caffe/layers/cudnn_tanh_layer.cpp \
    src/caffe/layers/data_layer.cpp \
    src/caffe/layers/deconv_layer.cpp \
    src/caffe/layers/dropout_layer.cpp \
    src/caffe/layers/dummy_data_layer.cpp \
    src/caffe/layers/eltwise_layer.cpp \
    src/caffe/layers/elu_layer.cpp \
    src/caffe/layers/embed_layer.cpp \
    src/caffe/layers/euclidean_loss_layer.cpp \
    src/caffe/layers/exp_layer.cpp \
    src/caffe/layers/filter_layer.cpp \
    src/caffe/layers/flatten_layer.cpp \
    src/caffe/layers/hdf5_data_layer.cpp \
    src/caffe/layers/hdf5_output_layer.cpp \
    src/caffe/layers/hinge_loss_layer.cpp \
    src/caffe/layers/im2col_layer.cpp \
    src/caffe/layers/image_data_layer.cpp \
    src/caffe/layers/infogain_loss_layer.cpp \
    src/caffe/layers/inner_product_layer.cpp \
    src/caffe/layers/input_layer.cpp \
    src/caffe/layers/log_layer.cpp \
    src/caffe/layers/loss_layer.cpp \
    src/caffe/layers/lrn_layer.cpp \
    src/caffe/layers/lstm_layer.cpp \
    src/caffe/layers/lstm_unit_layer.cpp \
    src/caffe/layers/memory_data_layer.cpp \
    src/caffe/layers/multinomial_logistic_loss_layer.cpp \
    src/caffe/layers/mvn_layer.cpp \
    src/caffe/layers/neuron_layer.cpp \
    src/caffe/layers/parameter_layer.cpp \
    src/caffe/layers/pooling_layer.cpp \
    src/caffe/layers/power_layer.cpp \
    src/caffe/layers/prelu_layer.cpp \
    src/caffe/layers/psroi_pooling_layer.cpp \
    src/caffe/layers/recurrent_layer.cpp \
    src/caffe/layers/reduction_layer.cpp \
    src/caffe/layers/relu_layer.cpp \
    src/caffe/layers/reshape_layer.cpp \
    src/caffe/layers/rnn_layer.cpp \
    src/caffe/layers/roi_pooling_layer.cpp \
    src/caffe/layers/scale_layer.cpp \
    src/caffe/layers/sigmoid_cross_entropy_loss_layer.cpp \
    src/caffe/layers/sigmoid_layer.cpp \
    src/caffe/layers/silence_layer.cpp \
    src/caffe/layers/slice_layer.cpp \
    src/caffe/layers/smooth_l1_loss_layer.cpp \
    src/caffe/layers/smooth_L1_loss_ohem_layer.cpp \
    src/caffe/layers/softmax_layer.cpp \
    src/caffe/layers/softmax_loss_layer.cpp \
    src/caffe/layers/softmax_loss_ohem_layer.cpp \
    src/caffe/layers/split_layer.cpp \
    src/caffe/layers/spp_layer.cpp \
    src/caffe/layers/tanh_layer.cpp \
    src/caffe/layers/threshold_layer.cpp \
    src/caffe/layers/tile_layer.cpp \
    src/caffe/layers/window_data_layer.cpp \
    src/caffe/layer_factory.cpp \
    src/caffe/net.cpp \
    src/caffe/parallel.cpp \
    src/caffe/solver.cpp \
    src/caffe/solvers/adadelta_solver.cpp \
    src/caffe/solvers/adagrad_solver.cpp \
    src/caffe/solvers/adam_solver.cpp \
    src/caffe/solvers/nesterov_solver.cpp \
    src/caffe/solvers/rmsprop_solver.cpp \
    src/caffe/solvers/sgd_solver.cpp \
    src/caffe/syncedmem.cpp \
    src/caffe/util/benchmark.cpp \
    src/caffe/util/blocking_queue.cpp \
    src/caffe/util/cudnn.cpp \
    src/caffe/util/db.cpp \
    src/caffe/util/db_leveldb.cpp \
    src/caffe/util/db_lmdb.cpp \
    src/caffe/util/hdf5.cpp \
    src/caffe/util/im2col.cpp \
    src/caffe/util/insert_splits.cpp \
    src/caffe/util/io.cpp \
    src/caffe/util/math_functions.cpp \
    src/caffe/util/signal_handler.cpp \
    src/caffe/util/upgrade_proto.cpp \
    src/caffe/proto/caffe.pb.cc

win32 {
    # opencv
    PATH_OPENCV_INCLUDE = "H:\3rdparty\OpenCV\opencv310\build\include"
    PATH_OPENCV_LIBRARIES = "H:\3rdparty\OpenCV\opencv310\build\x64\vc12\lib"
    VERSION_OPENCV = 310
    INCLUDEPATH += $${PATH_OPENCV_INCLUDE}
    CONFIG(debug, debug|release) {
        LIBS += -L$${PATH_OPENCV_LIBRARIES} -lopencv_core$${VERSION_OPENCV}d
        LIBS += -L$${PATH_OPENCV_LIBRARIES} -lopencv_highgui$${VERSION_OPENCV}d
        LIBS += -L$${PATH_OPENCV_LIBRARIES} -lopencv_imgcodecs$${VERSION_OPENCV}d
        LIBS += -L$${PATH_OPENCV_LIBRARIES} -lopencv_imgproc$${VERSION_OPENCV}d
    }
    CONFIG(release, debug|release) {
        LIBS += -L$${PATH_OPENCV_LIBRARIES} -lopencv_core$${VERSION_OPENCV}
        LIBS += -L$${PATH_OPENCV_LIBRARIES} -lopencv_highgui$${VERSION_OPENCV}
        LIBS += -L$${PATH_OPENCV_LIBRARIES} -lopencv_imgcodecs$${VERSION_OPENCV}
        LIBS += -L$${PATH_OPENCV_LIBRARIES} -lopencv_imgproc$${VERSION_OPENCV}
    }

    # glog
    INCLUDEPATH += H:\3rdparty\glog\include
    LIBS += -LH:\3rdparty\glog\lib\x64\v120\Debug\dynamic -llibglog

    # boost
    INCLUDEPATH += H:\3rdparty\boost\boost_1_59_0
    CONFIG(debug, debug|release): BOOST_VERSION = "-vc120-mt-gd-1_59"
    CONFIG(release, debug|release): BOOST_VERSION = "-vc120-mt-1_59"
    LIBS += -LH:\3rdparty\boost\boost_1_59_0\lib64-msvc-12.0 \
        -llibboost_system$${BOOST_VERSION} \
        -llibboost_date_time$${BOOST_VERSION} \
        -llibboost_filesystem$${BOOST_VERSION} \
        -llibboost_thread$${BOOST_VERSION} \
        -llibboost_regex$${BOOST_VERSION}

    # gflags
    INCLUDEPATH += H:\3rdparty\gflags\include
    CONFIG(debug, debug|release): LIBS += -LH:\3rdparty\gflags\x64\v120\dynamic\Lib -lgflagsd
    CONFIG(release, debug|release): LIBS += -LH:\3rdparty\gflags\x64\v120\dynamic\Lib -lgflags

    # protobuf
    INCLUDEPATH += H:\3rdparty\protobuf\include
    CONFIG(debug, debug|release): LIBS += -LH:\3rdparty\protobuf\lib\x64\v120\Debug -llibprotobuf
    CONFIG(release, debug|release): LIBS += -LH:\3rdparty\protobuf\lib\x64\v120\Release -llibprotobuf

    # hdf5
    INCLUDEPATH += H:\3rdparty\hdf5\include
    LIBS += -LH:\3rdparty\hdf5\lib\x64 -lhdf5 -lhdf5_hl -lhdf5_tools -lhdf5_cpp

    # levelDb
    INCLUDEPATH += H:\3rdparty\LevelDB\include
    CONFIG(debug, debug|release): LIBS += -LH:\3rdparty\LevelDB\lib\x64\v120\Debug -lLevelDb
    CONFIG(release, debug|release): LIBS += -LH:\3rdparty\LevelDB\lib\x64\v120\Release -lLevelDb

    # lmdb
    INCLUDEPATH += H:\3rdparty\lmdb\include
    CONFIG(debug, debug|release): LIBS += -LH:\3rdparty\lmdb\lib\x64 -llmdbD
    CONFIG(release, debug|release): LIBS += -LH:\3rdparty\lmdb\lib\x64 -llmdb

    # openblas
    INCLUDEPATH += H:\3rdparty\openblas\include
    LIBS += -LH:\3rdparty\openblas\lib\x64 -llibopenblas
}

You need to change the library paths to your own.

Done.

You can find the caffe project on my GitHub.

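Caffe installation (Ubuntu 14.04 + caffe + GTX 1080 + CUDA8.0 + cuDNN v5)

I. Hardware and environment

GPU: GTX 1080

OS: Ubuntu 14.04

CUDA: cuda_8.0.44_linux.run (be sure to download exactly this file)

cuDNN: cudnn-8.0-linux-x64-v5.1.tgz

The GTX 1080 requires CUDA 8.0. Do not download the deb package, or countless pitfalls await you. The GTX 1080 also requires cuDNN v5.1 for CUDA 8.0. Otherwise, when running models with Caffe, accuracy is normal in CPU mode or plain GPU mode, but as soon as cuDNN is enabled the accuracy (acc) drops to about 0.1 and the loss becomes very large. Downloading requires registration. It is best to register an account and choose the matching version yourself rather than use ready-made packages from other online tutorials, which are very likely to cause problems.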

II. Installation

1 Install the dependencies

sudo apt-get install --no-install-recommends libboost-all-dev

sudo apt-get install libprotobuf-dev libleveldb-dev libsnappy-dev libopencv-dev libhdf5-serial-dev protobuf-compiler

sudo apt-get install libgflags-dev libgoogle-glog-dev liblmdb-dev

2 Install BLAS

BLAS (Basic Linear Algebra Subprograms) is a library of basic linear algebra routines. Besides BLAS there is Intel's MKL (Math Kernel Library), which has to be purchased or requested with an edu email address; in return it is faster than BLAS. With a GPU this library matters much less, so the free ATLAS implementation of BLAS is used here. Install it with:

sudo apt-get install libatlas-base-dev

3 Install OpenCV

OpenCV stands for Open Source Computer Vision Library. It is a cross-platform computer vision library released under the BSD (open source) license that runs on Linux, Windows, and Mac OS. It is lightweight and efficient, consisting of a set of C functions and a small number of C++ classes, and it provides interfaces for Python, Ruby, MATLAB, and other languages. It implements many general-purpose algorithms in image processing and computer vision. Caffe uses many OpenCV functions, including basic image processing. Run the following commands:

3.1 Install cmake and the other tools needed to build OpenCV

sudo apt-get install build-essential

sudo apt-get install cmake git libgtk2.0-dev pkg-config libavcodec-dev libavformat-dev libswscale-dev

sudo apt-get install python-dev python-numpy libtbb2 libtbb-dev libjpeg-dev libpng-dev libtiff-dev libjasper-dev libdc1394-22-dev

3.2 Download OpenCV

mkdir ~/opencv

cd ~/opencv

wget https://github.com/Itseez/opencv/archive/3.0.0-alpha.zip -O opencv-3.0.0-alpha.zip

unzip opencv-3.0.0-alpha.zip

cd opencv-3.0.0-alpha

3.3 Build and install OpenCV

mkdir build

cd build

cmake ..

sudo make

sudo make install

sudo /bin/bash -c 'echo "/usr/local/lib" > /etc/ld.so.conf.d/opencv.conf'

sudo ldconfig

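4 Install CUDA

CUDA (Compute Unified Device Architecture) is the computing platform released by the GPU vendor NVIDIA. It is a general-purpose parallel computing architecture that lets the GPU solve complex computational problems, and it includes the CUDA instruction set architecture (ISA) and the parallel compute engine inside the GPU. Developers can write programs for the CUDA architecture in C, one of the most widely used high-level programming languages. Almost every layer in Caffe has a GPU implementation, and using CUDA greatly speeds up training. CUDA is also one of the harder dependencies to install; most of the problems during this installation came from installing CUDA.

Some background before installing: Ubuntu 14.04 ships with a driver named nouveau, which causes a lot of trouble for the installation. If your display device is an NVIDIA card, especially with a single GPU, you need to shut down the GUI before installing. Press Ctrl+Alt+F1 to enter tty1, run sudo stop lightdm to stop the display manager, and then disable the old graphics driver:

cd /etc/modprobe.d/

sudo vim nvidia-installer-disable-nouveau.conf

Enter the following, then save and exit:

blacklist nouveau

options nouveau modeset=0

Open /etc/default/grub and add at the end of the file:

rdblacklist nouveau nouveau.modeset=0

Then install the NVIDIA driver:

sudo sh ./NVIDIA-Linux-x86_64-352.30.run

After CUDA has been installed successfully, run

sudo start lightdm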

5 Install cuDNN

cd ~

sudo tar xvf cudnn-8.0-linux-x64-v5.1.tgz

cd cuda/include

sudo cp *.h /usr/local/include/

cd ../lib64

sudo cp lib* /usr/local/lib/

cd /usr/local/lib

sudo chmod +r libcudnn.so.5.1.5

sudo ln -sf libcudnn.so.5.1.5 libcudnn.so.5

sudo ln -sf libcudnn.so.5 libcudnn.so

sudo ldconfig

Note: the version number after libcudnn.so may differ depending on the minor version of the cuDNN package you downloaded. Look in ~/cuda/lib64 and adjust the commands accordingly.

6 Install Caffe

6.1 Download Caffe

cd ~

git clone https://github.com/BVLC/caffe.git

6.2 Install the dependencies

sudo apt-get install libatlas-base-dev libprotobuf-dev libleveldb-dev libsnappy-dev libopencv-dev libboost-all-dev libhdf5-serial-dev libgflags-dev libgoogle-glog-dev liblmdb-dev protobuf-compiler

6.3 Build Caffe

cd ~/caffe

cp Makefile.config.example Makefile.config

vim Makefile.config

Edit Makefile.config:

USE_CUDNN := 1: remove the leading #

USE_OPENCV := 0: remove the leading #

OPENCV_VERSION := 3: remove the leading # (because I installed OpenCV 3)

make all make test make runtest
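Removing the leading # from an option in Makefile.config can be scripted with sed rather than done by hand in vim. A minimal sketch follows; enable_option is a hypothetical helper name, not something shipped with caffe, and it relies on GNU sed as found on Ubuntu.

```shell
# Sketch: uncomment a "# NAME := value" line in a Makefile.config-style file.
# enable_option is a hypothetical helper; it edits the file in place.
enable_option() {
    local file="$1"
    local name="$2"
    sed -i -E "s/^#[[:space:]]*(${name}[[:space:]]*:=)/\1/" "$file"
}

# Example:
#   enable_option Makefile.config USE_CUDNN
#   enable_option Makefile.config OPENCV_VERSION
```

Only lines that start with # followed by the exact option name are touched, so other commented settings stay as they are.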

That's it. Now enjoy the power of the GTX 1080.

Caffe+Ubuntu 16.04 Installation Tutorial

Introduction

I recently installed caffe and ran into some problems, so I wrote this tutorial to make things easier for others. The problem I ran into concerned the hdf5 files.

Installing caffe

Update the system and install the required packages

sudo apt-get update
sudo apt-get upgrade
sudo apt-get install git
sudo apt-get install libprotobuf-dev libleveldb-dev libsnappy-dev libopencv-dev libhdf5-serial-dev protobuf-compiler
sudo apt-get install --no-install-recommends libboost-all-dev
sudo apt-get install libatlas-base-dev
sudo apt-get install python-dev
sudo apt-get install libgflags-dev libgoogle-glog-dev liblmdb-dev

Download the caffe source:

git clone https://github.com/bvlc/caffe.git
cd caffe/
mv Makefile.config.example Makefile.config

Edit Makefile.config (an important step)

// If you are not using a GPU, change

# CPU_ONLY := 1

to:

CPU_ONLY := 1

// If you use cuDNN, change

# USE_CUDNN := 1

to:

USE_CUDNN := 1

// If your OpenCV version is 3, change

# OPENCV_VERSION := 3

to:

OPENCV_VERSION := 3

// If you want to write layers in Python, change

# WITH_PYTHON_LAYER := 1

to:

WITH_PYTHON_LAYER := 1

// An important item

Below the line # Whatever else you find you need goes here., change

INCLUDE_DIRS :=

to:

INCLUDE_DIRS :=
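Since the problem this tutorial addresses is hdf5, one common way to extend INCLUDE_DIRS on Ubuntu 16.04 is to append the directory holding the serial HDF5 headers. The sketch below is an assumption, not the author's original setting: the path /usr/include/hdf5/serial is where the libhdf5-serial-dev package is assumed to install its headers, and add_hdf5_include is a hypothetical helper name.

```shell
# Sketch: append a header directory to the INCLUDE_DIRS line of
# Makefile.config, unless that line already contains it.
# Both the helper name and the default directory are assumptions.
add_hdf5_include() {
    local file="$1"
    local dir="${2:-/usr/include/hdf5/serial}"
    # Nothing to do if an INCLUDE_DIRS line already mentions the directory
    if grep -q "^INCLUDE_DIRS[[:space:]]*:=.*${dir}" "$file"; then
        return 0
    fi
    sed -i -E "s|^(INCLUDE_DIRS[[:space:]]*:=.*)|\1 ${dir}|" "$file"
}

# Example:
#   add_hdf5_include Makefile.config
```

Check that the directory actually exists on your system before relying on it; if it does not, pass the correct one as the second argument.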

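Create links for hdf5

// First run the following two commands:

find . -type f -exec sed -i -e 's^"hdf5.h"^"hdf5/serial/hdf5.h"^g' -e 's^"hdf5_hl.h"^"hdf5/serial/hdf5_hl.h"^g' '{}' \;

cd /usr/lib/x86_64-linux-gnu

// Then, depending on your system, run the following two commands:

sudo ln -s libhdf5_serial.so.10.1.0 libhdf5.so
sudo ln -s libhdf5_serial_hl.so.10.0.2 libhdf5_hl.so

// Note: the numbers 10.1.0 and 10.0.2 may differ from system to system; on Ubuntu 15.10, for example, the number is 8.0.2.

// The exact numbers can be read off the suffixes of the corresponding files in that directory.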
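The find command above runs sed over every file under the current directory, including the contents of .git. A more conservative variant, sketched here as an assumption about what is sufficient, limits the rewrite to C/C++ and CUDA sources and headers; rewrite_hdf5_includes is a hypothetical name and the extension list may need extending.

```shell
# Sketch: apply the hdf5 include rewrite only to source and header files,
# skipping anything inside a .git directory.
rewrite_hdf5_includes() {
    local root="${1:-.}"
    find "$root" -type f \
        \( -name '*.cpp' -o -name '*.hpp' -o -name '*.h' -o -name '*.cu' \) \
        ! -path '*/.git/*' \
        -exec sed -i \
            -e 's^"hdf5.h"^"hdf5/serial/hdf5.h"^g' \
            -e 's^"hdf5_hl.h"^"hdf5/serial/hdf5_hl.h"^g' '{}' +
}

# Example (from the caffe source directory):
#   rewrite_hdf5_includes .
```

The substitution expressions are the same as in the original command, so running the function twice leaves already rewritten includes unchanged.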

Build everything

cd
cd caffe/
make all -j4
make test -j4
make runtest -j4

On an 8-core machine you can use make -j8 instead. Set the number to match your own core count to speed up the build.
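Instead of hard-coding the job count, it can be taken from the machine. A small sketch, assuming the nproc utility from GNU coreutils is available (it is on stock Ubuntu); make_jobs is a hypothetical helper name.

```shell
# Sketch: print the number of parallel make jobs to use.
# Falls back to 1 when nproc is not available.
make_jobs() {
    nproc 2>/dev/null || echo 1
}

# Example:
#   make all -j"$(make_jobs)"
#   make test -j"$(make_jobs)"
```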

Reference

http://www.jb51.cc/blog/autocyz/article/p-6076613.html

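Caffe+Ubuntu14.04+CUDA7.5 Installation Notes

Installing Ubuntu 14.04

- First download Ubuntu 14.04 from the official site: http://www.ubuntu.com/download/desktop

ubuntu-14.04.4-desktop-amd64.iso

- Create a bootable USB installer following the guide "Ubuntu 14.04 installation and usage: [1] creating an installation USB drive", then install the system following the guide "Ubuntu 14.04 installation tutorial".

- Partitions:

- boot: 200M, primary partition

- /: 50000M

- swap: 4000M

- home: the remaining space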

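Installing CUDA 7.5

- Download CUDA 7.5 from https://developer.nvidia.com/cuda-downloads

File: cuda_7.5.18_linux.run

- Before logging in, press Ctrl+Alt+F1 at the login screen to get a command prompt. [Disable the nouveau driver]

- Run: sudo vi /etc/modprobe.d/blacklist-nouveau.conf

- Enter the following:

blacklist nouveau
options nouveau modeset=0

Then save and quit (:wq).

- Run: sudo update-initramfs -u

Then run: lsmod | grep nouveau and see whether it prints anything.

No output means the driver has been disabled. If there is output, reboot and check again:

sudo reboot

- After the reboot, do not log in to the desktop at the login screen. Press Ctrl+Alt+F1 to get a command prompt.

- Install the dependencies:

sudo service lightdm stop

sudo apt-get install g++

sudo apt-get install git

sudo apt-get install freeglut3-dev

- Assuming cuda_7.5.18_linux.run is in the ~ directory, switch to it: cd ~

- Run: sudo sh cuda_7.5.18_linux.run

- The installer first shows a long text (the EULA). Keep pressing the space bar until it reaches 100%, then answer the prompts with accept, yes, yes, or Enter to proceed. When the installation finishes, reboot and check with ls:

ls /dev/nvidia*

Several files (or directories) whose names start with nvidia should now exist under /dev.

Alternatively, run sudo nvcc --version, which prints the CUDA compiler version information.

- Configure the environment variables

Run: sudo vi /etc/profile

and add the CUDA environment variable settings at the bottom of the file.

- Build the samples

After a successful installation there is an NVIDIA_CUDA-7.5_Samples folder in the ~ directory. Switch to it and run sudo make; after ten minutes or so all the samples will have been built. The executables are placed in the

NVIDIA_CUDA-7.5_Samples/bin/x86_64/linux/release directory.

For example, running ./nbody there starts a demo.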
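Whether nouveau is still active can also be checked from the list of loaded kernel modules. The sketch below reads /proc/modules, which is the data lsmod displays; nouveau_loaded is a hypothetical helper name, and the optional argument exists only so the check can be pointed at another file.

```shell
# Sketch: succeed (exit status 0) if the nouveau kernel module is loaded.
nouveau_loaded() {
    local modules="${1:-/proc/modules}"
    # Each line of /proc/modules starts with the module name and a space
    grep -q '^nouveau ' "$modules"
}

# Example:
#   if nouveau_loaded; then
#       echo "nouveau is still loaded; reboot and check again"
#   fi
```

Matching on the start of the line avoids false positives from modules that merely list nouveau among their users.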

Problems encountered while installing CUDA

Q1

- Running sudo apt-get install g++ fails with the following error:

g++ : Depends: g++-4.8 (>= 4.8.2-5~) but it is not going to be installed

- This happens because the Ubuntu 14.04 package sources are out of date or unreachable. Updating the sources fixes it.

- First, back up the original sources file, sources.list:

sudo cp /etc/apt/sources.list /etc/apt/sources.list_backup

- Then open it:

sudo gedit /etc/apt/sources.list

and add the new source entries at the end of the file.

- Finally, run sudo apt-get update

Q2

solution:

Note that the key string at the end of the command is the one shown in the error message; it is different for each person.

Installing caffe

- Download caffe by running: sudo git clone https://github.com/BVLC/caffe.git

- Install the dependencies:

sudo apt-get install libatlas-base-dev
sudo apt-get install libprotobuf-dev
sudo apt-get install libleveldb-dev
sudo apt-get install libsnappy-dev
sudo apt-get install libopencv-dev
sudo apt-get install libboost-all-dev
sudo apt-get install libhdf5-serial-dev
sudo apt-get install libgflags-dev
sudo apt-get install libgoogle-glog-dev
sudo apt-get install liblmdb-dev
sudo apt-get install protobuf-compiler

- Build caffe

cd ~/caffe
sudo cp Makefile.config.example Makefile.config
make all

- Configure the runtime environment

sudo vi /etc/ld.so.conf.d/caffe.conf

Add the line:

/usr/local/cuda/lib64

- Refresh the linker configuration

sudo ldconfig

- Test caffe by running the following commands:

cd ~/caffe
sudo sh data/mnist/get_mnist.sh
sudo sh examples/mnist/create_mnist.sh

Finally, run the training test:

sudo sh examples/mnist/train_lenet.sh

Other dependencies

Looking at Makefile.config in the caffe directory, we can see that many options are left at their default settings. We can install additional dependencies to make caffe run more efficiently.

Installing OpenCV 3.0

- Someone on GitHub has written complete scripts that automatically download, build, install, and configure OpenCV.

- They can be downloaded from the network drive of Xin-Yu Ou (欧新宇), author of the "Caffe + Ubuntu 15.04 + CUDA 7.5 beginner's installation and configuration guide":

PS: For convenience, I provide a Baidu cloud drive to share some of the packages and links needed during installation (all packages are for academic exchange only; please download from the official sites whenever possible). Baidu cloud link: http://pan.baidu.com/s/1qX1uFHa password: wysa

- The Install-OpenCV-master folder contains installation scripts for each OpenCV version.

- Switch to that directory and install the dependencies:

sudo sh Ubuntu/dependencies.sh

- Run the OpenCV 3.0 installation script:

sudo sh Ubuntu/3.0/opencv3_0_0.sh

and wait for it to finish.

- Update Makefile.config for the new OpenCV installation.

- (Optional) OpenCV 3.1 has been released. To install the latest OpenCV 3.1, first run

sudo sh get_latest_version_download_file.sh

to obtain the latest download URL, then update the download URL in opencv3_0_0.sh and correct the file names accordingly.

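Problems encountered while installing OpenCV 3

- While running

sudo sh Ubuntu/3.0/opencv3_0_0.sh

the script hangs at one point, showing that it is downloading a file: ippicv_linux_20141027.tgz

The file cannot be downloaded because of the firewall.

- Download ippicv_linux_20141027.tgz manually from the page "[Other documents] ippicv_linux_20141027.tgz".

- After downloading, copy it into the opencv/3rdparty/ippicv/downloads/linux-8b449a536a2157bcad08a2b9f266828b/ directory.

- http://stackoverflow.com/questions/25726768/opencv-3-0-trouble-with-installation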

Installing BLAS: choosing MKL

- First download MKL (Intel(R) Parallel Studio XE Cluster Edition for Linux 2016)

URL: https://software.intel.com/en-us/intel-education-offerings

It is also available from the network drive of Xin-Yu Ou (欧新宇), author of the "Caffe + Ubuntu 15.04 + CUDA 7.5 beginner's installation and configuration guide". You need to apply for a serial number yourself.

- The downloaded file is parallel_studio_xe_2016.tgz

- Run the following commands:

$ tar zxvf parallel_studio_xe_2016.tgz
$ chmod a+x parallel_studio_xe_2016 -R
$ sh install_GUI.sh

- Environment configuration:

$ sudo gedit /etc/ld.so.conf.d/intel_mkl.conf

Then add the following lines:

/opt/intel/lib/intel64
/opt/intel/mkl/lib/intel64

Apply the configuration: sudo ldconfig -v

The MKL installation is now complete.

- Edit Makefile.config

# BLAS choice:
# atlas for ATLAS (default)
# mkl for MKL
# open for OpenBlas
BLAS := mkl
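Changing the value of an existing, uncommented setting such as BLAS can be scripted as well. A minimal sketch, assuming GNU sed; set_make_var is a hypothetical helper name and not part of caffe.

```shell
# Sketch: replace the value of "NAME := ..." in a Makefile.config-style file.
# Only lines that start with the exact variable name are changed, so
# BLAS_INCLUDE and commented lines are left alone when NAME is BLAS.
set_make_var() {
    local file="$1"
    local name="$2"
    local value="$3"
    sed -i -E "s|^(${name}[[:space:]]*:=).*|\1 ${value}|" "$file"
}

# Example:
#   set_make_var Makefile.config BLAS mkl
```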

Installing cuDNN

- Download cuDNN

Download page: https://developer.nvidia.com/cudnn

or from the network drive: http://pan.baidu.com/s/1bnOKBO

Download the matching file, cudnn-7.0-linux-x64-v4.0-rc.tgz, and put it in the ~ directory.

- Switch to the ~ directory and run:

sudo tar xvf cudnn-7.0-linux-x64-v4.0-rc.tgz
cd cuda/include
sudo cp *.h /usr/local/include/
cd ../lib64
sudo cp lib* /usr/local/lib/
cd /usr/local/lib
sudo chmod +r libcudnn.so.4.0.4
sudo ln -sf libcudnn.so.4.0.4 libcudnn.so.4
sudo ln -sf libcudnn.so.4 libcudnn.so
sudo ldconfig

- Edit Makefile.config

# cuDNN acceleration switch (uncomment to build with cuDNN).
USE_CUDNN := 1

cuDNN version problem

An error appeared while running make on the project.

Solution:

Switch to the V3 release of cuDNN. See "Some Caffe project build errors and their solutions".

Rebuilding and testing caffe

- Build

sudo make clean
sudo make all

- Sample test (much faster than without cuDNN):

sh data/mnist/get_mnist.sh
sh examples/mnist/create_mnist.sh

- We can increase the number of iterations to 50000:

sudo gedit examples/mnist/lenet_solver.prototxt

Set max_iter: 50000

Finally:

sh examples/mnist/train_lenet.sh
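The max_iter change can be made without opening an editor. This is a sketch that assumes the solver file contains a line of the form max_iter: <number>, as the edit above implies; set_max_iter is a hypothetical helper name.

```shell
# Sketch: set max_iter in a solver prototxt to the given number.
# Uses GNU sed to edit the file in place.
set_max_iter() {
    local file="$1"
    local iters="$2"
    sed -i -E "s/^([[:space:]]*max_iter:)[[:space:]]*[0-9]+/\1 ${iters}/" "$file"
}

# Example:
#   set_max_iter examples/mnist/lenet_solver.prototxt 50000
```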

Building the Python interface

Dependencies

Building the MATLAB interface

- Install MATLAB 2014

sh /usr/local/MATLAB/R2014a/bin/matlab

- In Makefile.config, set: MATLAB_DIR := /usr/local/MATLAB/R2014a

- sudo make matcaffe -j8

Miscellaneous

- vi editing commands: see a reference of commonly used vi editor commands.

- Installing the Sogou input method: see "Installing the Sogou input method on Ubuntu 14.04".

- Run im-config and switch from ibus to fcitx

- fcitx-config-gtk3

References

- Caffe learning series (1): installing and configuring ubuntu14.04+cuda7.5+caffe+cudnn

- Caffe + Ubuntu 15.04 + CUDA 7.5 beginner's installation and configuration guide

- ubuntu 14.04 install g++ problem "g++:Depends:g++-4.8(>= 4.8.2-5

- ippicv_linux_20141027.tgz

- http://stackoverflow.com/questions/25726768/opencv-3-0-trouble-with-installation
